diff --git a/README.md b/README.md

index 75d45cf6..ddb61a2a 100644

--- a/README.md

+++ b/README.md

@@ -397,3 +397,4 @@ Execute a pipeline over an iterable parameter.

[](https://mermaid.live/edit#pako:eNqVlF1rwjAUhv9KyG4qKNR-3AS2m8nuBgN3Z0Sy5tQG20SSdE7E_76kVVEr2CY3Ied9Tx6Sk3PAmeKACc5LtcsKpi36nlGZFbXciHwfLN79CuWiBLMcEULWGkBSaeosA2OCxbxdXMd89Get2bZASsLiSyuvQE2mJZXIjW27t2rOmQZ3Gp9rD6UjatWnwy7q6zPPukd50WTydmemEiS_QbQ79RwxGoQY9UaMuojRA8TCXexzyHgQZNwbMu5Cxl3IXNX6OWMyiDHpzZh0GZMHjOK3xz2mgxjT3oxplzG9MPp5_nVOhwJjteDwOg3HyFj3L1dCcvh7DUc-iftX18n6Waet1xX8cG908vpKHO6OW7cvkeHm5GR2b3drdvaSGTODHLW37mxabYC8fLgRhlfxpjNdwmEets-Dx7gCXTHBXQc8-D2KbQEVUEzckjO9oZjKo9Ox2qr5XmaYWF3DGNdbzizMBHOVVWGSs9K4XeDCKv3ZttSmsx7_AYa341E)

### [Arbitrary nesting](https://astrazeneca.github.io/magnus-core/concepts/nesting/)

+Any nesting of parallel within map and so on.

diff --git a/docs/concepts/catalog.md b/docs/concepts/catalog.md

index a7ae7c98..af02b1af 100644

--- a/docs/concepts/catalog.md

+++ b/docs/concepts/catalog.md

@@ -18,10 +18,11 @@ For example, a local directory structure partitioned by a ```run_id``` or S3 buc

The directory structure within a partition is the same as the project directory structure. This enables you to

get/put data in the catalog as if you are working with local directory structure. Every interaction with the catalog

-(either by API or configuration) results in an entry in the [```run log```](/concepts/run-log/#step_log)

+(either by API or configuration) results in an entry in the [```run log```](../concepts/run-log.md/#step_log)

Internally, magnus also uses the catalog to store execution logs of tasks i.e stdout and stderr from

-[python](/concepts/task/#python) or [shell](/concepts/task/#shell) and executed notebook from [notebook tasks](/concepts/task/#notebook).

+[python](../concepts/task.md/#python) or [shell](../concepts/task.md/#shell) and executed notebook

+from [notebook tasks](../concepts/task.md/#notebook).

Since the catalog captures the data files flowing through the pipeline and the execution logs, it enables you

to debug failed pipelines or keep track of data lineage.

@@ -448,11 +449,11 @@ The execution results in the ```catalog``` populated with the artifacts and the

## Using python API

-Files could also be cataloged using [python API](/interactions)

+Files could also be cataloged using [python API](../interactions.md)

-This functionality is possible in [python](/concepts/task/#python_functions)

-and [notebook](/concepts/task/#notebook) tasks.

+This functionality is possible in [python](../concepts/task.md/#python_functions)

+and [notebook](../concepts/task.md/#notebook) tasks.

```python linenums="1" hl_lines="11 23 35 45"

--8<-- "examples/concepts/catalog_api.py"

@@ -463,9 +464,10 @@ and [notebook](/concepts/task/#notebook) tasks.

## Passing Data Objects

-Data objects can be shared between [python](/concepts/task/#python_functions) or [notebook](/concepts/task/#notebook) tasks,

+Data objects can be shared between [python](../concepts/task.md/#python_functions) or

+[notebook](../concepts/task.md/#notebook) tasks,

instead of serializing data and deserializing to file structure, using

-[get_object](/interactions/#magnus.get_object) and [put_object](/interactions/#magnus.put_object).

+[get_object](../interactions.md/#magnus.get_object) and [put_object](../interactions.md/#magnus.put_object).

Internally, we use [pickle](https://docs.python.org/3/library/pickle.html) to serialize and

deserialize python objects. Please ensure that the object can be serialized via pickle.

diff --git a/docs/concepts/executor.md b/docs/concepts/executor.md

index 6e024d56..307f7fe0 100644

--- a/docs/concepts/executor.md

+++ b/docs/concepts/executor.md

@@ -1,6 +1,6 @@

Executors are the heart of magnus, they traverse the workflow and execute the tasks within the

workflow while coordinating with different services

-(eg. [run log](/concepts/run-log), [catalog](/concepts/catalog), [secrets](/concepts/secrets) etc)

+(eg. [run log](../concepts/run-log.md), [catalog](../concepts/catalog.md), [secrets](../concepts/secrets.md) etc)

To enable workflows run in varied computational environments, we distinguish between two core functions of

any workflow engine.

@@ -61,7 +61,7 @@ translated to argo specification just by changing the configuration.

In this configuration, we are using [argo workflows](https://argoproj.github.io/argo-workflows/)

as our workflow engine. We are also instructing the workflow engine to use a docker image,

```magnus:demo``` defined in line #4, as our execution environment. Please read

- [containerised environments](/configurations/executors/container-environments) for more information.

+ [containerised environments](../configurations/executors/container-environments.md) for more information.

Since magnus needs to track the execution status of the workflow, we are using a ```run log```

which is persistent and available for jobs in the kubernetes environment.

@@ -195,7 +195,7 @@ translated to argo specification just by changing the configuration.

```

-As seen from the above example, once a [pipeline is defined in magnus](/concepts/pipeline) either via yaml or SDK, we can

+As seen from the above example, once a [pipeline is defined in magnus](../concepts/pipeline.md) either via yaml or SDK, we can

run the pipeline in different environments just by providing a different configuration. Most often, there is

no need to change the code or deviate from standard best practices while coding.

@@ -287,22 +287,22 @@ def execute_single_node(workflow, step_name, configuration):

##### END POST EXECUTION #####

```

-1. The [run log](/concepts/run-log) maintains the state of the execution of the tasks and subsequently the pipeline. It also

+1. The [run log](../concepts/run-log.md) maintains the state of the execution of the tasks and subsequently the pipeline. It also

holds the latest state of parameters along with captured metrics.

-2. The [catalog](/concepts/catalog) contains the information about the data flowing through the pipeline. You can get/put

+2. The [catalog](../concepts/catalog.md) contains the information about the data flowing through the pipeline. You can get/put

artifacts generated during the current execution of the pipeline to a central storage.

-3. Read the workflow and get the [step definition](/concepts/task) which holds the ```command``` or ```function``` to

+3. Read the workflow and get the [step definition](../concepts/task.md) which holds the ```command``` or ```function``` to

execute along with the other optional information.

4. Any artifacts from previous steps that are needed to execute the current step can be

-[retrieved from the catalog](/concepts/catalog).

+[retrieved from the catalog](../concepts/catalog.md).

5. The current function or step might need only some of the

-[parameters casted as pydantic models](/concepts/task/#accessing_parameters), filter and cast them appropriately.

+[parameters casted as pydantic models](../concepts/task.md/#accessing_parameters), filter and cast them appropriately.

6. At this point in time, we have the required parameters and data to execute the actual command. The command can

-internally request for more data using the [python API](/interactions) or record

-[experiment tracking metrics](/concepts/experiment-tracking).

+internally request for more data using the [python API](../interactions.md) or record

+[experiment tracking metrics](../concepts/experiment-tracking.md).

7. If the task failed, we update the run log with that information and also raise an exception for the

-workflow engine to handle. Any [on-failure](/concepts/pipeline/#on_failure) traversals are already handled

+workflow engine to handle. Any [on-failure](../concepts/pipeline.md/#on_failure) traversals are already handled

as part of the workflow definition.

8. Upon successful execution, we update the run log with current state of parameters for downstream steps.

-9. Any artifacts generated from this step are [put into the central storage](/concepts/catalog) for downstream steps.

+9. Any artifacts generated from this step are [put into the central storage](../concepts/catalog.md) for downstream steps.

10. We send a success message to the workflow engine and mark the step as completed.

diff --git a/docs/concepts/experiment-tracking.md b/docs/concepts/experiment-tracking.md

index a3b4863f..4f72d125 100644

--- a/docs/concepts/experiment-tracking.md

+++ b/docs/concepts/experiment-tracking.md

@@ -1,6 +1,6 @@

# Overview

-[Run log](/concepts/run-log) stores a lot of information about the execution along with the metrics captured

+[Run log](../concepts/run-log.md) stores a lot of information about the execution along with the metrics captured

during the execution of the pipeline.

@@ -9,7 +9,7 @@ during the execution of the pipeline.

=== "Using the API"

- The highlighted lines in the below example show how to [use the API](/interactions/#magnus.track_this)

+ The highlighted lines in the below example show how to [use the API](../interactions.md/#magnus.track_this)

Any pydantic model as a value would be dumped as a dict, respecting the alias, before tracking it.

@@ -207,7 +207,7 @@ The step is defaulted to be 0.

=== "Using the API"

- The highlighted lines in the below example show how to [use the API](/interactions/#magnus.track_this) with

+ The highlighted lines in the below example show how to [use the API](../interactions.md/#magnus.track_this) with

the step parameter.

You can run this example by ```python run examples/concepts/experiment_tracking_step.py```

@@ -452,17 +452,17 @@ Since mlflow does not support step wise logging of parameters, the key name is f

=== "In mlflow UI"

- { width="800" height="600"}

+ { width="800" height="600"}

mlflow UI for the execution. The run_id remains the same as the run_id of magnus

- { width="800" height="600"}

+ { width="800" height="600"}

The step wise metric plotted as a graph in mlflow

To provide implementation specific capabilities, we also provide a

-[python API](/interactions/#magnus.get_experiment_tracker_context) to obtain the client context. The default

+[python API](../interactions.md/#magnus.get_experiment_tracker_context) to obtain the client context. The default

client context is a [null context manager](https://docs.python.org/3/library/contextlib.html#contextlib.nullcontext).

diff --git a/docs/concepts/map.md b/docs/concepts/map.md

index 0a20b111..7024bb49 100644

--- a/docs/concepts/map.md

+++ b/docs/concepts/map.md

@@ -829,7 +829,7 @@ of the files to process.

## Traversal

A branch of a map step is considered success only if the ```success``` step is reached at the end.

-The steps of the pipeline can fail and be handled by [on failure](/concepts/pipeline/#on_failure) and

+The steps of the pipeline can fail and be handled by [on failure](../concepts/pipeline.md/#on_failure) and

redirected to ```success``` if that is the desired behavior.

The map step is considered successful only if all the branches of the step have terminated successfully.

@@ -838,7 +838,7 @@ The map step is considered successful only if all the branches of the step have

## Parameters

All the tasks defined in the branches of the map pipeline can

-[access to parameters and data as usual](/concepts/task).

+[access to parameters and data as usual](../concepts/task.md).

!!! warning

diff --git a/docs/concepts/nesting.md b/docs/concepts/nesting.md

index cdd3874c..cfeedaea 100644

--- a/docs/concepts/nesting.md

+++ b/docs/concepts/nesting.md

@@ -1,4 +1,5 @@

-As seen from the definitions of [parallel](/concepts/parallel) or [map](/concepts/map), the branches are pipelines

+As seen from the definitions of [parallel](../concepts/parallel.md) or

+[map](../concepts/map.md), the branches are pipelines

themselves. This allows for deeply nested workflows in **magnus**.

Technically there is no limit in the depth of nesting but there are some practical considerations.

diff --git a/docs/concepts/parallel.md b/docs/concepts/parallel.md

index 4c1e4ea9..112b5ea1 100644

--- a/docs/concepts/parallel.md

+++ b/docs/concepts/parallel.md

@@ -7,7 +7,7 @@ Parallel nodes in magnus allows you to run multiple pipelines in parallel and us

All the steps in the below example are ```stubbed``` for convenience. The functionality is similar

even if the steps are execution units like ```tasks``` or any other nodes.

- We support deeply [nested steps](/concepts/nesting). For example, a step in the parallel branch can be a ```map``` which internally

+ We support deeply [nested steps](../concepts/nesting.md). For example, a step in the parallel branch can be a ```map``` which internally

loops over a ```dag``` and so on. Though this functionality is useful, it can be difficult to debug and

understand in large code bases.

@@ -549,7 +549,7 @@ ensemble model happens only after both models are (successfully) trained.

All pipelines, nested or parent, have the same structure as defined in

-[pipeline definition](/concepts/pipeline).

+[pipeline definition](../concepts/pipeline.md).

The parent pipeline defines a step ```Train models``` which is a parallel step.

The branches, XGBoost and RF model, are pipelines themselves.

@@ -557,7 +557,7 @@ The branches, XGBoost and RF model, are pipelines themselves.

## Traversal

A branch of a parallel step is considered success only if the ```success``` step is reached at the end.

-The steps of the pipeline can fail and be handled by [on failure](/concepts/pipeline/#on_failure) and

+The steps of the pipeline can fail and be handled by [on failure](../concepts/pipeline.md/#on_failure) and

redirected to ```success``` if that is the desired behavior.

The parallel step is considered successful only if all the branches of the step have terminated successfully.

@@ -566,7 +566,7 @@ The parallel step is considered successful only if all the branches of the step

## Parameters

All the tasks defined in the branches of the parallel pipeline can

-[access to parameters and data as usual](/concepts/task).

+[access to parameters and data as usual](../concepts/task.md).

!!! warning

diff --git a/docs/concepts/parameters.md b/docs/concepts/parameters.md

index d6967bc9..dbdc2ae0 100644

--- a/docs/concepts/parameters.md

+++ b/docs/concepts/parameters.md

@@ -1,16 +1,16 @@

In magnus, ```parameters``` are python data types that can be passed from one ```task```

to the next ```task```. These parameters can be accessed by the ```task``` either as

environment variables, arguments of the ```python function``` or using the

-[API](/interactions).

+[API](../interactions.md).

## Initial parameters

The initial parameters of the pipeline can be set by using a ```yaml``` file and presented

during execution

-```--parameters-file, -parameters``` while using the [magnus CLI](/usage/#usage)

+```--parameters-file, -parameters``` while using the [magnus CLI](../usage.md/#usage)

-or by using ```parameters_file``` with [the sdk](/sdk/#magnus.Pipeline.execute).

+or by using ```parameters_file``` with [the sdk](../sdk.md/#magnus.Pipeline.execute).

They can also be set using environment variables which override the parameters defined by the file.

@@ -42,5 +42,5 @@ They can also be set using environment variables which override the parameters d

## Parameters flow

Tasks can access and return parameters and the patterns are specific to the

-```command_type``` of the task nodes. Please refer to [tasks](/concepts/task)

+```command_type``` of the task nodes. Please refer to [tasks](../concepts/task.md)

for more information.

diff --git a/docs/concepts/run-log.md b/docs/concepts/run-log.md

index 3bb41405..8f677fb9 100644

--- a/docs/concepts/run-log.md

+++ b/docs/concepts/run-log.md

@@ -10,7 +10,7 @@ when running the ```command``` of a task.

=== "pipeline"

- This is the same example [described in tasks](/concepts/task/#shell).

+ This is the same example [described in tasks](../concepts/task.md/#shell).

tl;dr a pipeline that consumes some initial parameters and passes them

to the next step. Both the steps are ```shell``` based tasks.

@@ -389,7 +389,7 @@ A snippet from the above example:

- For non-nested steps, the key is the name of the step. For example, the first entry

in the steps mapping is "access initial" which corresponds to the name of the task in

the pipeline. For nested steps, the step log is also nested and shown in more detail for

- [parallel](/concepts/parallel), [map](/concepts/map).

+ [parallel](../concepts/parallel.md), [map](../concepts/map.md).

- ```status```: In line #5 is the status of the step with three possible states,

```SUCCESS```, ```PROCESSING``` or ```FAILED```

@@ -426,12 +426,12 @@ end time, duration of the execution and the parameters at the time of execution

}

```

-- ```user_defined_metrics```: are any [experiment tracking metrics](/concepts/task/#experiment_tracking)

+- ```user_defined_metrics```: are any [experiment tracking metrics](../concepts/task.md/#experiment_tracking)

captured during the execution of the step.

- ```branches```: This only applies to parallel, map or dag steps and shows the logs captured during the

execution of the branch.

-- ```data_catalog```: Captures any data flowing through the tasks by the [catalog](/concepts/catalog).

+- ```data_catalog```: Captures any data flowing through the tasks by the [catalog](../concepts/catalog.md).

By default, the execution logs of the task are put in the catalog for easier debugging purposes.

For example, the below lines from the snippet specifies one entry into the catalog which is the execution log

@@ -463,7 +463,7 @@ reproduced in local environments and fixed.

- non-nested, linear pipelines

- non-chunked run log store

- [mocked executor](/configurations/executors/mocked) provides better support in debugging failures.

+ [mocked executor](../configurations/executors/mocked.md) provides better support in debugging failures.

### Example

@@ -1237,10 +1237,10 @@ reproduced in local environments and fixed.

## API

Tasks can access the ```run log``` during the execution of the step

-[using the API](/interactions/#magnus.get_run_log). The run log returned by this method is a deep copy

+[using the API](../interactions.md/#magnus.get_run_log). The run log returned by this method is a deep copy

to prevent any modifications.

Tasks can also access the ```run_id``` of the current execution either by

-[using the API](/interactions/#magnus.get_run_id) or by the environment

+[using the API](../interactions.md/#magnus.get_run_id) or by the environment

variable ```MAGNUS_RUN_ID```.

diff --git a/docs/concepts/secrets.md b/docs/concepts/secrets.md

index 2a31d25e..b6ee7fb6 100644

--- a/docs/concepts/secrets.md

+++ b/docs/concepts/secrets.md

@@ -11,7 +11,7 @@ Most complex pipelines require secrets to hold sensitive information during task

They could be database credentials, API keys or any information that needs to be present at

the run-time but invisible at all other times.

-Magnus provides a [clean API](/interactions/#magnus.get_secret) to access secrets

+Magnus provides a [clean API](../interactions.md/#magnus.get_secret) to access secrets

and independent of the actual secret provider, the interface remains the same.

A typical example would be a task requiring the database connection string to connect

@@ -29,7 +29,7 @@ class CustomObject:

# Do something with the secrets

```

-Please refer to [configurations](/configurations/secrets) for available implementations.

+Please refer to [configurations](../configurations/secrets.md) for available implementations.

## Example

diff --git a/docs/concepts/task.md b/docs/concepts/task.md

index 6d198bd6..3cfcae84 100644

--- a/docs/concepts/task.md

+++ b/docs/concepts/task.md

@@ -79,8 +79,8 @@ is to execute this function.

- ```command``` : Should refer to the function in [dotted path notation](#python_functions).

- ```command_type```: Defaults to python and not needed for python task types.

-- [next](../pipeline/#linking): is required for any step of the pipeline except for success and fail steps.

-- [on_failure](../pipeline/#on_failure): Name of the step to execute if the step fails.

+- [next](pipeline.md/#linking): is required for any step of the pipeline except for success and fail steps.

+- [on_failure](pipeline.md/#on_failure): Name of the step to execute if the step fails.

- catalog: Optional required for data access patterns from/to the central storage.

@@ -111,7 +111,7 @@ is to execute this function.

-Please refer to [Initial Parameters](/concepts/parameters/#initial_parameters) for more information about setting

+Please refer to [Initial Parameters](parameters.md/#initial_parameters) for more information about setting

initial parameters.

Lets assume that the initial parameters are:

@@ -177,14 +177,14 @@ Lets assume that the initial parameters are:

=== "Using the API"

- Magnus also has [python API](/interactions) to access parameters.

+ Magnus also has [python API](../interactions.md) to access parameters.

- Use [get_parameter](/interactions/#magnus.get_parameter) to access a parameter at the root level.

+ Use [get_parameter](../interactions.md/#magnus.get_parameter) to access a parameter at the root level.

You can optionally specify the ```type``` by using ```cast_as``` argument to the API.

For example, line 19 would cast ```eggs```parameter into ```EggsModel```.

Native python types do not need any explicit ```cast_as``` argument.

- Use [set_parameter](/interactions/#magnus.set_parameter) to set parameters at the root level.

+ Use [set_parameter](../interactions.md/#magnus.set_parameter) to set parameters at the root level.

Multiple parameters can be set at the same time, for example, line 26 would set both the ```spam```

and ```eggs``` in a single call.

@@ -234,7 +234,7 @@ Lets assume that the initial parameters are:

### Passing data and execution logs

-Please refer to [catalog](/concepts/catalog) for more details and examples on passing

+Please refer to [catalog](../concepts/catalog.md) for more details and examples on passing

data between tasks and the storage of execution logs.

---

@@ -261,7 +261,7 @@ The output notebook is also saved in the ```catalog``` for logging and ease of d

- { width="800" height="600"}

+ { width="800" height="600"}

@@ -290,8 +290,8 @@ the current project are readily available.

- ```notebook_output_path```: the location of the executed notebook. Defaults to the

notebook name defined in ```command``` with ```_out``` post-fixed. The location should be relative

to the project root and also would be stored in catalog in the same location.

-- [next](/concepts/pipeline/#linking): is required for any step of the pipeline except for success and fail steps.

-- [on_failure](/concepts/pipeline/#on_failure): Name of the step to execute if the step fails.

+- [next](../concepts/pipeline.md/#linking): is required for any step of the pipeline except for success and fail steps.

+- [on_failure](../concepts/pipeline.md/#on_failure): Name of the step to execute if the step fails.

- catalog: Optional required for data access patterns from/to the central storage.

### ploomber arguments

@@ -315,7 +315,7 @@ You can set additional arguments or override these by sending an optional dictio

### Accessing parameters

-Please refer to [Initial Parameters](/concepts/parameters/#initial_parameters) for more information about setting

+Please refer to [Initial Parameters](parameters.md/#initial_parameters) for more information about setting

initial parameters.

Assume that the initial parameters are:

@@ -343,7 +343,7 @@ Assume that the initial parameters are:

=== "Notebook"

- { width="800" height="600"}

+ { width="800" height="600"}

@@ -359,7 +359,7 @@ Assume that the initial parameters are:

For example, the initial parameters will be passed to the notebook as shown below.

- { width="800" height="600"}

+ { width="800" height="600"}

@@ -378,7 +378,7 @@ Assume that the initial parameters are:

- { width="800" height="600"}

+ { width="800" height="600"}

@@ -405,7 +405,7 @@ Assume that the initial parameters are:

- { width="800" height="600"}

+ { width="800" height="600"}

@@ -441,7 +441,7 @@ Assume that the initial parameters are:

### Passing data and execution logs

-Please refer to [catalog](/concepts/catalog) for more details and examples on passing

+Please refer to [catalog](catalog.md) for more details and examples on passing

data between tasks and the storage of execution logs.

@@ -465,14 +465,14 @@ to execute the command.

- ```command``` : Should refer to the exact command to execute. Multiple commands can be run by using the ```&&``` delimiter.

- ```command_type```: Should be shell.

-- [next](../pipeline/#linking): is required for any step of the pipeline except for success and fail steps.

-- [on_failure](../pipeline/#on_failure): Name of the step to execute if the step fails.

+- [next](pipeline.md/#linking): is required for any step of the pipeline except for success and fail steps.

+- [on_failure](pipeline.md/#on_failure): Name of the step to execute if the step fails.

- catalog: Optional required for data access patterns from/to the central storage.

### Accessing parameters

-Please refer to [Initial Parameters](/concepts/parameters/#initial_parameters) for more information about setting

+Please refer to [Initial Parameters](parameters.md/#initial_parameters) for more information about setting

initial parameters.

Assuming the initial parameters are:

@@ -513,10 +513,10 @@ lines 33-35.

### Passing data and execution logs

-Please refer to [catalog](/concepts/catalog) for more details and examples on passing

+Please refer to [catalog](catalog.md) for more details and examples on passing

data between tasks and the storage of execution logs.

## Experiment tracking

-Please refer to [experiment tracking](/concepts/experiment-tracking) for more details and examples on experiment tracking.

+Please refer to [experiment tracking](experiment-tracking.md) for more details and examples on experiment tracking.

diff --git a/docs/concepts/the-big-picture.md b/docs/concepts/the-big-picture.md

index 62bd9208..60a6eb2f 100644

--- a/docs/concepts/the-big-picture.md

+++ b/docs/concepts/the-big-picture.md

@@ -2,7 +2,7 @@ Magnus revolves around the concept of pipelines or workflows and tasks that happ

---

-A [workflow](/concepts/pipeline) is simply a series of steps that you want to execute for a desired outcome.

+A [workflow](pipeline.md) is simply a series of steps that you want to execute for a desired outcome.

``` mermaid

%%{ init: { 'flowchart': { 'curve': 'linear' } } }%%

@@ -20,16 +20,16 @@ flowchart LR

To define a workflow, we need:

-- [List of steps](/concepts/pipeline/#steps)

-- [starting step](/concepts/pipeline/#start_at)

+- [List of steps](pipeline.md/#steps)

+- [starting step](pipeline.md/#start_at)

- Next step

- - [In case of success](/concepts/pipeline/#linking)

- - [In case of failure](/concepts/pipeline/#on_failure)

+ - [In case of success](pipeline.md/#linking)

+ - [In case of failure](pipeline.md/#on_failure)

-- [Terminating](/concepts/pipeline/#terminating)

+- [Terminating](pipeline.md/#terminating)

-The workflow can be defined either in ```yaml``` or using the [```python sdk```](/sdk).

+The workflow can be defined either in ```yaml``` or using the [```python sdk```](../sdk.md).

---

@@ -40,18 +40,18 @@ A step in the workflow can be:

A step in the workflow that does a logical unit work.

- The unit of work can be a [python function](/concepts/task/#python_functions),

- a [shell script](/concepts/task/#shell) or a

- [notebook](/concepts/task/#notebook).

+ The unit of work can be a [python function](task.md/#python_functions),

+ a [shell script](task.md/#shell) or a

+ [notebook](task.md/#notebook).

All the logs, i.e stderr and stdout or executed notebooks are stored

- in [catalog](/concepts/catalog) for easier access and debugging.

+ in [catalog](catalog.md) for easier access and debugging.

=== "stub"

- An [abstract step](/concepts/stub) that is not yet fully implemented.

+ An [abstract step](stub.md) that is not yet fully implemented.

For example in python:

@@ -63,7 +63,7 @@ A step in the workflow can be:

=== "parallel"

- A step that has a defined number of [parallel workflows](/concepts/parallel) executing

+ A step that has a defined number of [parallel workflows](parallel.md) executing

simultaneously.

In the below visualisation, the green lined steps happen in sequence and wait for the previous step to

@@ -108,7 +108,7 @@ A step in the workflow can be:

=== "map"

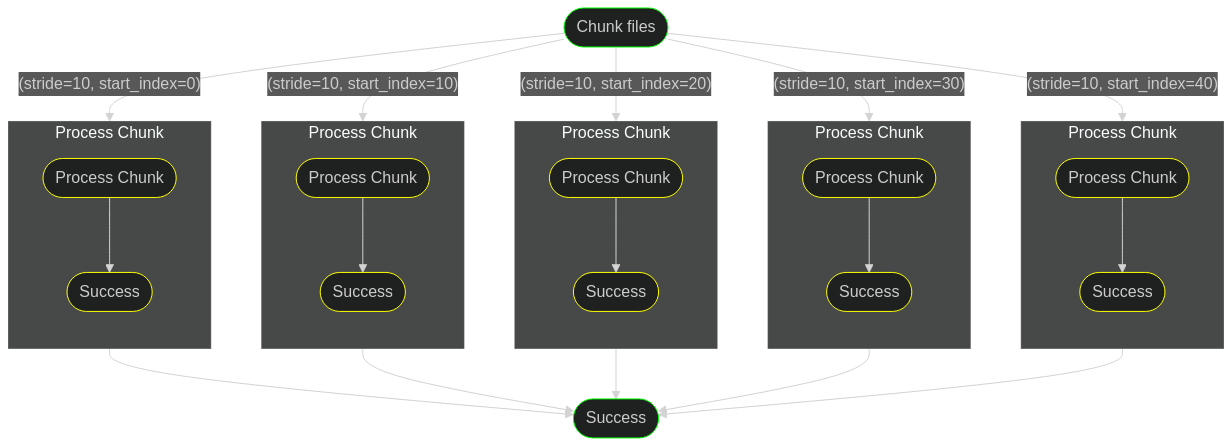

- A step that executes a workflow over an [iterable parameter](/concepts/map).

+ A step that executes a workflow over an [iterable parameter](map.md).

The step "chunk files" identifies the number of files to process and computes the start index of every

batch of files to process for a chunk size of 10, the stride.

@@ -172,19 +172,19 @@ A step in the workflow can be:

---

-A [step type of task](/concepts/task) is the functional unit of the pipeline.

+A [step type of task](task.md) is the functional unit of the pipeline.

To be useful, it can:

- Access parameters

- - Either [defined statically](/concepts/parameters/#initial_parameters) at the start of the

+ - Either [defined statically](parameters.md/#initial_parameters) at the start of the

pipeline

- - Or by [upstream steps](/concepts/parameters/#parameters_flow)

+ - Or by [upstream steps](parameters.md/#parameters_flow)

-- [Publish or retrieve artifacts](/concepts/catalog) from/to other steps.

-- [Publish metrics](/concepts/experiment-tracking) that are interesting.

-- Have [access to secrets](/concepts/secrets).

+- [Publish or retrieve artifacts](catalog.md) from/to other steps.

+- [Publish metrics](experiment-tracking.md) that are interesting.

+- Have [access to secrets](secrets.md).

All the above functionality is possible either via:

@@ -193,15 +193,15 @@ All the above functionality is possible either via:

- Application native way.

- Or via environment variables.

-- Or via the [python API](/interactions) which involves ```importing magnus``` in your code.

+- Or via the [python API](../interactions.md) which involves ```importing magnus``` in your code.

---

All executions of the pipeline should be:

-- [Reproducible](/concepts/run-log) for audit and data lineage purposes.

+- [Reproducible](run-log.md) for audit and data lineage purposes.

- Runnable at local environments for

-[debugging failed runs](/concepts/run-log/#retrying_failures).

+[debugging failed runs](run-log.md/#retrying_failures).

---

@@ -212,7 +212,7 @@ Pipelines should be portable between different infrastructure patterns.

Infrastructure patterns change all the time and

so are the demands from the infrastructure.

-We achieve this by [changing configurations](/configurations/overview), rather than

+We achieve this by [changing configurations](../configurations/overview.md), rather than

changing the application code.

For example a pipeline should be able to run:

diff --git a/docs/configurations/catalog.md b/docs/configurations/catalog.md

index a12d5609..2d20191d 100644

--- a/docs/configurations/catalog.md

+++ b/docs/configurations/catalog.md

@@ -1,5 +1,5 @@

Catalog provides a way to store and retrieve data generated by the individual steps of the dag to downstream

-steps of the dag. Please refer to [concepts](/concepts/catalog) for more detailed information.

+steps of the dag. Please refer to [concepts](../concepts/catalog.md) for more detailed information.

## do-nothing

diff --git a/docs/configurations/executors/argo.md b/docs/configurations/executors/argo.md

index bb732e31..4898eff8 100644

--- a/docs/configurations/executors/argo.md

+++ b/docs/configurations/executors/argo.md

@@ -16,7 +16,7 @@ to get inputs from infrastructure teams or ML engineers in defining the configur

## Configuration

Only ```image``` is the required parameter. Please refer to the

-[note on containers](/configurations/executors/container-environments) on building images.

+[note on containers](container-environments.md) on building images.

```yaml linenums="1"

@@ -333,7 +333,7 @@ as inputs to the workflow. This allows for changing the parameters at runtime.

=== "Run Submission"

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI exposing the parameters

@@ -411,7 +411,7 @@ The parallelism constraint [only applies to the step](https://github.com/argopro

=== "Pipeline"

- This example is the same as [detailed in map](/concepts/map).

+ This example is the same as [detailed in map](../../concepts/map.md).

```yaml linenums="1" hl_lines="22-23 25-36"

--8<-- "examples/concepts/map.yaml"

@@ -423,7 +423,7 @@ The parallelism constraint [only applies to the step](https://github.com/argopro

tasks execute simultaneously.

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI exposing the parameters

@@ -442,7 +442,7 @@ The parallelism constraint [only applies to the step](https://github.com/argopro

=== "Pipeline"

- The pipeline defined here is nearly the same as [detailed in map](/concepts/map) with the

+ The pipeline defined here is nearly the same as [detailed in map](../../concepts/map.md) with the

only exception in lines 25-26 which use the ```sequential``` override.

```yaml linenums="1" hl_lines="22-23 25-36"

@@ -456,7 +456,7 @@ The parallelism constraint [only applies to the step](https://github.com/argopro

instead of parallel as seen in the default.

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI exposing the parameters

@@ -538,7 +538,7 @@ code versioning tools. We recommend using ```secrets_from_k8s``` in the configur

Assumed to be present at ```examples/configs/argo-config.yaml```

- The docker image is a [variable](/configurations/executors/container-environments/#dynamic_name_of_the_image) and

+ The docker image is a [variable](container-environments.md/#dynamic_name_of_the_image) and

dynamically set during execution.

```yaml linenums="1" hl_lines="4"

@@ -547,7 +547,7 @@ code versioning tools. We recommend using ```secrets_from_k8s``` in the configur

1. Use ```argo``` executor type to execute the pipeline.

2. By default, all the tasks are executed in the docker image . Please

- refer to [building docker images](/configurations/executors/container-environments/)

+ refer to [building docker images](container-environments.md)

3. Mount the persistent volume ```magnus-volume``` to all the containers as ```/mnt```.

4. Store the run logs in the file-system. As all containers have access to ```magnus-volume```

as ```/mnt```. We use that to mounted folder as run log store.

@@ -556,7 +556,7 @@ code versioning tools. We recommend using ```secrets_from_k8s``` in the configur

=== "python SDK"

Running the SDK defined pipelines for any container based executions [happens in

- multi-stage process](/configurations/executors/container-environments/).

+ multi-stage process](container-environments.md).

1. Generate the ```yaml``` definition file by:

```MAGNUS_CONFIGURATION_FILE=examples/configs/argo-config.yaml python examples/concepts/simple.py```

@@ -570,7 +570,7 @@ code versioning tools. We recommend using ```secrets_from_k8s``` in the configur

```

1. You can provide a configuration file dynamically by using the environment

- variable ```MAGNUS_CONFIGURATION_FILE```. Please see [SDK for more details](../../sdk).

+ variable ```MAGNUS_CONFIGURATION_FILE```. Please see [SDK for more details](../../sdk.md).

=== "yaml"

@@ -594,12 +594,12 @@ code versioning tools. We recommend using ```secrets_from_k8s``` in the configur

=== "Screenshots"

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI showing the pipeline

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI showing the logs

@@ -788,7 +788,7 @@ Magnus compiled argo workflows support deeply nested workflows.

=== "Nested workflow"

- This is the same example as shown in [nested](/concepts/nesting).

+ This is the same example as shown in [nested](../../concepts/nesting.md).

```yaml linenums="1"

--8<-- "examples/concepts/nesting.yaml"

@@ -799,7 +799,7 @@ Magnus compiled argo workflows support deeply nested workflows.

Assumed to be present at ```examples/configs/argo-config.yaml```

- The docker image is a [variable](/configurations/executors/container-environments/) and

+ The docker image is a [variable](container-environments.md) and

dynamically set during execution.

```yaml linenums="1" hl_lines="4"

@@ -808,7 +808,7 @@ Magnus compiled argo workflows support deeply nested workflows.

1. Use ```argo``` executor type to execute the pipeline.

2. By default, all the tasks are executed in the docker image . Please

- refer to [building docker images](#container_environments)

+ refer to [building docker images](container-environments.md)

3. Mount the persistent volume ```magnus-volume``` to all the containers as ```/mnt```.

4. Store the run logs in the file-system. As all containers have access to ```magnus-volume```

as ```/mnt```. We use that to mounted folder as run log store.

@@ -1628,7 +1628,7 @@ Magnus compiled argo workflows support deeply nested workflows.

=== "In argo UI"

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI showing the deeply nested workflows.

@@ -1636,15 +1636,15 @@ Magnus compiled argo workflows support deeply nested workflows.

## Kubeflow

Kubeflow pipelines compiles workflows defined in SDK to Argo workflows and thereby

-has support for uploading argo workflows. Below is a screenshot of the [map](/concepts/map) pipeline uploaded to Kubeflow.

+has support for uploading argo workflows. Below is a screenshot of the [map](../../concepts/map.md) pipeline uploaded to Kubeflow.

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI showing the map workflow definition.

- { width="800" height="600"}

+ { width="800" height="600"}

argo workflows UI showing the map workflow execution.

diff --git a/docs/configurations/executors/container-environments.md b/docs/configurations/executors/container-environments.md

index 32d18f9b..2bb965fe 100644

--- a/docs/configurations/executors/container-environments.md

+++ b/docs/configurations/executors/container-environments.md

@@ -1,11 +1,11 @@

## Pipeline definition

Executing pipelines in containers needs a ```yaml``` based definition of the pipeline which is

-referred during the [task execution](/concepts/executor/#step_execution).

+referred during the [task execution](../../concepts/executor.md/#step_execution).

-Any execution of the pipeline [defined by SDK](/sdk) generates the pipeline

-definition in```yaml``` format for all executors apart from the [```local``` executor](../local).

+Any execution of the pipeline [defined by SDK](../../sdk.md) generates the pipeline

+definition in ```yaml``` format for all executors apart from the [```local``` executor](local.md).

Follow the below steps to execute the pipeline defined by SDK.

@@ -20,7 +20,7 @@ Follow the below steps to execute the pipeline defined by SDK.

tagging the docker image with the short git sha to uniquely identify the docker image (1).

3. Define a [variable to temporarily hold](https://docs.python.org/3/library/string.html#template-strings) the docker image name in the

pipeline definition, if the docker image name is not known.

-4. Execute the pipeline using the [magnus CLI](/usage/#usage).

+4. Execute the pipeline using the [magnus CLI](../../usage.md/#usage).

diff --git a/docs/configurations/executors/local-container.md b/docs/configurations/executors/local-container.md

index b40ccaf5..94819c3d 100644

--- a/docs/configurations/executors/local-container.md

+++ b/docs/configurations/executors/local-container.md

@@ -1,6 +1,6 @@

Execute all the steps of the pipeline in containers. Please refer to the

-[note on containers](/configurations/executors/container-environments/) on building images.

+[note on containers](container-environments.md) on building images.

- [x] Provides a way to test the containers and the execution of the pipeline in local environment.

- [x] Any failure in cloud native container environments can be replicated in local environments.

@@ -33,14 +33,14 @@ config:

```

1. By default, all tasks are sequentially executed. Provide ```true``` to enable tasks within

-[parallel](/concepts/parallel) or [map](/concepts/map) to be executed in parallel.

+[parallel](../../concepts/parallel.md) or [map](../../concepts/map.md) to be executed in parallel.

2. Set it to false, to debug a failed container.

-3. Setting it to true will behave exactly like a [local executor](/configurations/executors/local/).

+3. Setting it to true will behave exactly like a [local executor](local.md).

4. Pass any environment variables into the container.

5. Please refer to [step overrides](#step_override) for more details.

The ```docker_image``` field is required and default image to execute tasks

-of the pipeline. Individual [tasks](/concepts/task) can

+of the pipeline. Individual [tasks](../../concepts/task.md) can

[override](#step_override) the global defaults of executor by providing ```overrides```

@@ -63,7 +63,7 @@ the patterns.

Assumed to be present at ```examples/configs/local-container.yaml```

- The docker image is a [variable](/configurations/executors/container-environments/#dynamic_name_of_the_image) and

+ The docker image is a [variable](container-environments.md/#dynamic_name_of_the_image) and

dynamically set during execution.

```yaml linenums="1" hl_lines="4"

@@ -72,7 +72,7 @@ the patterns.

1. Use local-container executor type to execute the pipeline.

2. By default, all the tasks are executed in the docker image . Please

- refer to [building docker images](/configurations/executors/container-environments/#dynamic_name_of_the_image)

+ refer to [building docker images](container-environments.md/#dynamic_name_of_the_image)

3. Pass any environment variables that are needed for the container.

4. Store the run logs in the file-system. Magnus will handle the access to them

by mounting the file system into the container.

@@ -81,7 +81,7 @@ the patterns.

=== "python sdk"

Running the SDK defined pipelines for any container based executions [happens in

- multi-stage process](/configurations/executors/container-environments/).

+ multi-stage process](container-environments.md).

1. Generate the ```yaml``` definition file by:

```MAGNUS_CONFIGURATION_FILE=examples/configs/local-container.yaml python examples/concepts/simple.py```

@@ -95,7 +95,7 @@ the patterns.

```

1. You can provide a configuration file dynamically by using the environment

- variable ```MAGNUS_CONFIGURATION_FILE```. Please see [SDK for more details](sdk).

+ variable ```MAGNUS_CONFIGURATION_FILE```. Please see [SDK for more details](../../sdk.md).

@@ -302,7 +302,7 @@ executor.

As seen in the above example,

running the SDK defined pipelines for any container based executions [happens in

- multi-stage process](/configurations/executors/container-environments/).

+ multi-stage process](container-environments.md).

1. Generate the ```yaml``` definition file by:

```MAGNUS_CONFIGURATION_FILE=examples/executors/local-container-override.yaml python examples/executors/step_overrides_container.py```

diff --git a/docs/configurations/executors/local.md b/docs/configurations/executors/local.md

index f1299126..01252a50 100644

--- a/docs/configurations/executors/local.md

+++ b/docs/configurations/executors/local.md

@@ -24,7 +24,7 @@ config:

```

1. By default, all tasks are sequentially executed. Provide ```true``` to enable tasks within

-[parallel](/concepts/parallel) or [map](/concepts/map) to be executed in parallel.

+[parallel](../../concepts/parallel.md) or [map](../../concepts/map.md) to be executed in parallel.

diff --git a/docs/configurations/executors/mocked.md b/docs/configurations/executors/mocked.md

index 84d26f83..79070547 100644

--- a/docs/configurations/executors/mocked.md

+++ b/docs/configurations/executors/mocked.md

@@ -23,7 +23,8 @@ to run and the configuration of the command.

#### Command configuration for notebook nodes

```python``` and ```shell``` based tasks have no configuration options apart from the ```command```.

-Notebook nodes have additional configuration options [detailed in concepts](/concepts/task/#notebook). Ploomber engine provides [rich options](https://engine.ploomber.io/en/docs/user-guide/debugging/debuglater.html) in debugging failed notebooks.

+Notebook nodes have additional configuration options [detailed in concepts](../../concepts/task.md/#notebook).

+Ploomber engine provides [rich options](https://engine.ploomber.io/en/docs/user-guide/debugging/debuglater.html) in debugging failed notebooks.

## Example

@@ -211,7 +212,7 @@ take an example pipeline to test the behavior of the traversal.

The below pipeline is designed to follow: ```step 1 >> step 2 >> step 3``` in case of no failures

and ```step 1 >> step3``` in case of failure. The traversal is

-[shown in concepts](/concepts/pipeline/#on_failure).

+[shown in concepts](../../concepts/pipeline.md/#on_failure).

!!! tip "Asserting Run log"

diff --git a/docs/configurations/overview.md b/docs/configurations/overview.md

index 892f8c31..29f1acdc 100644

--- a/docs/configurations/overview.md

+++ b/docs/configurations/overview.md

@@ -1,15 +1,15 @@

**Magnus** is designed to make effective collaborations between data scientists/researchers

and infrastructure engineers.

-All the features described in the [concepts](/concepts/the-big-picture) are

+All the features described in the [concepts](../concepts/the-big-picture.md) are

aimed at the *research* side of data science projects while configurations add *scaling* features to them.

Configurations are presented during the execution:

-For ```yaml``` based pipeline, use the ```--config-file, -c``` option in the [magnus CLI](/usage/#usage).

+For ```yaml``` based pipeline, use the ```--config-file, -c``` option in the [magnus CLI](../usage.md/#usage).

-For [python SDK](/sdk/#magnus.Pipeline.execute), use the ```configuration_file``` option or via

+For [python SDK](../sdk.md/#magnus.Pipeline.execute), use the ```configuration_file``` option or via

environment variable ```MAGNUS_CONFIGURATION_FILE```

## Default configuration

@@ -26,11 +26,11 @@ environment variable ```MAGNUS_CONFIGURATION_FILE```

Run log still captures the metrics, but are not passed to the experiment tracking tools.

The default configuration for all the pipeline executions runs on the

-[local compute](/configurations/executors/local), using a

-[buffered run log](/configurations/run-log/#buffered) store with

-[no catalog](/configurations/catalog/#do-nothing) or

-[secrets](/configurations/secrets/#do-nothing) or

-[experiment tracking functionality](/configurations/experiment-tracking/).

+[local compute](executors/local.md), using a

+[buffered run log](run-log.md/#buffered) store with

+[no catalog](catalog.md/#do-nothing) or

+[secrets](secrets.md/#do-nothing) or

+[experiment tracking functionality](experiment-tracking.md).

diff --git a/docs/configurations/run-log.md b/docs/configurations/run-log.md

index 9a728a10..118ab11c 100644

--- a/docs/configurations/run-log.md

+++ b/docs/configurations/run-log.md

@@ -2,7 +2,7 @@ Along with tracking the progress and status of the execution of the pipeline, ru

also keeps a track of parameters, experiment tracking metrics, data flowing through

the pipeline and any reproducibility metrics emitted by the tasks of the pipeline.

-Please refer here for detailed [information about run log](/concepts/run-log).

+Please refer here for detailed [information about run log](../concepts/run-log.md).

## buffered

@@ -74,7 +74,7 @@ run_log_store:

=== "Run log"

- The structure of the run log is [detailed in concepts](/concepts/run-log).

+ The structure of the run log is [detailed in concepts](../concepts/run-log.md).

```json linenums="1"

{

@@ -276,7 +276,7 @@ run_log_store:

=== "Run log"

- The structure of the run log is [detailed in concepts](/concepts/run-log).

+ The structure of the run log is [detailed in concepts](../concepts/run-log.md).

=== "RunLog.json"

diff --git a/docs/configurations/secrets.md b/docs/configurations/secrets.md

index d767ab34..edba9a11 100644

--- a/docs/configurations/secrets.md

+++ b/docs/configurations/secrets.md

@@ -1,7 +1,7 @@

**Magnus** provides an interface to secrets managers

-[via the API](/interactions/#magnus.get_secret).

+[via the API](../interactions.md/#magnus.get_secret).

-Please refer to [Secrets in concepts](/concepts/secrets) for more information.

+Please refer to [Secrets in concepts](../concepts/secrets.md) for more information.

## do-nothing

diff --git a/docs/example/dataflow.md b/docs/example/dataflow.md

index 039620c5..37a9177f 100644

--- a/docs/example/dataflow.md

+++ b/docs/example/dataflow.md

@@ -13,12 +13,12 @@ using catalog. This can be controlled either by the configuration or by python A

## Flow of Parameters

-The [initial parameters](/concepts/parameters) of the pipeline can set by using a ```yaml``` file and presented

+The [initial parameters](../concepts/parameters.md) of the pipeline can be set by using a ```yaml``` file and presented

during execution

-```--parameters-file, -parameters``` while using the [magnus CLI](/usage/#usage)

+```--parameters-file, -parameters``` while using the [magnus CLI](../usage.md/#usage)

-or by using ```parameters_file``` with [the sdk](/sdk/#magnus.Pipeline.execute).

+or by using ```parameters_file``` with [the sdk](../sdk.md/#magnus.Pipeline.execute).

=== "Initial Parameters"

@@ -121,7 +121,7 @@ or by using ```parameters_file``` with [the sdk](/sdk/#magnus.Pipeline.execute).

**Magnus** stores all the artifacts/files/logs generated by ```task``` nodes in a central storage called

-[catalog](/concepts/catalog).

+[catalog](../concepts/catalog.md).

The catalog is indexed by the ```run_id``` of the pipeline and is unique for every execution of the pipeline.

Any ```task``` of the pipeline can interact with the ```catalog``` to get and put artifacts/files

diff --git a/docs/example/example.md b/docs/example/example.md

index ca52d9c1..d96bde7c 100644

--- a/docs/example/example.md

+++ b/docs/example/example.md

@@ -1,6 +1,6 @@

-Magnus revolves around the concept of [pipelines or workflows](/concepts/pipeline).

+Magnus revolves around the concept of [pipelines or workflows](../concepts/pipeline.md).

Pipelines defined in magnus are translated into

other workflow engine definitions like [Argo workflows](https://argoproj.github.io/workflows/) or

[AWS step functions](https://aws.amazon.com/step-functions/).

@@ -60,7 +60,7 @@ This pipeline can be represented in **magnus** as below:

=== "Run log"

- Please see [Run log](/concepts/run-log) for more detailed information about the structure.

+ Please see [Run log](../concepts/run-log.md) for more detailed information about the structure.

```json linenums="1"

{

@@ -331,10 +331,10 @@ This pipeline can be represented in **magnus** as below:

Independent of the platform it is run on,

-- [x] The [pipeline definition](/concepts/pipeline) remains the same from an author point of view.

+- [x] The [pipeline definition](../concepts/pipeline.md) remains the same from an author point of view.

The data scientists are always part of the process and contribute to the development even in production environments.

-- [x] The [run log](/concepts/run-log) remains the same except for the execution configuration enabling users

+- [x] The [run log](../concepts/run-log.md) remains the same except for the execution configuration enabling users

to debug the pipeline execution in lower environments for failed executions or to validate the

expectation of the execution.

@@ -344,7 +344,7 @@ expectation of the execution.

## Example configuration

To run the pipeline in different environments, we just provide the

-[required configuration](/configurations/overview).

+[required configuration](../configurations/overview.md).

=== "Default Configuration"

@@ -360,7 +360,7 @@ To run the pipeline in different environments, we just provide the

=== "Argo Configuration"

- To render the pipeline in [argo specification](/configurations/executors/argo/), mention the

+ To render the pipeline in [argo specification](../configurations/executors/argo.md), mention the

configuration during execution.

yaml:

@@ -370,7 +370,7 @@ To run the pipeline in different environments, we just provide the

python:

- Please refer to [containerised environments](/configurations/executors/container-environments/) for more information.

+ Please refer to [containerised environments](../configurations/executors/container-environments.md) for more information.

MAGNUS_CONFIGURATION_FILE=examples/configs/argo-config.yaml python examples/contrived.py && magnus execute -f magnus-pipeline.yaml -c examples/configs/argo-config.yaml

@@ -380,7 +380,7 @@ To run the pipeline in different environments, we just provide the

1. Use argo workflows as the execution engine to run the pipeline.

2. Run this docker image for every step of the pipeline. Please refer to

- [containerised environments](/configurations/executors/container-environments/) for more details.

+ [containerised environments](../configurations/executors/container-environments.md) for more details.

3. Mount the volume from Kubernetes persistent volumes (magnus-volume) to /mnt directory.

4. Resource constraints for the container runtime.

5. Since every step runs in a container, the run log should be persisted. Here we are using the file-system as our

diff --git a/docs/example/experiment-tracking.md b/docs/example/experiment-tracking.md

index e63e04ca..03109a5c 100644

--- a/docs/example/experiment-tracking.md

+++ b/docs/example/experiment-tracking.md

@@ -1,8 +1,8 @@

Metrics in data science projects summarize important information about the execution and performance of the

experiment.

-Magnus captures [this information as part of the run log](/concepts/experiment-tracking) and also provides

-an [interface to experiment tracking tools](/concepts/experiment-tracking/#experiment_tracking_tools)

+Magnus captures [this information as part of the run log](../concepts/experiment-tracking.md) and also provides

+an [interface to experiment tracking tools](../concepts/experiment-tracking.md/#experiment_tracking_tools)

like [mlflow](https://mlflow.org/docs/latest/tracking.html) or

[Weights and Biases](https://wandb.ai/site/experiment-tracking).

@@ -197,6 +197,6 @@ like [mlflow](https://mlflow.org/docs/latest/tracking.html) or

The metrics are also sent to mlflow.

- { width="800" height="600"}

+ { width="800" height="600"}

mlflow UI for the execution. The run_id remains the same as the run_id of magnus

diff --git a/docs/example/reproducibility.md b/docs/example/reproducibility.md

index 47ef34cb..8b31e6fa 100644

--- a/docs/example/reproducibility.md

+++ b/docs/example/reproducibility.md

@@ -1,4 +1,4 @@

-Magnus stores a variety of information about the current execution in [run log](/concepts/run-log).

+Magnus stores a variety of information about the current execution in [run log](../concepts/run-log.md).

The run log is internally used

for keeping track of the execution (status of different steps, parameters, etc) but also has rich information

for reproducing the state at the time of pipeline execution.

@@ -227,5 +227,5 @@ Below we show an example pipeline and the different layers of the run log.

-This [structure of the run log](/concepts/run-log) is the same independent of where the pipeline was executed.

+This [structure of the run log](../concepts/run-log.md) is the same independent of where the pipeline was executed.

This enables you to reproduce a failed execution in complex environments on local environments for easier debugging.

diff --git a/docs/example/retry-after-failure.md b/docs/example/retry-after-failure.md

index 69060f29..1d3c5441 100644

--- a/docs/example/retry-after-failure.md

+++ b/docs/example/retry-after-failure.md

@@ -1,4 +1,4 @@

-Magnus allows you to [debug and recover](/concepts/run-log/#retrying_failures) from a

+Magnus allows you to [debug and recover](../concepts/run-log.md/#retrying_failures) from a

failure during the execution of pipeline. The pipeline can be

restarted in any suitable environment for debugging.

@@ -585,7 +585,7 @@ Below is an example of retrying a pipeline that failed.

```

-Magnus also supports [```mocked``` executor](/configurations/executors/mocked) which can

+Magnus also supports [```mocked``` executor](../configurations/executors/mocked.md) which can

patch and mock tasks to isolate and focus on the failed task. Since python functions and notebooks

are run in the same shell, it is possible to use

[python debugger](https://docs.python.org/3/library/pdb.html) and

diff --git a/docs/example/secrets.md b/docs/example/secrets.md

index 6ecdea06..7edb3c91 100644

--- a/docs/example/secrets.md

+++ b/docs/example/secrets.md

@@ -1,5 +1,5 @@

Secrets are required assets as the complexity of the application increases. Magnus provides a

-[python API](/interactions/#magnus.get_secret) to get secrets from various sources.

+[python API](../interactions.md/#magnus.get_secret) to get secrets from various sources.

!!! info annotate inline end "from magnus import get_secret"

diff --git a/docs/example/steps.md b/docs/example/steps.md

index 9b2360c3..873d10ff 100644

--- a/docs/example/steps.md

+++ b/docs/example/steps.md

@@ -2,10 +2,10 @@ Magnus provides a rich definition of of step types.

-- [stub](/concepts/stub): A mock step which is handy during designing and debugging pipelines.

-- [task](/concepts/task): To execute python functions, jupyter notebooks, shell scripts.

-- [parallel](/concepts/parallel): To execute many tasks in parallel.

-- [map](/concepts/map): To execute the same task over a list of parameters. (1)

+- [stub](../concepts/stub.md): A mock step which is handy during designing and debugging pipelines.

+- [task](../concepts/task.md): To execute python functions, jupyter notebooks, shell scripts.

+- [parallel](../concepts/parallel.md): To execute many tasks in parallel.

+- [map](../concepts/map.md): To execute the same task over a list of parameters. (1)

@@ -40,12 +40,12 @@ Used as a mock node or a placeholder before the actual implementation (1).

## task

-Used to execute a single unit of work. You can use [python](/concepts/task/#python_functions),

-[shell](/concepts/task/#shell), [notebook](/concepts/task/#notebook) as command types.

+Used to execute a single unit of work. You can use [python](../concepts/task.md/#python_functions),

+[shell](../concepts/task.md/#shell), [notebook](../concepts/task.md/#notebook) as command types.

!!! note annotate "Execution logs"

- You can view the execution logs of the tasks in the [catalog](/concepts/catalog) without digging through the

+ You can view the execution logs of the tasks in the [catalog](../concepts/catalog.md) without digging through the

logs from the underlying executor.

@@ -63,7 +63,7 @@ Used to execute a single unit of work. You can use [python](/concepts/task/#pyth

--8<-- "examples/python-tasks.yaml"

```

- 1. Note that the ```command``` is the [path to the python function](/concepts/task/#python_functions).

+ 1. Note that the ```command``` is the [path to the python function](../concepts/task.md/#python_functions).

2. ```python``` is default command type, you can use ```shell```, ```notebook``` too.

=== "python"

@@ -72,7 +72,7 @@ Used to execute a single unit of work. You can use [python](/concepts/task/#pyth

--8<-- "examples/python-tasks.py"

```

- 1. Note that the command is the [path to the function](/concepts/task/#python_functions).

+ 1. Note that the command is the [path to the function](../concepts/task.md/#python_functions).

2. There are many ways to define dependencies within nodes, step1 >> step2, step1 << step2 or during the definition of step1, we can define a next step.

3. ```terminate_with_success``` indicates that the dag is completed successfully. You can also use ```terminate_with_failure``` to indicate the dag failed.

4. Add ```success``` and ```fail``` nodes to the dag.

diff --git a/docs/extensions.md b/docs/extensions.md

index 1a42f8e6..132c1482 100644

--- a/docs/extensions.md

+++ b/docs/extensions.md

@@ -2,7 +2,7 @@

Magnus is built around the idea to decouple the pipeline definition and pipeline execution.

-[All the concepts](/concepts/the-big-picture/) are defined with this principle and therefore

+[All the concepts](concepts/the-big-picture.md) are defined with this principle and therefore

are extendible as long as the API is satisfied.

We internally use [stevedore](https:/pypi.org/project/stevedore/) to manage extensions.

@@ -82,9 +82,9 @@ are extended from pydantic BaseModel.

Register to namespace: [tool.poetry.plugins."executor"]

-Examples: [local](/configurations/executors/local),

-[local-container](/configurations/executors/local-container),

-[argo](/configurations/executors/argo)

+Examples: [local](configurations/executors/local.md),

+[local-container](configurations/executors/local-container.md),

+[argo](configurations/executors/argo.md)

::: magnus.executor.BaseExecutor

options:

@@ -99,9 +99,9 @@ Examples: [local](/configurations/executors/local),

Register to namespace: [tool.poetry.plugins."run_log_store"]

-Examples: [buffered](/configurations/run-log/#buffered),

-[file-system](/configurations/run-log/#file-system),

- [chunked-fs](/configurations/run-log/#chunked-fs)

+Examples: [buffered](configurations/run-log.md/#buffered),

+[file-system](configurations/run-log.md/#file-system),

+ [chunked-fs](configurations/run-log.md/#chunked-fs)

::: magnus.datastore.BaseRunLogStore

options:

@@ -120,8 +120,8 @@ The ```RunLog``` is a nested pydantic model and is located in ```magnus.datastor

Register to namespace: [tool.poetry.plugins."catalog"]

Example:

-[do-nothing](/configurations/catalog/#do-nothing),

- [file-system](/configurations/catalog/#file-system)

+[do-nothing](configurations/catalog.md/#do-nothing),

+ [file-system](configurations/catalog.md/#file-system)

::: magnus.catalog.BaseCatalog

options:

@@ -137,9 +137,9 @@ Example:

Register to namespace: [tool.poetry.plugins."secrets"]

Example:

-[do-nothing](/configurations/secrets/#do-nothing),

- [env-secrets-manager](/configurations/secrets/#environment_secret_manager),

- [dotenv](/configurations/secrets/#dotenv)

+[do-nothing](configurations/secrets.md/#do-nothing),

+ [env-secrets-manager](configurations/secrets.md/#environment_secret_manager),

+ [dotenv](configurations/secrets.md/#dotenv)

::: magnus.secrets.BaseSecrets

options:

@@ -155,7 +155,7 @@ Example:

Register to namespace: [tool.poetry.plugins."experiment_tracker"]

Example:

-[do-nothing](/configurations/experiment-tracking), ```mlflow```

+[do-nothing](configurations/experiment-tracking.md), ```mlflow```

::: magnus.experiment_tracker.BaseExperimentTracker

options:

@@ -170,10 +170,10 @@ Example:

Register to namespace: [tool.poetry.plugins."nodes"]

Example:

-[task](/concepts/task),

-[stub](/concepts/stub),

-[parallel](/concepts/parallel),

-[map](/concepts/map)

+[task](concepts/task.md),

+[stub](concepts/stub.md),

+[parallel](concepts/parallel.md),

+[map](concepts/map.md)

::: magnus.nodes.BaseNode

options:

@@ -190,9 +190,9 @@ Example:

Register to namespace: [tool.poetry.plugins."tasks"]

Example:

-[python](/concepts/task/#python_functions),

-[shell](/concepts/task/#shell),

-[notebook](/concepts/task/#notebook)

+[python](concepts/task.md/#python_functions),

+[shell](concepts/task.md/#shell),

+[notebook](concepts/task.md/#notebook)

::: magnus.tasks.BaseTaskType

options:

diff --git a/docs/index.md b/docs/index.md

index 90b7df8f..b41efe86 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -13,32 +13,33 @@ sidebarDepth: 0

Magnus is a simplified workflow definition language that helps in:

- **Streamlined Design Process:** Magnus enables users to efficiently plan their pipelines with

-[stubbed nodes](concepts/stub), along with offering support for various structures such as

-[tasks](../concepts/task), [parallel branches](concepts/parallel), and [loops or map branches](concepts/map)

-in both [yaml](concepts/pipeline) or a [python SDK](sdk) for maximum flexibility.

+[stubbed nodes](concepts/stub.md), along with offering support for various structures such as

+[tasks](concepts/task.md), [parallel branches](concepts/parallel.md), and [loops or map branches](concepts/map.md)

+in both [yaml](concepts/pipeline.md) or a [python SDK](sdk.md) for maximum flexibility.

- **Incremental Development:** Build your pipeline piece by piece with Magnus, which allows for the

-implementation of tasks as [python functions](concepts/task/#python_functions),

-[notebooks](concepts/task/#notebooks), or [shell scripts](concepts/task/#shell),

+implementation of tasks as [python functions](concepts/task.md/#python_functions),

+[notebooks](concepts/task.md/#notebooks), or [shell scripts](concepts/task.md/#shell),

adapting to the developer's preferred tools and methods.

- **Robust Testing:** Ensure your pipeline performs as expected with the ability to test using sampled data. Magnus

-also provides the capability to [mock and patch tasks](configurations/executors/mocked)

+also provides the capability to [mock and patch tasks](configurations/executors/mocked.md)

for thorough evaluation before full-scale deployment.

- **Seamless Deployment:** Transition from the development stage to production with ease.

-Magnus simplifies the process by requiring [only configuration changes](configurations/overview)

-to adapt to different environments, including support for [argo workflows](configurations/executors/argo).

+Magnus simplifies the process by requiring

+[only configuration changes](configurations/overview.md)

+to adapt to different environments, including support for [argo workflows](configurations/executors/argo.md).

- **Efficient Debugging:** Quickly identify and resolve issues in pipeline execution with Magnus's local

-debugging features. Retrieve data from failed tasks and [retry failures](concepts/run-log/#retrying_failures)

+debugging features. Retrieve data from failed tasks and [retry failures](concepts/run-log.md/#retrying_failures)

using your chosen debugging tools to maintain a smooth development experience.

Along with the developer friendly features, magnus also acts as an interface to production grade concepts

-such as [data catalog](concepts/catalog), [reproducibility](concepts/run-log),

-[experiment tracking](concepts/experiment-tracking)

-and secure [access to secrets](concepts/secrets).

+such as [data catalog](concepts/catalog.md), [reproducibility](concepts/run-log.md),

+[experiment tracking](concepts/experiment-tracking.md)

+and secure [access to secrets](concepts/secrets.md).

## Motivation

diff --git a/docs/usage.md b/docs/usage.md

index 44b553e1..deaaffb4 100644

--- a/docs/usage.md

+++ b/docs/usage.md

@@ -40,14 +40,14 @@ pip install "magnus[mlflow]"

## Usage

-Pipelines defined in **magnus** can be either via [python sdk](/sdk) or ```yaml``` based definitions.

+Pipelines in **magnus** can be defined either via the [python sdk](sdk.md) or through ```yaml``` based definitions.

To execute a pipeline, defined in ```yaml```, use the **magnus** cli.

The options are detailed below:

- ```-f, --file``` (str): The pipeline definition file, defaults to pipeline.yaml

-- ```-c, --config-file``` (str): [config file](/configurations/overview) to be used for the run [default: None]

-- ```-p, --parameters-file``` (str): [Parameters](/concepts/parameters) accessible by the application [default: None]

+- ```-c, --config-file``` (str): [config file](configurations/overview.md) to be used for the run [default: None]

+- ```-p, --parameters-file``` (str): [Parameters](concepts/parameters.md) accessible by the application [default: None]

- ```--log-level``` : The log level, one of ```INFO | DEBUG | WARNING| ERROR| FATAL``` [default: INFO]

- ```--tag``` (str): A tag attached to the run[default: ]

- ```--run-id``` (str): An optional run_id, one would be generated if not provided

diff --git a/docs/why-magnus.md b/docs/why-magnus.md

index 90aa747c..6db6bcfc 100644

--- a/docs/why-magnus.md

+++ b/docs/why-magnus.md

@@ -11,7 +11,7 @@ straightforward implementation for task orchestration. Nonetheless, due to their

design, orchestrating the flow of data—whether parameters or artifacts—can introduce complexity and

require careful handling.

-Magnus simplifies this aspect by introducing an [intuitive mechanism for data flow](/example/dataflow),

+Magnus simplifies this aspect by introducing an [intuitive mechanism for data flow](example/dataflow.md),

thereby streamlining data management. This approach allows the orchestrators to focus on their core

competency: allocating the necessary computational resources for task execution.

@@ -23,7 +23,7 @@ In the context of the project's proof-of-concept (PoC) phase, the utilization of

which is most effectively achieved through the use of local development tools.

Magnus serves as an intermediary stage, simulating the production environment by offering [local

-versions](/configurations/overview/) of essential services—such as execution engines, data catalogs, secret management, and

+versions](configurations/overview.md) of essential services—such as execution engines, data catalogs, secret management, and

experiment tracking—without necessitating intricate configuration. As the project transitions into the

production phase, these local stand-ins are replaced with their robust, production-grade counterparts.

@@ -40,8 +40,8 @@ experimentation, thus impeding iterative research and development.

Magnus is engineered to minimize the need for such extensive refactoring when operationalizing

-projects. It achieves this by allowing tasks to be defined as [simple Python functions](/concepts/task/#python_functions)

-or [Jupyter notebooks](/concepts/task/#notebook). This means that the research-centric components of the code

+projects. It achieves this by allowing tasks to be defined as [simple Python functions](concepts/task.md/#python_functions)

+or [Jupyter notebooks](concepts/task.md/#notebook). This means that the research-centric components of the code

can remain unchanged, avoiding

the need for immediate refactoring and allowing for the postponement of these efforts until they

become necessary for the long-term maintenance of the product.

diff --git a/magnus/sdk.py b/magnus/sdk.py

index e9603c58..30f6704b 100644

--- a/magnus/sdk.py

+++ b/magnus/sdk.py

@@ -26,7 +26,7 @@

class Catalog(BaseModel):

"""

Use to instruct a task to sync data from/to the central catalog.

- Please refer to [concepts](../../concepts/catalog) for more information.

+ Please refer to [concepts](concepts/catalog.md) for more information.

Attributes:

get (List[str]): List of glob patterns to get from central catalog to the compute data folder.

@@ -110,13 +110,13 @@ def create_node(self) -> TraversalNode:

class Task(BaseTraversal):

"""

An execution node of the pipeline.

- Please refer to [concepts](../../concepts/task) for more information.

+ Please refer to [concepts](concepts/task.md) for more information.

Attributes:

name (str): The name of the node.

command (str): The command to execute.

- - For python functions, [dotted path](../../concepts/task/#python_functions) to the function.

+ - For python functions, [dotted path](concepts/task.md/#python_functions) to the function.

- For shell commands: command to execute in the shell.

- For notebooks: path to the notebook.

command_type (str): The type of command to execute.

@@ -197,7 +197,7 @@ class Stub(BaseTraversal):

A node that does nothing.

A stub node can tak arbitrary number of arguments.

- Please refer to [concepts](../../concepts/stub) for more information.

+ Please refer to [concepts](concepts/stub.md) for more information.

Attributes:

name (str): The name of the node.

@@ -220,7 +220,7 @@ def create_node(self) -> StubNode:

class Parallel(BaseTraversal):

"""

A node that executes multiple branches in parallel.

- Please refer to [concepts](../../concepts/parallel) for more information.

+ Please refer to [concepts](concepts/parallel.md) for more information.

Attributes:

name (str): The name of the node.

@@ -249,7 +249,7 @@ def create_node(self) -> ParallelNode:

class Map(BaseTraversal):

"""

A node that iterates over a list of items and executes a pipeline for each item.

- Please refer to [concepts](../../concepts/map) for more information.

+ Please refer to [concepts](concepts/map.md) for more information.

Attributes:

branch: The pipeline to execute for each item.

@@ -400,11 +400,11 @@ def execute(

Execution of pipeline could either be:

- Traverse and execute all the steps of the pipeline, eg. [local execution](../../configurations/executors/local).

+ Traverse and execute all the steps of the pipeline, e.g. [local execution](configurations/executors/local.md).

Or create the ```yaml``` representation of the pipeline for other executors.

- Please refer to [concepts](../../concepts/executor) for more information.

+ Please refer to [concepts](concepts/executor.md) for more information.

Args:

configuration_file (str, optional): The path to the configuration file. Defaults to "".