-Magnus is a simplified workflow definition language that helps in:

+runnable is a simplified workflow definition language that helps in:

-- **Streamlined Design Process:** Magnus enables users to efficiently plan their pipelines with

-[stubbed nodes](https://astrazeneca.github.io/magnus-core/concepts/stub), along with offering support for various structures such as

-[tasks](https://astrazeneca.github.io/magnus-core/concepts/task), [parallel branches](https://astrazeneca.github.io/magnus-core/concepts/parallel), and [loops or map branches](https://astrazeneca.github.io/magnus-core/concepts/map)

-in both [yaml](https://astrazeneca.github.io/magnus-core/concepts/pipeline) or a [python SDK](https://astrazeneca.github.io/magnus-core/sdk) for maximum flexibility.

+- **Streamlined Design Process:** runnable enables users to efficiently plan their pipelines with

+[stubbed nodes](https://astrazeneca.github.io/runnable-core/concepts/stub), along with offering support for various structures such as

+[tasks](https://astrazeneca.github.io/runnable-core/concepts/task), [parallel branches](https://astrazeneca.github.io/runnable-core/concepts/parallel), and [loops or map branches](https://astrazeneca.github.io/runnable-core/concepts/map)

+in either [yaml](https://astrazeneca.github.io/runnable-core/concepts/pipeline) or a [python SDK](https://astrazeneca.github.io/runnable-core/sdk) for maximum flexibility.

-- **Incremental Development:** Build your pipeline piece by piece with Magnus, which allows for the

-implementation of tasks as [python functions](https://astrazeneca.github.io/magnus-core/concepts/task/#python_functions),

-[notebooks](https://astrazeneca.github.io/magnus-core/concepts/task/#notebooks), or [shell scripts](https://astrazeneca.github.io/magnus-core/concepts/task/#shell),

+- **Incremental Development:** Build your pipeline piece by piece with runnable, which allows for the

+implementation of tasks as [python functions](https://astrazeneca.github.io/runnable-core/concepts/task/#python_functions),

+[notebooks](https://astrazeneca.github.io/runnable-core/concepts/task/#notebooks), or [shell scripts](https://astrazeneca.github.io/runnable-core/concepts/task/#shell),

adapting to the developer's preferred tools and methods.

-- **Robust Testing:** Ensure your pipeline performs as expected with the ability to test using sampled data. Magnus

-also provides the capability to [mock and patch tasks](https://astrazeneca.github.io/magnus-core/configurations/executors/mocked)

+- **Robust Testing:** Ensure your pipeline performs as expected with the ability to test using sampled data. runnable

+also provides the capability to [mock and patch tasks](https://astrazeneca.github.io/runnable-core/configurations/executors/mocked)

for thorough evaluation before full-scale deployment.

- **Seamless Deployment:** Transition from the development stage to production with ease.

-Magnus simplifies the process by requiring [only configuration changes](https://astrazeneca.github.io/magnus-core/configurations/overview)

-to adapt to different environments, including support for [argo workflows](https://astrazeneca.github.io/magnus-core/configurations/executors/argo).

+runnable simplifies the process by requiring [only configuration changes](https://astrazeneca.github.io/runnable-core/configurations/overview)

+to adapt to different environments, including support for [argo workflows](https://astrazeneca.github.io/runnable-core/configurations/executors/argo).

-- **Efficient Debugging:** Quickly identify and resolve issues in pipeline execution with Magnus's local

-debugging features. Retrieve data from failed tasks and [retry failures](https://astrazeneca.github.io/magnus-core/concepts/run-log/#retrying_failures)

+- **Efficient Debugging:** Quickly identify and resolve issues in pipeline execution with runnable's local

+debugging features. Retrieve data from failed tasks and [retry failures](https://astrazeneca.github.io/runnable-core/concepts/run-log/#retrying_failures)

using your chosen debugging tools to maintain a smooth development experience.

-Along with the developer friendly features, magnus also acts as an interface to production grade concepts

-such as [data catalog](https://astrazeneca.github.io/magnus-core/concepts/catalog), [reproducibility](https://astrazeneca.github.io/magnus-core/concepts/run-log),

-[experiment tracking](https://astrazeneca.github.io/magnus-core/concepts/experiment-tracking)

-and secure [access to secrets](https://astrazeneca.github.io/magnus-core/concepts/secrets).

+Along with the developer-friendly features, runnable also acts as an interface to production-grade concepts

+such as [data catalog](https://astrazeneca.github.io/runnable-core/concepts/catalog), [reproducibility](https://astrazeneca.github.io/runnable-core/concepts/run-log),

+[experiment tracking](https://astrazeneca.github.io/runnable-core/concepts/experiment-tracking)

+and secure [access to secrets](https://astrazeneca.github.io/runnable-core/concepts/secrets).

@@ -59,19 +76,19 @@ and secure [access to secrets](https://astrazeneca.github.io/magnus-core/concept

## Documentation

-[More details about the project and how to use it available here](https://astrazeneca.github.io/magnus-core/).

+[More details about the project and how to use it are available here](https://astrazeneca.github.io/runnable-core/).

## Installation

-The minimum python version that magnus supports is 3.8

+The minimum python version that runnable supports is 3.8.

```shell

-pip install magnus

+pip install runnable

```

-Please look at the [installation guide](https://astrazeneca.github.io/magnus-core/usage)

+Please look at the [installation guide](https://astrazeneca.github.io/runnable-core/usage)

for more information.

@@ -117,7 +134,7 @@ def return_parameter() -> Parameter:

def display_parameter(x: int, y: InnerModel):

"""

Annotating the arguments of the function is important for

- magnus to understand the type of parameters you want.

+ runnable to understand the type of parameters you want.

Input args can be a pydantic model or the individual attributes.

"""

@@ -138,7 +155,7 @@ my_param = return_parameter()

display_parameter(my_param.x, my_param.y)

```

-### Orchestration using magnus

+### Orchestration using runnable

@@ -153,7 +170,7 @@ Example present at: ```examples/python-tasks.py```

Run it as: ```python examples/python-tasks.py```

```python

-from magnus import Pipeline, Task

+from runnable import Pipeline, Task

def main():

step1 = Task(

@@ -188,7 +205,7 @@ if __name__ == "__main__":

Example present at: ```examples/python-tasks.yaml```

-Execute via the cli: ```magnus execute -f examples/python-tasks.yaml```

+Execute via the cli: ```runnable execute -f examples/python-tasks.yaml```

```yaml

dag:

@@ -231,9 +248,9 @@ No code change, just change the configuration.

executor:

type: "argo"

config:

- image: magnus:demo

+ image: runnable:demo

persistent_volumes:

- - name: magnus-volume

+ - name: runnable-volume

mount_path: /mnt

run_log_store:

@@ -242,9 +259,9 @@ run_log_store:

log_folder: /mnt/run_log_store

```

-More details can be found in [argo configuration](https://astrazeneca.github.io/magnus-core/configurations/executors/argo).

+More details can be found in [argo configuration](https://astrazeneca.github.io/runnable-core/configurations/executors/argo).

-Execute the code as ```magnus execute -f examples/python-tasks.yaml -c examples/configs/argo-config.yam```

+Execute the code as ```runnable execute -f examples/python-tasks.yaml -c examples/configs/argo-config.yaml```


@@ -253,12 +270,12 @@ Execute the code as ```magnus execute -f examples/python-tasks.yaml -c examples/

apiVersion: argoproj.io/v1alpha1

kind: Workflow

metadata:

- generateName: magnus-dag-

+ generateName: runnable-dag-

annotations: {}

labels: {}

spec:

activeDeadlineSeconds: 172800

- entrypoint: magnus-dag

+ entrypoint: runnable-dag

podGC:

strategy: OnPodCompletion

retryStrategy:

@@ -270,7 +287,7 @@ spec:

maxDuration: '3600'

serviceAccountName: default-editor

templates:

- - name: magnus-dag

+ - name: runnable-dag

failFast: true

dag:

tasks:

@@ -285,9 +302,9 @@ spec:

depends: step-2-task-772vg3.Succeeded

- name: step-1-task-uvdp7h

container:

- image: magnus:demo

+ image: runnable:demo

command:

- - magnus

+ - runnable

- execute_single_node

- '{{workflow.parameters.run_id}}'

- step%1

@@ -310,9 +327,9 @@ spec:

cpu: 250m

- name: step-2-task-772vg3

container:

- image: magnus:demo

+ image: runnable:demo

command:

- - magnus

+ - runnable

- execute_single_node

- '{{workflow.parameters.run_id}}'

- step%2

@@ -335,9 +352,9 @@ spec:

cpu: 250m

- name: success-success-igzq2e

container:

- image: magnus:demo

+ image: runnable:demo

command:

- - magnus

+ - runnable

- execute_single_node

- '{{workflow.parameters.run_id}}'

- success

@@ -368,7 +385,7 @@ spec:

volumes:

- name: executor-0

persistentVolumeClaim:

- claimName: magnus-volume

+ claimName: runnable-volume

```

@@ -379,22 +396,22 @@ spec:

### Linear

A simple linear pipeline with tasks either

-[python functions](https://astrazeneca.github.io/magnus-core/concepts/task/#python_functions),

-[notebooks](https://astrazeneca.github.io/magnus-core/concepts/task/#notebooks), or [shell scripts](https://astrazeneca.github.io/magnus-core/concepts/task/#shell)

+[python functions](https://astrazeneca.github.io/runnable-core/concepts/task/#python_functions),

+[notebooks](https://astrazeneca.github.io/runnable-core/concepts/task/#notebooks), or [shell scripts](https://astrazeneca.github.io/runnable-core/concepts/task/#shell)

[](https://mermaid.live/edit#pako:eNpl0bFuwyAQBuBXQVdZTqTESpxMDJ0ytkszhgwnOCcoNo4OaFVZfvcSx20tGSQ4fn0wHB3o1hBIyLJOWGeDFJ3Iq7r90lfkkA9HHfmTUpnX1hFyLvrHzDLl_qB4-1BOOZGGD3TfSikvTDSNFqdj2sT2vBTr9euQlXNWjqycsN2c7UZWFMUE7udwP0L3y6JenNKiyfvz8t8_b-gavT9QJYY0PcDtjeTLptrAChriBq1JzeoeWkG4UkMKZCoN8k2Bcn1yGEN7_HYaZOBIK4h3g4EOFi-MDcgKa59SMja0_P7s_vAJ_Q_YOH6o)

-### [Parallel branches](https://astrazeneca.github.io/magnus-core/concepts/parallel)

+### [Parallel branches](https://astrazeneca.github.io/runnable-core/concepts/parallel)

Execute branches in parallel

[](https://mermaid.live/edit#pako:eNp9k01rwzAMhv-K8S4ZtJCzDzuMLmWwwkh2KMQ7eImShiZ2sB1KKf3vs52PpsWNT7LySHqlyBeciRwwwUUtTtmBSY2-YsopR8MpQUfAdCdBBekWNBpvv6-EkFICzGAtWcUTDW3wYy20M7lr5QGBK2j-anBAkH4M1z6grnjpy17xAiTwDII07jj6HK8-VnVZBspITnpjztyoVkLLJOy3Qfrdm6gQEu2370Io7WLORo84PbRoA_oOl9BBg4UHbHR58UkMWq_fxjrOnhLRx1nH0SgkjlBjh7ekxNKGc0NelDLknhePI8qf7MVNr_31nm1wwNTeM2Ao6pmf-3y3Mp7WlqA7twOnXfKs17zt-6azmim1gQL1A0NKS3EE8hKZE4Yezm3chIVFiFe4AdmwKjdv7mIjKNYHaIBiYsycySPFlF8NxzotkjPPMNGygxXu2pxp2FSslKzBpGC1Ml7IKy3krn_E7i1f_wEayTcn)

-### [loops or map](https://astrazeneca.github.io/magnus-core/concepts/map)

+### [loops or map](https://astrazeneca.github.io/runnable-core/concepts/map)

Execute a pipeline over an iterable parameter.

[](https://mermaid.live/edit#pako:eNqVlF1rwjAUhv9KyG4qKNR-3AS2m8nuBgN3Z0Sy5tQG20SSdE7E_76kVVEr2CY3Ied9Tx6Sk3PAmeKACc5LtcsKpi36nlGZFbXciHwfLN79CuWiBLMcEULWGkBSaeosA2OCxbxdXMd89Get2bZASsLiSyuvQE2mJZXIjW27t2rOmQZ3Gp9rD6UjatWnwy7q6zPPukd50WTydmemEiS_QbQ79RwxGoQY9UaMuojRA8TCXexzyHgQZNwbMu5Cxl3IXNX6OWMyiDHpzZh0GZMHjOK3xz2mgxjT3oxplzG9MPp5_nVOhwJjteDwOg3HyFj3L1dCcvh7DUc-iftX18n6Waet1xX8cG908vpKHO6OW7cvkeHm5GR2b3drdvaSGTODHLW37mxabYC8fLgRhlfxpjNdwmEets-Dx7gCXTHBXQc8-D2KbQEVUEzckjO9oZjKo9Ox2qr5XmaYWF3DGNdbzizMBHOVVWGSs9K4XeDCKv3ZttSmsx7_AYa341E)

-### [Arbitrary nesting](https://astrazeneca.github.io/magnus-core/concepts/nesting/)

+### [Arbitrary nesting](https://astrazeneca.github.io/runnable-core/concepts/nesting/)

Any nesting of parallel within map and so on.

diff --git a/assets/favicon.png b/assets/favicon.png

deleted file mode 100644

index 101427f5..00000000

Binary files a/assets/favicon.png and /dev/null differ

diff --git a/assets/logo-readme.png b/assets/logo-readme.png

deleted file mode 100644

index b859410d..00000000

Binary files a/assets/logo-readme.png and /dev/null differ

diff --git a/assets/logo.png b/assets/logo.png

deleted file mode 100644

index f170eb03..00000000

Binary files a/assets/logo.png and /dev/null differ

diff --git a/assets/work.png b/assets/work.png

deleted file mode 100644

index ca4f48cb..00000000

Binary files a/assets/work.png and /dev/null differ

diff --git a/docs/.DS_Store b/docs/.DS_Store

deleted file mode 100644

index b996586c..00000000

Binary files a/docs/.DS_Store and /dev/null differ

diff --git a/docs/assets/cropped.png b/docs/assets/cropped.png

new file mode 100644

index 00000000..ee79c445

Binary files /dev/null and b/docs/assets/cropped.png differ

diff --git a/docs/assets/favicon.png b/docs/assets/favicon.png

deleted file mode 100644

index 101427f5..00000000

Binary files a/docs/assets/favicon.png and /dev/null differ

diff --git a/docs/assets/logo.png b/docs/assets/logo.png

deleted file mode 100644

index f170eb03..00000000

Binary files a/docs/assets/logo.png and /dev/null differ

diff --git a/docs/assets/logo1.png b/docs/assets/logo1.png

deleted file mode 100644

index a29af02d..00000000

Binary files a/docs/assets/logo1.png and /dev/null differ

diff --git a/docs/assets/speed.png b/docs/assets/speed.png

new file mode 100644

index 00000000..fb02a09e

Binary files /dev/null and b/docs/assets/speed.png differ

diff --git a/docs/assets/sport.png b/docs/assets/sport.png

new file mode 100644

index 00000000..800f8e15

Binary files /dev/null and b/docs/assets/sport.png differ

diff --git a/docs/assets/whatdo.png b/docs/assets/whatdo.png

deleted file mode 100644

index 77f2ce04..00000000

Binary files a/docs/assets/whatdo.png and /dev/null differ

diff --git a/docs/assets/work.png b/docs/assets/work.png

deleted file mode 100644

index ca4f48cb..00000000

Binary files a/docs/assets/work.png and /dev/null differ

diff --git a/docs/concepts/catalog.md b/docs/concepts/catalog.md

index af02b1af..384eb196 100644

--- a/docs/concepts/catalog.md

+++ b/docs/concepts/catalog.md

@@ -4,6 +4,8 @@

data between tasks. The default configuration of ```do-nothing``` is no-op by design.

We kindly request to raise a feature request to make us aware of the eco-system.

+# TODO: Simplify this

+

Catalog provides a way to store and retrieve data generated by the individual steps of the dag to downstream

steps of the dag. It can be any storage system that indexes its data by a unique identifier.
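The idea of a store indexed by a unique identifier can be sketched as a minimal file-system catalog. This assumes the run_id is the identifier and that files keep their project-relative paths inside each run's partition; the class `FileSystemCatalog` and its `put`/`get` methods are hypothetical illustrations, not runnable's catalog API.

```python
import shutil
import tempfile
from pathlib import Path


class FileSystemCatalog:
    """Hypothetical catalog: one partition (directory) per run_id."""

    def __init__(self, catalog_root: str) -> None:
        self.catalog_root = Path(catalog_root)

    def _partition(self, run_id: str) -> Path:
        # The unique identifier (run_id) indexes all data stored for a run.
        return self.catalog_root / run_id

    def put(self, run_id: str, project_root: str, relative_path: str) -> Path:
        # Copy a file from the project into the partition, keeping its relative path.
        source = Path(project_root) / relative_path
        target = self._partition(run_id) / relative_path
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(source, target)
        return target

    def get(self, run_id: str, project_root: str, relative_path: str) -> Path:
        # Restore a file from the partition to the same relative path in a project.
        source = self._partition(run_id) / relative_path
        target = Path(project_root) / relative_path
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(source, target)
        return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as upstream, \
            tempfile.TemporaryDirectory() as downstream, \
            tempfile.TemporaryDirectory() as store:
        catalog = FileSystemCatalog(store)
        data_file = Path(upstream) / "data" / "df.csv"
        data_file.parent.mkdir(parents=True)
        data_file.write_text("a,b\n1,2\n")
        # An upstream step puts the file; a downstream step gets it back.
        catalog.put("run-001", upstream, "data/df.csv")
        restored = catalog.get("run-001", downstream, "data/df.csv")
        print(restored.read_text())
```

Keeping the project-relative path inside each partition is what lets a step read and write catalog data as if it were working with the local directory structure.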

@@ -20,7 +22,7 @@ The directory structure within a partition is the same as the project directory

get/put data in the catalog as if you are working with local directory structure. Every interaction with the catalog

(either by API or configuration) results in an entry in the [```run log```](../concepts/run-log.md/#step_log)

-Internally, magnus also uses the catalog to store execution logs of tasks i.e stdout and stderr from

+Internally, runnable also uses the catalog to store execution logs of tasks, i.e. stdout and stderr from

[python](../concepts/task.md/#python) or [shell](../concepts/task.md/#shell) and executed notebook

from [notebook tasks](../concepts/task.md/#notebook).

@@ -153,7 +155,7 @@ The execution results in the ```catalog``` populated with the artifacts and the

"code_identifier": "6029841c3737fe1163e700b4324d22a469993bb0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -199,7 +201,7 @@ The execution results in the ```catalog``` populated with the artifacts and the

"code_identifier": "6029841c3737fe1163e700b4324d22a469993bb0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -245,7 +247,7 @@ The execution results in the ```catalog``` populated with the artifacts and the

"code_identifier": "6029841c3737fe1163e700b4324d22a469993bb0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -284,7 +286,7 @@ The execution results in the ```catalog``` populated with the artifacts and the

"code_identifier": "6029841c3737fe1163e700b4324d22a469993bb0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -337,7 +339,7 @@ The execution results in the ```catalog``` populated with the artifacts and the

"code_identifier": "6029841c3737fe1163e700b4324d22a469993bb0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -467,7 +469,7 @@ and [notebook](../concepts/task.md/#notebook) tasks.

Data objects can be shared between [python](../concepts/task.md/#python_functions) or

[notebook](../concepts/task.md/#notebook) tasks,

instead of serializing data and deserializing to file structure, using

-[get_object](../interactions.md/#magnus.get_object) and [put_object](../interactions.md/#magnus.put_object).

+[get_object](../interactions.md/#runnable.get_object) and [put_object](../interactions.md/#runnable.put_object).

Internally, we use [pickle](https://docs.python.org/3/library/pickle.html) to serialize and

deserialize python objects. Please ensure that the object can be serialized via pickle.
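Because shared objects go through pickle, a quick round-trip check before handing an object over can catch problems early. The helper `is_picklable` below is a hypothetical convenience, not part of runnable; it relies only on the standard `pickle` module.

```python
import pickle
from typing import Any


def is_picklable(obj: Any) -> bool:
    """Return True if obj survives a pickle round trip (hypothetical helper)."""
    try:
        pickle.loads(pickle.dumps(obj))
    except (pickle.PicklingError, AttributeError, TypeError):
        # Lambdas, local functions, generators and open handles typically fail here.
        return False
    return True


if __name__ == "__main__":
    print(is_picklable({"weights": [0.1, 0.2]}))  # plain containers pickle fine
    print(is_picklable(x for x in range(3)))      # generators cannot be pickled
```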

diff --git a/docs/concepts/executor.md b/docs/concepts/executor.md

index 307f7fe0..d9dc3208 100644

--- a/docs/concepts/executor.md

+++ b/docs/concepts/executor.md

@@ -1,4 +1,7 @@

-Executors are the heart of magnus, they traverse the workflow and execute the tasks within the

+

+## TODO: Simplify

+

+Executors are the heart of runnable: they traverse the workflow and execute the tasks within the

workflow while coordinating with different services

(e.g. [run log](../concepts/run-log.md), [catalog](../concepts/catalog.md), [secrets](../concepts/secrets.md) etc.)

@@ -23,7 +26,7 @@ any workflow engine.

## Graph Traversal

-In magnus, the graph traversal can be performed by magnus itself or can be handed over to other

+In runnable, the graph traversal can be performed by runnable itself or can be handed over to other

orchestration frameworks (e.g. Argo workflows, AWS step functions).

### Example

@@ -44,7 +47,7 @@ translated to argo specification just by changing the configuration.

You can execute the pipeline in default configuration by:

- ```magnus execute -f examples/concepts/task_shell_simple.yaml```

+ ```runnable execute -f examples/concepts/task_shell_simple.yaml```

``` yaml linenums="1"

--8<-- "examples/configs/default.yaml"

@@ -60,16 +63,16 @@ translated to argo specification just by changing the configuration.

In this configuration, we are using [argo workflows](https://argoproj.github.io/argo-workflows/)

as our workflow engine. We are also instructing the workflow engine to use a docker image,

- ```magnus:demo``` defined in line #4, as our execution environment. Please read

+ ```runnable:demo``` defined in line #4, as our execution environment. Please read

[containerised environments](../configurations/executors/container-environments.md) for more information.

- Since magnus needs to track the execution status of the workflow, we are using a ```run log```

+ Since runnable needs to track the execution status of the workflow, we are using a ```run log```

which is persistent and available to jobs in the kubernetes environment.

You can execute the pipeline in argo configuration by:

- ```magnus execute -f examples/concepts/task_shell_simple.yaml -c examples/configs/argo-config.yaml```

+ ```runnable execute -f examples/concepts/task_shell_simple.yaml -c examples/configs/argo-config.yaml```

``` yaml linenums="1"

--8<-- "examples/configs/argo-config.yaml"

@@ -78,7 +81,7 @@ translated to argo specification just by changing the configuration.

1. Use argo workflows as the execution engine to run the pipeline.

2. Run this docker image for every step of the pipeline. The docker image should have the same directory structure

as the project directory.

- 3. Mount the volume from Kubernetes persistent volumes (magnus-volume) to /mnt directory.

+ 3. Mount the volume from Kubernetes persistent volumes (runnable-volume) to the /mnt directory.

4. Resource constraints for the container runtime.

5. Since every step runs in a container, the run log should be persisted. Here we are using the file-system as our

run log store.

@@ -94,7 +97,7 @@ translated to argo specification just by changing the configuration.

- The graph traversal rules follow the same rules as our workflow. The

step ```success-success-ou7qlf``` in line #15 only happens if the step ```shell-task-dz3l3t```

defined in line #12 succeeds.

- - The execution fails if any of the tasks fail. Both argo workflows and magnus ```run log```

+ - The execution fails if any of the tasks fail. Both argo workflows and runnable ```run log```

mark the execution as failed.

@@ -102,12 +105,12 @@ translated to argo specification just by changing the configuration.

apiVersion: argoproj.io/v1alpha1

kind: Workflow

metadata:

- generateName: magnus-dag-

+ generateName: runnable-dag-

annotations: {}

labels: {}

spec:

activeDeadlineSeconds: 172800

- entrypoint: magnus-dag

+ entrypoint: runnable-dag

podGC:

strategy: OnPodCompletion

retryStrategy:

@@ -119,7 +122,7 @@ translated to argo specification just by changing the configuration.

maxDuration: '3600'

serviceAccountName: default-editor

templates:

- - name: magnus-dag

+ - name: runnable-dag

failFast: true

dag:

tasks:

@@ -131,9 +134,9 @@ translated to argo specification just by changing the configuration.

depends: shell-task-4jy8pl.Succeeded

- name: shell-task-4jy8pl

container:

- image: magnus:demo

+ image: runnable:demo

command:

- - magnus

+ - runnable

- execute_single_node

- '{{workflow.parameters.run_id}}'

- shell

@@ -156,9 +159,9 @@ translated to argo specification just by changing the configuration.

cpu: 250m

- name: success-success-djhm6j

container:

- image: magnus:demo

+ image: runnable:demo

command:

- - magnus

+ - runnable

- execute_single_node

- '{{workflow.parameters.run_id}}'

- success

@@ -189,13 +192,13 @@ translated to argo specification just by changing the configuration.

volumes:

- name: executor-0

persistentVolumeClaim:

- claimName: magnus-volume

+ claimName: runnable-volume

```

-As seen from the above example, once a [pipeline is defined in magnus](../concepts/pipeline.md) either via yaml or SDK, we can

+As seen from the above example, once a [pipeline is defined in runnable](../concepts/pipeline.md) either via yaml or SDK, we can

run the pipeline in different environments just by providing a different configuration. Most often, there is

no need to change the code or deviate from standard best practices while coding.

@@ -204,11 +207,11 @@ no need to change the code or deviate from standard best practices while coding.

!!! note

- This section is to understand the internal mechanism of magnus and not required if you just want to

+ This section explains the internal mechanism of runnable and is not required if you just want to

use different executors.

-Independent of traversal, all the tasks are executed within the ```context``` of magnus.

+Independent of traversal, all the tasks are executed within the ```context``` of runnable.

A closer look at the actual task implemented as part of transpiled workflow in argo

specification details the inner workings. Below is a snippet of the argo specification from

@@ -217,9 +220,9 @@ lines 18 to 34.

```yaml linenums="18"

- name: shell-task-dz3l3t

container:

- image: magnus-example:latest

+ image: runnable-example:latest

command:

- - magnus

+ - runnable

- execute_single_node

- '{{workflow.parameters.run_id}}'

- shell

@@ -235,17 +238,17 @@ lines 18 to 34.

```

The actual ```command``` to run is not the ```command``` defined in the workflow,

-i.e ```echo hello world```, but a command in the CLI of magnus which specifies the workflow file,

+i.e. ```echo hello world```, but a runnable CLI command that specifies the workflow file,

the step name and the configuration file.

-### Context of magnus

+### Context of runnable

Any ```task``` defined by the user as part of the workflow always runs as a *sub-command* of

-magnus. In that sense, magnus follows the

+runnable. In that sense, runnable follows the

[decorator pattern](https://en.wikipedia.org/wiki/Decorator_pattern) without being part of the

application codebase.

-In a very simplistic sense, the below stubbed-code explains the context of magnus during

+In a very simplistic sense, the stubbed code below explains the context of runnable during

execution of a task.

```python linenums="1"

diff --git a/docs/concepts/experiment-tracking.md b/docs/concepts/experiment-tracking.md

index 4f72d125..9c47ff93 100644

--- a/docs/concepts/experiment-tracking.md

+++ b/docs/concepts/experiment-tracking.md

@@ -9,7 +9,7 @@ during the execution of the pipeline.

=== "Using the API"

- The highlighted lines in the below example show how to [use the API](../interactions.md/#magnus.track_this)

+ The highlighted lines in the below example show how to [use the API](../interactions.md/#runnable.track_this)

Any pydantic model as a value would be dumped as a dict, respecting the alias, before tracking it.

@@ -61,7 +61,7 @@ during the execution of the pipeline.

"code_identifier": "793b052b8b603760ff1eb843597361219832b61c",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -106,7 +106,7 @@ during the execution of the pipeline.

"code_identifier": "793b052b8b603760ff1eb843597361219832b61c",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -162,7 +162,7 @@ during the execution of the pipeline.

"start_at": "shell",

"name": "",

"description": "An example pipeline to demonstrate setting experiment tracking metrics\nusing environment variables. Any environment variable with

- prefix\n'MAGNUS_TRACK_' will be recorded as a metric captured during the step.\n\nYou can run this pipeline as:\n magnus execute -f

+ prefix\n'runnable_TRACK_' will be recorded as a metric captured during the step.\n\nYou can run this pipeline as:\n runnable execute -f

examples/concepts/experiment_tracking_env.yaml\n",

"internal_branch_name": "",

"steps": {

@@ -207,7 +207,7 @@ The step is defaulted to be 0.

=== "Using the API"

- The highlighted lines in the below example show how to [use the API](../interactions.md/#magnus.track_this) with

+ The highlighted lines in the below example show how to [use the API](../interactions.md/#runnable.track_this) with

the step parameter.

You can run this example by ```python examples/concepts/experiment_tracking_step.py```

@@ -247,7 +247,7 @@ The step is defaulted to be 0.

"code_identifier": "858c4df44f15d81139341641c63ead45042e0d89",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -301,7 +301,7 @@ The step is defaulted to be 0.

"code_identifier": "858c4df44f15d81139341641c63ead45042e0d89",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -393,14 +393,14 @@ The step is defaulted to be 0.

!!! note "Opt out"

Pipelines need not use ```experiment-tracking``` if the preferred tool of choice is

- not implemented in magnus. The default configuration of ```do-nothing``` is no-op by design.

+ not implemented in runnable. The default configuration of ```do-nothing``` is no-op by design.

We kindly request to raise a feature request to make us aware of the eco-system.

-The default experiment tracking tool of magnus is a no-op as the ```run log``` captures all the

+The default experiment tracking tool of runnable is a no-op as the ```run log``` captures all the

required details. To make it compatible with other experiment tracking tools like

[mlflow](https://mlflow.org/docs/latest/tracking.html) or

-[Weights and Biases](https://wandb.ai/site/experiment-tracking), we map attributes of magnus

+[Weights and Biases](https://wandb.ai/site/experiment-tracking), we map attributes of runnable

to the underlying tool.

For example, for mlflow:

@@ -420,7 +420,7 @@ Since mlflow does not support step wise logging of parameters, the key name is f

!!! note inline end "Shortcomings"

- Experiment tracking capabilities of magnus are inferior in integration with

+ Experiment tracking in runnable is less tightly integrated with

popular python frameworks like pytorch and tensorflow than other

experiment tracking tools.

@@ -453,7 +453,7 @@ Since mlflow does not support step wise logging of parameters, the key name is f

{ width="800" height="600"}

- mlflow UI for the execution. The run_id remains the same as the run_id of magnus

+ mlflow UI for the execution. The run_id remains the same as the run_id of runnable

@@ -464,5 +464,5 @@ Since mlflow does not support step wise logging of parameters, the key name is f

To provide implementation specific capabilities, we also provide a

-[python API](../interactions.md/#magnus.get_experiment_tracker_context) to obtain the client context. The default

+[python API](../interactions.md/#runnable.get_experiment_tracker_context) to obtain the client context. The default

client context is a [null context manager](https://docs.python.org/3/library/contextlib.html#contextlib.nullcontext).
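A null context manager as the default means tracker-specific code can stay in place whether or not a real tracker is configured. A minimal sketch, using a hypothetical `get_tracker_client_context` stand-in rather than runnable's actual function; the `log_metric` call on the client is likewise an assumed method name for illustration.

```python
from contextlib import AbstractContextManager, nullcontext
from typing import Any, Optional


def get_tracker_client_context(
    client_context: Optional[AbstractContextManager] = None,
) -> AbstractContextManager:
    """Hypothetical stand-in: return the configured client context, else a no-op one."""
    return client_context if client_context is not None else nullcontext()


def log_accuracy(accuracy: float) -> Optional[Any]:
    with get_tracker_client_context() as client:
        # With the default "do-nothing" tracker, nullcontext() yields None,
        # so the tracker-specific call is simply skipped.
        if client is not None:
            client.log_metric("accuracy", accuracy)  # hypothetical client method
        return client


if __name__ == "__main__":
    print(log_accuracy(0.93))
```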

diff --git a/docs/concepts/map.md b/docs/concepts/map.md

index 7024bb49..a98f96dd 100644

--- a/docs/concepts/map.md

+++ b/docs/concepts/map.md

@@ -1,4 +1,4 @@

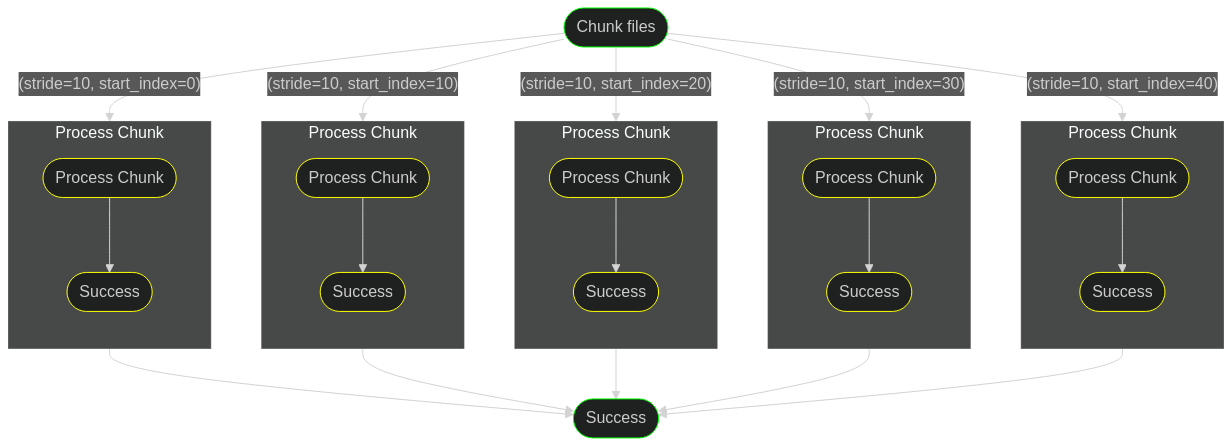

-```map``` nodes in magnus allows you to execute a sequence of nodes (i.e a pipeline) for all the items in a list. This is similar to

+```map``` nodes in runnable allow you to execute a sequence of nodes (i.e. a pipeline) for all the items in a list. This is similar to

[Map state of AWS Step functions](https://docs.aws.amazon.com/step-functions/latest/dg/amazon-states-language-map-state.html) or

[loops in Argo workflows](https://argo-workflows.readthedocs.io/en/latest/walk-through/loops/).

@@ -87,8 +87,8 @@ of the files to process.

over ```chunks```.

If the argument ```start_index``` is not provided, you can still access the current

- value by ```MAGNUS_MAP_VARIABLE``` environment variable.

- The environment variable ```MAGNUS_MAP_VARIABLE``` is a dictionary with keys as

+ value by ```runnable_MAP_VARIABLE``` environment variable.

+ The environment variable ```runnable_MAP_VARIABLE``` is a dictionary with keys as

```iterate_as```

This instruction is set while defining the map node.
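When ```start_index``` is not an argument of the function, the value can be read from the environment inside the task. A minimal sketch, assuming the variable holds a JSON object keyed by the ```iterate_as``` name as described above; ```current_map_value``` is a hypothetical helper, and the exact variable name should be checked against your installed version.

```python
import json
import os
from typing import Any, Optional

# Variable name as documented above; verify it against your installed version.
MAP_VARIABLE_ENV = "runnable_MAP_VARIABLE"


def current_map_value(iterate_as: str, env_var: str = MAP_VARIABLE_ENV) -> Optional[Any]:
    """Return the current map value for iterate_as, or None outside a map branch."""
    raw = os.environ.get(env_var)
    if raw is None:
        return None
    # The variable is a JSON object whose keys are the iterate_as names.
    return json.loads(raw).get(iterate_as)


if __name__ == "__main__":
    # Simulate what the executor would set for one iteration of the map.
    os.environ[MAP_VARIABLE_ENV] = json.dumps({"start_index": 20})
    print(current_map_value("start_index"))
```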

@@ -108,7 +108,7 @@ of the files to process.

This instruction is set while defining the map node.

Note that the ```branch``` of the map node has a similar schema of the pipeline.

- You can run this example by ```magnus execute examples/concepts/map.yaml```

+ You can run this example by ```runnable execute examples/concepts/map.yaml```

```yaml linenums="1" hl_lines="23-26"

--8<-- "examples/concepts/map.yaml"

@@ -120,11 +120,11 @@ of the files to process.

functions.

The map branch "iterate and execute" iterates over chunks and exposes the current start_index of

- as environment variable ```MAGNUS_MAP_VARIABLE```.

+ the iteration as the environment variable ```RUNNABLE_MAP_VARIABLE```.

- The environment variable ```MAGNUS_MAP_VARIABLE``` is a json string with keys of the ```iterate_as```.

+ The environment variable ```RUNNABLE_MAP_VARIABLE``` is a JSON string whose keys are the ```iterate_as``` names.

- You can run this example by ```magnus execute examples/concepts/map_shell.yaml```

+ You can run this example by ```runnable execute examples/concepts/map_shell.yaml```

```yaml linenums="1" hl_lines="26-27 29-32"

--8<-- "examples/concepts/map_shell.yaml"

@@ -156,7 +156,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -195,7 +195,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -218,7 +218,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -266,7 +266,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -312,7 +312,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -360,7 +360,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -406,7 +406,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -454,7 +454,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -500,7 +500,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -548,7 +548,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -594,7 +594,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -642,7 +642,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -687,7 +687,7 @@ of the files to process.

"code_identifier": "30ca73bb01ac45db08b1ca75460029da142b53fa",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

diff --git a/docs/concepts/nesting.md b/docs/concepts/nesting.md

index cfeedaea..05a152a7 100644

--- a/docs/concepts/nesting.md

+++ b/docs/concepts/nesting.md

@@ -1,11 +1,11 @@

As seen from the definitions of [parallel](../concepts/parallel.md) or

[map](../concepts/map.md), the branches are pipelines

-themselves. This allows for deeply nested workflows in **magnus**.

+themselves. This allows for deeply nested workflows in **runnable**.

Technically there is no limit in the depth of nesting but there are some practical considerations.

-- Not all workflow engines that magnus can transpile the workflow to support deeply nested workflows.

+- Not all of the workflow engines that runnable can transpile to support deeply nested workflows.

AWS Step functions and Argo workflows support them.

- Deeply nested workflows are complex to understand and debug during errors.

@@ -25,7 +25,7 @@ AWS Step functions and Argo workflows support them.

=== "yaml"

- You can run this pipeline by ```magnus execute examples/concepts/nesting.yaml```

+ You can run this pipeline by ```runnable execute examples/concepts/nesting.yaml```

```yaml linenums="1"

--8<-- "examples/concepts/nesting.yaml"

@@ -57,7 +57,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -96,7 +96,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -119,7 +119,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -142,7 +142,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -165,7 +165,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -201,7 +201,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -243,7 +243,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -279,7 +279,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -320,7 +320,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -362,7 +362,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -385,7 +385,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -421,7 +421,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -463,7 +463,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -499,7 +499,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -540,7 +540,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -581,7 +581,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -623,7 +623,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -646,7 +646,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -669,7 +669,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -705,7 +705,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -747,7 +747,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -783,7 +783,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -824,7 +824,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -866,7 +866,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -889,7 +889,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -925,7 +925,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -967,7 +967,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -1003,7 +1003,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -1044,7 +1044,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -1085,7 +1085,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -1126,7 +1126,7 @@ AWS Step functions and Argo workflows support them.

"code_identifier": "99139c3507898c60932ad5d35c08b395399a19f6",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

diff --git a/docs/concepts/parallel.md b/docs/concepts/parallel.md

index 112b5ea1..1c2f882c 100644

--- a/docs/concepts/parallel.md

+++ b/docs/concepts/parallel.md

@@ -1,4 +1,4 @@

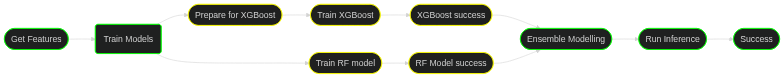

-Parallel nodes in magnus allows you to run multiple pipelines in parallel and use your compute resources efficiently.

+Parallel nodes in runnable allow you to run multiple pipelines in parallel and use your compute resources efficiently.

## Example

@@ -98,7 +98,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -129,7 +129,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -152,7 +152,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -183,7 +183,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -214,7 +214,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -251,7 +251,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -282,7 +282,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -318,7 +318,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -349,7 +349,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -380,7 +380,7 @@ ensemble model happens only after both models are (successfully) trained.

"code_identifier": "f0a2719001de9be30c27069933e4b4a64a065e2b",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

diff --git a/docs/concepts/parameters.md b/docs/concepts/parameters.md

index dbdc2ae0..1de3b83e 100644

--- a/docs/concepts/parameters.md

+++ b/docs/concepts/parameters.md

@@ -1,4 +1,6 @@

-In magnus, ```parameters``` are python data types that can be passed from one ```task```

+## TODO: Concretely show an example!

+

+In runnable, ```parameters``` are python data types that can be passed from one ```task```

to the next ```task```. These parameters can be accessed by the ```task``` either as

environment variables, arguments of the ```python function``` or using the

[API](../interactions.md).

@@ -8,9 +10,9 @@ environment variables, arguments of the ```python function``` or using the

The initial parameters of the pipeline can set by using a ```yaml``` file and presented

during execution

-```--parameters-file, -parameters``` while using the [magnus CLI](../usage.md/#usage)

+```--parameters-file, -p``` while using the [runnable CLI](../usage.md/#usage)

-or by using ```parameters_file``` with [the sdk](..//sdk.md/#magnus.Pipeline.execute).

+or by using ```parameters_file``` with [the sdk](../sdk.md/#runnable.Pipeline.execute).

They can also be set using environment variables which override the parameters defined by the file.

@@ -25,14 +27,14 @@ They can also be set using environment variables which override the parameters d

=== "environment variables"

- Any environment variables prefixed with ```MAGNUS_PRM_ ``` are interpreted as

+ Any environment variables prefixed with ```RUNNABLE_PRM_``` are interpreted as

parameters by the ```tasks```.

The yaml formatted parameters can also be defined as:

```shell

- export MAGNUS_PRM_spam="hello"

- export MAGNUS_PRM_eggs='{"ham": "Yes, please!!"}'

+ export RUNNABLE_PRM_spam="hello"

+ export RUNNABLE_PRM_eggs='{"ham": "Yes, please!!"}'

```
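The prefix convention can be sketched as follows. This is not runnable's actual parser. It assumes that values are JSON-decoded when possible, so the `eggs` export becomes a dictionary, and that anything else stays a plain string. The prefix is passed in as an argument, and `EXAMPLE_PRM_` is a placeholder.

```python
import json
import os


def parameters_from_env(prefix: str, environ=None) -> dict:
    """Collect parameters from environment variables that start with `prefix`.

    Values are parsed as JSON when possible and kept as strings otherwise.
    """
    environ = os.environ if environ is None else environ
    params = {}
    for name, value in environ.items():
        if not name.startswith(prefix):
            continue  # unrelated variables such as PATH are ignored
        key = name[len(prefix):]
        try:
            params[key] = json.loads(value)
        except json.JSONDecodeError:
            params[key] = value  # e.g. "hello" is not valid JSON
    return params


env = {
    "EXAMPLE_PRM_spam": "hello",
    "EXAMPLE_PRM_eggs": '{"ham": "Yes, please!!"}',
    "PATH": "/usr/bin",
}
print(parameters_from_env("EXAMPLE_PRM_", env))
# {'spam': 'hello', 'eggs': {'ham': 'Yes, please!!'}}
```

To apply the rule that environment variables take precedence over the parameters file, merge the environment-derived values last, e.g. `{**params_from_file, **parameters_from_env(prefix)}`.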

Parameters defined by environment variables override parameters defined by

diff --git a/docs/concepts/pipeline.md b/docs/concepts/pipeline.md

index 4aadf3dd..5398eaaa 100644

--- a/docs/concepts/pipeline.md

+++ b/docs/concepts/pipeline.md

@@ -1,6 +1,6 @@

???+ tip inline end "Steps"

- In magnus, a step can be a simple ```task``` or ```stub``` or complex nested pipelines like

+ In runnable, a step can be a simple ```task``` or ```stub``` or complex nested pipelines like

```parallel``` branches, embedded ```dags``` or dynamic workflows.

In this section, we use ```stub``` for convenience. For more in depth information about other types,

@@ -8,7 +8,7 @@

-In **magnus**, we use the words

+In **runnable**, we use the words

- ```dag```, ```workflows``` and ```pipeline``` interchangeably.

- ```nodes```, ```steps``` interchangeably.

@@ -79,7 +79,7 @@ one more node.

???+ warning inline end "Step names"

- In magnus, the names of steps should not have ```%``` or ```.``` in them.

+ In runnable, the names of steps should not have ```%``` or ```.``` in them.

You can name them as descriptive as you want.
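A hypothetical pre-flight check, not part of runnable, that rejects step names containing either of the two forbidden characters before you define a pipeline:

```python
# Characters the docs say must not appear in step names.
FORBIDDEN_CHARACTERS = ("%", ".")


def is_valid_step_name(name: str) -> bool:
    """Return True if `name` contains none of the forbidden characters."""
    return not any(ch in name for ch in FORBIDDEN_CHARACTERS)


for candidate in ["Train model", "train.model", "100% done"]:
    print(candidate, is_valid_step_name(candidate))
```

Spaces and other descriptive characters pass this check. Only `%` and `.` are rejected.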

@@ -223,7 +223,7 @@ Reaching one of these states as part of traversal indicates the status of the pi

You can, alternatively, create a ```success``` and ```fail``` state and link them together.

```python

- from magnus import Success, Fail

+ from runnable import Success, Fail

success = Success(name="Custom Success")

fail = Fail(name="Custom Failure")

diff --git a/docs/concepts/run-log.md b/docs/concepts/run-log.md

index 8f677fb9..b18b0be5 100644

--- a/docs/concepts/run-log.md

+++ b/docs/concepts/run-log.md

@@ -1,6 +1,6 @@

# Run Log

-Internally, magnus uses a ```run log``` to keep track of the execution of the pipeline. It

+Internally, runnable uses a ```run log``` to keep track of the execution of the pipeline. It

also stores the parameters, experiment tracking metrics and reproducibility information captured during the execution.

It should not be confused with application logs generated during the execution of a ```task``` i.e the stdout and stderr

@@ -43,7 +43,7 @@ when running the ```command``` of a task.

"code_identifier": "ca4c5fbff4148d3862a4738942d4607a9c4f0d88",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -87,7 +87,7 @@ when running the ```command``` of a task.

"code_identifier": "ca4c5fbff4148d3862a4738942d4607a9c4f0d88",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -131,7 +131,7 @@ when running the ```command``` of a task.

"code_identifier": "ca4c5fbff4148d3862a4738942d4607a9c4f0d88",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -175,7 +175,7 @@ when running the ```command``` of a task.

"code_identifier": "ca4c5fbff4148d3862a4738942d4607a9c4f0d88",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -247,7 +247,7 @@ when running the ```command``` of a task.

the parameters and sets them back as environment variables.\nThe step

display_again displays the updated parameters from modify_initial and updates

them.\n\n

- You can run this pipeline as:\n magnus execute -f

+ You can run this pipeline as:\n runnable execute -f

examples/concepts/task_shell_parameters.yaml -p examples/concepts/parameters.

yaml\n",

"internal_branch_name": "",

@@ -350,7 +350,7 @@ A snippet from the above example:

"code_identifier": "ca4c5fbff4148d3862a4738942d4607a9c4f0d88",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -509,7 +509,7 @@ reproduced in local environments and fixed.

"code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -548,7 +548,7 @@ reproduced in local environments and fixed.

"code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -594,7 +594,7 @@ reproduced in local environments and fixed.

"code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -640,7 +640,7 @@ reproduced in local environments and fixed.

"expose_parameters_as_inputs": true,

"secrets_from_k8s": [],

"output_file": "argo-pipeline.yaml",

- "name": "magnus-dag-",

+ "name": "runnable-dag-",

"annotations": {},

"labels": {},

"activeDeadlineSeconds": 172800,

@@ -661,7 +661,7 @@ reproduced in local environments and fixed.

"service_account_name": "default-editor",

"persistent_volumes": [

{

- "name": "magnus-volume",

+ "name": "runnable-volume",

"mount_path": "/mnt"

}

],

@@ -696,7 +696,7 @@ reproduced in local environments and fixed.

"dag": {

"start_at": "Setup",

"name": "",

- "description": "This is a simple pipeline that demonstrates retrying failures.\n\n1. Setup: We setup a data folder, we ignore if it is already present\n2. Create Content: We create a \"hello.txt\" and \"put\" the file in catalog\n3. Retrieve Content: We \"get\" the file \"hello.txt\" from the catalog and show the contents\n5. Cleanup: We remove the data folder. Note that this is stubbed to prevent accidental deletion.\n\n\nYou can run this pipeline by:\n magnus execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

+ "description": "This is a simple pipeline that demonstrates retrying failures.\n\n1. Setup: We setup a data folder, we ignore if it is already present\n2. Create Content: We create a \"hello.txt\" and \"put\" the file in catalog\n3. Retrieve Content: We \"get\" the file \"hello.txt\" from the catalog and show the contents\n5. Cleanup: We remove the data folder. Note that this is stubbed to prevent accidental deletion.\n\n\nYou can run this pipeline by:\n runnable execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

"steps": {

"Setup": {

"type": "task",

@@ -724,7 +724,7 @@ reproduced in local environments and fixed.

},

"max_attempts": 1,

"command_type": "shell",

- "command": "echo \"Hello from magnus\" >> data/hello.txt\n",

+ "command": "echo \"Hello from runnable\" >> data/hello.txt\n",

"node_name": "Create Content"

},

"Retrieve Content": {

@@ -794,7 +794,7 @@ reproduced in local environments and fixed.

"code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -815,7 +815,7 @@ reproduced in local environments and fixed.

"code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -836,7 +836,7 @@ reproduced in local environments and fixed.

"code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -882,7 +882,7 @@ reproduced in local environments and fixed.

"code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

"code_identifier_type": "git",

"code_identifier_dependable": true,

- "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

"code_identifier_message": ""

}

],

@@ -934,7 +934,7 @@ reproduced in local environments and fixed.

"tag": "",

"run_id": "polynomial-bartik-2226",

"variables": {

- "argo_docker_image": "harbor.csis.astrazeneca.net/mlops/magnus:latest"

+ "argo_docker_image": "harbor.csis.astrazeneca.net/mlops/runnable:latest"

},

"use_cached": true,

"original_run_id": "toFail",

@@ -945,7 +945,7 @@ reproduced in local environments and fixed.

present\n2. Create Content: We create a \"hello.txt\" and \"put\" the file in catalog\n3. Clean up to get again: We remove the data folder. Note that this is stubbed

to prevent\n accidental deletion of your contents. You can change type to task to make really run.\n4. Retrieve Content: We \"get\" the file \"hello.txt\" from the

catalog and show the contents\n5. Cleanup: We remove the data folder. Note that this is stubbed to prevent accidental deletion.\n\n\nYou can run this pipeline by:\n

- magnus execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

+ runnable execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

"steps": {

"Setup": {

"type": "task",

@@ -973,7 +973,7 @@ reproduced in local environments and fixed.

},

"max_attempts": 1,

"command_type": "shell",

- "command": "echo \"Hello from magnus\" >> data/hello.txt\n",

+ "command": "echo \"Hello from runnable\" >> data/hello.txt\n",

"node_name": "Create Content"

},

"Retrieve Content": {

@@ -1065,7 +1065,7 @@ reproduced in local environments and fixed.

> "code_identifier": "f94e49a4fcecebac4d5eecbb5b691561b08e45c0",

> "code_identifier_type": "git",

> "code_identifier_dependable": true,

- > "code_identifier_url": "https://github.com/AstraZeneca/magnus-core.git",

+ > "code_identifier_url": "https://github.com/AstraZeneca/runnable-core.git",

> "code_identifier_message": ""

> }

> ],

@@ -1163,7 +1163,7 @@ reproduced in local environments and fixed.

< "expose_parameters_as_inputs": true,

< "secrets_from_k8s": [],

< "output_file": "argo-pipeline.yaml",

- < "name": "magnus-dag-",

+ < "name": "runnable-dag-",

< "annotations": {},

< "labels": {},

< "activeDeadlineSeconds": 172800,

@@ -1184,7 +1184,7 @@ reproduced in local environments and fixed.

< "service_account_name": "default-editor",

< "persistent_volumes": [

< {

- < "name": "magnus-volume",

+ < "name": "runnable-volume",

< "mount_path": "/mnt"

< }

< ],

@@ -1215,14 +1215,14 @@ reproduced in local environments and fixed.

---

> "run_id": "polynomial-bartik-2226",

> "variables": {

- > "argo_docker_image": "harbor.csis.astrazeneca.net/mlops/magnus:latest"

+ > "argo_docker_image": "harbor.csis.astrazeneca.net/mlops/runnable:latest"

> },

> "use_cached": true,

> "original_run_id": "toFail",

208c168

- < "description": "This is a simple pipeline that demonstrates retrying failures.\n\n1. Setup: We setup a data folder, we ignore if it is already present\n2. Create Content: We create a \"hello.txt\" and \"put\" the file in catalog\n3. Retrieve Content: We \"get\" the file \"hello.txt\" from the catalog and show the contents\n5. Cleanup: We remove the data folder. Note that this is stubbed to prevent accidental deletion.\n\n\nYou can run this pipeline by:\n magnus execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

+ < "description": "This is a simple pipeline that demonstrates retrying failures.\n\n1. Setup: We setup a data folder, we ignore if it is already present\n2. Create Content: We create a \"hello.txt\" and \"put\" the file in catalog\n3. Retrieve Content: We \"get\" the file \"hello.txt\" from the catalog and show the contents\n5. Cleanup: We remove the data folder. Note that this is stubbed to prevent accidental deletion.\n\n\nYou can run this pipeline by:\n runnable execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

---

- > "description": "This is a simple pipeline that demonstrates passing data between steps.\n\n1. Setup: We setup a data folder, we ignore if it is already present\n2. Create Content: We create a \"hello.txt\" and \"put\" the file in catalog\n3. Clean up to get again: We remove the data folder. Note that this is stubbed to prevent\n accidental deletion of your contents. You can change type to task to make really run.\n4. Retrieve Content: We \"get\" the file \"hello.txt\" from the catalog and show the contents\n5. Cleanup: We remove the data folder. Note that this is stubbed to prevent accidental deletion.\n\n\nYou can run this pipeline by:\n magnus execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

+ > "description": "This is a simple pipeline that demonstrates passing data between steps.\n\n1. Setup: We setup a data folder, we ignore if it is already present\n2. Create Content: We create a \"hello.txt\" and \"put\" the file in catalog\n3. Clean up to get again: We remove the data folder. Note that this is stubbed to prevent\n accidental deletion of your contents. You can change type to task to make really run.\n4. Retrieve Content: We \"get\" the file \"hello.txt\" from the catalog and show the contents\n5. Cleanup: We remove the data folder. Note that this is stubbed to prevent accidental deletion.\n\n\nYou can run this pipeline by:\n runnable execute -f examples/catalog.yaml -c examples/configs/fs-catalog.yaml\n",

253c213

< "command": "cat data/hello1.txt",

---

@@ -1237,10 +1237,10 @@ reproduced in local environments and fixed.

## API