A monitoring solution for node runners and validators using Docker containers running Prometheus, Grafana, NodeExporter, and AlertManager for alerting.

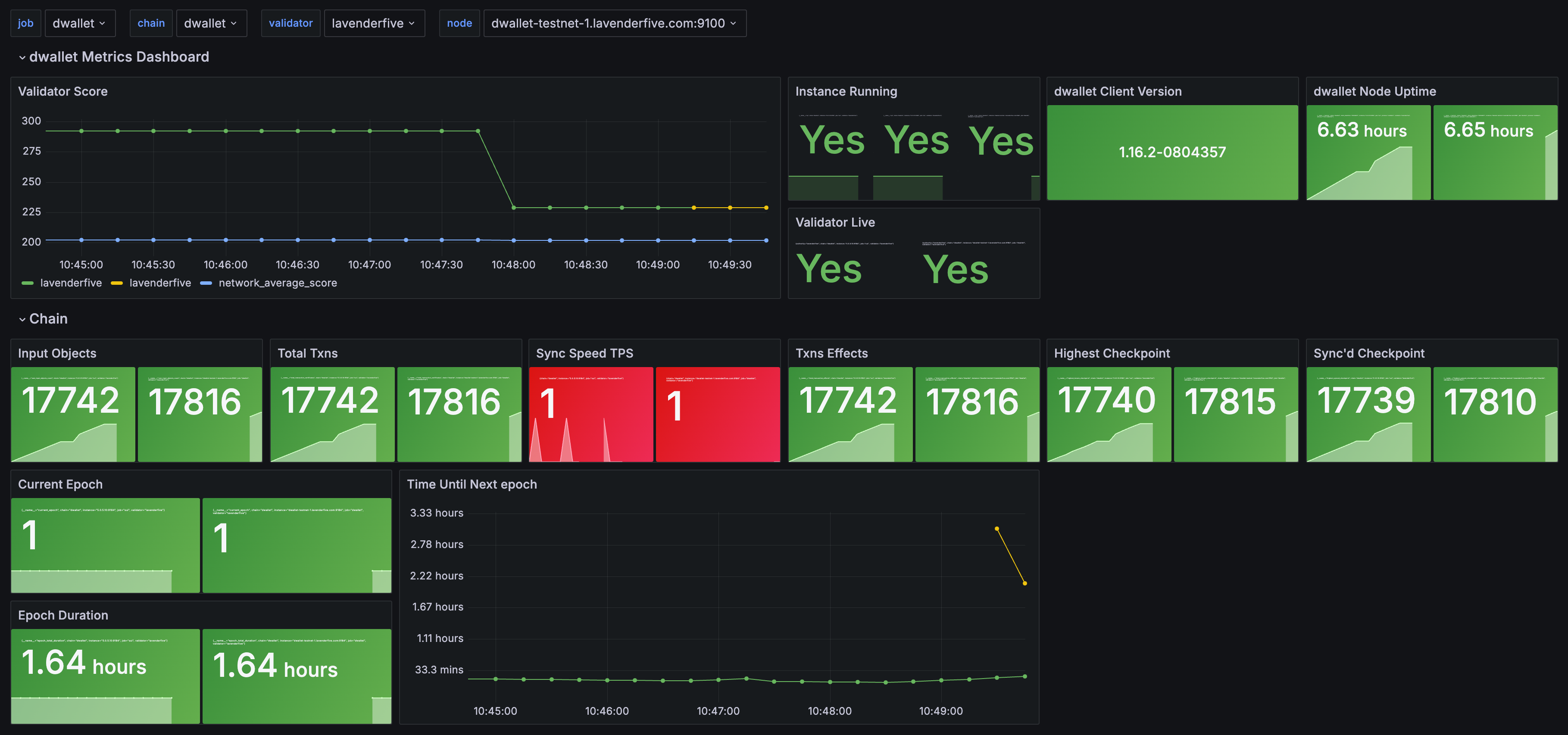

This is intended to be a one-stop solution for monitoring your dWallet validator signing state.

Clone this repository on your Docker host, cd into the `dwallet-monitoring` directory, and run `docker compose up`:

- Basic Setup

```bash
git clone https://github.com/LavenderFive/dwallet-monitoring
cd dwallet-monitoring
cp .env.sample .env
```

- Set up Prometheus

You'll need your node IP or DNS name (e.g. `dwallet-tesnet-1.lavenderfive.com`) in addition to your validator name.
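Those two values end up in the scrape configuration. The repository's `prometheus/prometheus.yaml` is not reproduced here, so the fragment below is only a sketch of what a static scrape target carrying a validator label might look like. The job name, the `validator` label name, and the metrics port `9184` are all assumptions; check them against the actual file and your node's metrics settings.

```yaml
scrape_configs:
  # Hypothetical job name; keep whatever the repository's file already uses.
  - job_name: "dwallet"
    static_configs:
      # Node IP or DNS name plus its metrics port (9184 is an assumption).
      - targets: ["dwallet-tesnet-1.lavenderfive.com:9184"]
        labels:
          # Hypothetical label carrying your validator name.
          validator: "<your-validator-name>"
```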

```bash
nano prometheus/prometheus.yaml
```

- Start monitoring containers

```bash
docker compose up
```

- Confirm monitoring is working

Open `localhost:3000` in your browser (or the IP of the host running these containers). Log in with `admin`/`admin`.

Prerequisites:

- Docker Engine >= 1.13

- Docker Compose >= 1.11

Containers:

- Prometheus (metrics database) `http://<host-ip>:9090`
- AlertManager (alerts management) `http://<host-ip>:9093`
- Grafana (visualize metrics) `http://<host-ip>:3000`
  - Infinity Plugin
- NodeExporter (host metrics collector)
- cAdvisor (containers metrics collector)
- Caddy (reverse proxy and basic auth provider for Prometheus and AlertManager)

1. cd ~/dwallet-monitoring

1. cp .env.sample .env

----- Caddy ------

1. Under `caddy/Caddyfile`:
   1. Replace `YOUR_WEBSITE.COM` with your website.
   1. Replace the placeholder email address with your email.
1. Point your DNS to your monitoring server.

---- dwallet ----

1. Add your node IP and validator name to `prometheus/prometheus.yaml`.

-----------------

1. docker compose up -d

This monitoring solution comes with a very basic Peggo Monitoring dashboard built in, which works out of the box. Grafana, Prometheus, and the Infinity plugin are installed automatically.

To support persistent storage, you'll first need to create the volumes:

```bash
docker volume create grafana-storage && docker volume create prometheus_data
```
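Volumes created by hand with `docker volume create` are only reused under those exact names if the compose file declares them as external; otherwise Compose creates its own project-prefixed volumes. The stack's compose file is not shown here, so this is a sketch assuming it refers to these two volume names:

```yaml
# Top-level volumes section of the compose file (assumed volume names).
# `external: true` tells Compose to use the pre-created volumes as-is
# instead of creating new, project-prefixed ones.
volumes:
  grafana-storage:
    external: true
  prometheus_data:
    external: true
```

Whatever names the services actually mount must match the ones created above.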

Navigate to `http://<host-ip>:3000` and log in with user `admin` and password `admin`. You can change the credentials in the compose file or by supplying the `ADMIN_USER` and `ADMIN_PASSWORD` environment variables on `docker compose up`. The config file can be added directly in the `grafana` service like this:

```yaml
grafana:
  image: grafana/grafana:7.2.0
  env_file:
    - .env
```

and the `.env` file should contain:

```
GF_SECURITY_ADMIN_USER=admin
GF_SECURITY_ADMIN_PASSWORD=changeme
GF_USERS_ALLOW_SIGN_UP=false
```

If you want to change the password, you have to remove this volume entry from the `grafana` service; otherwise the change will not take effect, because Grafana only applies the admin password from the environment when it first initializes its database:

```yaml
- grafana_data:/var/lib/grafana
```

Grafana is preconfigured with dashboards and Prometheus as the default data source:

- Name: Prometheus

- Type: Prometheus

- URL: http://prometheus:9090

- Access: proxy
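Grafana usually receives data source settings like these through a provisioning file. The exact file in this repository is not shown here; assuming it follows Grafana's datasource provisioning format (the path in the comment is a guess), an equivalent definition would be:

```yaml
# Assumed location: grafana/provisioning/datasources/datasource.yml
apiVersion: 1

datasources:
  - name: Prometheus
    type: prometheus
    # Grafana's backend proxies queries to Prometheus.
    access: proxy
    # Compose service name resolves on the shared Docker network.
    url: http://prometheus:9090
    # Makes this the default data source for new panels.
    isDefault: true
```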

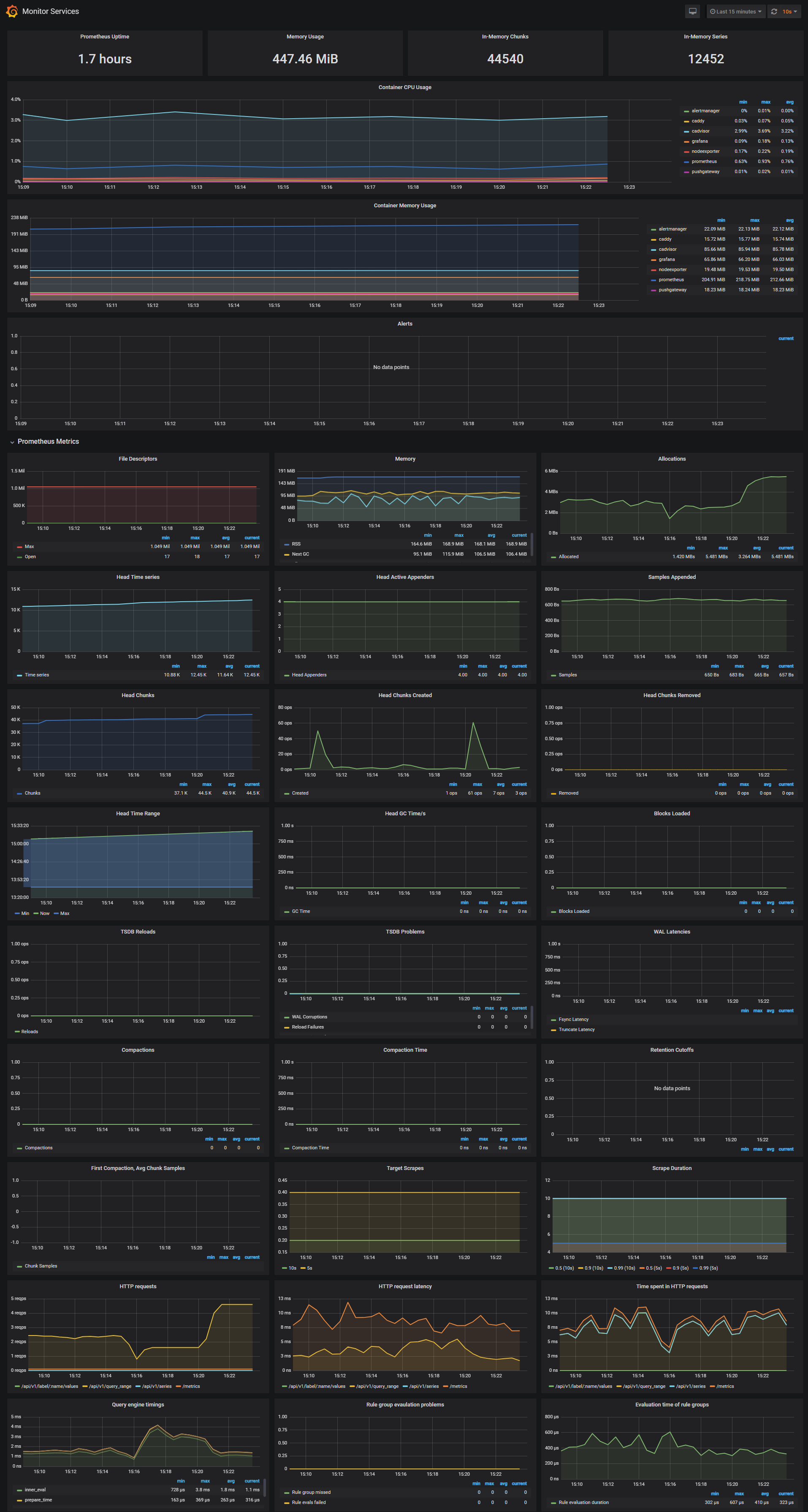

Monitor Services Dashboard

The Monitor Services Dashboard shows key metrics for monitoring the containers that make up the monitoring stack:

- Prometheus container uptime, monitoring stack total memory usage, Prometheus local storage memory chunks and series

- Container CPU usage graph

- Container memory usage graph

- Prometheus chunks to persist and persistence urgency graphs

- Prometheus chunks ops and checkpoint duration graphs

- Prometheus samples ingested rate, target scrapes and scrape duration graphs

- Prometheus HTTP requests graph

- Prometheus alerts graph

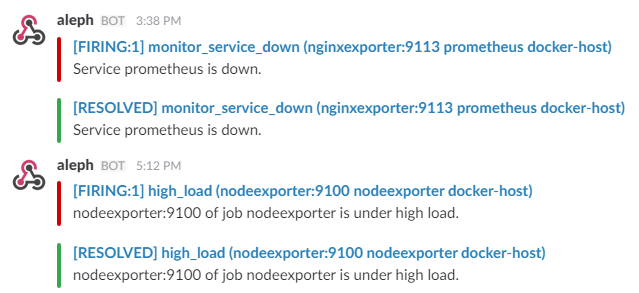

Two alert groups have been set up within the `alert.rules` configuration file: monitoring services alerts and Peggo alerts.

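You can modify the alert rules and reload them by making an HTTP POST request to Prometheus (the `/-/reload` endpoint only works if Prometheus was started with the `--web.enable-lifecycle` flag):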

```bash
curl -X POST http://admin:admin@<host-ip>:9090/-/reload
```

Monitoring services alerts

Trigger an alert if any of the monitoring targets (node-exporter and cAdvisor) are down for more than 30 seconds:

```yaml
- alert: monitor_service_down
  expr: up == 0
  for: 30s
  labels:
    severity: critical
  annotations:
    summary: "Monitor service non-operational"
    description: "Service {{ $labels.instance }} is down."
```

Peggo alerts

Trigger an alert if the Peggo orchestrator nonce is more than 5 behind the network nonce and has not increased over the past hour, with the condition holding for 15 minutes:

```yaml
- name: peggo_alerts
  rules:
    - alert: HighNonceDifference
      expr: abs(peggo_network_nonce - peggo_orchestrator_nonce) > 5 and increase(peggo_orchestrator_nonce[1h]) <= 0
      for: 15m
      labels:
        severity: critical
      annotations:
        summary: "High difference between peggo_orchestrator_nonce and peggo_network_nonce"
        description: "The difference between peggo_orchestrator_nonce and peggo_network_nonce has been greater than 5 for more than 15 minutes."
```

The AlertManager service is responsible for handling alerts sent by the Prometheus server. AlertManager can send notifications via email, Pushover, Slack, HipChat, or any other system that exposes a webhook interface. A complete list of integrations is available in the AlertManager documentation.
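Prometheus only forwards alerts if its configuration loads the rule file and names an AlertManager target. The repository's `prometheus/prometheus.yaml` is not reproduced here; this is a minimal sketch assuming the rules live in `alert.rules` next to the config and the AlertManager container is reachable as `alertmanager` on the compose network:

```yaml
# Load the alert groups described above (assumed file name and location).
rule_files:
  - "alert.rules"

# Send firing alerts to the AlertManager container (assumed service name).
alerting:
  alertmanagers:
    - static_configs:
        - targets: ["alertmanager:9093"]
```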

You can view and silence notifications by accessing http://<host-ip>:9093.

The notification receivers can be configured in the `alertmanager/config.yml` file.

To receive alerts via Slack, you need to create a custom integration by choosing Incoming WebHooks on your Slack team's app page. More details on setting up the Slack integration are available in Slack's documentation.

Copy the Slack Webhook URL into the api_url field and specify a Slack channel.

```yaml
route:
  receiver: 'slack'

receivers:
  - name: 'slack'
    slack_configs:
      - send_resolved: true
        text: "{{ .CommonAnnotations.description }}"
        username: 'Prometheus'
        channel: '#<channel>'
        api_url: 'https://hooks.slack.com/services/<webhook-id>'
```
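The route above sends every alert to the `slack` receiver using AlertManager's default grouping and timing. If notifications arrive too often or too fragmented, the route also accepts grouping and timing fields. The values below are illustrative only, not taken from this repository:

```yaml
route:
  receiver: 'slack'
  # Bundle alerts that share these labels into one notification.
  group_by: ['alertname', 'instance']
  # Wait before sending the first notification for a new group.
  group_wait: 30s
  # Wait before notifying about new alerts added to an existing group.
  group_interval: 5m
  # Resend a notification for a still-firing alert after this long.
  repeat_interval: 4h
```

Longer `repeat_interval` values reduce noise for slow-moving conditions such as the 15-minute Peggo nonce alert.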