Day 2 - Wednesday 12/06/2024 DAY 1 - Tuesday 11/06/2024 09:00 CEST 10:00 EEST Welcome and Introduction Presenters: J\u00f8rn Dietze (LUST) and Harvey Richardson (HPE) 09:15 CEST 10:15 EEST LUMI Architecture, Programming and Runtime Environment Presenters: Harvey Richardson (HPE) 09:45 CEST 10:45 EEST Exercises (session 1) Presenter: Alfio Lazzaro (HPE) 10:15 CEST 11:15 EEST Break (15 minutes) 10:30 CEST 11:30 EEST Introduction to Performance Analysis with Perftools Presenters Thierry Braconnier (HPE) 11:10 CEST 12:10 EEST Performance Optimization: Improving Single-Core Efficiency Presenter: Jean-Yves Vet (HPE) 11:40 CEST 12:40 EEST Application Placement Presenters: Jean-Yves Vet (HPE) 12:00 CEST 13:00 EEST Lunch break (60 minutes) 13:00 CEST 14:00 EEST Optimization/performance analysis demo and exercise Presenters: Alfio Lazzaro (HPE) 14:30 CEST 15:30 EEST Break (30 minutes) 15:00 CEST 16:00 EEST Optimization/performance analysis demo and exercise Presenters: Alfio Lazzaro (HPE) 16:30 CEST 17:30 EEST End of the workshop day DAY 2 - Wednesday 12/06/2024 09:00 CEST 10:00 EEST AMD Profiling Tools Overview & Omnitrace Presenter: Samuel A\u00f1tao (AMD) 10:00 CEST 11:00 EEST Exercises (session 2) Presenter: Samuel A\u00f1tao (AMD) 10:30 CEST 11:30 EEST Break (15 minutes) 10:45 CEST 11:45 EEST Introduction to Omniperf Presenters: Samuel A\u00f1tao (AMD) 11:30 CEST 12:#0 EEST Exercises (session 3) Presenter: Samuel A\u00f1tao (AMD) 12:00 CEST 13:00 EEST Lunch break (60 minutes) 13:00 CEST 14:00 EEST MPI Optimizations Presenters: Harvey Richardson (HPE) 13:30 CEST 14:30 EEST Exercises (session 4) Presenter: Harvey Richardson (HPE) 14:00 CEST 15:00 EEST IO Optimizations Presenters: Harvey Richardson (HPE) 14:35 CEST 15:35 EEST Exercises (session 5) Presenter: Harvey Richardson (HPE) 15:05 CEST 16:05 EEST Break (15 minutes) 15:20 CEST 16:20 EEST Open session and Q&A Option to work on your own code 16:30 CEST 17:30 EEST End of the workshop"}]}

\ No newline at end of file

+{"config":{"lang":["en"],"separator":"[\\s\\-]+","pipeline":["stopWordFilter"]},"docs":[{"location":"","title":"Overview of LUMI trainings","text":""},{"location":"#organised-by-lust-in-cooperation-with-partners","title":"Organised by LUST in cooperation with partners","text":""},{"location":"#regular-trainings","title":"Regular trainings","text":"Upcoming or currently running events with materials already partly available:

- Advanced LUMI course (October 28-31, 2024)

Most recently completed main training events:

-

Introductory LUMI training aimed at regular users: LUMI 2-day training (May 2-3, 2023)

Short URL to the most recently completed introductory training for regular users: lumi-supercomputer.github.io/intro-latest

-

4-day comprehensive LUMI training aimed at developers and advanced users, mostly focusing on traditional HPC users: Comprehensive general LUMI course (April 23-26, 2024)

Short URL to the most recently completed comprehensive LUMI training aimed at developers and advanced users: lumi-supercomputer.github.io/comprehensive-latest

-

Comprehensive training specifically for AI users: Moving your AI training jobs to LUMI: A Hands-On Workshop. A 2-day AI workshop (May 29-30, 2024)

Short URL to the most recently completed training for AI users: lumi-supercomputer.github.io/AI-latest

-

Performance Analysis and Optimization Workshop, Oslo, 12-12 June 2024

Other recent LUST-organised trainings for which the material is still very up-to-date and relevant:

- HPE and AMD profiling tools (October 9, 2024)

"},{"location":"#lumi-user-coffee-break-talks","title":"LUMI User Coffee Break Talks","text":"Archive of recordings and questions

-

LUMI Update Webinar (October 2, 2024)

-

HyperQueue (January 31, 2024)

-

Open OnDemand: A web interface for LUMI (November 29, 2023)

-

Cotainr on LUMI (September 27, 2023)

-

Spack on LUMI (August 30, 2023)

-

Current state of running AI workloads on LUMI (June 28, 2023)

"},{"location":"#recent-courses-made-available-by-lumi-consortium-partners-and-coes","title":"Recent courses made available by LUMI consortium partners and CoEs","text":" - Workshop: How to run GROMACS efficiently on LUMI (January 24-25, 2024, BioExcel/CSC Finland/KTH Sweden)

- Interactive Slurm tutorial developed by DeiC (Denmark)

"},{"location":"#course-archive","title":"Course archive","text":""},{"location":"#lust-provided-regular-trainings","title":"LUST-provided regular trainings","text":"By theme in reverse chronological order:

- Short introductory trainings to LUMI

- Supercomputing with LUMI (May, 2024 in Amsterdam)

- LUMI 1-day training (February, 2024)

- LUMI 1-day training (September, 2023)

- LUMI 1-day training (May 9 and 16, 2023)

- Comprehensive general LUMI trainings aimed at at developers and advanced users, mostly focusing on traditional HPC users

- Advanced LUMI course (October 28-31, 2024)

- Comprehensive general LUMI course (April 23-26, 2024)

- Comprehensive general LUMI course (October 3-6, 2023)

- Comprehensive general LUMI course (May 30 - June 2, 2023)

- Comprehensive general LUMI course (February 14-17, 2023)

- LUMI-G Training (January 11, 2023)

- Detailed introduction to the LUMI-C environment and architecture (November 23/24, 2022)

- LUMI-G Pilot Training (August 23, 2022)

- Detailed introduction to the LUMI-C environment and architecture (April 27/28, 2022)

- Comprehensive AI trainings for LUMI:

- Moving your AI training jobs to LUMI: A Hands-On Workshop. A 2-day AI workshop (May 29-30, 2024)

- Performance analysis tools and/or program optimization

- HPE and AMD profiling tools (October 9, 2024)

- Performance Analysis and Optimization Workshop, Oslo, 12-12 June 2024

- HPE and AMD profiling tools (November 22, 2023)

- HPE and AMD profiling tools (April 13, 2023)

- Materials from Hackathons

- LUMI-G hackathon (October 14-18, 2024)

- LUMI-G hackathon (April 17-21, 2023)

- EasyBuild on LUMI

- EasyBuild course for CSC and local organisations (May 9/11, 2022)

"},{"location":"#courses-made-available-by-lumi-consortium-partners-and-coes","title":"Courses made available by LUMI consortium partners and CoEs","text":" - Workshop: How to run GROMACS efficiently on LUMI (January 24-25, 2024, BioExcel/CSC Finland/KTH Sweden)

- Interactive Slurm tutorial developed by DeiC (Denmark)

"},{"location":"#information-for-local-organisations","title":"Information for local organisations","text":"The materials in the GitHub repository Lumi-supercomputer/LUMI-training-materials and all materials linked in this web site and served from 462000265.lumidata.eu that are presented by a member of LUST are licensed under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. This includes all of the material of the LUMI 1-day trainings series.

Presentations given by AMD of which some material can be downloaded from this web site and from 462000265.lumidata.eu are copyrighted by AMD, or in cases where AMD is using material available in the public domain (e.g., for exercises), licensed under the source license for that material. Using AMD copyrighted materials should be discussed with AMD.

All presentation material presented by HPE is copyrighted by HPE and can only be shared with people who have an account on LUMI. Therefore that material is not available via this web site and can only be accessed on LUMI itself.

"},{"location":"1day-20230509/","title":"LUMI 1-day training May 2023","text":""},{"location":"1day-20230509/#organisation","title":"Organisation","text":""},{"location":"1day-20230509/#setting-up-for-the-exercises","title":"Setting up for the exercises","text":" -

Create a directory in the scratch of the training project, or if you want to keep the exercises around for a while after the session and have already another project on LUMI, in a subdirectory or your project directory or in your home directory (though we don't recommend the latter). Then go into that directory.

E.g., in the scratch directory of the project:

mkdir -p /scratch/project_465000523/$USER/exercises\ncd /scratch/project_465000523/$USER/exercises\n

-

Now download the exercises and un-tar:

wget https://462000265.lumidata.eu/1day-20230509/files/exercises-20230509.tar.gz\ntar -xf exercises-20230509.tar.gz\n

Link to the tar-file with the exercises

-

You're all set to go!

"},{"location":"1day-20230509/#downloads","title":"Downloads","text":"Presentation Slides Notes recording Introduction / / recording LUMI Architecture slides notes recording HPE Cray Programming Environment slides notes recording Modules on LUMI slides notes recording LUMI Software Stacks slides notes recording Exercises 1 / notes / Running Jobs on LUMI slides / recording Exercises 2 / notes / Introduction to Lustre and Best Practices slides / recording LUMI User Support slides / recording"},{"location":"1day-20230509/01_Architecture/","title":"The LUMI Architecture","text":"In this presentation, we will build up LUMI part by part, stressing those aspects that are important to know to run on LUMI efficiently and define jobs that can scale.

"},{"location":"1day-20230509/01_Architecture/#lumi-is","title":"LUMI is ...","text":"LUMI is a pre-exascale supercomputer, and not a superfast PC nor a compute cloud architecture.

Each of these architectures have their own strengths and weaknesses and offer different compromises and it is key to chose the right infrastructure for the job and use the right tools for each infrastructure.

Just some examples of using the wrong tools or infrastructure:

-

We've had users who were disappointed about the speed of a single core and were expecting that this would be much faster than their PCs. Supercomputers however are optimised for performance per Watt and get their performance from using lots of cores through well-designed software. If you want the fastest core possible, you'll need a gaming PC.

-

Even the GPU may not be that much faster for some tasks than GPUs in gaming PCs, especially since an MI250x should be treated as two GPUs for most practical purposes. The better double precision floating point operations and matrix operations, also at full precision, requires transistors also that on some other GPUs are used for rendering hardware or for single precision compute units.

-

A user complained that they did not succeed in getting their nice remote development environment to work on LUMI. The original author of these notes took a test license and downloaded a trial version. It was a very nice environment but really made for local development and remote development in a cloud environment with virtual machines individually protected by personal firewalls and was not only hard to get working on a supercomputer but also insecure.

-

CERN came telling on a EuroHPC Summit Week before the COVID pandemic that they would start using more HPC and less cloud and that they expected a 40% cost reduction that way. A few years later they published a paper with their experiences and it was mostly disappointment. The HPC infrastructure didn't fit their model for software distribution and performance was poor. Basically their solution was designed around the strengths of a typical cloud infrastructure and relied precisely on those things that did make their cloud infrastructure more expensive than the HPC infrastructure they tested. It relied on fast local disks that require a proper management layer in the software, (ab)using the file system as a database for unstructured data, a software distribution mechanism that requires an additional daemon running permanently on the compute nodes (and local storage on those nodes), ...

True supercomputers, and LUMI in particular, are built for scalable parallel applications and features that are found on smaller clusters or on workstations that pose a threat to scalability are removed from the system. It is also a shred infrastructure but with a much more lightweight management layer than a cloud infrastructure and far less isolation between users, meaning that abuse by one user can have more of a negative impact on other users than in a cloud infrastructure. Supercomputers since the mid to late '80s are also build according to the principle of trying to reduce the hardware cost by using cleverly designed software both at the system and application level. They perform best when streaming data through the machine at all levels of the memory hierarchy and are not built at all for random access to small bits of data (where the definition of \"small\" depends on the level in the memory hierarchy).

At several points in this course you will see how this impacts what you can do with a supercomputer and how you work with a supercomputer.

"},{"location":"1day-20230509/01_Architecture/#lumi-spec-sheet-a-modular-system","title":"LUMI spec sheet: A modular system","text":"So we've already seen that LUMI is in the first place a EuroHPC pre-exascale machine. LUMI is built to prepare for the exascale era and to fit in the EuroHPC ecosystem. But it does not even mean that it has to cater to all pre-exascale compute needs. The EuroHPC JU tries to build systems that have some flexibility, but also does not try to cover all needs with a single machine. They are building 3 pre-exascale systems with different architecture to explore multiple architectures and to cater to a more diverse audience.

LUMI is also a very modular machine designed according to the principles explored in a series of European projects, and in particular DEEP and its successors) that explored the cluster-booster concept.

LUMI is in the first place a huge GPGPU supercomputer. The GPU partition of LUMI, called LUMI-G, contains 2560 nodes with a single 64-core AMD EPYC 7A53 CPU and 4 AMD MI250x GPUs. Each node has 512 GB of RAM attached to the CPU (the maximum the CPU can handle without compromising bandwidth) and 128 GB of HBM2e memory per GPU. Each GPU node has a theoretical peak performance of 200 TFlops in single (FP32) or double (FP64) precision vector arithmetic (and twice that with the packed FP32 format, but that is not well supported so this number is not often quoted). The matrix units are capable of about 400 TFlops in FP32 or FP64. However, compared to the NVIDIA GPUs, the performance for lower precision formats used in some AI applications is not that stellar.

LUMI also has a large CPU-only partition, called LUMI-C, for jobs that do not run well on GPUs, but also integrated enough with the GPU partition that it is possible to have applications that combine both node types. LUMI-C consists of 1536 nodes with 2 64-core AMD EPYC 7763 CPUs. 32 of those nodes have 1TB of RAM (with some of these nodes actually reserved for special purposes such as connecting to a Quantum computer), 128 have 512 GB and 1376 have 256 GB of RAM.

LUMI also has a 7 PB flash based file system running the Lustre parallel file system. This system is often denoted as LUMI-F. The bandwidth of that system is 1740 GB/s. Note however that this is still a remote file system with a parallel file system on it, so do not expect that it will behave as the local SSD in your laptop. But that is also the topic of another session in this course.

The main work storage is provided by 4 20 PB hard disk based Lustre file systems with a bandwidth of 240 GB/s each. That section of the machine is often denoted as LUMI-P.

Big parallel file systems need to be used in the proper way to be able to offer the performance that one would expect from their specifications. This is important enough that we have a separate session about that in this course.

Currently LUMI has 4 login nodes, called user access nodes in the HPE Cray world. They each have 2 64-core AMD EPYC 7742 processors and 1 TB of RAM. Note that whereas the GPU and CPU compute nodes have the Zen3 architecture code-named \"Milan\", the processors on the login nodes are Zen2 processors, code-named \"Rome\". Zen3 adds some new instructions so if a compiler generates them, that code would not run on the login nodes. These instructions are basically used in cryptography though. However, many instructions have very different latency, so a compiler that optimises specifically for Zen3 may chose another ordering of instructions then when optimising for Zen2 so it may still make sense to compile specifically for the compute nodes on LuMI.

All compute nodes, login nodes and storage are linked together through a high-performance interconnect. LUMI uses the Slingshot 11 interconnect which is developed by HPE Cray, so not the Mellanox/NVIDIA InfiniBand that you may be familiar with from many smaller clusters, and as we shall discuss later this also influences how you work on LUMI.

Some services for LUMI are still in the planning.

LUMI also has nodes for interactive data analytics. 8 of those have two 64-core Zen2/Rome CPUs with 4 TB of RAM per node, while 8 others have dual 64-core Zen2/Rome CPUs and 8 NVIDIA A40 GPUs for visualisation. Currently we are working on an Open OnDemand based service to make some fo those facilities available. Note though that these nodes are meant for a very specific use, so it is not that we will also be offering, e.g., GPU compute facilities on NVIDIA hardware, and that these are shared resources that should not be monopolised by a single user (so no hope to run an MPI job on 8 4TB nodes).

An object based file system similar to the Allas service of CSC that some of the Finnish users may be familiar with is also being worked on.

Early on a small partition for containerised micro-services managed with Kubernetes was also planned, but that may never materialize due to lack of people to set it up and manage it.

In this section of the course we will now build up LUMI step by step.

"},{"location":"1day-20230509/01_Architecture/#building-lumi-the-cpu-amd-7xx3-milanzen3-cpu","title":"Building LUMI: The CPU AMD 7xx3 (Milan/Zen3) CPU","text":"The LUMI-C and LUMI-G compute nodes use third generation AMD EPYC CPUs. Whereas Intel CPUs launched in the same period were built out of a single large monolithic piece of silicon (that only changed recently with some variants of the Sapphire Rapids CPU launched in early 2023), AMD CPUs are build out of multiple so-called chiplets.

The basic building block of Zen3 CPUs is the Core Complex Die (CCD). Each CCD contains 8 cores, and each core has 32 kB of L1 instruction and 32 kB of L1 data cache, and 512 kB of L2 cache. The L3 cache is shared across all cores on a chiplet and has a total size of 32 MB on LUMI (there are some variants of the processor where this is 96MB). At the user level, the instruction set is basically equivalent to that of the Intel Broadwell generation. AVX2 vector instructions and the FMA instruction are fully supported, but there is no support for any of the AVX-512 versions that can be found on Intel Skylake server processors and later generations. Hence the number of floating point operations that a core can in theory do each clock cycle is 16 (in double precision) rather than the 32 some Intel processors are capable of.

The full processor package for the AMD EPYC processors used in LUMI have 8 such Core Complex Dies for a total of 64 cores. The caches are not shared between different CCDs, so it also implies that the processor has 8 so-called L3 cache regions. (Some cheaper variants have only 4 CCDs, and some have CCDs with only 6 or fewer cores enabled but the same 32 MB of L3 cache per CCD).

Each CCD connects to the memory/IO die through an Infinity Fabric link. The memory/IO die contains the memory controllers, connections to connect two CPU packages together, PCIe lanes to connect to external hardware, and some additional hardware, e.g., for managing the processor. The memory/IO die supports 4 dual channel DDR4 memory controllers providing a total of 8 64-bit wide memory channels. From a logical point of view the memory/IO-die is split in 4 quadrants, with each quadrant having a dual channel memory controller and 2 CCDs. They basically act as 4 NUMA domains. For a core it is slightly faster to access memory in its own quadrant than memory attached to another quadrant, though for the 4 quadrants within the same socket the difference is small. (In fact, the BIOS can be set to show only two or one NUMA domain which is advantageous in some cases, like the typical load pattern of login nodes where it is impossible to nicely spread processes and their memory across the 4 NUMA domains).

The theoretical memory bandwidth of a complete package is around 200 GB/s. However, that bandwidth is not available to a single core but can only be used if enough cores spread over all CCDs are used.

"},{"location":"1day-20230509/01_Architecture/#building-lumi-a-lumi-c-node","title":"Building LUMI: a LUMI-C node","text":"A compute node is then built out of two such processor packages, connected though 4 16-bit wide Infinity Fabric connections with a total theoretical bandwidth of 144 GB/s in each direction. So note that the bandwidth in each direction is less than the memory bandwidth of a socket. Again, it is not really possible to use the full memory bandwidth of a node using just cores on a single socket. Only one of the two sockets has a direct connection to the high performance Slingshot interconnect though.

"},{"location":"1day-20230509/01_Architecture/#a-strong-hierarchy-in-the-node","title":"A strong hierarchy in the node","text":"As can be seen from the node architecture in the previous slide, the CPU compute nodes have a very hierarchical architecture. When mapping an application onto one or more compute nodes, it is key for performance to take that hierarchy into account. This is also the reason why we will pay so much attention to thread and process pinning in this tutorial course.

At the coarsest level, each core supports two hardware threads (what Intel calls hyperthreads). Those hardware threads share all the resources of a core, including the L1 data and instruction caches and the L2 cache. At the next level, a Core Complex Die contains (up to) 8 cores. These cores share the L3 cache and the link to the memory/IO die. Next, as configured on the LUMI compute nodes, there are 2 Core Complex Dies in a NUMA node. These two CCDs share the DRAM channels of that NUMA node. At the fourth level in our hierarchy 4 NUMA nodes are grouped in a socket. Those 4 nodes share an inter-socket link. At the fifth and last level in our shared memory hierarchy there are two sockets in a node. On LUMI, they share a single Slingshot inter-node link.

The finer the level (the lower the number), the shorter the distance and hence the data delay is between threads that need to communicate with each other through the memory hierarchy, and the higher the bandwidth.

This table tells us a lot about how one should map jobs, processes and threads onto a node. E.g., if a process has fewer then 8 processing threads running concurrently, these should be mapped to cores on a single CCD so that they can share the L3 cache, unless they are sufficiently independent of one another, but even in the latter case the additional cores on those CCDs should not be used by other processes as they may push your data out of the cache or saturate the link to the memory/IO die and hence slow down some threads of your process. Similarly, on a 256 GB compute node each NUMA node has 32 GB of RAM (or actually a bit less as the OS also needs memory, etc.), so if you have a job that uses 50 GB of memory but only, say, 12 threads, you should really have two NuMA nodes reserved for that job as otherwise other threads or processes running on cores in those NUMA nodes could saturate some resources needed by your job. It might also be preferential to spread those 12 threads over the 4 CCDs in those 2 NUMA domains unless communication through the L3 threads would be the bottleneck in your application.

"},{"location":"1day-20230509/01_Architecture/#hierarchy-delays-in-numbers","title":"Hierarchy: delays in numbers","text":"This slide shows the ACPI System Locality distance Information Table (SLIT) as returned by, e.g., numactl -H which gives relative distances to memory from a core. E.g., a value of 32 means that access takes 3.2x times the time it would take to access memory attached to the same NUMA node. We can see from this table that the penalty for accessing memory in another NUMA domain in the same socket is still relatively minor (20% extra time), but accessing memory attached to the other socket is a lot more expensive. If a process running on one socket would only access memory attached to the other socket, it would run a lot slower which is why Linux has mechanisms to try to avoid that, but this cannot be done in all scenarios which is why on some clusters you will be allocated cores in proportion to the amount of memory you require, even if that is more cores than you really need (and you will be billed for them).

"},{"location":"1day-20230509/01_Architecture/#building-lumi-concept-lumi-g-node","title":"Building LUMI: Concept LUMI-G node","text":"This slide shows a conceptual view of a LUMI-G compute node. This node is unlike any Intel-architecture-CPU-with-NVIDIA-GPU compute node you may have seen before, and rather mimics the architecture of the USA pre-exascale machines Summit and Sierra which have IBM POWER9 CPUs paired with NVIDIA V100 GPUs.

Each GPU node consists of one 64-core AMD EPYC CPU and 4 AMD MI250x GPUs. So far nothing special. However, two elements make this compute node very special. The GPUs are not connected to the CPU though a PCIe bus. Instead they are connected through the same links that AMD uses to link the GPUs together, or to link the two sockets in the LUMI-C compute nodes, known as xGMI or Infinity Fabric. This enables CPU and GPU to access each others memory rather seamlessly and to implement coherent caches across the whole system. The second remarkable element is that the Slingshot interface cards connect directly to the GPUs (through a PCIe interface on the GPU) rather than two the CPU. The CPUs have a shorter path to the communication network than the CPU in this design.

This makes the LUMI-G compute node really a \"GPU first\" system. The architecture looks more like a GPU system with a CPU as the accelerator for tasks that a GPU is not good at such as some scalar processing or running an OS, rather than a CPU node with GPU accelerator.

It is also a good fit with the cluster-booster design explored in the DEEP project series. In that design, parts of your application that cannot be properly accelerated would run on CPU nodes, while booster GPU nodes would be used for those parts that can (at least if those two could execute concurrently with each other). Different node types are mixed and matched as needed for each specific application, rather than building clusters with massive and expensive nodes that few applications can fully exploit. As the cost per transistor does not decrease anymore, one has to look for ways to use each transistor as efficiently as possible...

It is also important to realise that even though we call the partition \"LUMI-G\", the MI250x is not a GPU in the true sense of the word. It is not a rendering GPU, which for AMD is currently the RDNA architecture with version 3 just out, but a compute accelerator with an architecture that evolved from a GPU architecture, in this case the VEGA architecture from AMD. The architecture of the MI200 series is also known as CDNA2, with the MI100 series being just CDNA, the first version. Much of the hardware that does not serve compute purposes has been removed from the design to have more transistors available for compute. Rendering is possible, but it will be software-based rendering with some GPU acceleration for certain parts of the pipeline, but not full hardware rendering.

This is not an evolution at AMD only. The same is happening with NVIDIA GPUs and there is a reason why the latest generation is called \"Hopper\" for compute and \"Ada Lovelace\" for rendering GPUs. Several of the functional blocks in the Ada Lovelace architecture are missing in the Hopper architecture to make room for more compute power and double precision compute units. E.g., Hopper does not contain the ray tracing units of Ada Lovelace.

Graphics on one hand and HPC and AI on the other hand are becoming separate workloads for which manufacturers make different, specialised cards, and if you have applications that need both, you'll have to rework them to work in two phases, or to use two types of nodes and communicate between them over the interconnect, and look for supercomputers that support both workloads.

But so far for the sales presentation, let's get back to reality...

"},{"location":"1day-20230509/01_Architecture/#building-lumi-what-a-lumi-g-node-really-looks-like","title":"Building LUMI: What a LUMI-G node really looks like","text":"Or the full picture with the bandwidths added to it:

The LUMI-G node uses the 64-core AMD 7A53 EPYC processor, known under the code name \"Trento\". This is basically a Zen3 processor but with a customised memory/IO die, designed specifically for HPE Cray (and in fact Cray itself, before the merger) for the USA Coral-project to build the Frontier supercomputer, the fastest system in the world at the end of 2022 according to at least the Top500 list. Just as the CPUs in the LUMI-C nodes, it is a design with 8 CCDs and a memory/IO die.

The MI250x GPU is also not a single massive die, but contains two compute dies besides the 8 stacks of HBM2e memory, 4 stacks or 64 GB per compute die. The two compute dies in a package are linked together through 4 16-bit Infinity Fabric links. These links run at a higher speed than the links between two CPU sockets in a LUMI-C node, but per link the bandwidth is still only 50 GB/s per direction, creating a total bandwidth of 200 GB/s per direction between the two compute dies in an MI250x GPU. That amount of bandwidth is very low compared to even the memory bandwidth, which is roughly 1.6 TB/s peak per die, let alone compared to whatever bandwidth caches on the compute dies would have or the bandwidth of the internal structures that connect all compute engines on the compute die. Hence the two dies in a single package cannot function efficiently as as single GPU which is one reason why each MI250x GPU on LUMI is actually seen as two GPUs.

Each compute die uses a further 2 or 3 of those Infinity Fabric (or xGNI) links to connect to some compute dies in other MI250x packages. In total, each MI250x package is connected through 5 such links to other MI250x packages. These links run at the same 25 GT/s speed as the links between two compute dies in a package, but even then the bandwidth is only a meager 250 GB/s per direction, less than an NVIDIA A100 GPU which offers 300 GB/s per direction or the NVIDIA H100 GPU which offers 450 GB/s per direction. Each Infinity Fabric link may be twice as fast as each NVLINK 3 or 4 link (NVIDIA Ampere and Hopper respectively), offering 50 GB/s per direction rather than 25 GB/s per direction for NVLINK, but each Ampere GPU has 12 such links and each Hopper GPU 18 (and in fact a further 18 similar ones to link to a Grace CPU), while each MI250x package has only 5 such links available to link to other GPUs (and the three that we still need to discuss).

Note also that even though the connection between MI250x packages is all-to-all, the connection between GPU dies is all but all-to-all. as each GPU die connects to only 3 other GPU dies. There are basically two rings that don't need to share links in the topology, and then some extra connections. The rings are:

- 1 - 0 - 6 - 7 - 5 - 4 - 2 - 3 - 1

- 1 - 5 - 4 - 2 - 3 - 7 - 6 - 0 - 1

Each compute die is also connected to one CPU Core Complex Die (or as documentation of the node sometimes says, L3 cache region). This connection only runs at the same speed as the links between CPUs on the LUMI-C CPU nodes, i.e., 36 GB/s per direction (which is still enough for all 8 GPU compute dies together to saturate the memory bandwidth of the CPU). This implies that each of the 8 GPU dies has a preferred CPU die to work with, and this should definitely be taken into account when mapping processes and threads on a LUMI-G node.

The figure also shows another problem with the LUMI-G node: The mapping between CPU cores/dies and GPU dies is all but logical:

GPU die CCD hardware threads NUMA node 0 6 48-55, 112-119 3 1 7 56-63, 120-127 3 2 2 16-23, 80-87 1 3 3 24-31, 88-95 1 4 0 0-7, 64-71 0 5 1 8-15, 72-79 0 6 4 32-39, 96-103 2 7 5 40-47, 104, 11 2 and as we shall see later in the course, exploiting this is a bit tricky at the moment.

"},{"location":"1day-20230509/01_Architecture/#what-the-future-looks-like","title":"What the future looks like...","text":"Some users may be annoyed by the \"small\" amount of memory on each node. Others may be annoyed by the limited CPU capacity on a node compared to some systems with NVIDIA GPUs. It is however very much in line with the cluster-booster philosophy already mentioned a few times, and it does seem to be the future according to both AMD and Intel. In fact, it looks like with respect to memory capacity things may even get worse.

We saw the first little steps of bringing GPU and CPU closer together and integrating both memory spaces in the USA pre-exascale systems Summit and Sierra. The LUMI-G node which was really designed for the first USA exascale systems continues on this philosophy, albeit with a CPU and GPU from a different manufacturer. Given that manufacturing large dies becomes prohibitively expensive in newer semiconductor processes and that the transistor density on a die is also not increasing at the same rate anymore with process shrinks, manufacturers are starting to look at other ways of increasing the number of transistors per \"chip\" or should we say package. So multi-die designs are here to stay, and as is already the case in the AMD CPUs, different dies may be manufactured with different processes for economical reasons.

Moreover, a closer integration of CPU and GPU would not only make programming easier as memory management becomes easier, it would also enable some code to run on GPU accelerators that is currently bottlenecked by memory transfers between GPU and CPU.

AMD at its 2022 Investor day and at CES 2023 in early January, and Intel at an Investor day in 2022 gave a glimpse of how they see the future. The future is one where one or more CPU dies, GPU dies and memory controllers are combined in a single package and - contrary to the Grace Hopper design of NVIDIA - where CPU and GPU share memory controllers. At CES 2023, AMD already showed a MI300 package that will be used in El Capitan, one of the next USA exascale systems (the third one if Aurora gets built in time). It employs 13 chiplets in two layers, linked to (still only) 8 memory stacks (albeit of a slightly faster type than on the MI250x). The 4 dies on the bottom layer are likely the controllers for memory and inter-GPU links as they produce the least heat, while it was announced that the GPU would feature 24 Zen4 cores, so the top layer consists likely of 3 CPU and 6 GPU chiplets. It looks like the AMD design may have no further memory beyond the 8 HBM stacks, likely providing 128 GB of RAM.

Intel has shown only very conceptual drawings of its Falcon Shores chip which it calls an XPU, but those drawings suggest that that chip will also support some low-bandwidth but higher capacity external memory, similar to the approach taken in some Sapphire Rapids Xeon processors that combine HBM memory on-package with DDR5 memory outside the package. Falcon Shores will be the next generation of Intel GPUs for HPC, after Ponte Vecchio which will be used in the Aurora supercomputer. It is currently not clear though if Intel will already use the integrated CPU+GPU model for the Falcon Shores generation or if this is getting postponed.

However, a CPU closely integrated with accelerators is nothing new as Apple Silicon is rumoured to do exactly that in its latest generations, including the M-family chips.

"},{"location":"1day-20230509/01_Architecture/#building-lumi-the-slingshot-interconnect","title":"Building LUMI: The Slingshot interconnect","text":"All nodes of LUMI, including the login, management and storage nodes, are linked together using the Slingshot interconnect (and almost all use Slingshot 11, the full implementation with 200 Gb/s bandwidth per direction).

Slingshot is an interconnect developed by HPE Cray and based on Ethernet, but with proprietary extensions for better HPC performance. It adapts to the regular Ethernet protocols when talking to a node that only supports Ethernet, so one of the attractive features is that regular servers with Ethernet can be directly connected to the Slingshot network switches. HPE Cray has a tradition of developing their own interconnect for very large systems. As in previous generations, a lot of attention went to adaptive routing and congestion control. There are basically two versions of it. The early version was named Slingshot 10, ran at 100 Gb/s per direction and did not yet have all features. It was used on the initial deployment of LUMI-C compute nodes but has since been upgraded to the full version. The full version with all features is called Slingshot 11. It supports a bandwidth of 200 Gb/s per direction, comparable to HDR InfiniBand with 4x links.

Slingshot is a different interconnect from your typical Mellanox/NVIDIA InfiniBand implementation and hence also has a different software stack. This implies that there are no UCX libraries on the system as the Slingshot 11 adapters do not support that. Instead, the software stack is based on libfabric (as is the stack for many other Ethernet-derived solutions and even Omni-Path has switched to libfabric under its new owner).

LUMI uses the dragonfly topology. This topology is designed to scale to a very large number of connections while still minimizing the amount of long cables that have to be used. However, with its complicated set of connections it does rely on adaptive routing and congestion control for optimal performance more than the fat tree topology used in many smaller clusters. It also needs so-called high-radix switches. The Slingshot switch, code-named Rosetta, has 64 ports. 16 of those ports connect directly to compute nodes (and the next slide will show you how). Switches are then combined in groups. Within a group there is an all-to-all connection between switches: Each switch is connected to each other switch. So traffic between two nodes of a group passes only via two switches if it takes the shortest route. However, as there is typically only one 200 Gb/s direct connection between two switches in a group, if all 16 nodes on two switches in a group would be communicating heavily with each other, it is clear that some traffic will have to take a different route. In fact, it may be statistically better if the 32 involved nodes would be spread more evenly over the group, so topology based scheduling of jobs and getting the processes of a job on as few switches as possible may not be that important on a dragonfly Slingshot network. The groups in a slingshot network are then also connected in an all-to-all fashion, but the number of direct links between two groups is again limited so traffic again may not always want to take the shortest path. The shortest path between two nodes in a dragonfly topology never involves more than 3 hops between switches (so 4 switches): One from the switch the node is connected to the switch in its group that connects to the other group, a second hop to the other group, and then a third hop in the destination group to the switch the destination node is attached to.

"},{"location":"1day-20230509/01_Architecture/#assembling-lumi","title":"Assembling LUMI","text":"Let's now have a look at how everything connects together to the supercomputer LUMI. LUMI does use a custom rack design for the compute nodes that is also fully water cooled. It is build out of units that can contain up to 4 custom cabinets, and a cooling distribution unit (CDU). The size of the complex as depicted in the slide is approximately 12 m2. Each cabinet contains 8 compute chassis in 2 columns of 4 rows. In between the two columns is all the power circuitry. Each compute chassis can contain 8 compute blades that are mounted vertically. Each compute blade can contain multiple nodes, depending on the type of compute blades. HPE Cray have multiple types of compute nodes, also with different types of GPUs. In fact, the Aurora supercomputer which uses Intel CPUs and GPUs and El Capitan, which uses the MI300 series of APU (integrated CPU and GPU) will use the same design with a different compute blade. Each LUMI-C compute blade contains 4 compute nodes and two network interface cards, with each network interface card implementing two Slingshot interfaces and connecting to two nodes. A LUMI-G compute blade contains two nodes and 4 network interface cards, where each interface card now connects to two GPUs in the same node. All connections for power, management network and high performance interconnect of the compute node are at the back of the compute blade. At the front of the compute blades one can find the connections to the cooling manifolds that distribute cooling water to the blades. One compute blade of LUMI-G can consume up to 5kW, so the power density of this setup is incredible, with 40 kW for a single compute chassis.

The back of each cabinet is equally genius. At the back each cabinet has 8 switch chassis, each matching the position of a compute chassis. The switch chassis contains the connection to the power delivery system and a switch for the management network and has 8 positions for switch blades. These are mounted horizontally and connect directly to the compute blades. Each slingshot switch has 8x2 ports on the inner side for that purpose, two for each compute blade. Hence for LUMI-C two switch blades are needed in each switch chassis as each blade has 4 network interfaces, and for LUMI-G 4 switch blades are needed for each compute chassis as those nodes have 8 network interfaces. Note that this also implies that the nodes on the same compute blade of LUMI-C will be on two different switches even though in the node numbering they are numbered consecutively. For LUMI-G both nodes on a blade will be on a different pair of switches and each node is connected to two switches. Thw switch blades are also water cooled (each one can consume up to 250W). No currently possible configuration of the Cray EX system needs that all switch positions in the switch chassis.

This does not mean that the extra positions cannot be useful in the future. If not for an interconnect, one could, e.g., export PCIe ports to the back and attach, e.g., PCIe-based storage via blades as the switch blade environment is certainly less hostile to such storage than the very dense and very hot compute blades.

"},{"location":"1day-20230509/01_Architecture/#lumi-assembled","title":"LUMI assembled","text":"This slide shows LUMI fully assembled (as least as it was at the end of 2022).

At the front there are 5 rows of cabinets similar to the ones in the exploded Cray EX picture on the previous slide. Each row has 2 CDUs and 6 cabinets with compute nodes. The first row, the one with the wolf, contains all nodes of LUMI-C, while the other four rows, with the letters of LUMI, contain the GPU accelerator nodes. At the back of the room there are more regular server racks that house the storage, management nodes, some special compute nodes , etc. The total size is roughly the size of a tennis court.

Remark

The water temperature that a system like the Cray EX can handle is so high that in fact the water can be cooled again with so-called \"free cooling\", by just radiating the heat to the environment rather than using systems with compressors similar to air conditioning systems, especially in regions with a colder climate. The LUMI supercomputer is housed in Kajaani in Finland, with moderate temperature almost year round, and the heat produced by the supercomputer is fed into the central heating system of the city, making it one of the greenest supercomputers in the world as it is also fed with renewable energy.

"},{"location":"1day-20230509/02_CPE/","title":"The HPE Cray Programming Environment","text":"Every user needs to know the basics about the programming environment as after all most software is installed through the programming environment, and to some extent it also determines how programs should be run.

"},{"location":"1day-20230509/02_CPE/#why-do-i-need-to-know-this","title":"Why do I need to know this?","text":"The typical reaction of someone who only wants to run software on an HPC system when confronted with a talk about development tools is \"I only want to run some programs, why do I need to know about programming environments?\"

The answer is that development environments are an intrinsic part of an HPC system. No HPC system is as polished as a personal computer and the software users want to use is typically very unpolished.

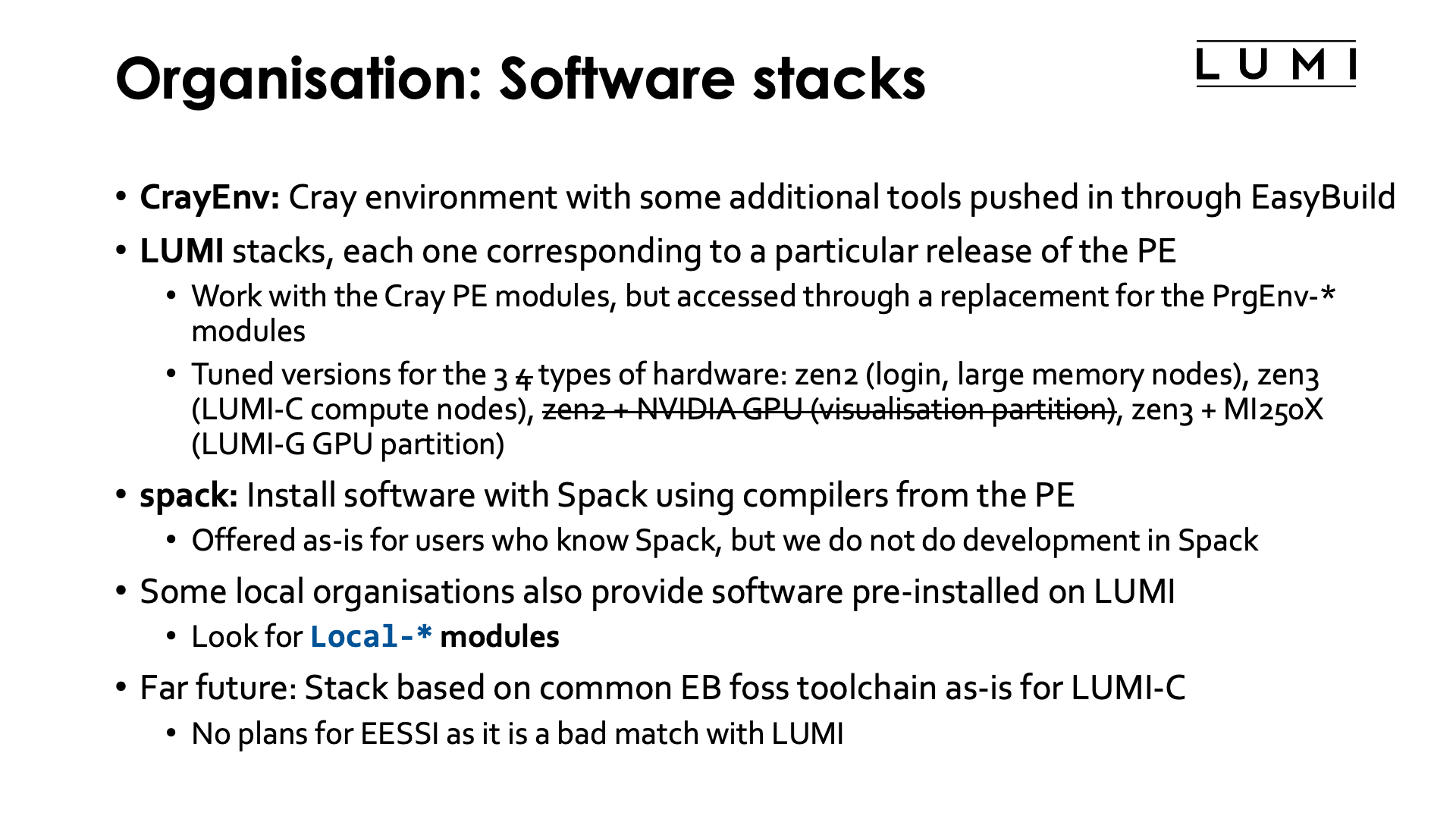

Programs on an HPC cluster are preferably installed from sources to generate binaries optimised for the system. CPUs have gotten new instructions over time that can sometimes speed-up execution of a program a lot, and compiler optimisations that take specific strengths and weaknesses of particular CPUs into account can also gain some performance. Even just a 10% performance gain on an investment of 160 million EURO such as LUMI means a lot of money. When running, the build environment on most systems needs to be at least partially recreated. This is somewhat less relevant on Cray systems as we will see at the end of this part of the course, but if you want reproducibility it becomes important again.

Even when installing software from prebuild binaries some modules might still be needed, e.g., as you may want to inject an optimised MPI library as we shall see in the container section of this course.

"},{"location":"1day-20230509/02_CPE/#the-operating-system-on-lumi","title":"The operating system on LUMI","text":"The login nodes of LUMI run a regular SUSE Linux Enterprise Server 15 SP4 distribution. The compute nodes however run Cray OS, a restricted version of the SUSE Linux that runs on the login nodes. Some daemons are inactive or configured differently and Cray also does not support all regular file systems. The goal of this is to minimize OS jitter, interrupts that the OS handles and slow down random cores at random moments, that can limit scalability of programs. Yet on the GPU nodes there was still the need to reserve one core for the OS and driver processes.

This also implies that some software that works perfectly fine on the login nodes may not work on the compute nodes. E.g., there is no /run/user/$UID directory and we have experienced that D-Bus (which stands for Desktop-Bus) also does not work as one should expect.

Large HPC clusters also have a small system image, so don't expect all the bells-and-whistles from a Linux workstation to be present on a large supercomputer. Since LUMI compute nodes are diskless, the system image actually occupies RAM which is another reason to keep it small.

"},{"location":"1day-20230509/02_CPE/#programming-models","title":"Programming models","text":"On LUMI we have several C/C++ and Fortran compilers. These will be discussed more in this session.

There is also support for MPI and SHMEM for distributed applications. And we also support RCCL, the ROCm-equivalent of the CUDA NCCL library that is popular in machine learning packages.

All compilers have some level of OpenMP support, and two compilers support OpenMP offload to the AMD GPUs, but again more about that later.

OpenACC, the other directive-based model for GPU offloading, is only supported in the Cray Fortran compiler. There is no commitment of neither HPE Cray or AMD to extend that support to C/C++ or other compilers, even though there is work going on in the LLVM community and several compilers on the system are based on LLVM.

The other important programming model for AMD GPUs is HIP, which is their alternative for the proprietary CUDA model. It does not support all CUDA features though (basically it is more CUDA 7 or 8 level) and there is also no equivalent to CUDA Fortran.

The commitment to OpenCL is very unclear, and this actually holds for other GPU vendors also.

We also try to provide SYCL as it is a programming language/model that works on all three GPU families currently used in HPC.

Python is of course pre-installed on the system but we do ask to use big Python installations in a special way as Python puts a tremendous load on the file system. More about that later in this course.

Some users also report some success in running Julia. We don't have full support though and have to depend on binaries as provided by julialang.org. The AMD GPUs are not yet fully supported by Julia.

It is important to realise that there is no CUDA on AMD GPUs and there will never be as this is a proprietary technology that other vendors cannot implement. LUMI will in the future have some nodes with NVIDIA GPUs but these nodes are meant for visualisation and not for compute.

"},{"location":"1day-20230509/02_CPE/#the-development-environment-on-lumi","title":"The development environment on LUMI","text":"Long ago, Cray designed its own processors and hence had to develop their own compilers. They kept doing so, also when they moved to using more standard components, and had a lot of expertise in that field, especially when it comes to the needs of scientific codes, programming models that are almost only used in scientific computing or stem from such projects, and as they develop their own interconnects, it does make sense to also develop an MPI implementation that can use the interconnect in an optimal way. They also have a long tradition in developing performance measurement and analysis tools and debugging tools that work in the context of HPC.

The first important component of the HPE Cray Programming Environment is the compilers. Cray still builds its own compilers for C/C++ and Fortran, called the Cray Compiling Environment (CCE). Furthermore, the GNU compilers are also supported on every Cray system, though at the moment AMD GPU support is not enabled. Depending on the hardware of the system other compilers will also be provided and integrated in the environment. On LUMI two other compilers are available: the AMD AOCC compiler for CPU-only code and the AMD ROCm compilers for GPU programming. Both contain a C/C++ compiler based on Clang and LLVM and a Fortran compiler which is currently based on the former PGI frontend with LLVM backend. The ROCm compilers also contain the support for HIP, AMD's CUDA clone.

The second component is the Cray Scientific and Math libraries, containing the usual suspects as BLAS, LAPACK and ScaLAPACK, and FFTW, but also some data libraries and Cray-only libraries.

The third component is the Cray Message Passing Toolkit. It provides an MPI implementation optimized for Cray systems, but also the Cray SHMEM libraries, an implementation of OpenSHMEM 1.5.

The fourth component is some Cray-unique sauce to integrate all these components, and support for hugepages to make memory access more efficient for some programs that allocate huge chunks of memory at once.

Other components include the Cray Performance Measurement and Analysis Tools and the Cray Debugging Support Tools that will not be discussed in this one-day course, and Python and R modules that both also provide some packages compiled with support for the Cray Scientific Libraries.

Besides the tools provided by HPE Cray, several of the development tools from the ROCm stack are also available on the system while some others can be user-installed (and one of those, Omniperf, is not available due to security concerns). Furthermore there are some third party tools available on LUMI, including Linaro Forge (previously ARM Forge) and Vampir and some open source profiling tools.

We will now discuss some of these components in a little bit more detail, but refer to the 4-day trainings that we organise three times a year with HPE for more material.

"},{"location":"1day-20230509/02_CPE/#the-cray-compiling-environment","title":"The Cray Compiling Environment","text":"The Cray Compiling Environment are the default compilers on many Cray systems and on LUMI. These compilers are designed specifically for scientific software in an HPC environment. The current versions use are LLVM-based with extensions by HPE Cray for automatic vectorization and shared memory parallelization, technology that they have experience with since the late '70s or '80s.

The compiler offers extensive standards support. The C and C++ compiler is essentially their own build of Clang with LLVM with some of their optimisation plugins and OpenMP run-time. The version numbering of the CCE currently follows the major versions of the Clang compiler used. The support for C and C++ language standards corresponds to that of Clang. The Fortran compiler uses a frontend developed by HPE Cray, but an LLVM-based backend. The compiler supports most of Fortran 2018 (ISO/IEC 1539:2018). The CCE Fortran compiler is known to be very strict with language standards. Programs that use GNU or Intel extensions will usually fail to compile, and unfortunately since many developers only test with these compilers, much Fortran code is not fully standards compliant and will fail.

All CCE compilers support OpenMP, with offload for AMD and NVIDIA GPUs. They claim full OpenMP 4.5 support with partial (and growing) support for OpenMP 5.0 and 5.1. More information about the OpenMP support is found by checking a manual page:

man intro_openmp\n

which does require that the cce module is loaded. The Fortran compiler also supports OpenACC for AMD and NVIDIA GPUs. That implementation claims to be fully OpenACC 2.0 compliant, and offers partial support for OpenACC 2.x/3.x. Information is available via man intro_openacc\n

AMD and HPE Cray still recommend moving to OpenMP which is a much broader supported standard. There are no plans to also support OpenACC in the C/C++ compiler. The CCE compilers also offer support for some PGAS (Partitioned Global Address Space) languages. UPC 1.2 is supported, as is Fortran 2008 coarray support. These implementations do not require a preprocessor that first translates the code to regular C or Fortran. There is also support for debugging with Linaro Forge.

Lastly, there are also bindings for MPI.

"},{"location":"1day-20230509/02_CPE/#scientific-and-math-libraries","title":"Scientific and math libraries","text":"Some mathematical libraries have become so popular that they basically define an API for which several implementations exist, and CPU manufacturers and some open source groups spend a significant amount of resources to make optimal implementations for each CPU architecture.

The most notorious library of that type is BLAS, a set of basic linear algebra subroutines for vector-vector, matrix-vector and matrix-matrix implementations. It is the basis for many other libraries that need those linear algebra operations, including Lapack, a library with solvers for linear systems and eigenvalue problems.

The HPE Cray LibSci library contains BLAS and its C-interface CBLAS, and LAPACK and its C interface LAPACKE. It also adds ScaLAPACK, a distributed memory version of LAPACK, and BLACS, the Basic Linear Algebra Communication Subprograms, which is the communication layer used by ScaLAPACK. The BLAS library combines implementations from different sources, to try to offer the most optimal one for several architectures and a range of matrix and vector sizes.

LibSci also contains one component which is HPE Cray-only: IRT, the Iterative Refinement Toolkit, which allows to do mixed precision computations for LAPACK operations that can speed up the generation of a double precision result with nearly a factor of two for those problems that are suited for iterative refinement. If you are familiar with numerical analysis, you probably know that the matrix should not be too ill-conditioned for that.

There is also a GPU-optimized version of LibSci, called LibSCi_ACC, which contains a subset of the routines of LibSci. We don't have much experience in the support team with this library though. It can be compared with what Intel is doing with oneAPI MKL which also offers GPU versions of some of the traditional MKL routines.

Another separate component of the scientific and mathematical libraries is FFTW3, Fastest Fourier Transforms in the West, which comes with optimized versions for all CPU architectures supported by recent HPE Cray machines.

Finally, the scientific and math libraries also contain HDF5 and netCDF libraries in sequential and parallel versions.

"},{"location":"1day-20230509/02_CPE/#cray-mpi","title":"Cray MPI","text":"HPE Cray build their own MPI library with optimisations for their own interconnects. The Cray MPI library is derived from the ANL MPICH 3.4 code base and fully support the ABI (Application Binary Interface) of that application which implies that in principle it should be possible to swap the MPI library of applications build with that ABI with the Cray MPICH library. Or in other words, if you can only get a binary distribution of an application and that application was build against an MPI library compatible with the MPICH 3.4 ABI (which includes Intel MPI) it should be possible to exchange that library for the Cray one to have optimised communication on the Cray Slingshot interconnect.

Cray MPI contains many tweaks specifically for Cray systems. HPE Cray claim improved algorithms for many collectives, an asynchronous progress engine to improve overlap of communications and computations, customizable collective buffering when using MPI-IO, and optimized remote memory access (MPI one-sided communication) which also supports passive remote memory access.

When used in the correct way (some attention is needed when linking applications) it is allo fully GPU aware with currently support for AMD and NVIDIA GPUs.

The MPI library also supports bindings for Fortran 2008.

MPI 3.1 is almost completely supported, with two exceptions. Dynamic process management is not supported (and a problem anyway on systems with batch schedulers), and when using CCE MPI_LONG_DOUBLE and MPI_C_LONG_DOUBLE_COMPLEX are also not supported.

The Cray MPI library does not support the mpirun or mpiexec commands, which is in fact allowed by the standard which only requires a process starter and suggest mpirun or mpiexec depending on the version of the standard. Instead the Slurm srun command is used as the process starter. This actually makes a lot of sense as the MPI application should be mapped correctly on the allocated resources, and the resource manager is better suited to do so.

Cray MPI on LUMI is layered on top of libfabric, which in turn uses the so-called Cassini provider to interface with the hardware. UCX is not supported on LUMI (but Cray MPI can support it when used on InfiniBand clusters). It also uses a GPU Transfer Library (GTL) for GPU-aware MPI.

"},{"location":"1day-20230509/02_CPE/#lmod","title":"Lmod","text":"Virtually all clusters use modules to enable the users to configure the environment and select the versions of software they want. There are three different module systems around. One is an old implementation that is hardly evolving anymore but that can still be found on\\ a number of clusters. HPE Cray still offers it as an option. Modulefiles are written in TCL, but the tool itself is in C. The more popular tool at the moment is probably Lmod. It is largely compatible with modulefiles for the old tool, but prefers modulefiles written in LUA. It is also supported by the HPE Cray PE and is our choice on LUMI. The final implementation is a full TCL implementation developed in France and also in use on some large systems in Europe.

Fortunately the basic commands are largely similar in those implementations, but what differs is the way to search for modules. We will now only discuss the basic commands, the more advanced ones will be discussed in the next session of this tutorial course.

Modules also play an important role in configuring the HPE Cray PE, but before touching that topic we present the basic commands:

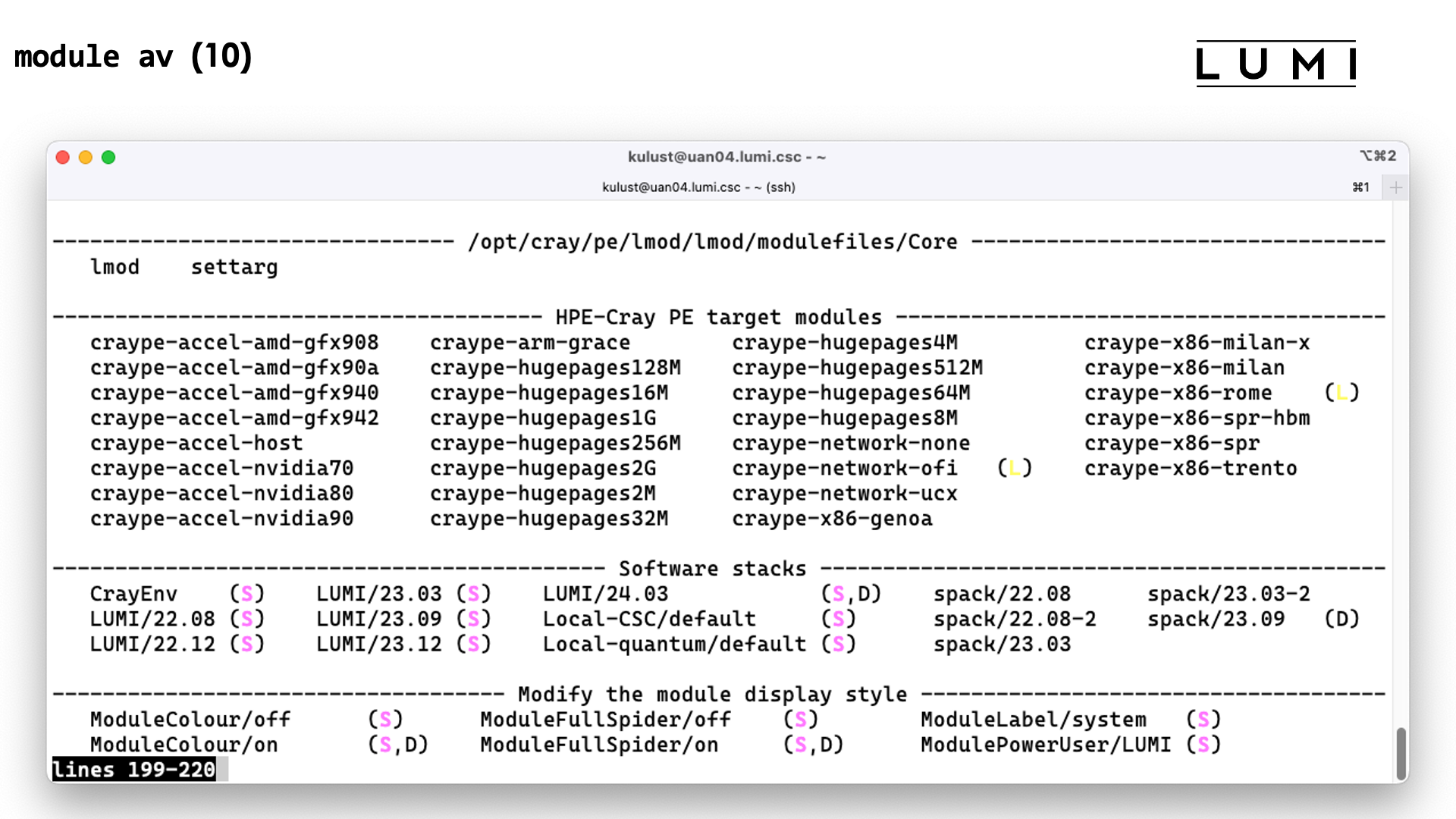

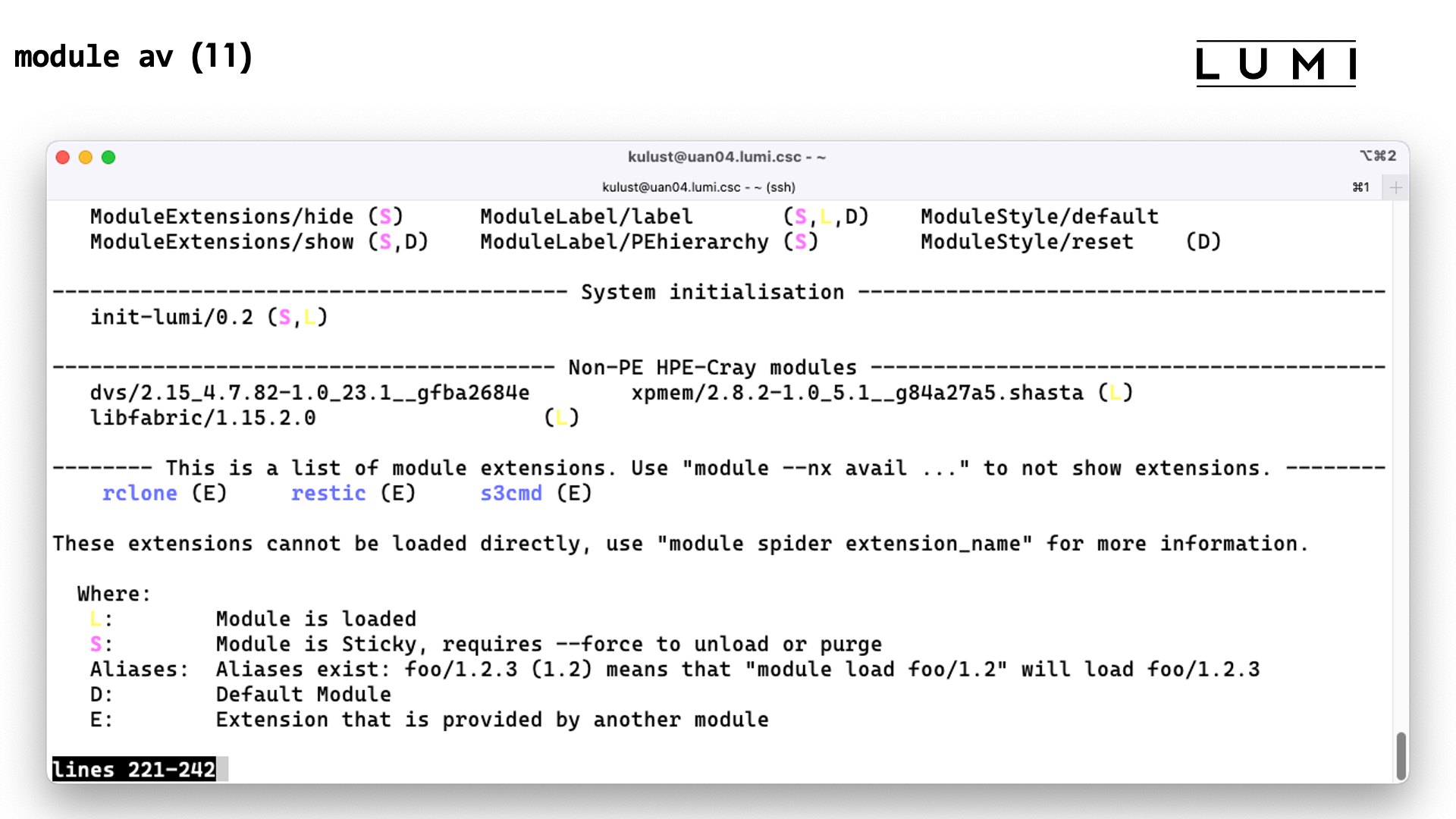

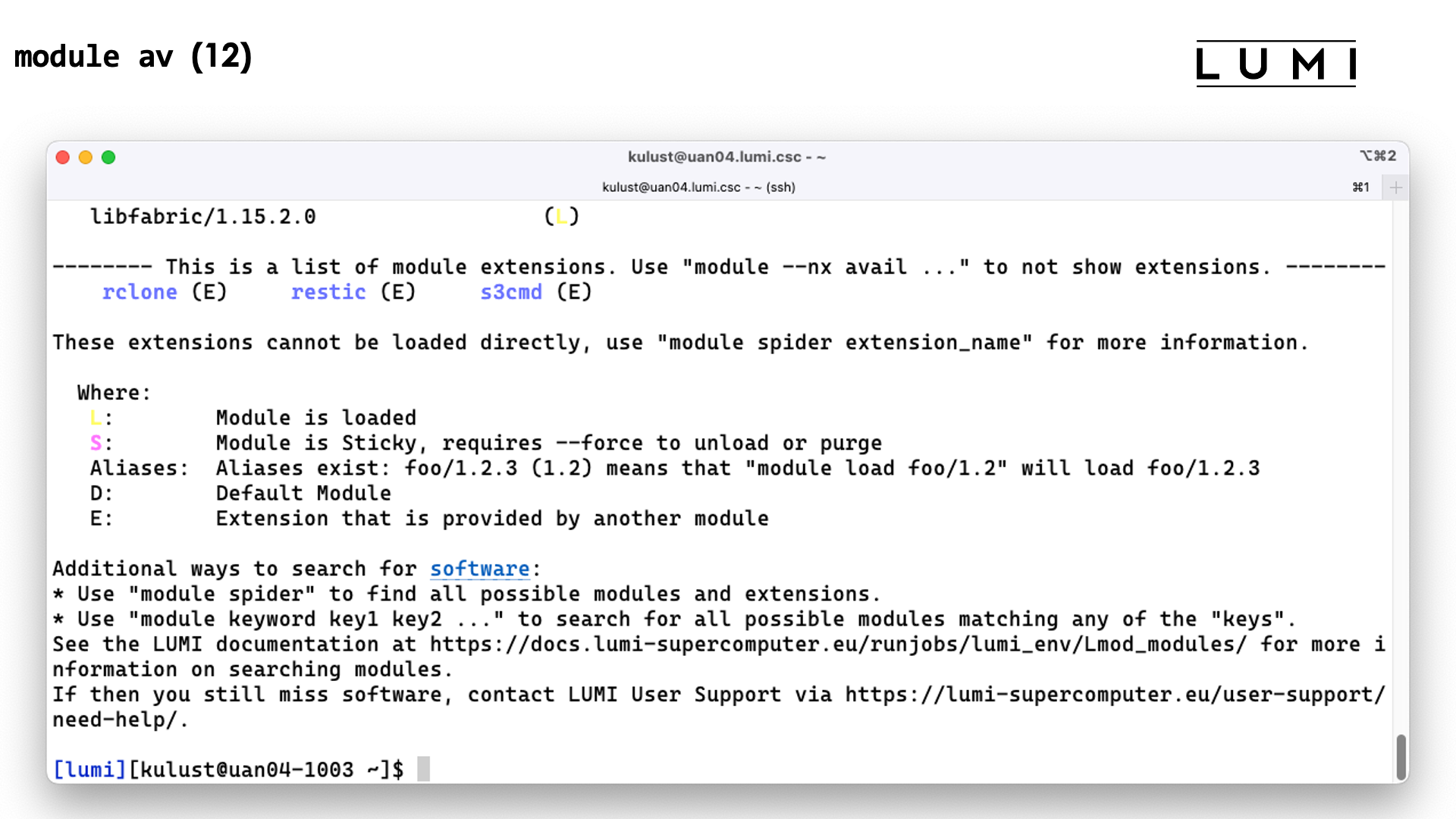

module avail: Lists all modules that can currently be loaded. module list: Lists all modules that are currently loadedmodule load: Command used to load a module. Add the name and version of the module.module unload: Unload a module. Using the name is enough as there can only one version be loaded of a module.module swap: Unload the first module given and then load the second one. In Lmod this is really equivalent to a module unload followed by a module load.

Lmod supports a hierarchical module system. Such a module setup distinguishes between installed modules and available modules. The installed modules are all modules that can be loaded in one way or another by the module systems, but loading some of those may require loading other modules first. The available modules are the modules that can be loaded directly without loading any other module. The list of available modules changes all the time based on modules that are already loaded, and if you unload a module that makes other loaded modules unavailable, those will also be deactivated by Lmod. The advantage of a hierarchical module system is that one can support multiple configurations of a module while all configurations can have the same name and version. This is not fully exploited on LUMI, but it is used a lot in the HPE Cray PE. E.g., the MPI libraries for the various compilers on the system all have the same name and version yet make different binaries available depending on the compiler that is being used.

"},{"location":"1day-20230509/02_CPE/#compiler-wrappers","title":"Compiler wrappers","text":"The HPE Cray PE compilers are usually used through compiler wrappers. The wrapper for C is cc, the one for C++ is CC and the one for Fortran is ftn. The wrapper then calls the selected compiler. Which compiler will be called is determined by which compiler module is loaded. As shown on the slide \"Development environment on LUMI\", on LUMI the Cray Compiling Environment (module cce), GNU Compiler Collection (module gcc), the AMD Optimizing Compiler for CPUs (module aocc) and the ROCm LLVM-based compilers (module amd) are available. On other HPE Cray systems, you may also find the Intel compilers or on systems with NVIDIA GPUS, the NVIDIA HPC compilers.

The target architectures for CPU and GPU are also selected through modules, so it is better to not use compiler options such as -march=native. This makes cross compiling also easier.

The wrappers will also automatically link in certain libraries, and make the include files available, depending on which other modules are loaded. In some cases it tries to do so cleverly, like selecting an MPI, OpenMP, hybrid or sequential option depending on whether the MPI module is loaded and/or OpenMP compiler flag is used. This is the case for:

- The MPI libraries. There is no

mpicc, mpiCC, mpif90, etc. on LUMI. The regular compiler wrappers do the job as soon as the cray-mpich module is loaded. - LibSci and FFTW are linked automatically if the corresponding modules are loaded. So no need to look, e.g., for the BLAS or LAPACK libraries: They will be offered to the linker if the

cray-libsci module is loaded (and it is an example of where the wrappers try to take the right version based not only on compiler, but also on whether MPI is loaded or not and the OpenMP compiler flag). - netCDF and HDF5

It is possible to see which compiler and linker flags the wrappers add through the --craype-verbose flag.

The wrappers do have some flags of their own, but also accept all flags of the selected compiler and simply pass those to those compilers.

"},{"location":"1day-20230509/02_CPE/#selecting-the-version-of-the-cpe","title":"Selecting the version of the CPE","text":"The version numbers of the HPE Cray PE are of the form yy.dd, e.g., 22.08 for the version released in August 2022. There are usually 10 releases per year (basically every month except July and January), though not all versions are ever offered on LUMI.

There is always a default version assigned by the sysadmins when installing the programming environment. It is possible to change the default version for loading further modules by loading one of the versions of the cpe module. E.g., assuming the 22.08 version would be present on the system, it can be loaded through

module load cpe/22.08\n

Loading this module will also try to switch the already loaded PE modules to the versions from that release. This does not always work correctly, due to some bugs in most versions of this module and a limitation of Lmod. Executing the module load twice will fix this: module load cpe/22.08\nmodule load cpe/22.08\n

The module will also produce a warning when it is unloaded (which is also the case when you do a module load of cpe when one is already loaded, as it then first unloads the already loaded cpe module). The warning can be ignored, but keep in mind that what it says is true, it cannot restore the environment you found on LUMI at login. The cpe module is also not needed when using the LUMI software stacks, but more about that later.

"},{"location":"1day-20230509/02_CPE/#the-target-modules","title":"The target modules","text":"The target modules are used to select the CPU and GPU optimization targets and to select the network communication layer.

On LUMI there are three CPU target modules that are relevant:

craype-x86-rome selects the Zen2 CPU family code named Rome. These CPUs are used on the login nodes and the nodes of the data analytics and visualisation partition of LUMI. However, as Zen3 is a superset of Zen2, software compiled to this target should run everywhere, but may not exploit the full potential of the LUMI-C and LUMI-G nodes (though the performance loss is likely minor).craype-x86-milan is the target module for the Zen3 CPUs code named Milan that are used on the CPU-only compute nodes of LUMI (the LUMI-C partition).craype-x86-trento is the target module for the Zen3 CPUs code named Trento that are used on the GPU compute nodes of LUMI (the LUMI-G partition).

Two GPU target modules are relevant for LUMI:

craype-accel-host: Will tell some compilers to compile offload code for the host instead.craype-accel-gfx90a: Compile offload code for the MI200 series GPUs that are used on LUMI-G.

Two network target modules are relevant for LUMI:

craype-network-ofi selects the libfabric communication layer which is needed for Slingshot 11.craype-network-none omits all network specific libraries.

The compiler wrappers also have corresponding compiler flags that can be used to overwrite these settings: -target-cpu, -target-accel and -target-network.

"},{"location":"1day-20230509/02_CPE/#prgenv-and-compiler-modules","title":"PrgEnv and compiler modules","text":"In the HPE Cray PE, the PrgEnv-* modules are usually used to load a specific variant of the programming environment. These modules will load the compiler wrapper (craype), compiler, MPI and LibSci module and may load some other modules also.

The following table gives an overview of the available PrgEnv-* modules and the compilers they activate:

PrgEnv Description Compiler module Compilers PrgEnv-cray Cray Compiling Environment cce craycc, crayCC, crayftn PrgEnv-gnu GNU Compiler Collection gcc gcc, g++, gfortran PrgEnv-aocc AMD Optimizing Compilers(CPU only) aocc clang, clang++, flang PrgEnv-amd AMD ROCm LLVM compilers (GPU support) amd amdclang, amdclang++, amdflang There is also a second module that offers the AMD ROCm environment, rocm. That module has to be used with PrgEnv-cray and PrgEnv-gnu to enable MPI-aware GPU, hipcc with the GNU compilers or GPU support with the Cray compilers.

"},{"location":"1day-20230509/02_CPE/#getting-help","title":"Getting help","text":"Help on the HPE Cray Programming Environment is offered mostly through manual pages and compiler flags. Online help is limited and difficult to locate.

For the compilers and compiler wrappers, the following man pages are relevant:

PrgEnv C C++ Fortran PrgEnv-cray man craycc man crayCC man crayftn PrgEnv-gnu man gcc man g++ man gfortran PrgEnv-aocc/PrgEnv-amd - - - Compiler wrappers man cc man CC man ftn Recently, HPE Cray have also created a web version of some of the CPE documentation.

Some compilers also support the --help flag, e.g., amdclang --help. For the wrappers, the switch -help should be used instead as the double dash version is passed to the compiler.

The wrappers also support the -dumpversion flag to show the version of the underlying compiler. Many other commands, including the actual compilers, use --version to show the version.

For Cray Fortran compiler error messages, the explain command is also helpful. E.g.,

$ ftn\nftn-2107 ftn: ERROR in command line\n No valid filenames are specified on the command line.\n$ explain ftn-2107\n\nError : No valid filenames are specified on the command line.\n\nAt least one file name must appear on the command line, with any command-line\noptions. Verify that a file name was specified, and also check for an\nerroneous command-line option which may cause the file name to appear to be\nan argument to that option.\n

On older Cray systems this used to be a very useful command with more compilers but as HPE Cray is using more and more open source components instead there are fewer commands that give additional documentation via the explain command.

Lastly, there is also a lot of information in the \"Developing\" section of the LUMI documentation.

"},{"location":"1day-20230509/02_CPE/#google-chatgpt-and-lumi","title":"Google, ChatGPT and LUMI","text":"When looking for information on the HPE Cray Programming Environment using search engines such as Google, you'll be disappointed how few results show up. HPE doesn't put much information on the internet, and the environment so far was mostly used on Cray systems of which there are not that many.

The same holds for ChatGPT. In fact, much of the training of the current version of ChatGPT was done with data of two or so years ago and there is not that much suitable training data available on the internet either.

The HPE Cray environment has a command line alternative to search engines though: the man -K command that searches for a term in the manual pages. It is often useful to better understand some error messages. E.g., sometimes Cray MPICH will suggest you to set some environment variable to work around some problem. You may remember that man intro_mpi gives a lot of information about Cray MPICH, but if you don't and, e.g., the error message suggests you to set FI_CXI_RX_MATCH_MODE to either software or hybrid, one way to find out where you can get more information about this environment variable is

man -K FI_CXI_RX_MATCH_MODE\n

"},{"location":"1day-20230509/02_CPE/#other-modules","title":"Other modules","text":"Other modules that are relevant even to users who do not do development:

- MPI:

cray-mpich. - LibSci:

cray-libsci - Cray FFTW3 library:

cray-fftw - HDF5:

cray-hdf5: Serial HDF5 I/O librarycray-hdf5-parallel: Parallel HDF5 I/O library

- NetCDF:

cray-netcdfcray-netcdf-hdf5parallelcray-parallel-netcdf

- Python:

cray-python, already contains a selection of packages that interface with other libraries of the HPE Cray PE, including mpi4py, NumPy, SciPy and pandas. - R:

cray-R

The HPE Cray PE also offers other modules for debugging, profiling, performance analysis, etc. that are not covered in this short version of the LUMI course. Many more are covered in the 4-day courses for developers that we organise several times per year with the help of HPE and AMD.

"},{"location":"1day-20230509/02_CPE/#warning-1-you-do-not-always-get-what-you-expect","title":"Warning 1: You do not always get what you expect...","text":"The HPE Cray PE packs a surprise in terms of the libraries it uses, certainly for users who come from an environment where the software is managed through EasyBuild, but also for most other users.

The PE does not use the versions of many libraries determined by the loaded modules at runtime but instead uses default versions of libraries (which are actually in /opt/cray/pe/lib64 on the system) which correspond to the version of the programming environment that is set as the default when installed. This is very much the behaviour of Linux applications also that pick standard libraries in a few standard directories and it enables many programs build with the HPE Cray PE to run without reconstructing the environment and in some cases to mix programs compiled with different compilers with ease (with the emphasis on some as there may still be library conflicts between other libraries when not using the so-called rpath linking). This does have an annoying side effect though: If the default PE on the system changes, all applications will use different libraries and hence the behaviour of your application may change.

Luckily there are some solutions to this problem.

By default the Cray PE uses dynamic linking, and does not use rpath linking, which is a form of dynamic linking where the search path for the libraries is stored in each executable separately. On Linux, the search path for libraries is set through the environment variable LD_LIBRARY_PATH. Those Cray PE modules that have their libraries also in the default location, add the directories that contain the actual version of the libraries corresponding to the version of the module to the PATH-style environment variable CRAY_LD_LIBRARY_PATH. Hence all one needs to do is to ensure that those directories are put in LD_LIBRARY_PATH which is searched before the default location:

export LD_LIBRARY_PATH=$CRAY_LD_LIBRARY_PATH:$LD_LIBRARY_PATH\n

Small demo of adapting LD_LIBRARY_PATH: An example that can only be fully understood after the section on the LUMI software stacks:

$ module load LUMI/22.08\n$ module load lumi-CPEtools/1.0-cpeGNU-22.08\n$ ldd $EBROOTLUMIMINCPETOOLS/bin/mpi_check\n linux-vdso.so.1 (0x00007f420cd55000)\n libdl.so.2 => /lib64/libdl.so.2 (0x00007f420c929000)\n libmpi_gnu_91.so.12 => /opt/cray/pe/lib64/libmpi_gnu_91.so.12 (0x00007f4209da4000)\n ...\n$ export LD_LIBRARY_PATH=$CRAY_LD_LIBRARY_PATH:$LD_LIBRARY_PATH\n$ ldd $EBROOTLUMIMINCPETOOLS/bin/mpi_check\n linux-vdso.so.1 (0x00007fb38c1e0000)\n libdl.so.2 => /lib64/libdl.so.2 (0x00007fb38bdb4000)\n libmpi_gnu_91.so.12 => /opt/cray/pe/mpich/8.1.18/ofi/gnu/9.1/lib/libmpi_gnu_91.so.12 (0x00007fb389198000)\n ...\n

The ldd command shows which libraries are used by an executable. Only a part of the very long output is shown in the above example. But we can already see that in the first case, the library libmpi_gnu_91.so.12 is taken from opt/cray/pe/lib64 which is the directory with the default versions, while in the second case it is taken from /opt/cray/pe/mpich/8.1.18/ofi/gnu/9.1/lib/ which clearly is for a specific version of cray-mpich. We do provide an experimental module lumi-CrayPath that tries to fix LD_LIBRARY_PATH in a way that unloading the module fixes LD_LIBRARY_PATH again to the state before adding CRAY_LD_LIBRARY_PATH and that reloading the module adapts LD_LIBRARY_PATH to the current value of CRAY_LD_LIBRARY_PATH. Loading that module after loading all other modules should fix this issue for most if not all software.

The second solution would be to use rpath-linking for the Cray PE libraries, which can be done by setting the CRAY_ADD_RPATHenvironment variable:

export CRAY_ADD_RPATH=yes\n

"},{"location":"1day-20230509/02_CPE/#warning-2-order-matters","title":"Warning 2: Order matters","text":"Lmod is a hierarchical module scheme and this is exploited by the HPE Cray PE. Not all modules are available right away and some only become available after loading other modules. E.g.,

cray-fftw only becomes available when a processor target module is loadedcray-mpich requires both the network target module craype-network-ofi and a compiler module to be loadedcray-hdf5 requires a compiler module to be loaded and cray-netcdf in turn requires cray-hdf5

but there are many more examples in the programming environment.

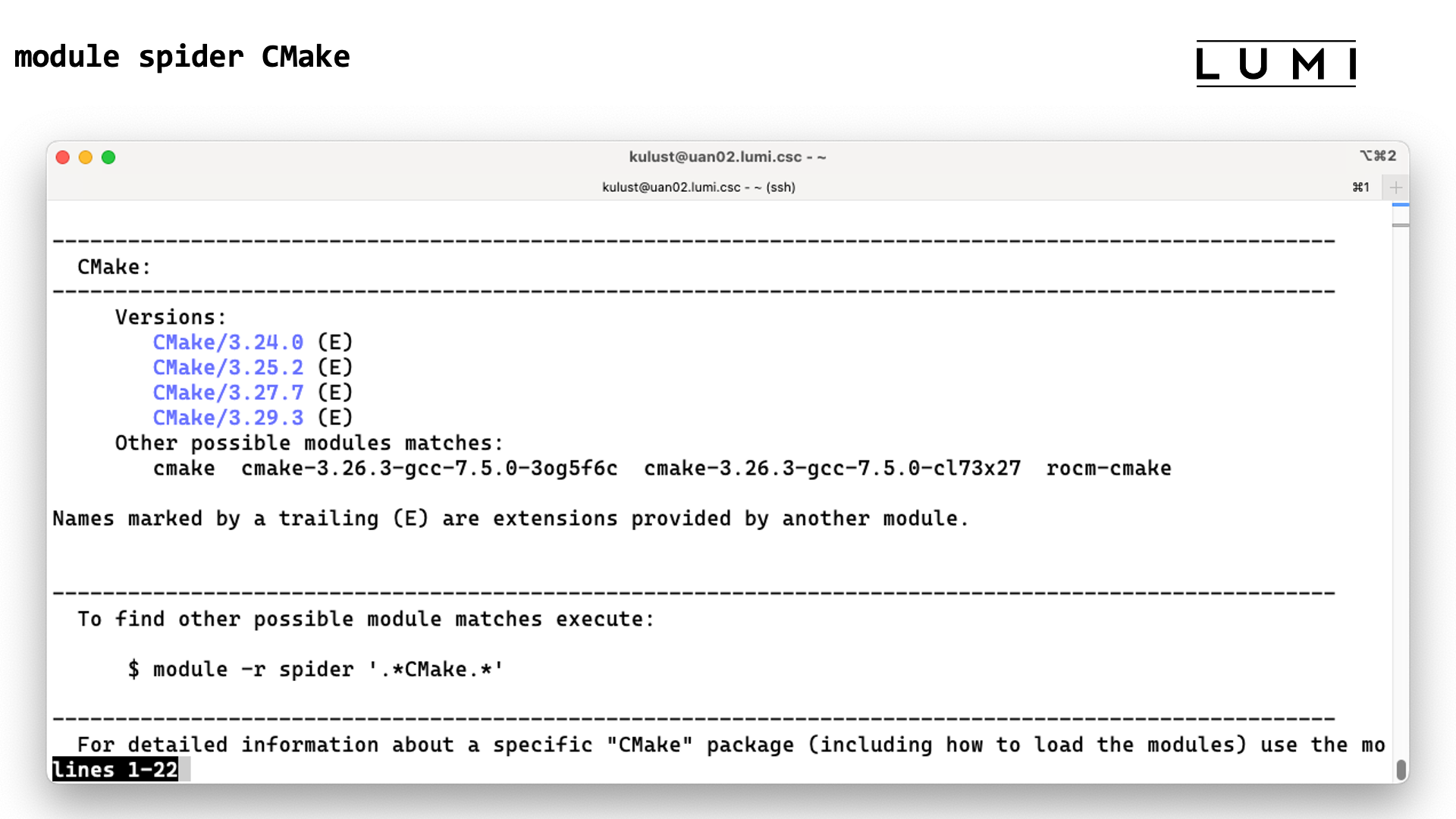

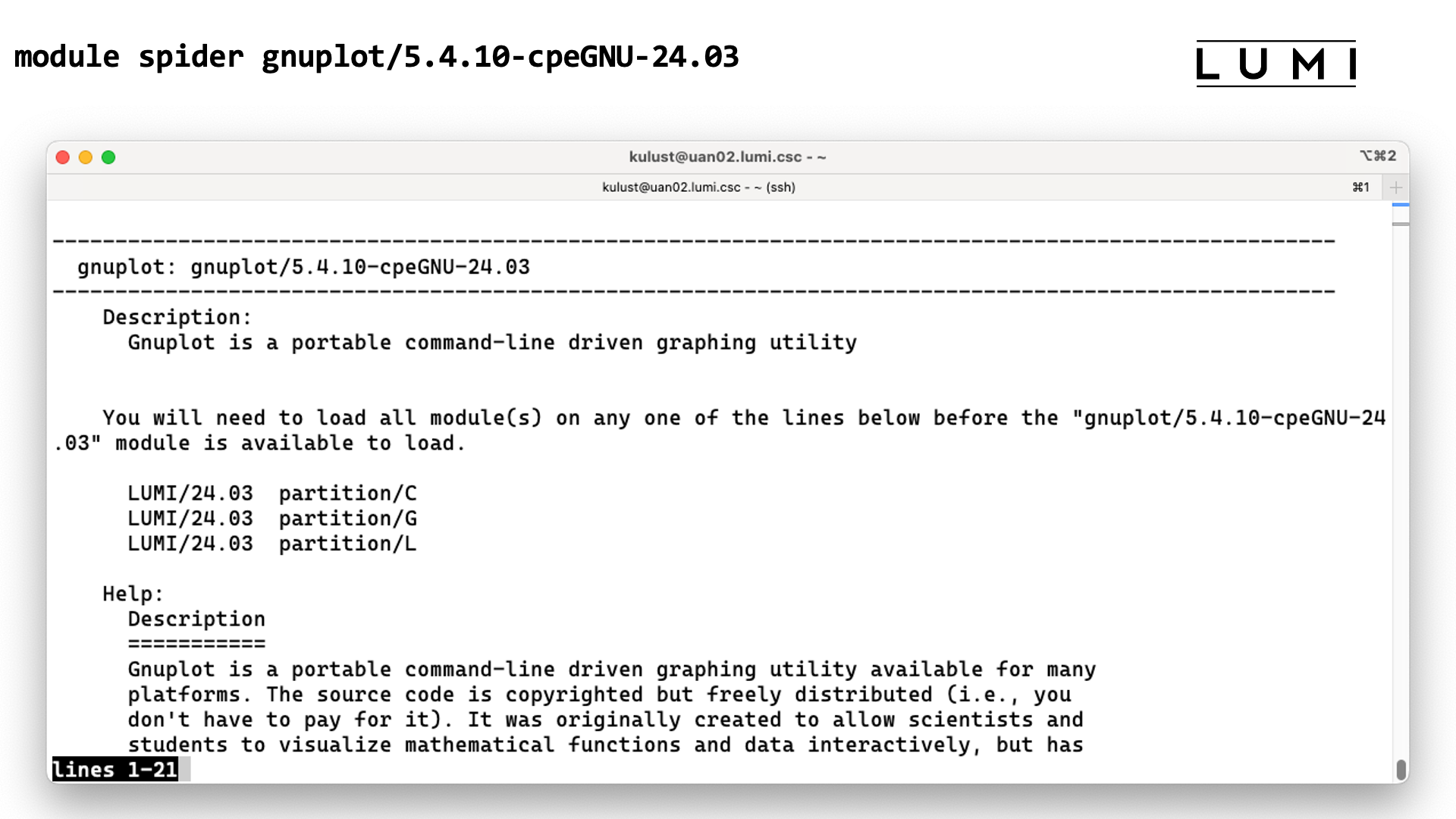

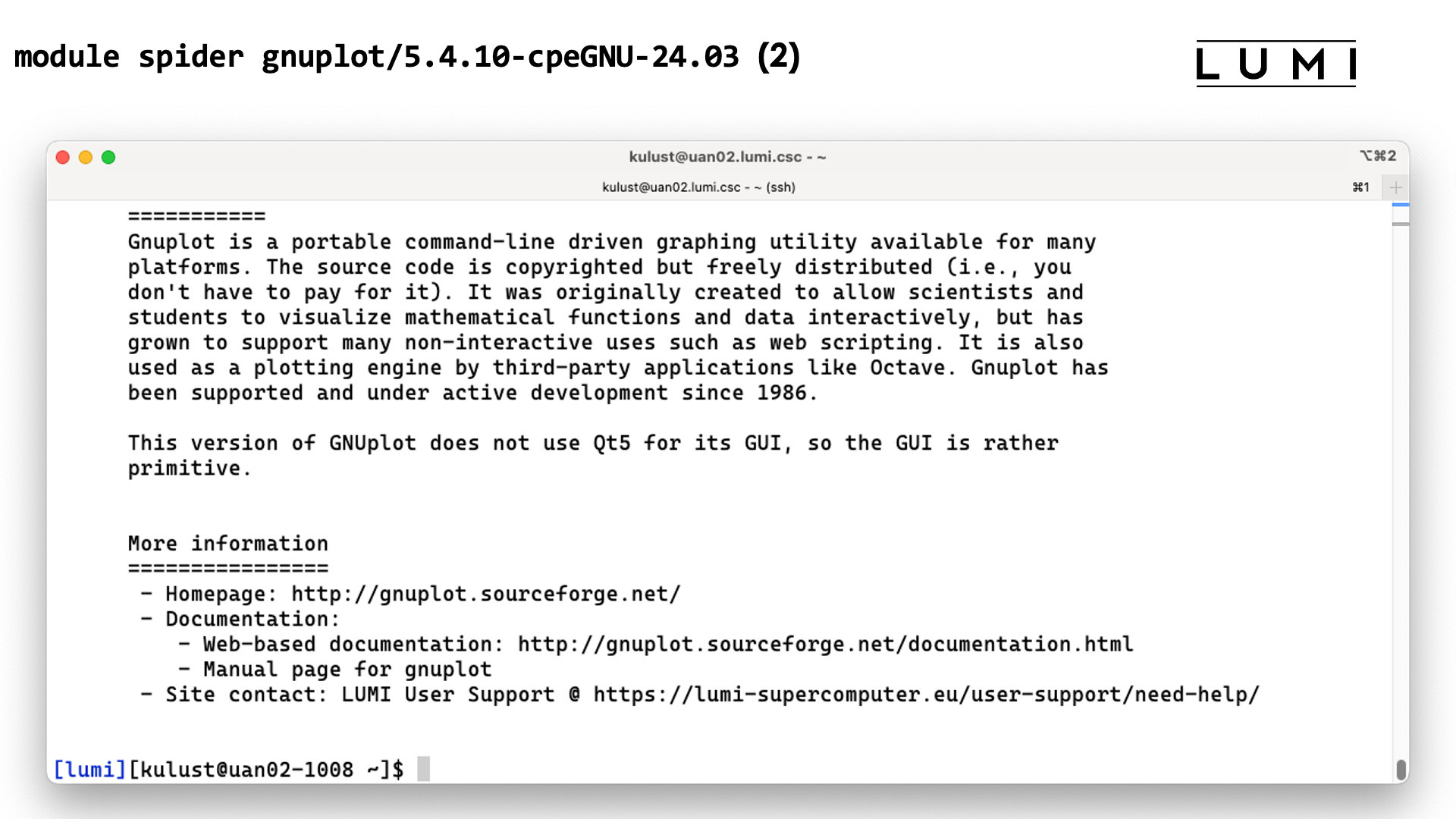

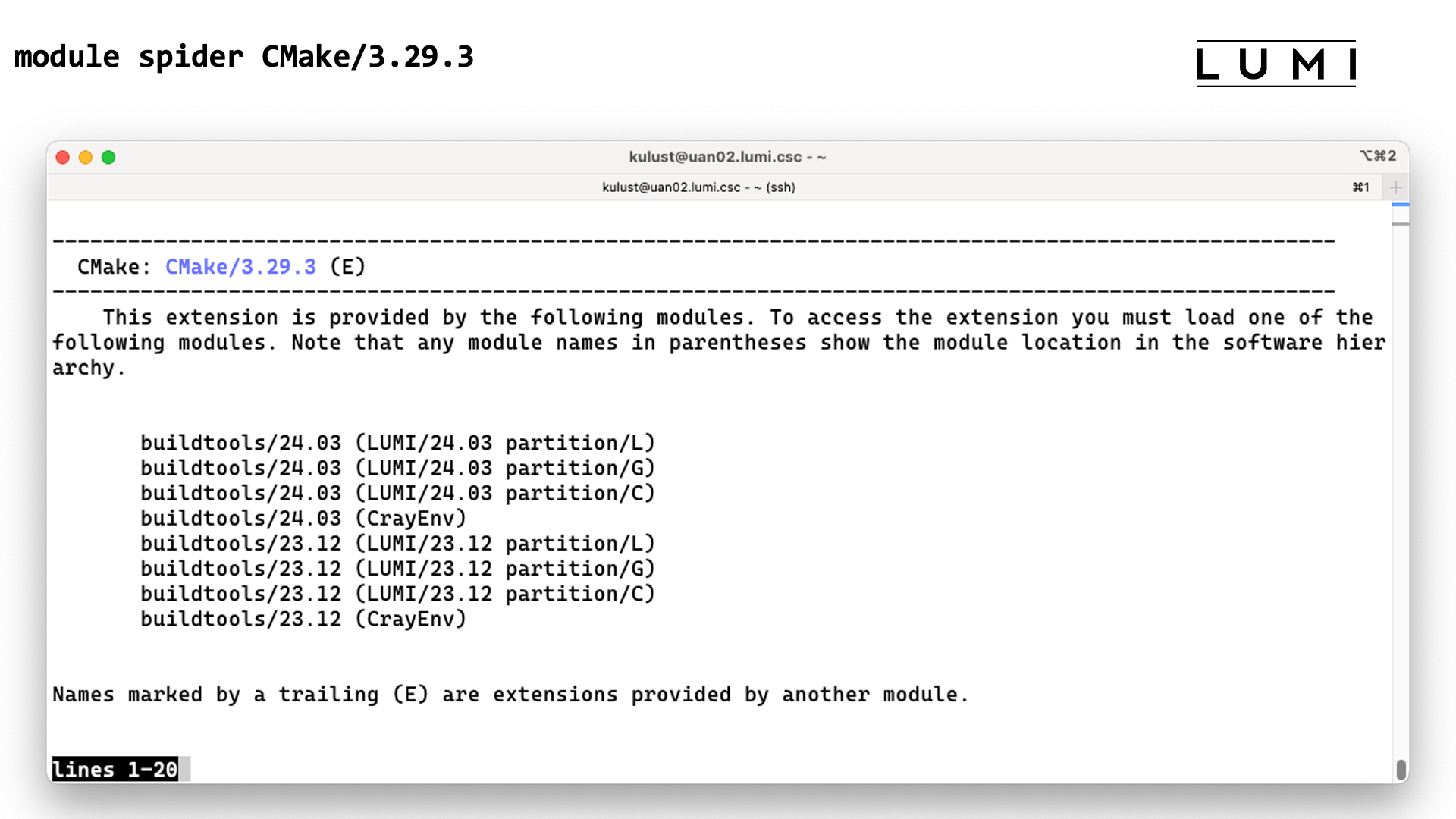

In the next section of the course we will see how unavailable modules can still be found with module spider. That command can also tell which other modules should be loaded before a module can be loaded, but unfortunately due to the sometimes non-standard way the HPE Cray PE uses Lmod that information is not always complete for the PE, which is also why we didn't demonstrate it here.

"},{"location":"1day-20230509/03_Modules/","title":"Modules on LUMI","text":"Intended audience

As this course is designed for people already familiar with HPC systems and as virtually any cluster nowadays uses some form of module environment, this section assumes that the reader is already familiar with a module environment but not necessarily the one used on LUMI.

"},{"location":"1day-20230509/03_Modules/#module-environments","title":"Module environments","text":"Modules are commonly used on HPC systems to enable users to create custom environments and select between multiple versions of applications. Note that this also implies that applications on HPC systems are often not installed in the regular directories one would expect from the documentation of some packages, as that location may not even always support proper multi-version installations and as one prefers to have a software stack which is as isolated as possible from the system installation to keep the image that has to be loaded on the compute nodes small.

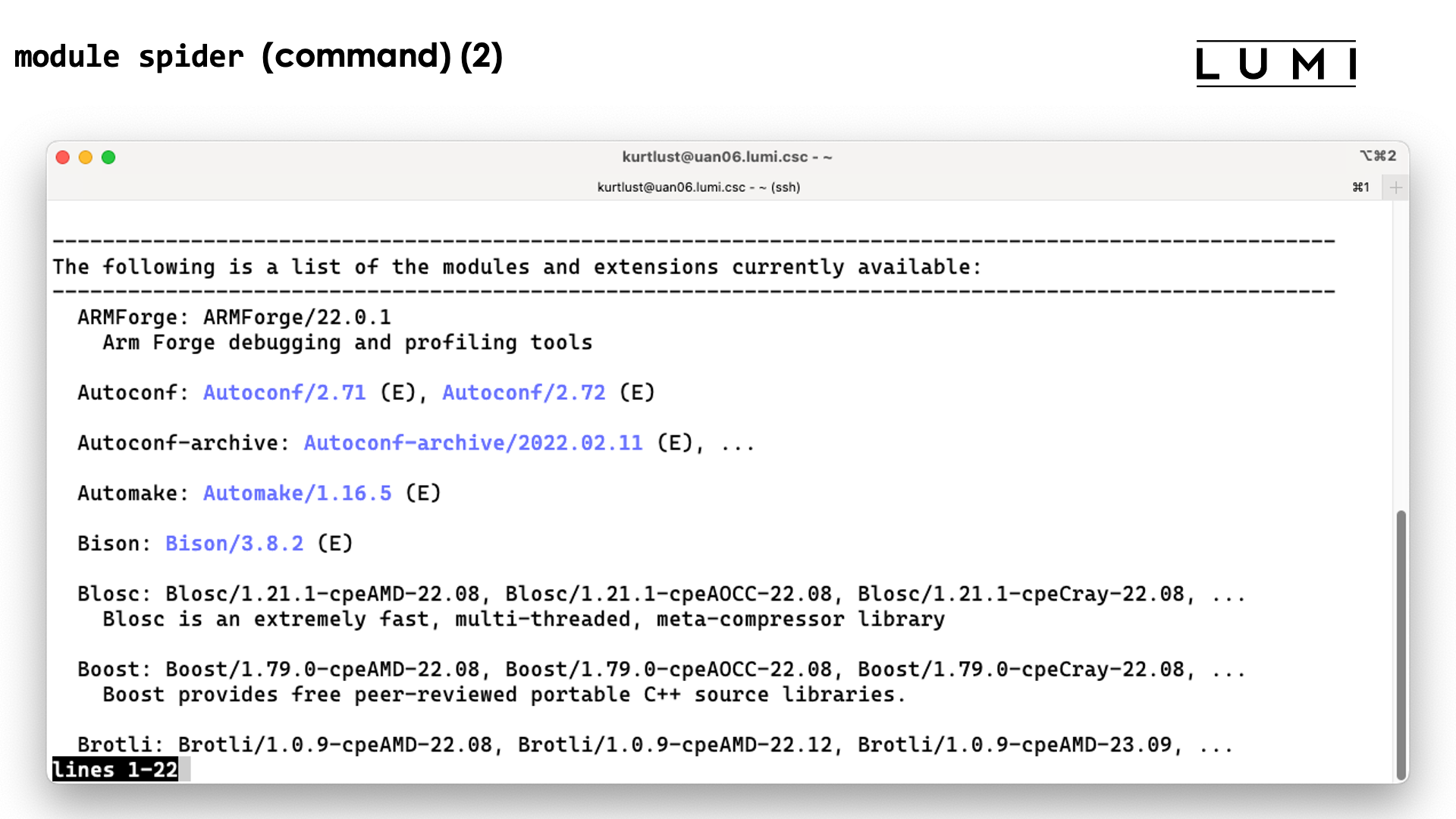

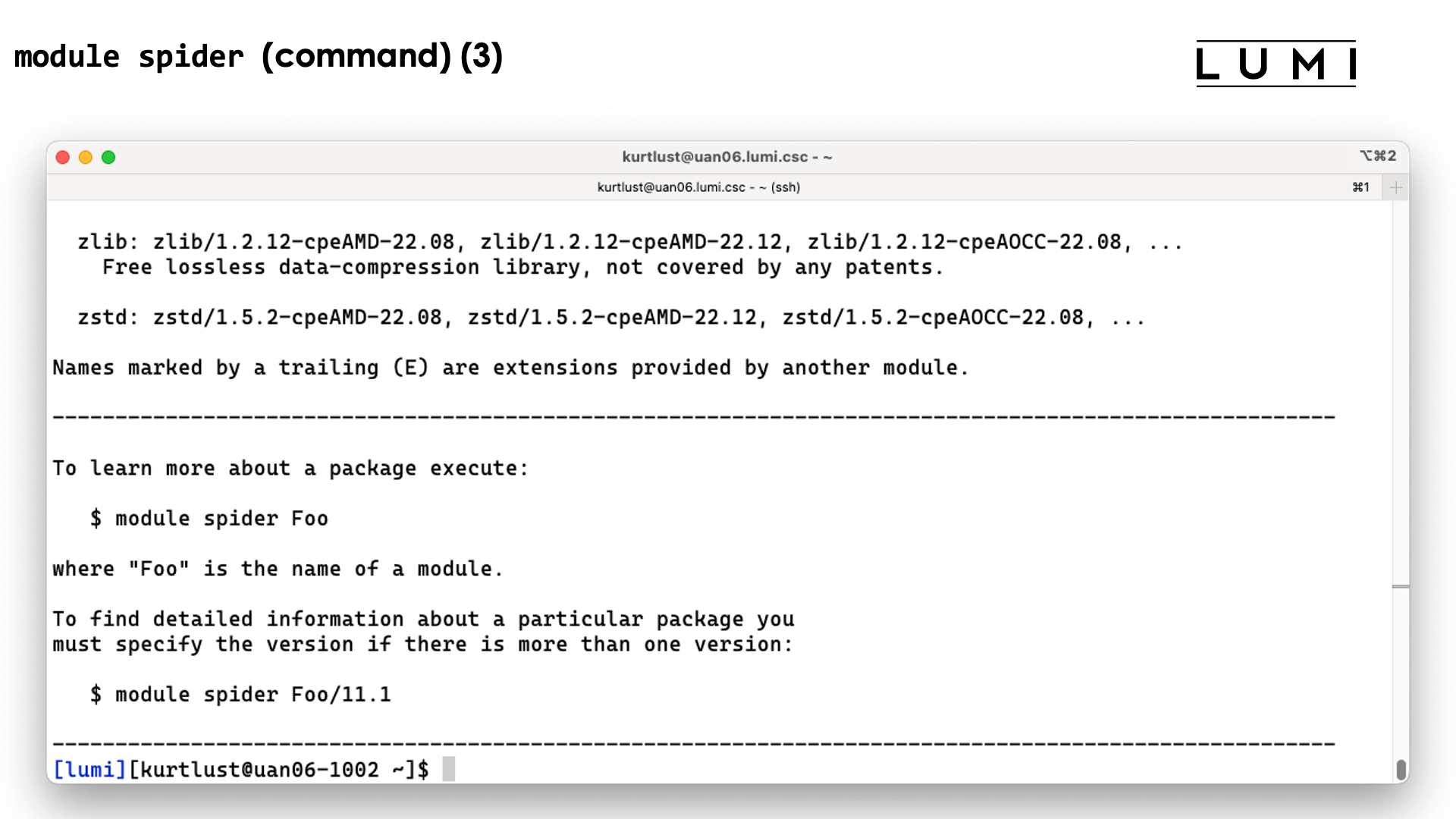

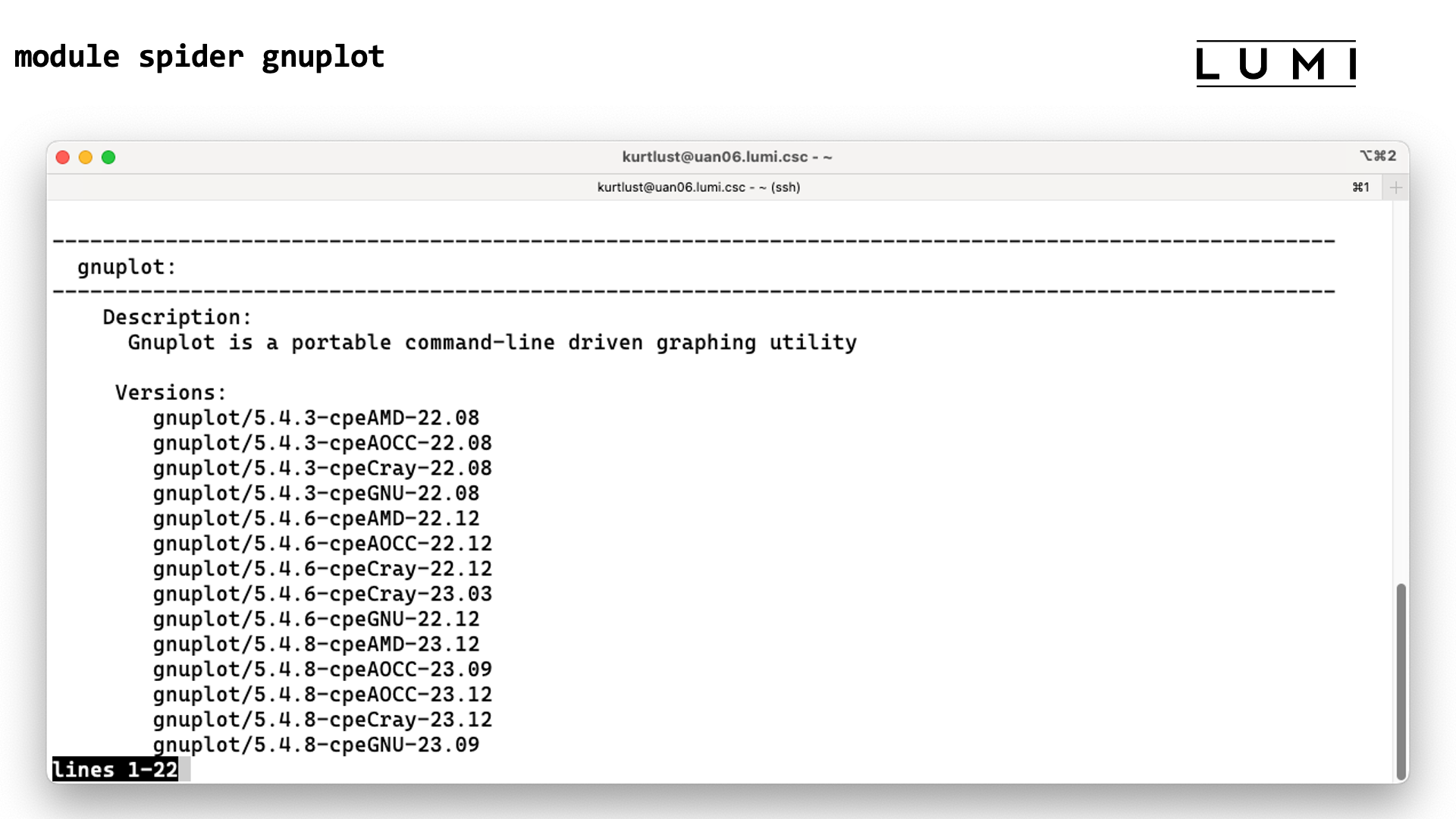

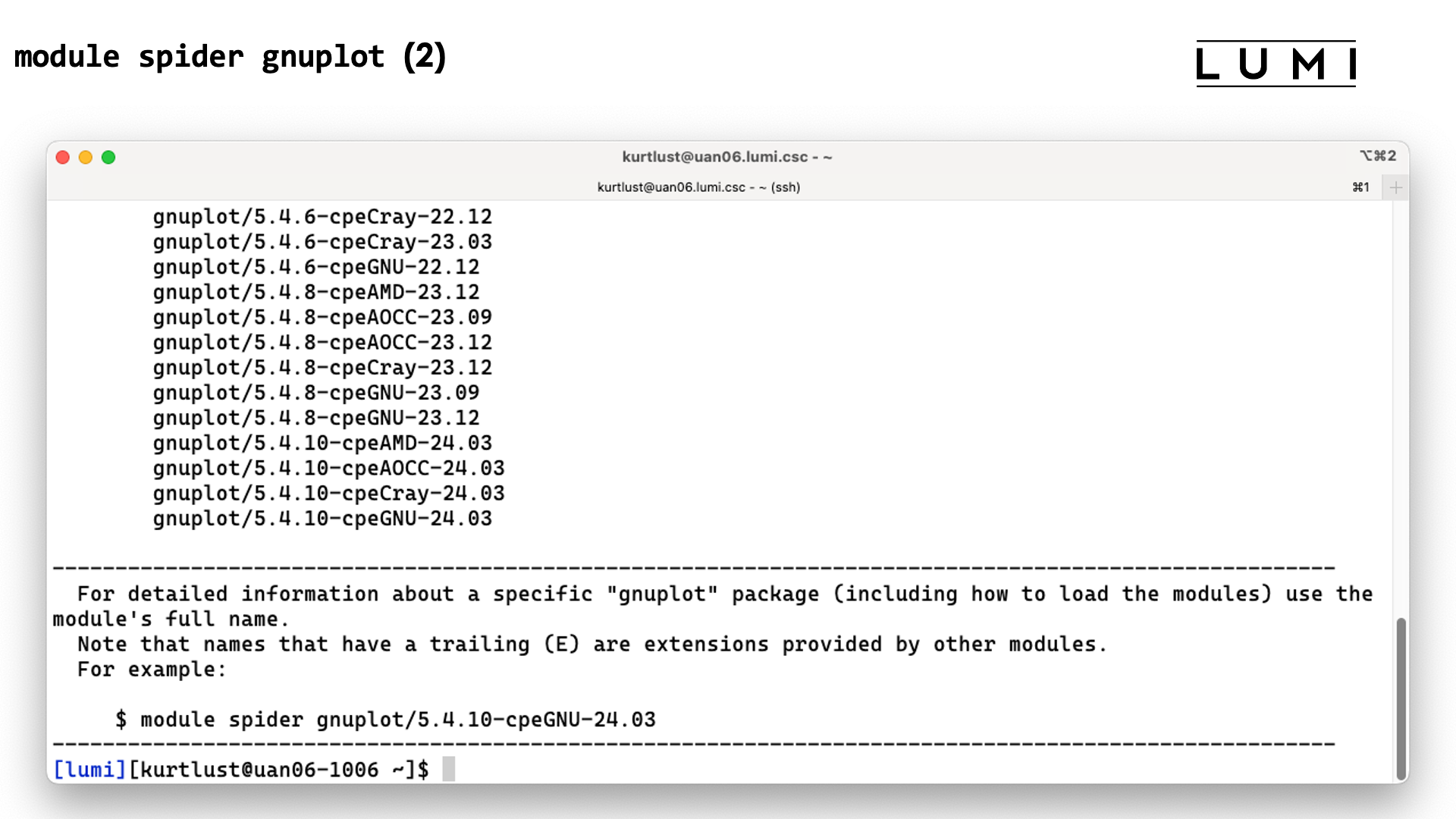

Another use of modules not mentioned on the slide is to configure the programs that is being activated. E.g., some packages expect certain additional environment variables to be set and modules can often take care of that also.