The goal of this project is to create a neural network that can learn how to drive a car by watching how you drive.

Here is a list of my steps to achieve this:

- Use the simulator to collect data for training the network.
- Preprocess the data by cropping and normalizing the images, so that they contain only the relevant information and have the proper shape.
- Design and train the network.
- Test the model, visualize the results, and write a report.
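The cropping and normalization step can be sketched with NumPy as follows. This is a minimal illustration, not the code from model.py. It assumes 160x320 RGB frames from the simulator and uses hypothetical crop margins (70 rows of sky at the top, 25 rows of car hood at the bottom). It also assumes pixels are scaled to the range [-0.5, 0.5].

```python
import numpy as np

# ASSUMPTION: simulator frames are 160x320 RGB images. The crop margins are
# illustrative values and not necessarily the ones used in model.py.
CROP_TOP = 70     # rows of sky/trees removed from the top
CROP_BOTTOM = 25  # rows of car hood removed from the bottom


def preprocess(image: np.ndarray) -> np.ndarray:
    """Crop rows without road information and scale pixels to [-0.5, 0.5]."""
    cropped = image[CROP_TOP:image.shape[0] - CROP_BOTTOM, :, :]
    # Convert uint8 values in [0, 255] to float32 values centred around zero.
    return cropped.astype(np.float32) / 255.0 - 0.5


if __name__ == "__main__":
    frame = np.full((160, 320, 3), 255, dtype=np.uint8)
    out = preprocess(frame)
    print(out.shape, out.max())  # (65, 320, 3) 0.5
```

Cropping before normalizing keeps the network input smaller. Centring the pixel values around zero tends to make training with ReLU layers and BatchNormalization better behaved.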

The submission includes:

- `model.py`
- `drive.py`
- `model.h5`, stored in parts in the `model` folder. GitHub does not accept files larger than 100 MB, so please run `cat model/model.h* > model.h5` to get the original `model.h5` file.
- writeup report (`README.md` or `writeup_report`; they are the same)
- `center.jpg`, `left.jpg`, `right.jpg`: samples from the training set
- `video.mp4`: video result
- `output.gif`
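On systems without `cat`, such as a plain Windows shell, the same reassembly can be done in Python. The sketch below is a hypothetical helper, not part of the submission. Like the shell glob, it assumes that the part files sort into the correct order by name.

```python
import glob
import shutil


def join_parts(pattern: str = "model/model.h*", output: str = "model.h5") -> int:
    """Concatenate the files matching `pattern`, in sorted name order, into `output`.

    Returns the number of parts that were joined.
    """
    parts = sorted(glob.glob(pattern))  # same ordering assumption as `cat model/model.h*`
    if not parts:
        raise FileNotFoundError(f"no files match {pattern!r}")
    with open(output, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)  # stream each part without loading it fully
    return len(parts)


if __name__ == "__main__":
    print(join_parts())
```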

This implementation successfully trains a network that clones the recorded driving behavior.

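Data preprocessing and the model are both defined in `model.py`. For memory efficiency, a generator loads and processes the training data batch by batch instead of holding the whole dataset in memory.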
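The batching idea can be sketched as follows. This is a hypothetical `batch_generator`, not the `generator()` from model.py. It assumes a caller-supplied `load_sample` function (also hypothetical) that turns one sample, such as a row of the driving log, into an `(image, steering_angle)` pair. Only one batch of images is held in memory at a time, and the sample order is reshuffled at the start of every epoch.

```python
import random
from typing import Callable, Iterator, Sequence, Tuple

import numpy as np


def batch_generator(
    samples: Sequence,
    load_sample: Callable,  # hypothetical: sample -> (image, steering_angle)
    batch_size: int = 32,
) -> Iterator[Tuple[np.ndarray, np.ndarray]]:
    """Yield (images, angles) batches indefinitely, reshuffling every epoch."""
    samples = list(samples)  # copy so shuffling does not mutate the caller's list
    num_samples = len(samples)
    while True:  # Keras training generators are expected to loop forever
        random.shuffle(samples)
        for offset in range(0, num_samples, batch_size):
            batch = samples[offset:offset + batch_size]
            images, angles = zip(*(load_sample(s) for s in batch))
            yield np.array(images), np.array(angles)
```

A generator like this can be passed to Keras' `fit_generator` (or to `fit` in newer versions), together with the number of batches per epoch, roughly `ceil(len(samples) / batch_size)`.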

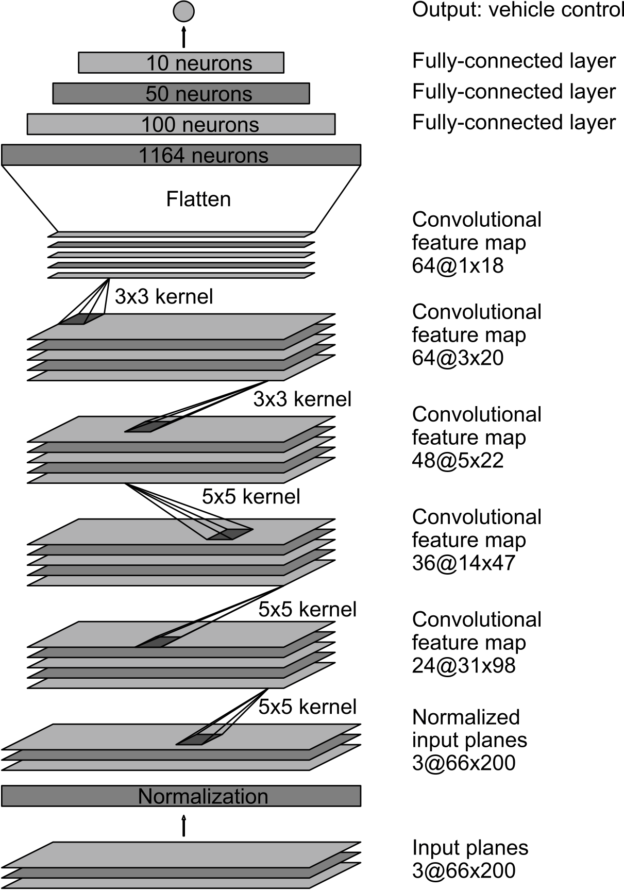

The selected architecture was inspired by NVIDIA's end-to-end deep learning model for self-driving cars: https://devblogs.nvidia.com/deep-learning-self-driving-cars/

Because of computational limitations in the given environment, I had to simplify it slightly. To reduce overfitting and improve learning, I added BatchNormalization and Dropout layers:

Code in `model.py`:

```python
from keras.models import Sequential
from keras.layers import BatchNormalization, Convolution2D, Dense, Dropout, Flatten

model = Sequential()
# input preprocessing (cropping and normalization) is omitted from this excerpt; see model.py

# add Convolution2D layers
model.add(Convolution2D(filters=24, kernel_size=(5, 5), padding='valid', activation='relu'))
model.add(Convolution2D(filters=36, kernel_size=(5, 5), padding='valid', activation='relu'))
model.add(Convolution2D(filters=48, kernel_size=(5, 5), padding='valid', activation='relu'))
model.add(Convolution2D(filters=64, kernel_size=(3, 3), padding='valid', activation='relu'))
model.add(Convolution2D(filters=64, kernel_size=(3, 3), padding='valid', activation='relu'))

# add fully connected layers
model.add(Flatten())
model.add(Dense(100, activation='relu'))
model.add(BatchNormalization())
model.add(Dropout(0.4))
model.add(Dense(50, activation='relu'))
model.add(BatchNormalization())
model.add(Dense(50, activation='relu'))
model.add(Dense(10, activation='relu'))
model.add(BatchNormalization())
model.add(Dense(1))  # single output: the predicted steering angle
```

To split the data into training and validation sets, I used:

```python
from sklearn.model_selection import train_test_split

# hold out 20% of the samples for validation
train_samples, validation_samples = train_test_split(samples, test_size=0.2)
```

The samples are also shuffled in the generator code (see `generator()` in `model.py` for details).

Sample images from the training set:

| Left | Center | Right |
|---|---|---|
| ![left](left.jpg) | ![center](center.jpg) | ![right](right.jpg) |

For every image, I also generated an augmented image by flipping the original one (see the generator in model.py).
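The flip augmentation can be sketched as below. This is an illustration rather than the code from model.py. It assumes the flip is horizontal (left-right) and that the steering angle is negated to match, because a mirrored left curve becomes a right curve. `flip_augment` and `augment_batch` are hypothetical names.

```python
import numpy as np


def flip_augment(image: np.ndarray, angle: float):
    """Return the left-right mirrored image (H x W x C) and the negated steering angle."""
    # np.fliplr reverses axis 1, i.e. the image width for H x W x C arrays.
    return np.fliplr(image), -angle


def augment_batch(images, angles):
    """Append a flipped copy of every (image, angle) pair, doubling the batch size."""
    flipped = [flip_augment(img, a) for img, a in zip(images, angles)]
    out_images = list(images) + [img for img, _ in flipped]
    out_angles = list(angles) + [a for _, a in flipped]
    return np.array(out_images), np.array(out_angles)
```

Besides doubling the amount of data, flipping balances left and right turns. This matters when the training track mostly curves in one direction.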

The resulting video is available here: https://github.com/andriikushch/CarND-Behavioral-Cloning-P3/blob/master/video.mp4

As the video shows, the car completes a full lap without any significant issues.