We turned a MacBook into a touchscreen using only $1 of hardware and a little bit of computer vision. The proof-of-concept, dubbed “Project Sistine” after our recreation of the famous painting in the Sistine Chapel, was prototyped by Anish Athalye, Kevin Kwok, Guillermo Webster, and Logan Engstrom in about 16 hours.

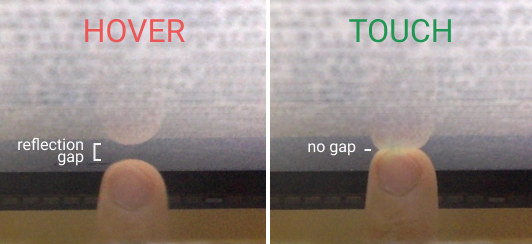

The basic principle behind Sistine is simple. Surfaces viewed from an angle tend to look shiny, and you can tell if a finger is touching the surface by checking if it’s touching its own reflection.
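The touch test described above can be sketched in a few lines. This is only an illustration, assuming the fingertip and the tip of its reflection have already been located in the camera image; the function name, the coordinate convention, and the pixel threshold are hypothetical and not taken from `sistine.py`.

```python
# Hypothetical sketch: the names and the threshold below are illustrative
# assumptions, not values from the Sistine source code.

def classify_finger(finger_tip_y, reflection_tip_y, touch_gap_px=3):
    """Classify a finger as 'touch' or 'hover'.

    finger_tip_y and reflection_tip_y are the vertical image coordinates
    (in pixels) of the fingertip and of the tip of its reflection.
    When the finger meets its reflection, the gap between them closes.
    """
    gap = abs(reflection_tip_y - finger_tip_y)
    return "touch" if gap <= touch_gap_px else "hover"


print(classify_finger(120, 121))  # gap of 1 px  -> touch
print(classify_finger(120, 150))  # gap of 30 px -> hover
```

A real detector would likely need a threshold tuned to the camera angle and lighting; the value 3 here is a placeholder.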

Back in middle school, Kevin noticed this phenomenon and built ShinyTouch, which used an external webcam to provide touch input with virtually no setup. We wanted to see if we could miniaturize the idea and make it work without an external webcam. Our idea was to retrofit a small mirror in front of a MacBook’s built-in webcam, so that the webcam would be looking down at the computer screen at a sharp angle. The camera would be able to see fingers hovering over or touching the screen, and we’d be able to translate the video feed into touch events using computer vision.
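To make the last step concrete, here is a minimal sketch of turning per-frame observations into touch events. It assumes some earlier stage already reports, for each video frame, whether the finger is touching and where; the event names (`down`, `move`, `up`) and the frame tuple layout are hypothetical choices for illustration, not the project's actual interface.

```python
# Hypothetical sketch: the frame format and event names are assumptions
# made for illustration, not the interface used by sistine.py.

def touch_events(frames):
    """Convert (is_touching, x, y) frames into a list of touch events.

    A transition from hover to touch emits 'down', consecutive touching
    frames emit 'move', and a transition back to hover emits 'up'.
    Frames where the finger only hovers emit nothing.
    """
    events = []
    was_touching = False
    for is_touching, x, y in frames:
        if is_touching and not was_touching:
            events.append(("down", x, y))
        elif is_touching and was_touching:
            events.append(("move", x, y))
        elif was_touching and not is_touching:
            events.append(("up", x, y))
        was_touching = is_touching
    return events


frames = [
    (False, 0, 0),    # hovering, no event
    (True, 10, 20),   # finger lands
    (True, 12, 22),   # finger drags
    (False, 12, 22),  # finger lifts
]
print(touch_events(frames))
```

Tracking only the previous frame's state keeps the sketch small; a practical version might also debounce single-frame flickers.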

(Read the rest of our blog post, including a video demo and a high-level explanation of the algorithm, here)


First, make sure you have Homebrew installed on your Mac. If not, you can install it by running

/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

Install Python 2 via Homebrew with

brew install python2

Install OpenCV 3 via Homebrew with

brew install opencv3

Install PyObjC via Pip with

pip2 install pyobjc

Run python2 sistine.py
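If the run step fails with an import error, a quick check like the one below can show which dependency is missing. It is a small helper sketch, not part of the project: `missing_modules` is a hypothetical name, while `cv2` and `objc` are the module names that OpenCV's Python bindings and PyObjC install.

```python
import importlib


def missing_modules(names):
    """Return the module names from `names` that cannot be imported."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # cv2 is OpenCV's Python module; objc is PyObjC's core module.
    print(missing_modules(["cv2", "objc"]))
```

An empty list means both modules import cleanly under the interpreter that ran the check, so run it with the same `python2` used for `sistine.py`.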

Copyright (c) 2016-2018 Anish Athalye, Kevin Kwok, Guillermo Webster, and Logan Engstrom. Released under the MIT License. See LICENSE.md for details.