This is a project for making easy money.

In the era of short videos, whoever controls the traffic controls the money!

So I'm sharing this carefully crafted MoneyPrinterPlus project with everyone.

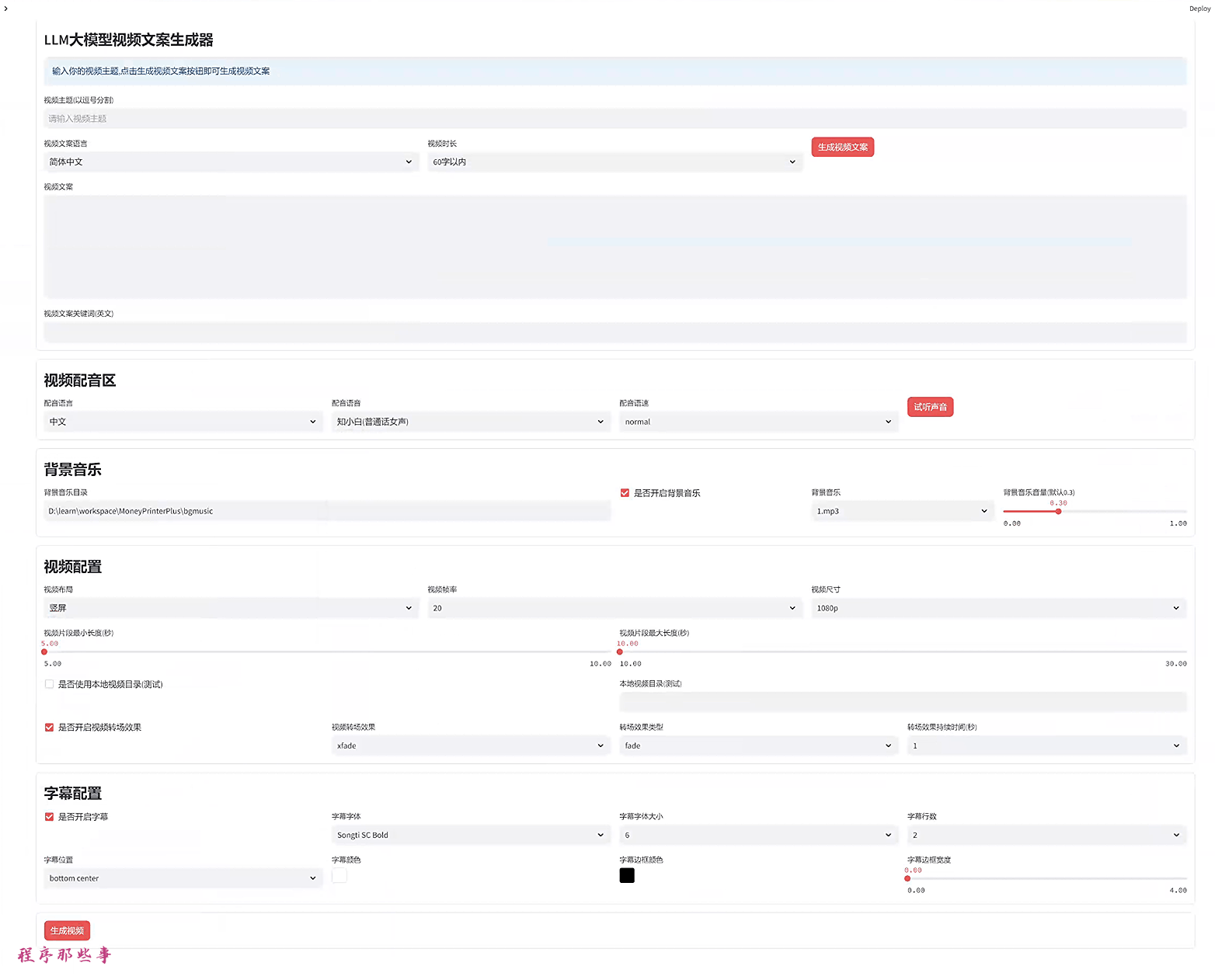

It can use AI large models to generate all kinds of short videos in batches with one click.

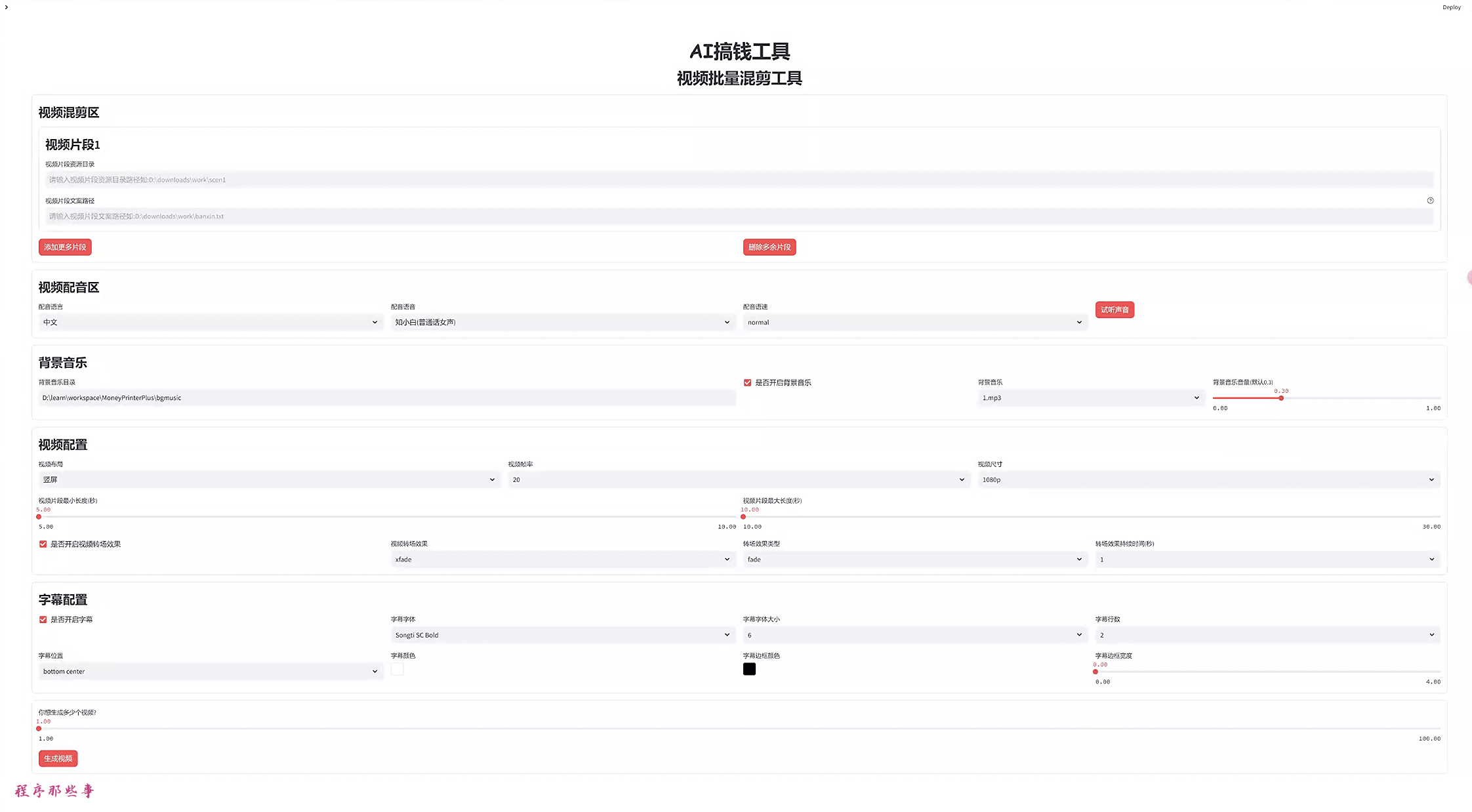

It can batch-edit short videos, making large-scale batch generation of short videos a reality.

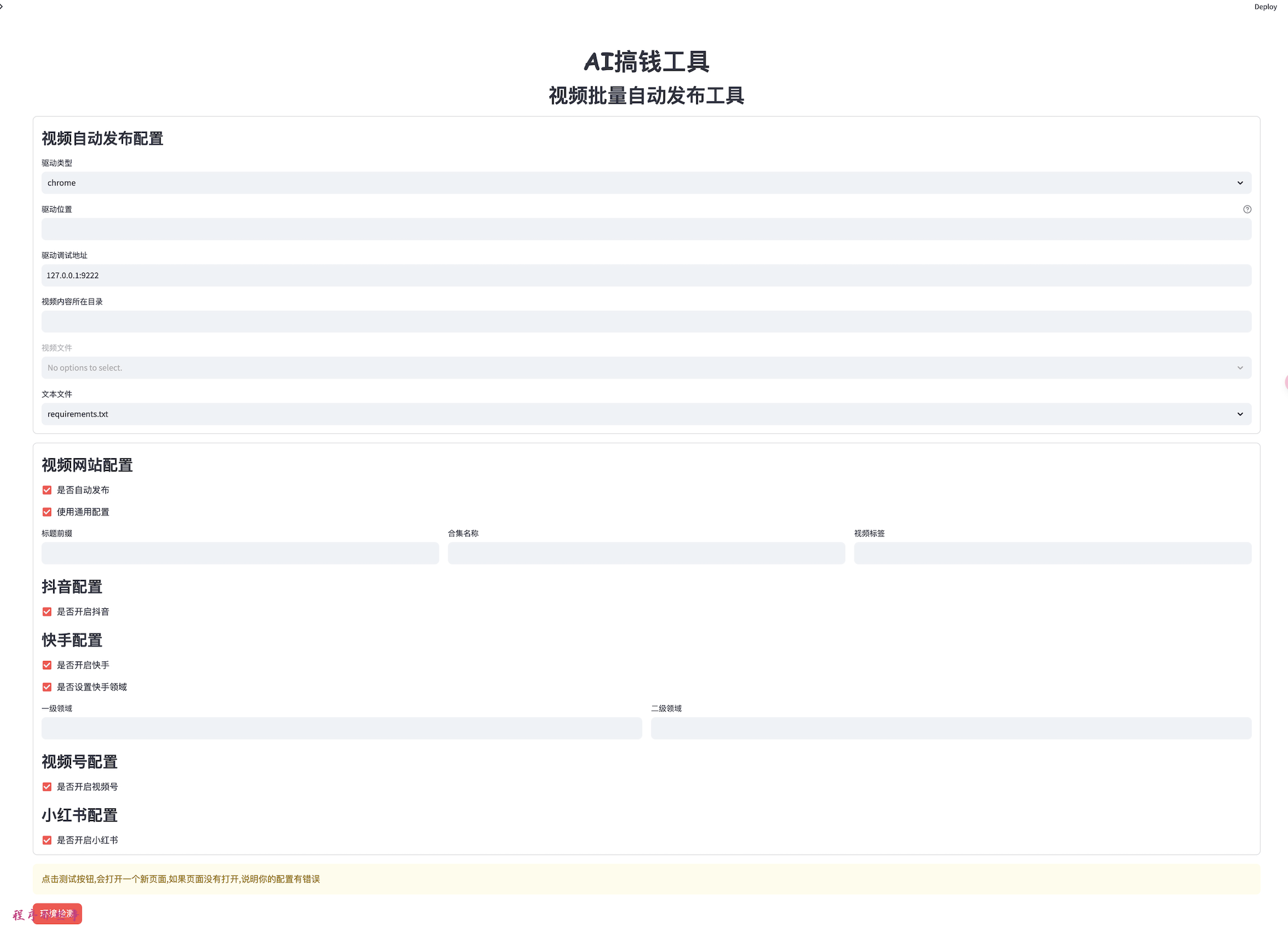

It can automatically publish videos to Douyin, Kuaishou, Xiaohongshu, and WeChat Channels.

Making money has never been easier!

If you find it useful, please give it a star!

MoneyPrinterPlus: Open Source AI Short Video Generation Tool

MoneyPrinterPlus AI Video Tool Detailed Usage Instructions

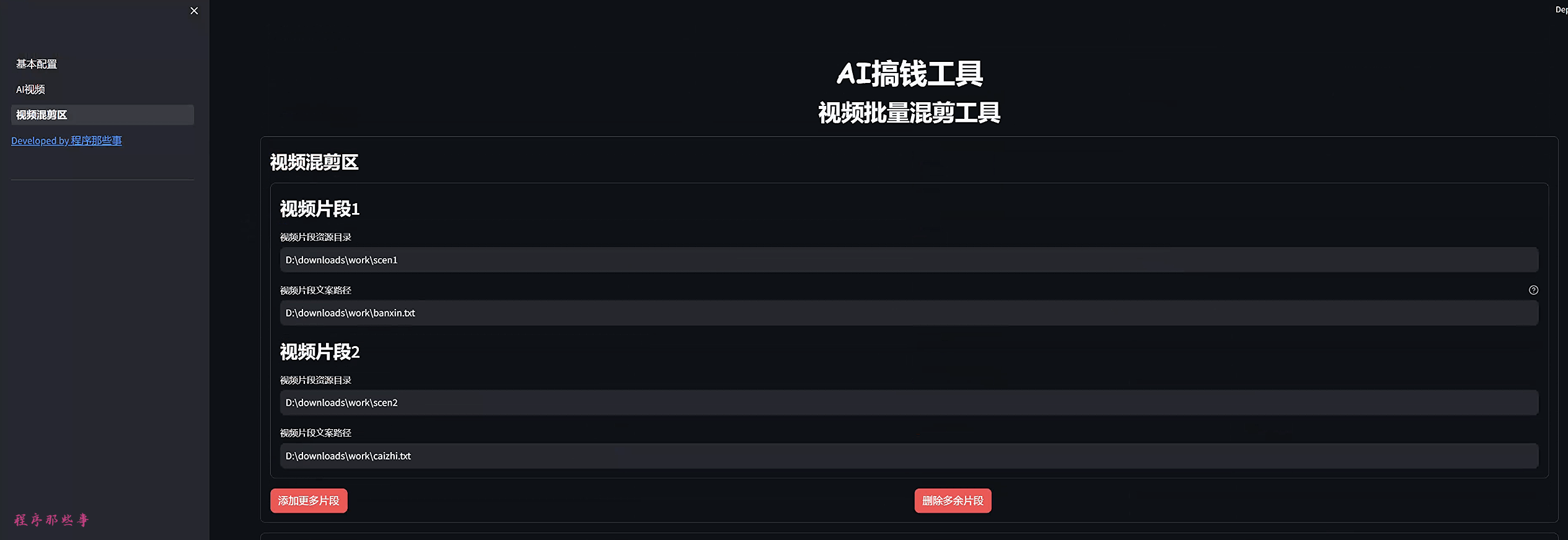

MoneyPrinterPlus AI Batch Short Video Editing Tool Usage Instructions

MoneyPrinterPlus Beginner's Tutorial for Generating Thousands of Short Videos with One Click

MoneyPrinterPlus Detailed Usage Tutorial

MoneyPrinterPlus Alibaba Cloud Detailed Configuration and Usage Tutorial

MoneyPrinterPlus Tencent Cloud Detailed Configuration and Usage Tutorial

MoneyPrinterPlus Microsoft Cloud Detailed Configuration and Usage Tutorial

MoneyPrinterPlus Automatic Environment Configuration and Automatic Running

Usage Introduction: Breaking News! Free One-Click Batch Editing Tool Is Here, Making Thousands of Short Videos a Day a Reality

Usage Introduction: MoneyPrinterPlus Detailed Usage Tutorial

- The automatic video publishing feature is now live! Tutorial: MoneyPrinterPlus One-Click Publishing of Short Videos to WeChat Channels, Douyin, Kuaishou, and Xiaohongshu Is Now Live

- 20240710 Added support for local large models via Ollama.

- 20240708 Unbelievable! Automatic video publishing is now live, supporting Douyin, Kuaishou, Xiaohongshu, and WeChat Channels!

- 20240704 Added automatic installation and startup scripts for easy usage by beginners.

- 20240628 Major update! Added batch video editing for generating large numbers of unique short videos in batches!

- 20240620 Improved video merging so that video endings look more natural.

- 20240619 Speech recognition and synthesis now support Tencent Cloud. You need to enable Tencent Cloud's speech synthesis and speech recognition services.

- 20240615 Speech recognition and synthesis now support Alibaba Cloud. You need to enable Alibaba Cloud's Intelligent Speech Interaction service and activate its speech synthesis and recording file recognition (Express Edition) features.

- 20240614 The resource library now supports Pixabay, voice preview was added, and some bugs were fixed.

- Automatically publish videos to various video platforms: Douyin, Kuaishou, Xiaohongshu, and WeChat Channels!

- Batch video editing, batch generation of a large number of unique short videos

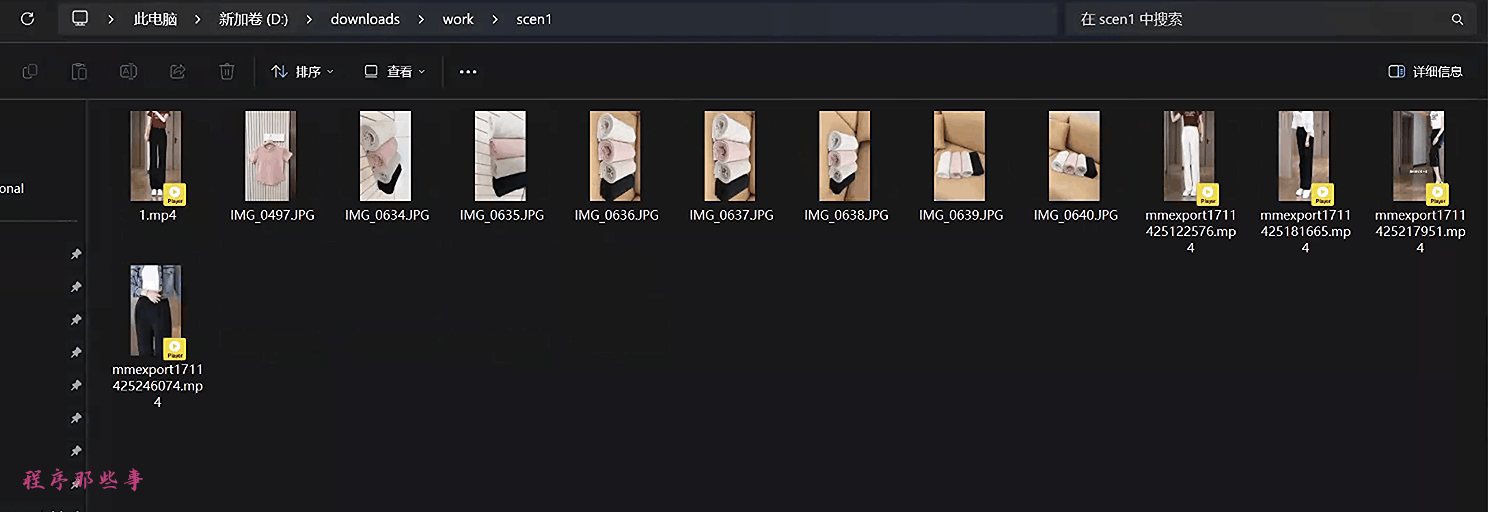

- Supports selecting local materials in various formats (such as mp4, jpg, and png) and at various resolutions.

- Cloud large model integration: OpenAI, Azure, Kimi, Qianfan, Baichuan, Tongyi Qwen, DeepSeek

- Local large model integration: Ollama

- Support for Azure voice features

- Support for Alibaba Cloud voice features

- Support for Tencent Cloud voice features

- Supports 100+ different voice types

- Supports voice preview function

- Supports 30+ video transition effects

- Supports video generation in different resolutions, sizes, and proportions

- Supports voice selection and speed adjustment

- Supports background music

- Supports background music volume adjustment

- Supports custom subtitles

- Covers mainstream AI large model tools on the market
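Local materials mix video clips and still images, so a generator presumably has to handle the two kinds differently (for example, turning each image into a clip of fixed length). A minimal sketch of the first step, sorting material paths by extension; the `classify_materials` helper and the extension sets (including `.jpeg`) are assumptions, not the project's actual code:

```python
from pathlib import Path

# Assumed extension sets: mp4, jpg and png come from the feature list above;
# .jpeg is added as an assumption.
VIDEO_EXTS = {".mp4"}
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def classify_materials(paths):
    """Split material paths into (videos, images, skipped) by file extension."""
    videos, images, skipped = [], [], []
    for path in map(Path, paths):
        ext = path.suffix.lower()  # case-insensitive: "b.JPG" counts as an image
        if ext in VIDEO_EXTS:
            videos.append(path.name)
        elif ext in IMAGE_EXTS:
            images.append(path.name)
        else:
            skipped.append(path.name)
    return videos, images, skipped


print(classify_materials(["clips/a.mp4", "b.JPG", "c.png", "notes.txt"]))
# (['a.mp4'], ['b.JPG', 'c.png'], ['notes.txt'])
```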

- [ ] Support for a local speech recognition model for subtitles

- [ ] Support for more ways to obtain video resources

- [ ] Support for more video transition effects

- [ ] Support for more subtitle effects

- [ ] Integration of Stable Diffusion for AI image generation and video synthesis

- [ ] Integration of Sora and other AI video models for automatic video generation

| Portrait | Landscape | Square |
|---|---|---|
| final-1718158522826.mp4 | final-1718160166012.mp4 | final-1718160533551.mp4 |

- Python 3.10+

- ffmpeg 6.0+

- An LLM API key

- Azure voice service (https://speech.microsoft.com/portal)

- Or Alibaba Cloud Intelligent Speech Interaction (https://nls-portal.console.aliyun.com/overview)

- Or Tencent Cloud voice technology (https://console.cloud.tencent.com/asr)

Make sure ffmpeg is installed and its directory is added to the PATH environment variable.

- Make sure you have a running environment with Python 3.10+. If you are using Windows, ensure that the Python path is added to the PATH.

- Make sure you have a running environment with ffmpeg 6.0+. If you are using Windows, ensure that the ffmpeg path is added to the PATH. If ffmpeg is not installed, please install the corresponding version from https://ffmpeg.org/.
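To confirm both requirements before installing, a small check script can help. This is a minimal sketch, not part of the project: it assumes `ffmpeg -version` prints a first line such as `ffmpeg version 6.1.1 ...`, and it treats development snapshots without a numeric version as failing the check.

```python
import re
import shutil
import subprocess
import sys


def python_ok(min_version=(3, 10)):
    """True if the running interpreter is at least min_version."""
    return sys.version_info[:2] >= min_version


def parse_ffmpeg_version(first_line):
    """Return (major, minor) from a line like 'ffmpeg version 6.1.1 ...', else None.

    Git snapshot builds ('ffmpeg version N-...') have no numeric version and yield None.
    """
    m = re.match(r"ffmpeg version n?(\d+)\.(\d+)", first_line)
    return (int(m.group(1)), int(m.group(2))) if m else None


def ffmpeg_ok(min_version=(6, 0)):
    """True if an ffmpeg executable is found on PATH and reports >= min_version."""
    exe = shutil.which("ffmpeg")
    if exe is None:
        return False  # not installed, or its folder is not on PATH
    result = subprocess.run([exe, "-version"], capture_output=True, text=True)
    lines = result.stdout.splitlines()
    version = parse_ffmpeg_version(lines[0]) if lines else None
    return version is not None and version >= min_version


if __name__ == "__main__":
    print("Python 3.10+:", python_ok())
    print("ffmpeg 6.0+ on PATH:", ffmpeg_ok())
```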

If you have the Python and ffmpeg environments set up, you can install the required packages using pip:

```bash
pip install -r requirements.txt
```

Alternatively, use the automatic installation script: navigate to the project directory and double-click setup.bat on Windows. On Mac or Linux, execute:

```bash
bash setup.sh
```

Use the following command to run the program:

```bash
streamlit run gui.py
```

If you used the automatic installation script, start the program with the provided start script instead. On Windows, double-click start.bat. On Mac or Linux, execute:

```bash
bash start.sh
```

The log file contains information about the program's execution, including the URL for opening the program in a web browser.
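If the URL scrolls past, it can be pulled out of the log text. A minimal sketch, assuming the log contains Streamlit's usual startup lines (`Local URL: ...`, `Network URL: ...`); the log file's name and location are not stated here, so the hypothetical `find_app_urls` helper takes the text directly, and the sample log below is made up:

```python
import re

# Matches Streamlit's startup lines, e.g. "  Local URL: http://localhost:8501"
URL_LINE = re.compile(r"(Local|Network|External) URL:\s*(\S+)")


def find_app_urls(log_text):
    """Map 'Local' / 'Network' / 'External' to the URL printed in the log text."""
    return dict(URL_LINE.findall(log_text))


sample_log = """
  You can now view your Streamlit app in your browser.

  Local URL: http://localhost:8501
  Network URL: http://192.168.1.5:8501
"""
print(find_app_urls(sample_log)["Local"])  # http://localhost:8501
```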

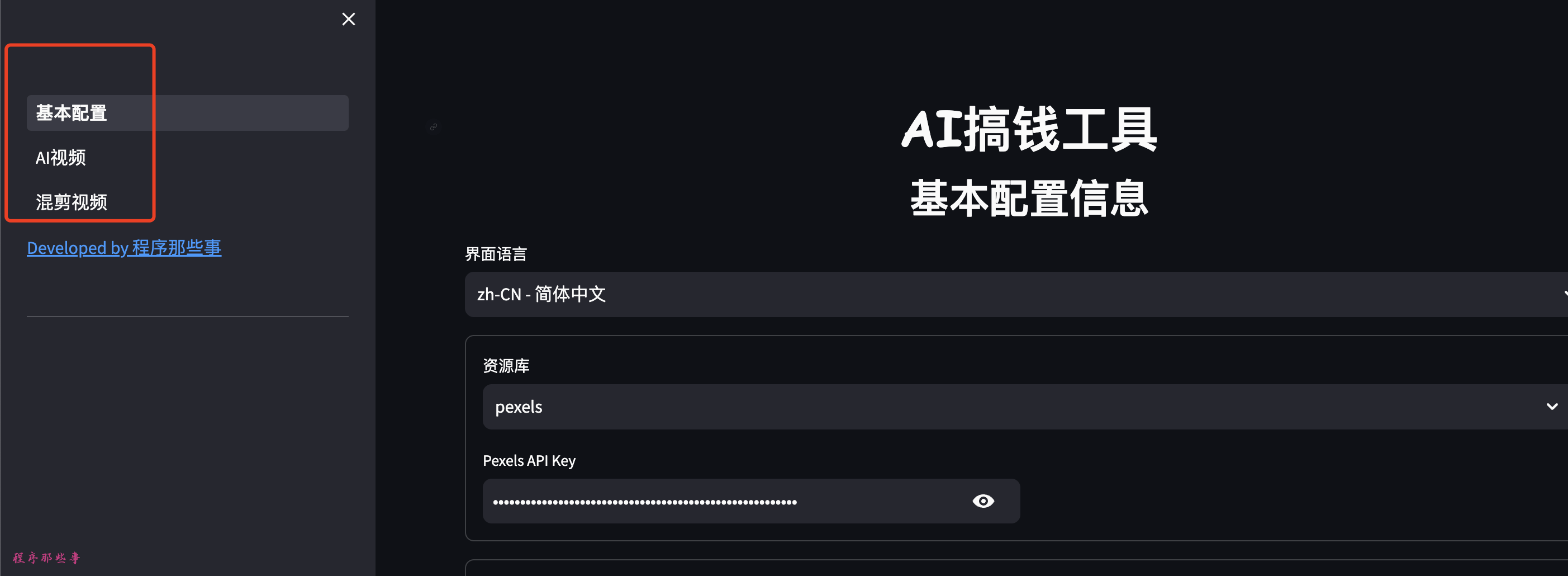

Upon opening the URL in a web browser, you will see the following interface:

The left sidebar contains three configurations: Basic Configuration, AI Video, and Batch Video (under development).

Currently supported resources:

- Pexels (www.pexels.com): a well-known website for free images and video footage.

- Pixabay (pixabay.com)

You need to register on the corresponding website for an API key before the tool can use its API.

Other resource libraries will be added in the future, such as videvo.net, videezy.com, etc.
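Both libraries expose a video search API. A minimal sketch of building search requests with the standard library, assuming the public endpoints `https://api.pexels.com/videos/search` (key sent in the `Authorization` header) and `https://pixabay.com/api/videos/` (key sent as the `key` query parameter); the helper names are hypothetical, and how MoneyPrinterPlus itself calls these APIs is not shown in this README:

```python
from urllib.parse import urlencode
from urllib.request import Request

PEXELS_VIDEO_SEARCH = "https://api.pexels.com/videos/search"
PIXABAY_VIDEO_SEARCH = "https://pixabay.com/api/videos/"


def pexels_request(api_key, query, per_page=10, orientation=None):
    """Build a Pexels video-search request; the key goes in the Authorization header."""
    params = {"query": query, "per_page": per_page}
    if orientation:  # Pexels accepts "landscape", "portrait" or "square"
        params["orientation"] = orientation
    return Request(f"{PEXELS_VIDEO_SEARCH}?{urlencode(params)}",
                   headers={"Authorization": api_key})


def pixabay_request(api_key, query, per_page=10):
    """Build a Pixabay video-search request; the key goes in the query string."""
    params = {"key": api_key, "q": query, "per_page": per_page}
    return Request(f"{PIXABAY_VIDEO_SEARCH}?{urlencode(params)}")
```

Passing either request to `urllib.request.urlopen` returns a JSON response; check each site's API documentation for rate limits and response fields.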

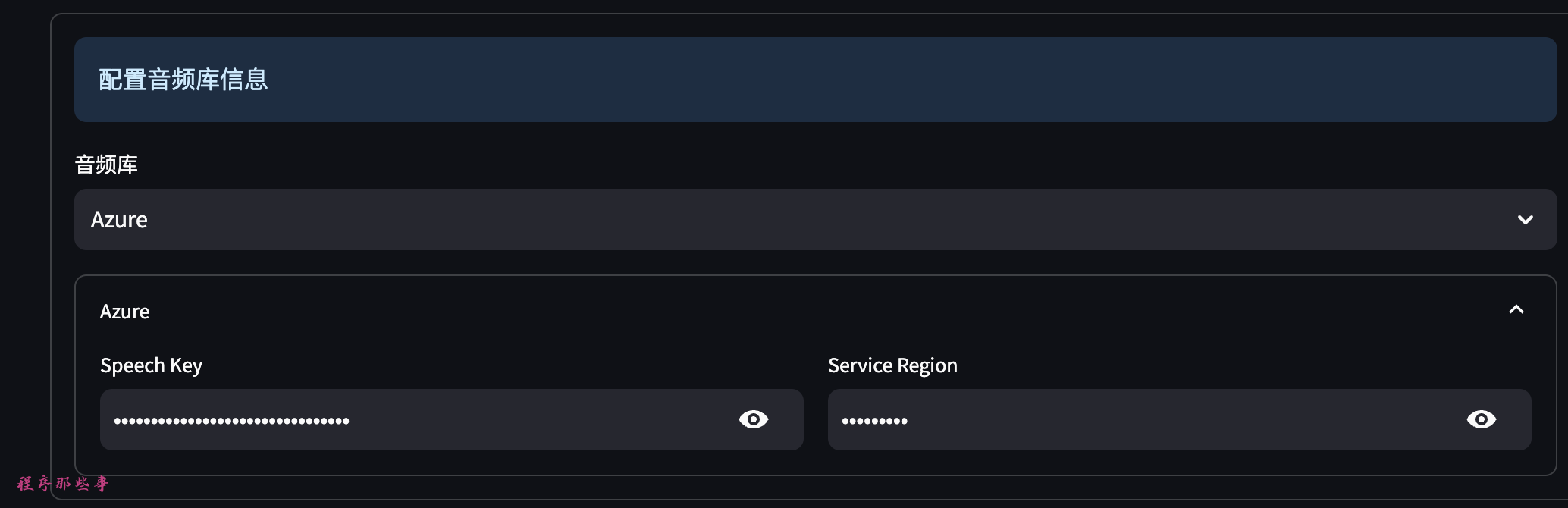

The text-to-speech and speech recognition functions currently support:

- Azure Cognitive Services

- Alibaba Cloud's Intelligent Speech Interaction

- Tencent Cloud's voice technology (https://console.cloud.tencent.com/asr)

- Azure: Register for a key at https://speech.microsoft.com/portal. Azure offers new users one year of free service, and its pricing is reasonable.

- Alibaba Cloud: You will need to sign up at https://nls-portal.console.aliyun.com/overview and create a project. You must enable Alibaba Cloud's Intelligent Speech Interaction feature and activate the speech synthesis and recording file recognition (Express Edition) functions.

- Tencent Cloud: You will need to sign up at https://console.cloud.tencent.com/asr and enable the speech recognition and speech synthesis functions.

Of these, Microsoft Azure currently offers the best text-to-speech quality.
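Speech recognition is presumably what lets the tool align subtitles with the synthesized voiceover, since recognition results typically carry per-sentence start and end times. Assuming those segments arrive as `(start_ms, end_ms, text)` tuples (each provider's actual response format is not shown here), turning them into an SRT subtitle file can be sketched as:

```python
def srt_timestamp(ms):
    """Format a millisecond offset as an SRT timestamp, HH:MM:SS,mmm."""
    hours, rem = divmod(int(ms), 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1_000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def to_srt(segments):
    """Render (start_ms, end_ms, text) tuples as numbered SRT cues."""
    cues = []
    for index, (start, end, text) in enumerate(segments, start=1):
        cues.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(cues)


print(to_srt([(0, 1500, "Hello"), (1500, 3200, "and welcome")]))
```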

The large model section currently supports Moonshot, OpenAI, Azure OpenAI, Baidu Qianfan, Baichuan, Tongyi Qwen, DeepSeek, and others.

Moonshot API access: https://platform.moonshot.cn/

Baidu Qianfan API access: https://cloud.baidu.com/doc/WENXINWORKSHOP/s/yloieb01t

Baichuan API access: https://platform.baichuan-ai.com/

Alibaba Cloud Tongyi Qwen API access: https://help.aliyun.com/document_detail/611472.html?spm=a2c4g.2399481.0.0

DeepSeek API access: https://www.deepseek.com/

After setting up the basic configuration, you can proceed to the AI video section.

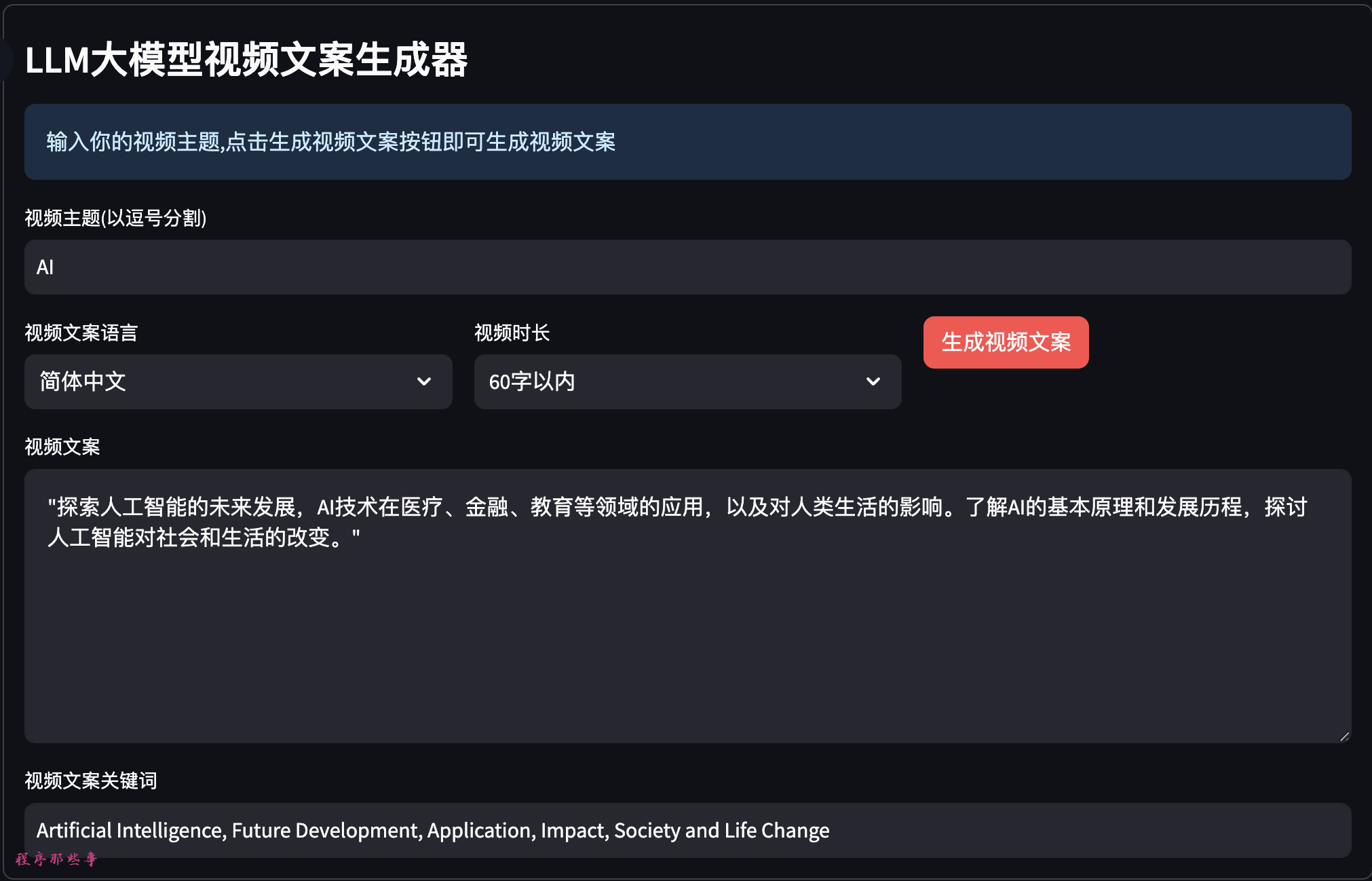

First, enter a keyword and let the large model generate the video script:

You can choose the language and length of the video script. If you are not satisfied with the generated script or keywords, you can edit them manually.
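Several of the listed providers (for example Moonshot and DeepSeek) offer OpenAI-compatible chat endpoints, so script generation can be sketched as a single chat-completions payload. The prompt wording, the `build_script_request` helper, and the example model name are hypothetical; the project's actual prompt is not shown in this README:

```python
import json

# Hypothetical system prompt; MoneyPrinterPlus' real prompt is not documented here.
SYSTEM_PROMPT = "You write voiceover scripts for short videos. Reply with the script text only."


def build_script_request(model, keyword, language="English", seconds=60):
    """Build an OpenAI-style chat-completions payload asking for a short-video script."""
    user_prompt = (
        f"Write a short-video voiceover script about '{keyword}' in {language}. "
        f"It should take about {seconds} seconds to read aloud."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_prompt},
        ],
    }


payload = build_script_request("gpt-4o-mini", "coffee", seconds=30)
print(json.dumps(payload, indent=2))
```

The payload is sent as the JSON body of a POST to the provider's `/chat/completions` endpoint together with your API key; base URLs and model names differ per provider.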

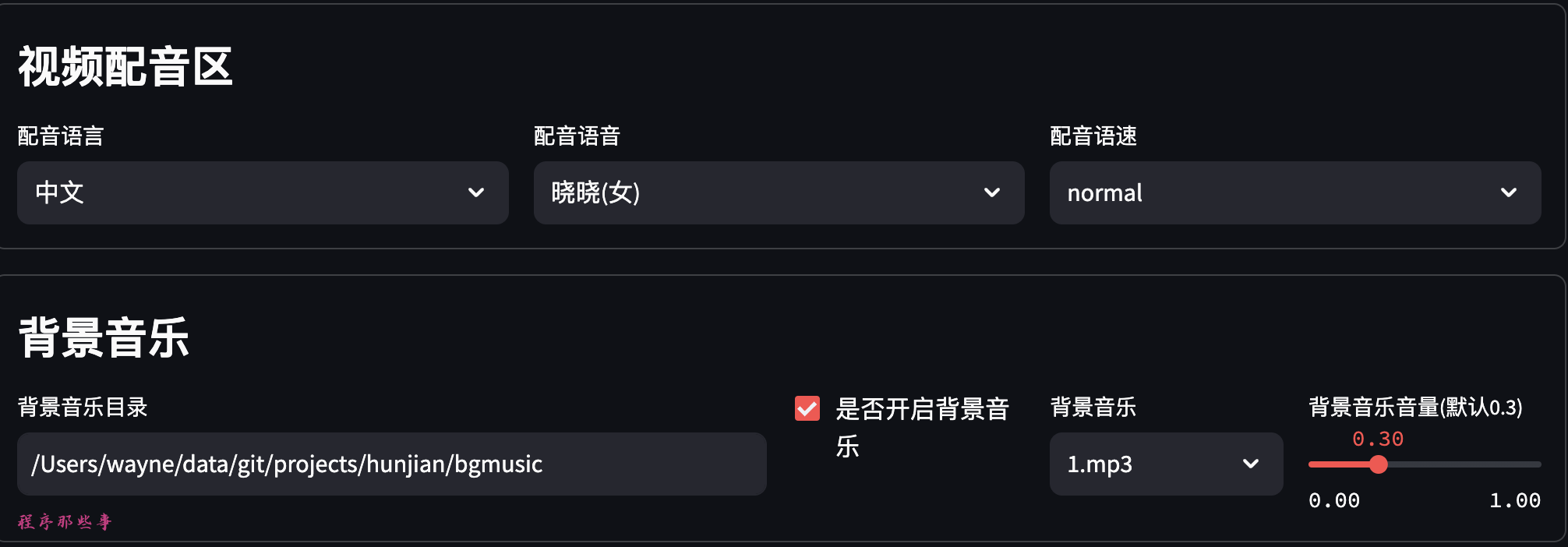

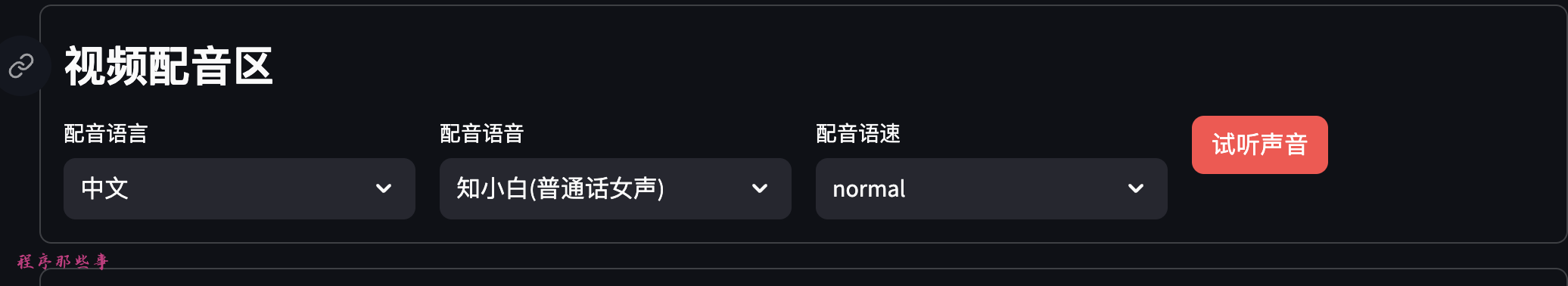

You can choose the language and voice for the voiceover.

You can also adjust the voice speed.

Voice preview function will be supported in the future.
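Voice choice and speed map naturally onto SSML, the W3C markup that Azure Speech accepts: a `<voice>` element picks the voice and `<prosody rate>` sets a relative speed. A minimal sketch, assuming a percentage speed control; whether MoneyPrinterPlus builds SSML this way is not stated, `build_ssml` is a hypothetical helper, and `zh-CN-XiaoxiaoNeural` is just an example Azure voice name:

```python
from xml.sax.saxutils import escape


def build_ssml(text, voice="zh-CN-XiaoxiaoNeural", lang="zh-CN", rate_percent=0):
    """Wrap text in SSML selecting a voice and a relative speaking rate.

    rate_percent=20 speaks 20% faster; -20 speaks 20% slower.
    """
    rate = f"{rate_percent:+d}%"  # always signed, e.g. "+20%" or "-10%"
    return (
        f'<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="{lang}">'
        f'<voice name="{voice}"><prosody rate="{rate}">{escape(text)}</prosody></voice>'
        "</speak>"
    )


print(build_ssml("Hello & welcome", voice="en-US-JennyNeural", lang="en-US", rate_percent=20))
```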

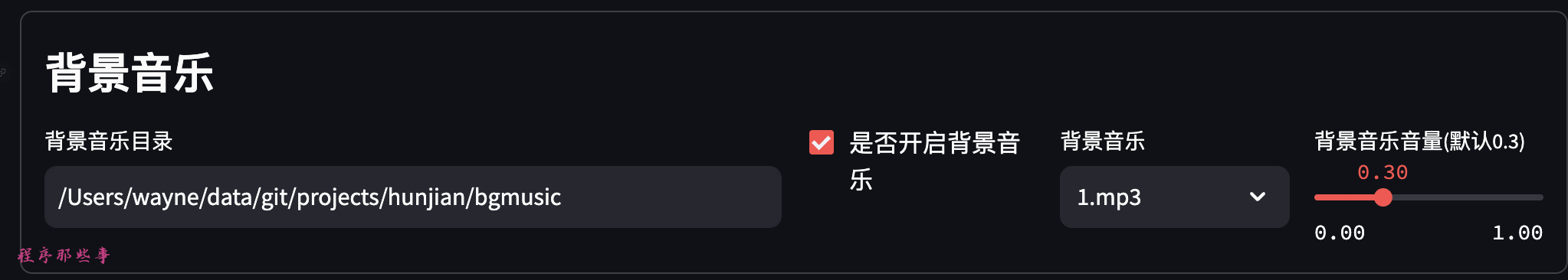

The background music is located in the project's bgmusic folder.

Currently, there are only two background music tracks provided. You can add your own background music files to this folder.
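One way to add background music at an adjustable volume is ffmpeg's `volume` and `amix` filters. A minimal sketch under assumptions: the input video already carries the voiceover as its audio track, and the accepted audio extensions, the default volume of 0.2, and the helper names are hypothetical; the project may mix audio differently:

```python
import random
from pathlib import Path

AUDIO_EXTS = {".mp3", ".wav", ".m4a"}  # assumed set of accepted formats


def pick_bgm(folder="bgmusic", rng=random):
    """Pick a random audio file from the background-music folder, or None if empty."""
    tracks = sorted(p for p in Path(folder).glob("*") if p.suffix.lower() in AUDIO_EXTS)
    return rng.choice(tracks) if tracks else None


def mix_bgm_command(video, bgm, output, bgm_volume=0.2):
    """Build an ffmpeg command that mixes bgm under the video's own audio track."""
    filter_graph = (
        f"[1:a]volume={bgm_volume}[bgm];"               # scale the music's loudness
        "[0:a][bgm]amix=inputs=2:duration=first[aout]"  # stop when the voiceover ends
    )
    return [
        "ffmpeg", "-y", "-i", str(video),
        "-stream_loop", "-1", "-i", str(bgm),  # loop short tracks indefinitely
        "-filter_complex", filter_graph,
        "-map", "0:v", "-map", "[aout]", "-c:v", "copy", str(output),
    ]
```

Run the resulting list with `subprocess.run(cmd, check=True)`. Because the video stream is copied rather than re-encoded, only the audio is processed.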

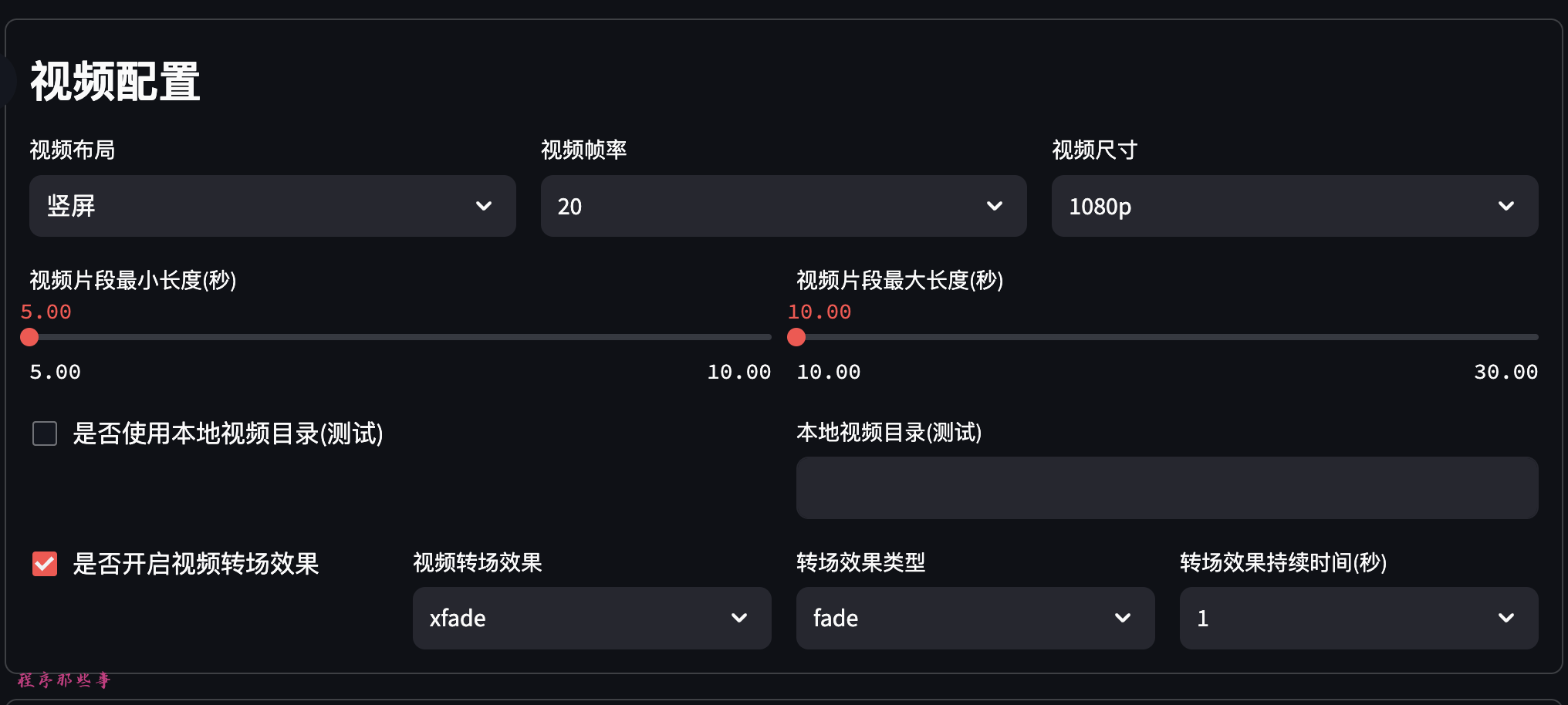

In the video configuration section, you can choose the video layout, frame rate, and video size.

You can also enable video transition effects. Currently, 30+ transition effects are supported.

Local video resource usage will be supported in the future.
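Transitions between clips can be built with ffmpeg's `xfade` filter, which overlaps two clips for a given duration starting at an offset. The arithmetic is the tricky part: every transition shortens the timeline by its own duration, so transition i (counting from 1) must start at the sum of the first i clip durations minus i times the fade length. A minimal sketch, assuming all clips have already been scaled to the same size and frame rate (which `xfade` requires); the layout presets and helper names are hypothetical:

```python
# Hypothetical layout presets (width, height); the sizes offered in the UI may differ.
LAYOUTS = {"portrait": (1080, 1920), "landscape": (1920, 1080), "square": (1080, 1080)}


def scale_filter(layout):
    """Fit a clip inside the layout's frame, padding with black bars to keep its aspect ratio."""
    w, h = LAYOUTS[layout]
    return f"scale={w}:{h}:force_original_aspect_ratio=decrease,pad={w}:{h}:(ow-iw)/2:(oh-ih)/2"


def xfade_filter(durations, transition="fade", fade=1.0):
    """Chain clips [0:v], [1:v], ... with xfade; return (filter_graph, final_label).

    Each transition overlaps two clips by `fade` seconds, so transition i
    (counting from 1) starts at sum(durations[:i]) - i * fade.
    """
    if len(durations) < 2:
        raise ValueError("need at least two clips")
    parts, prev, elapsed = [], "[0:v]", 0.0
    for i in range(1, len(durations)):
        elapsed += durations[i - 1]
        offset = elapsed - i * fade
        label = f"[v{i}]"
        parts.append(
            f"{prev}[{i}:v]xfade=transition={transition}:duration={fade:g}:offset={offset:g}{label}"
        )
        prev = label
    return ";".join(parts), prev


graph, out_label = xfade_filter([5, 5, 5], fade=1)
print(graph)
```

For three 5-second clips with a 1-second fade, the transitions start at 4 s and 8 s, and the result is 13 s long.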

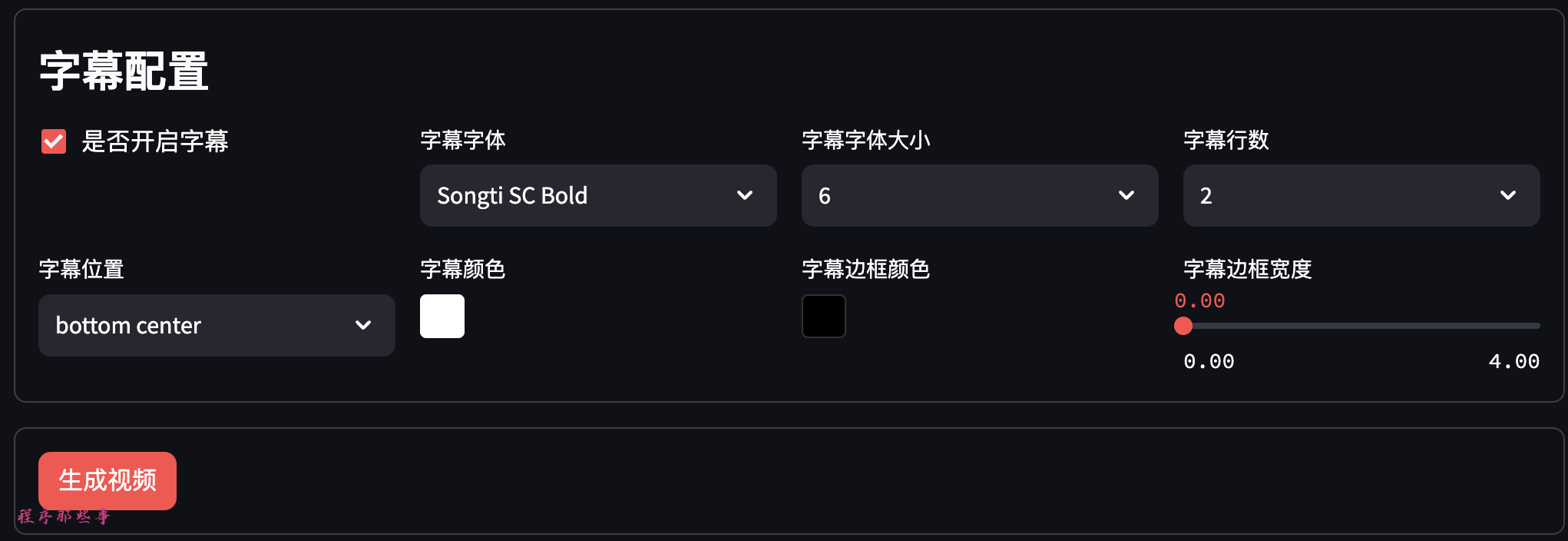

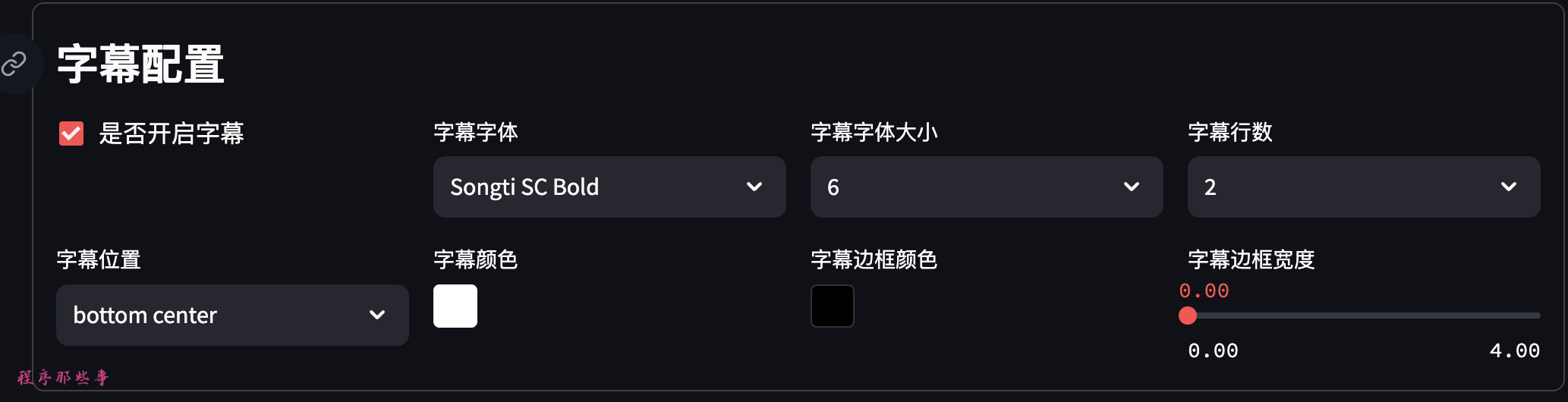

The subtitle font files are located in the fonts folder at the project root.

Currently, two font collections are supported: Songti and Pingfang.

You can choose the subtitle position, color, border color, and border width.
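These options correspond to ASS subtitle style fields, which ffmpeg's `subtitles` filter can override through `force_style`. ASS colours are written as `&HAABBGGRR`, with blue and red swapped relative to HTML hex colours. A minimal sketch, assuming an SRT file and the project's `fonts` folder; the helper names are hypothetical, and `FontName` must match the family name embedded in the font file, which may differ from the display name "Songti":

```python
# ASS "Alignment" follows numpad layout: 2 = bottom centre, 5 = middle centre, 8 = top centre.
POSITIONS = {"bottom": 2, "middle": 5, "top": 8}


def ass_colour(hex_rgb, alpha=0):
    """Convert '#RRGGBB' to ASS '&HAABBGGRR' (alpha 0 = fully opaque)."""
    h = hex_rgb.lstrip("#").upper()
    if len(h) != 6:
        raise ValueError(f"expected #RRGGBB, got {hex_rgb!r}")
    r, g, b = h[0:2], h[2:4], h[4:6]
    return f"&H{alpha:02X}{b}{g}{r}"


def subtitle_filter(srt_path, font="Songti", color="#FFFFFF", border_color="#000000",
                    border_width=2, position="bottom", fonts_dir="fonts"):
    """Build an ffmpeg subtitles filter that burns in an SRT file with custom styling."""
    style = ",".join([
        f"FontName={font}",
        f"PrimaryColour={ass_colour(color)}",
        f"OutlineColour={ass_colour(border_color)}",
        "BorderStyle=1",  # 1 = outline plus drop shadow
        f"Outline={border_width}",
        f"Alignment={POSITIONS[position]}",
    ])
    return f"subtitles={srt_path}:fontsdir={fonts_dir}:force_style='{style}'"


print(subtitle_filter("subs.srt", color="#FFFF00", position="top"))
```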

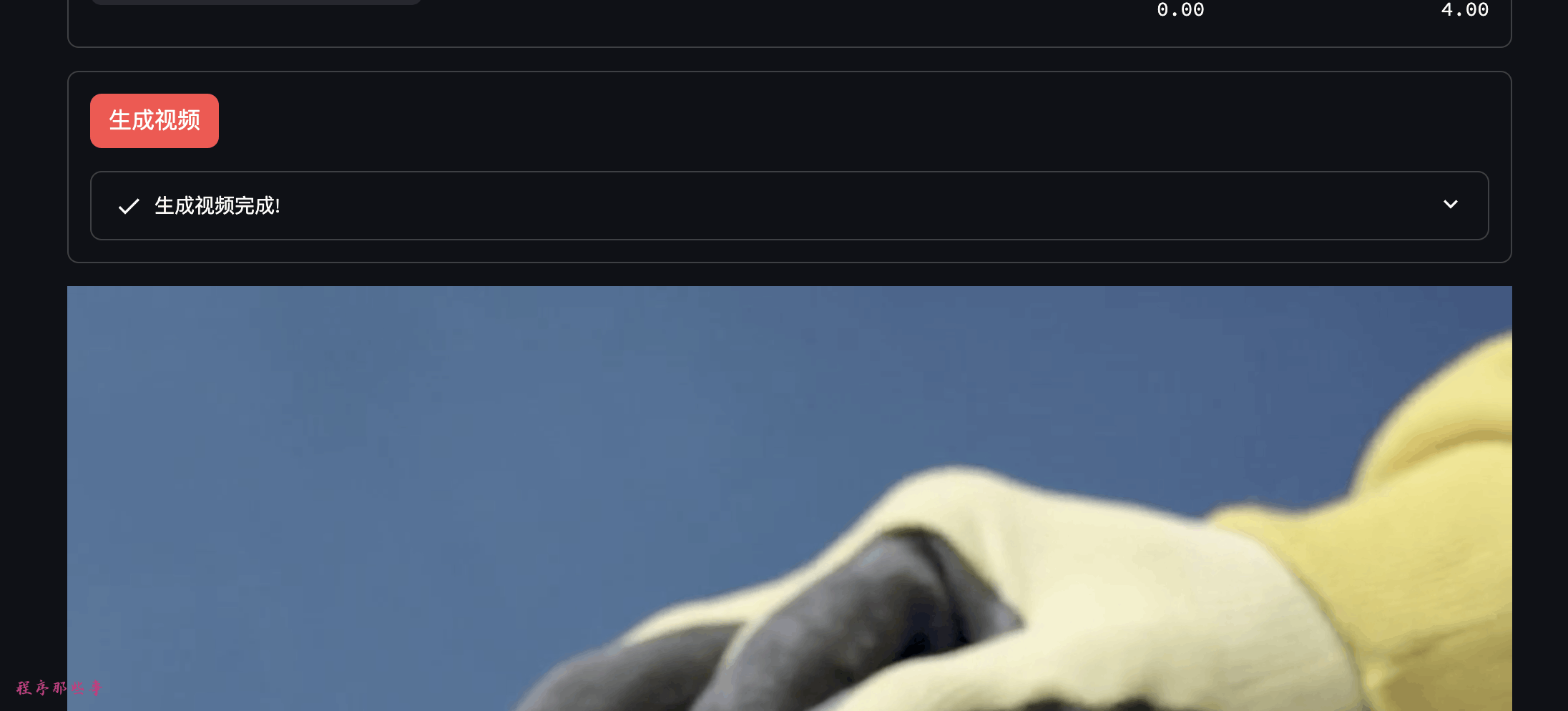

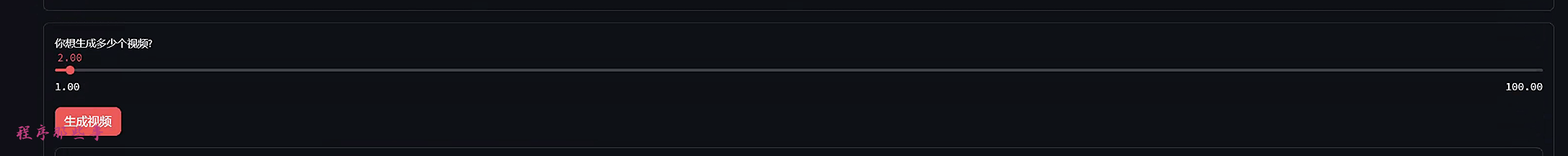

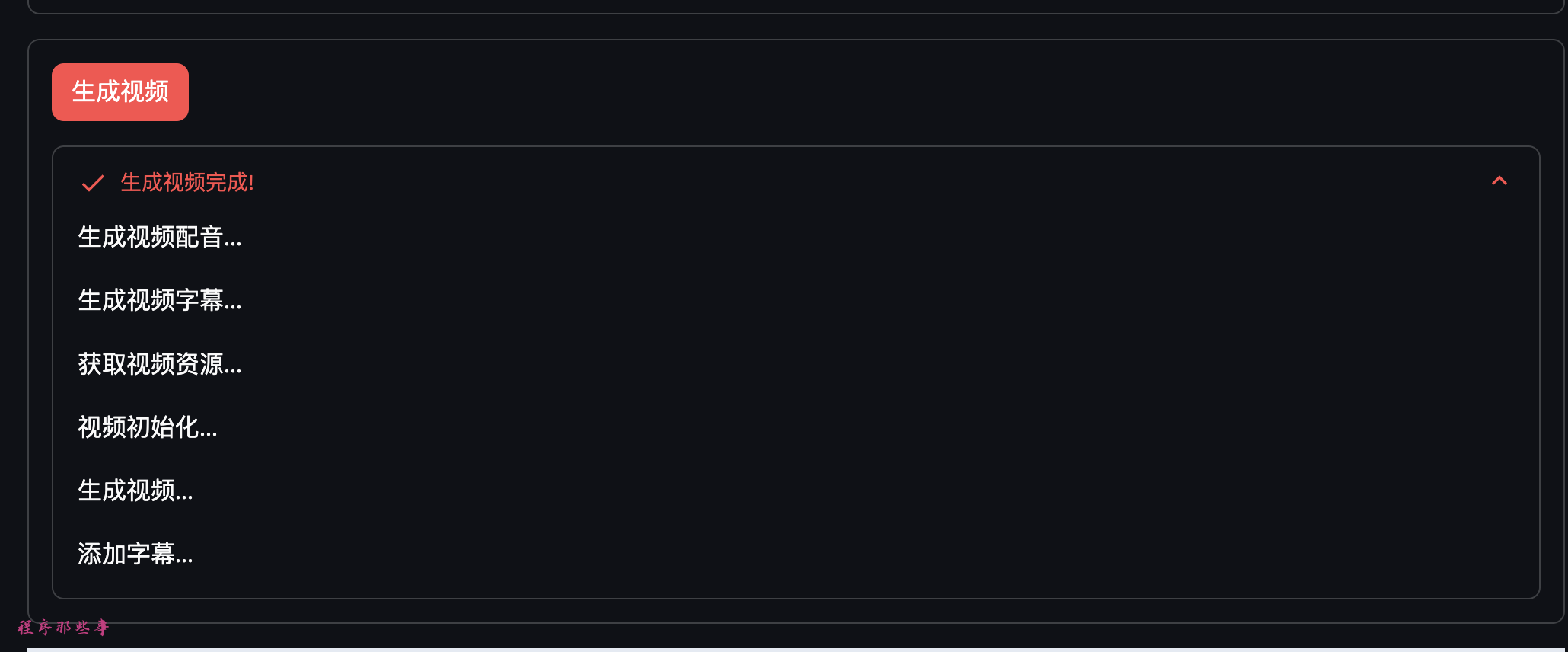

Finally, click the "Generate Video" button to create the video.

The page will display the specific steps and progress.


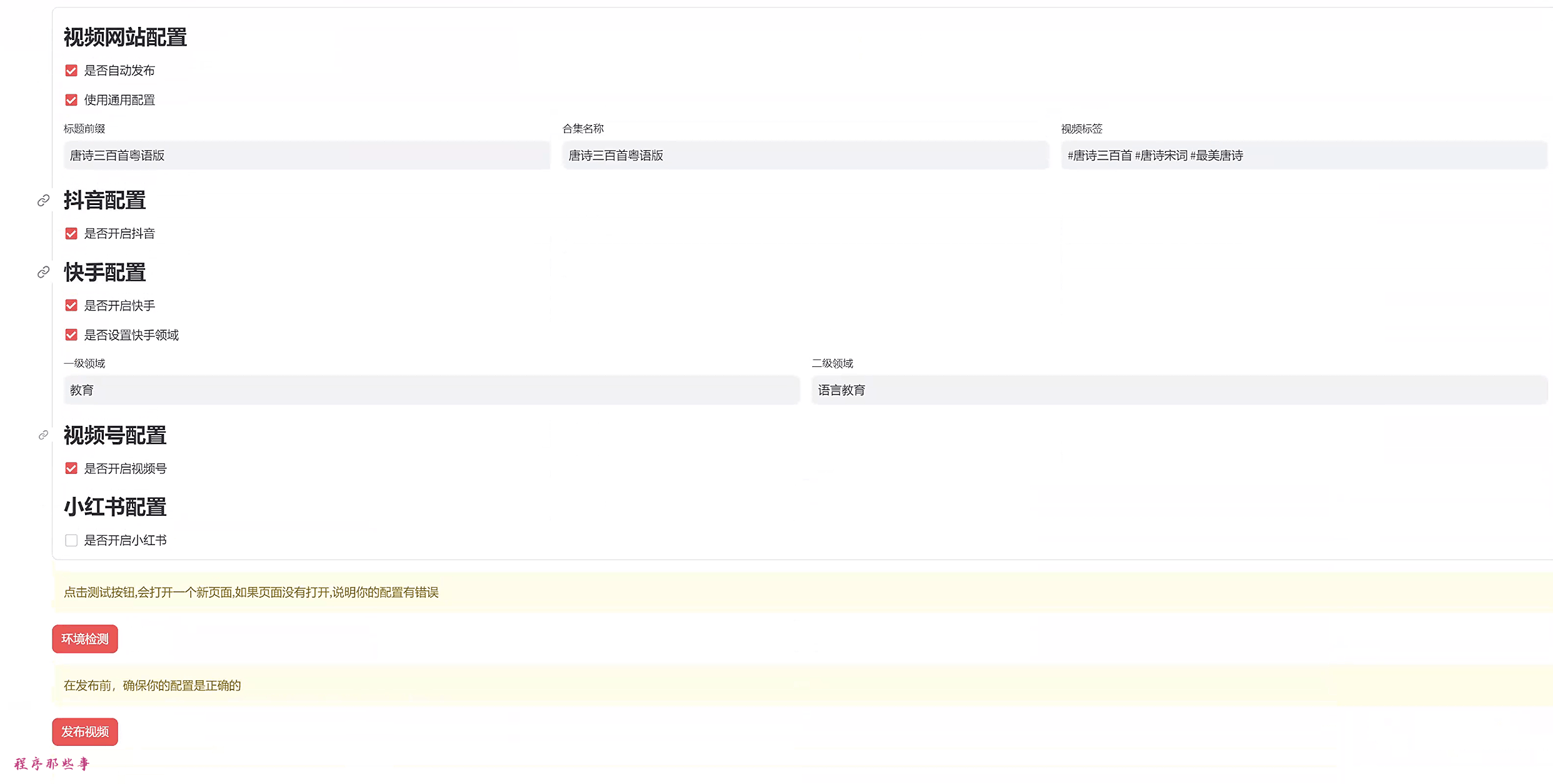

Video Tags: the video's tags, separated by spaces.

Kuaishou has one additional field to configure.

You can choose whether to publish to Douyin, Kuaishou, WeChat Channels, or Xiaohongshu.
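A minimal sketch of turning the tag field and the platform switches into data, assuming duplicate tags and a leading `#` should be dropped; the helper names and platform keys are hypothetical, not the project's configuration names:

```python
# Hypothetical platform keys, in a fixed publishing order.
PLATFORMS = ("douyin", "kuaishou", "wechat_channels", "xiaohongshu")


def parse_tags(raw):
    """Split a space-separated tag string into unique tags, dropping any leading '#'."""
    seen, tags = set(), []
    for token in raw.split():  # split() also collapses repeated spaces
        tag = token.lstrip("#")
        if tag and tag not in seen:
            seen.add(tag)
            tags.append(tag)
    return tags


def enabled_platforms(flags):
    """Return the platform keys whose switch is on, rejecting unknown keys."""
    unknown = set(flags) - set(PLATFORMS)
    if unknown:
        raise ValueError(f"unknown platforms: {sorted(unknown)}")
    return [p for p in PLATFORMS if flags.get(p)]


print(parse_tags("travel  #food travel vlog"))
print(enabled_platforms({"douyin": True, "kuaishou": False, "xiaohongshu": True}))
```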

Next, you can prepare to publish the video.

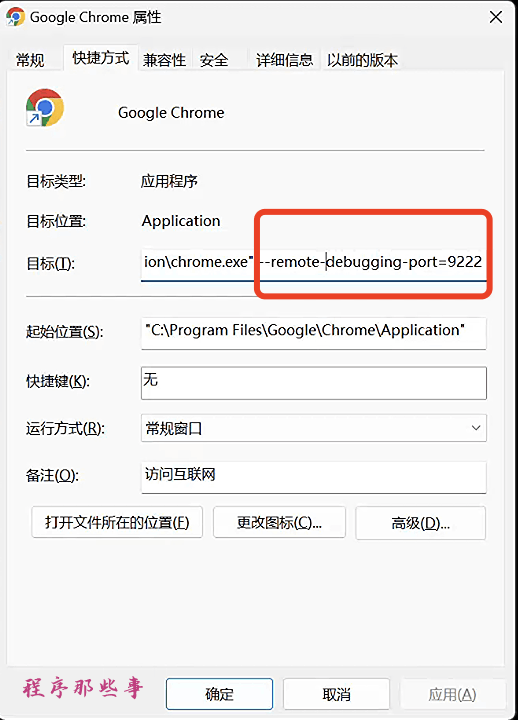

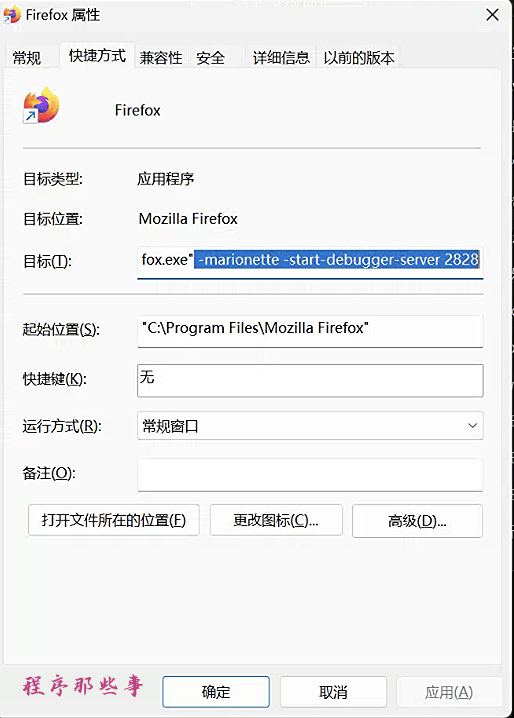

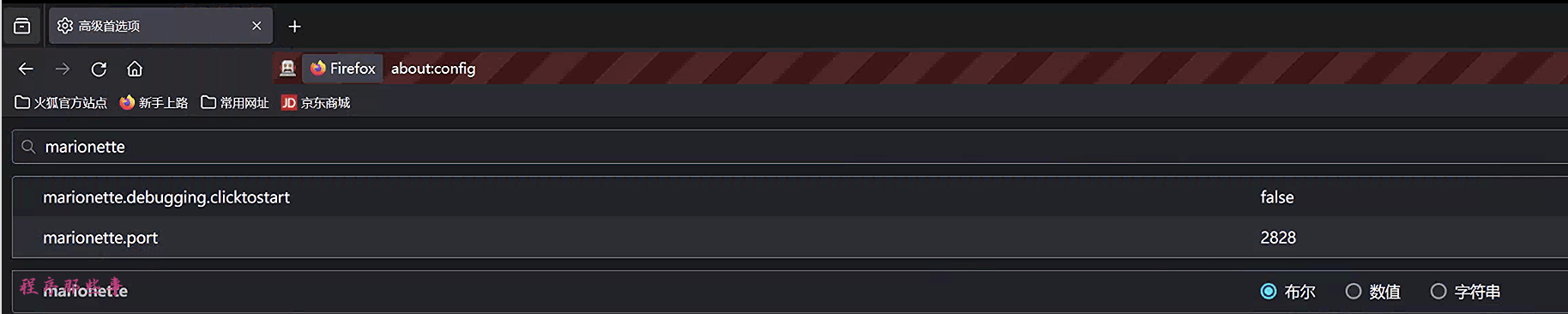

Before publishing, you can click "Environment Check".

If your home page opens automatically, your environment is configured correctly and you can go ahead and publish the video.

Because every video site requires a login, open each site and log in to your account before clicking the "Publish Video" button.

Once all your accounts are logged in, click the "Publish Video" button.

Start your journey of freedom.

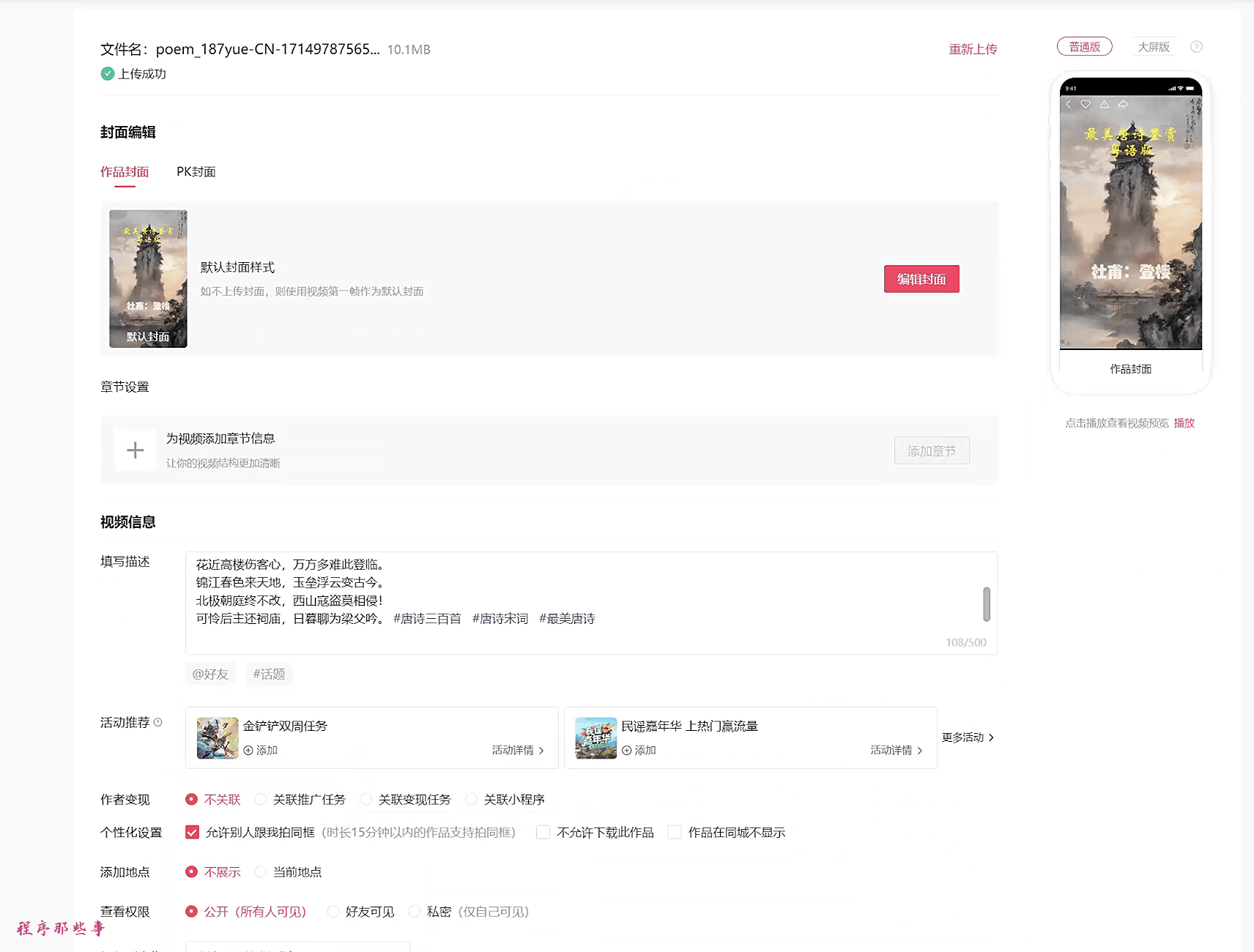

The running interface will look something like this:

If you run into problems, first check the summary of common issues to see whether it solves yours.

If you have questions or ideas, feel free to join the discussion group. If you like the project, you can buy the author a cup of tea.

| Discussion Group | My WeChat |
|---|---|