Text generation is a type of natural language processing that uses computational linguistics and artificial intelligence to automatically produce text that meets specific communicative needs. In this demo, we use the Generative Pre-trained Transformer 2 (GPT-2) model for text prediction.

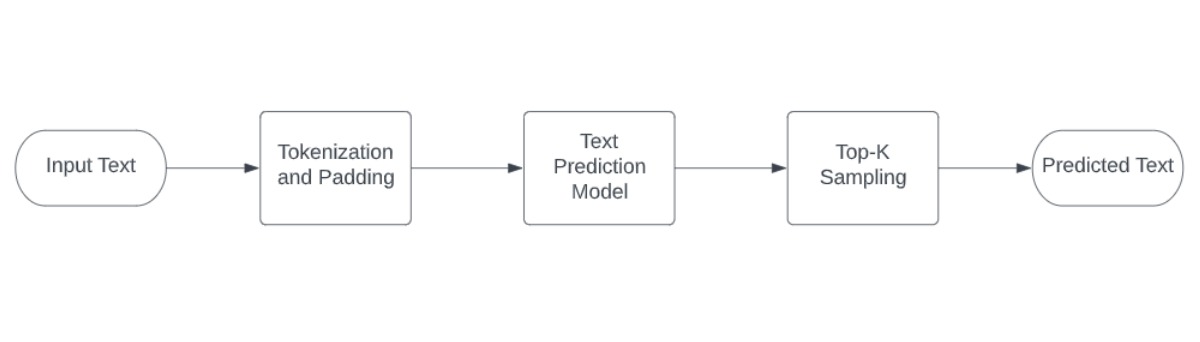

The complete pipeline of this demo's notebook is shown below.

In this demonstration, the user types the beginning of a text and the network generates a continuation of it. This procedure can be repeated as many times as the user wants.
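The "type a prefix, let the model continue, repeat" loop is autoregressive generation: at each step the model scores candidate next tokens given the text so far, one token is appended, and the longer sequence is fed back in. Below is a minimal, self-contained sketch of that loop. It is not the notebook's code. The scoring table `TOY_SCORES` and the helpers `toy_next_token_scores` and `generate` are hypothetical stand-ins for GPT-2. Whitespace splitting replaces GPT-2's BPE tokenizer. Greedy decoding (always pick the top-scoring token) is assumed as the selection rule, because this README does not state which decoding strategy the notebook uses.

```python
from typing import Callable, Dict, List

# Hypothetical toy "model": maps the last token to scores for possible next tokens.
# A real GPT-2 model conditions on the whole prefix and scores its full BPE vocabulary.
TOY_SCORES: Dict[str, Dict[str, float]] = {
    "the": {"cat": 2.0, "dog": 1.5},
    "cat": {"sat": 3.0, "ran": 1.0},
    "sat": {"on": 2.5},
    "on": {"the": 2.0},
    "dog": {"barked": 1.0},
}


def toy_next_token_scores(tokens: List[str]) -> Dict[str, float]:
    """Return next-token scores for the prefix (toy version: looks at the last token only)."""
    return TOY_SCORES.get(tokens[-1], {})


def generate(
    prompt: str,
    score_fn: Callable[[List[str]], Dict[str, float]],
    max_new_tokens: int = 5,
) -> str:
    """Autoregressively extend `prompt` by up to `max_new_tokens` tokens (greedy decoding)."""
    tokens = prompt.split()  # assumption: whitespace tokens instead of GPT-2 BPE tokens
    for _ in range(max_new_tokens):
        scores = score_fn(tokens)
        if not scores:  # the toy model has no continuation: stop early
            break
        # Greedy choice: append the highest-scoring candidate, then feed the longer sequence back in.
        next_token = max(scores, key=lambda tok: scores[tok])
        tokens.append(next_token)
    return " ".join(tokens)


if __name__ == "__main__":
    first = generate("the", toy_next_token_scores, max_new_tokens=4)
    print(first)  # the cat sat on the
    # Repeating the procedure: the previous output becomes the next prompt.
    print(generate(first, toy_next_token_scores, max_new_tokens=2))  # the cat sat on the cat sat
```

Greedy decoding keeps the sketch deterministic and easy to check. Sampling-based strategies such as top-k sampling would replace only the `max(...)` line with a random draw from the highest-scoring candidates.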

The following image shows an example of an input sequence and the corresponding predicted sequence.

This notebook demonstrates text prediction with OpenVINO using the gpt-2 model from Open Model Zoo.

If you have not done so already, please follow the Installation Guide to install all required dependencies.