A REST API implementing each of the 30 example applications from the official OpenAI API Documentation using a modularized Terraform approach. It is implemented as a serverless microservice using AWS API Gateway, AWS Lambda, and the OpenAI Python library, and it leverages OpenAI's suite of AI models, including GPT-3.5, GPT-4, DALL·E, Whisper, Embeddings, and Moderation.

- Creating new OpenAI applications and endpoints for this API takes only a few lines of code and is as easy as it is fun! Follow this link to see how each of these is coded.

- Follow this link for detailed documentation on each URL endpoint.

An example request and response. This endpoint inspects and corrects grammatical errors.

    curl --location --request PUT 'https://api.openai.yourdomain.com/examples/default-grammar' \
      --header 'x-api-key: your-apigateway-api-key' \
      --header 'Content-Type: application/json' \
      --data '{"input_text": "She no went to the market."}'

Return value:

    {
      "isBase64Encoded": false,
      "statusCode": 200,
      "headers": {
        "Content-Type": "application/json"
      },
      "body": {
        "id": "chatcmpl-7yLxpF7ZsJzF3FTUICyUKDe1Ob9nd",
        "object": "chat.completion",
        "created": 1694618465,
        "model": "gpt-3.5-turbo-0613",
        "choices": [
          {
            "index": 0,
            "message": {
              "role": "assistant",
              "content": "The correct way to phrase this sentence would be: \"She did not go to the market.\""
            },
            "finish_reason": "stop"
          }
        ],
        "usage": {
          "prompt_tokens": 36,
          "completion_tokens": 10,
          "total_tokens": 46
        }
      }
    }

An example complete URL for one of the endpoints described below: https://api.openai.example.com/examples/default-grammar
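For callers who prefer Python over curl, here is a minimal sketch that builds the same PUT request with the standard library and pulls the assistant's reply out of a response shaped like the one above. It assumes the placeholder domain and API key from the curl example; the helper names `build_grammar_request` and `extract_reply` are hypothetical and not part of this project.

```python
import json
import urllib.request

# Placeholder values copied from the curl example; replace with your own.
BASE_URL = "https://api.openai.yourdomain.com"
API_KEY = "your-apigateway-api-key"


def build_grammar_request(text: str) -> urllib.request.Request:
    """Build (but do not send) the PUT request for /examples/default-grammar."""
    payload = json.dumps({"input_text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/examples/default-grammar",
        data=payload,
        method="PUT",
        headers={"x-api-key": API_KEY, "Content-Type": "application/json"},
    )


def extract_reply(response: dict) -> str:
    """Return the assistant's message from the response envelope or a bare body."""
    # Accept either the full envelope shown above or just its "body" object.
    body = response.get("body", response)
    # Be tolerant of a body that arrives as a JSON string rather than an object.
    if isinstance(body, str):
        body = json.loads(body)
    return body["choices"][0]["message"]["content"]


# Usage (sending requires a deployed API):
# with urllib.request.urlopen(build_grammar_request("She no went to the market.")) as resp:
#     print(extract_reply(json.load(resp)))
```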

Implementations of each example application found in OpenAI API - Examples.

A single URL endpoint, /passthrough, that passes the HTTP request body directly to the OpenAI API.
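As a rough illustration of the /passthrough contract, this sketch assembles a request body shaped like the example that follows, where `end_point` names the OpenAI API resource to call. The helper `build_passthrough_body` and its minimal structural check are assumptions for illustration; the Lambda's actual validation rules are not documented here.

```python
import json


def build_passthrough_body(model, messages, end_point="ChatCompletion",
                           temperature=0.9, max_tokens=1024):
    """Assemble a /passthrough request body (hypothetical helper, not part of this repo)."""
    for message in messages:
        # Each chat message is assumed to need both a role and some content.
        if not isinstance(message, dict) or "role" not in message or "content" not in message:
            raise ValueError(f"malformed message: {message!r}")
    return {
        "model": model,
        "end_point": end_point,  # OpenAI API resource name, as in the example body
        "temperature": temperature,
        "max_tokens": max_tokens,
        "messages": messages,
    }


body = build_passthrough_body(
    "gpt-3.5-turbo",
    [
        {"role": "system", "content": "Summarize content you are provided with for a second-grade student."},
        {"role": "user", "content": "what is quantum computing?"},
    ],
)
print(json.dumps(body, indent=2))
```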

Example valid request body:

    {
      "model": "gpt-3.5-turbo",
      "end_point": "ChatCompletion",
      "temperature": 0.9,
      "max_tokens": 1024,
      "messages": [
        {
          "role": "system",
          "content": "Summarize content you are provided with for a second-grade student."
        },
        {
          "role": "user",
          "content": "what is quantum computing?"
        },
        {
          "role": "assistant",
          "content": "Quantum computing involves teeny tiny itsy bitsy atomic stuff"
        },
        {
          "role": "user",
          "content": "What??? I don't understand. Please provide a better explanation."
        }
      ]
    }

- AWS account

- AWS Command Line Interface

- Terraform. If you're new to Terraform, see Getting Started With AWS and Terraform.

- OpenAI platform API key. If you're new to the OpenAI API, see How to Get an OpenAI API Key.

- Clone this repo and set up a Python virtual environment:

      git clone https://github.com/FullStackWithLawrence/aws-openai.git
      cd aws-openai
      make api-init

- Add your OpenAI API and Pinecone credentials to the .env file in the root folder of this repo. Your credentials should look similar in format to these examples:

      OPENAI_API_ORGANIZATION=org-YJzABCDEFGHIJESMShcyulf0
      OPENAI_API_KEY=sk-7doQ4gAITSez7ABCDEFGHIJlbkFJKLOuEbRhAFadzjtnzAV2
      PINECONE_API_KEY=6abbcedf-fhijk-55d0-lmnop-94123344abcd
      PINECONE_ENVIRONMENT=gcp-myorg
      DEBUG_MODE=True

Windows/PowerShell users: you'll need to modify the data "external" "env" block in ./terraform/lambda_openai.tf as per the instructions in this code block.

- Add your AWS account number and region to Terraform. Set these three values in terraform.tfvars:

      account_id  = "012345678912" # Required: your 12-digit AWS account number
      aws_region  = "us-east-1"    # Optional: an AWS data center
      aws_profile = "default"      # Optional: for aws cli credentials

See the README section "Installation Prerequisites" below for instructions on setting up Terraform for first-time use.

-

Build and deploy the microservice..

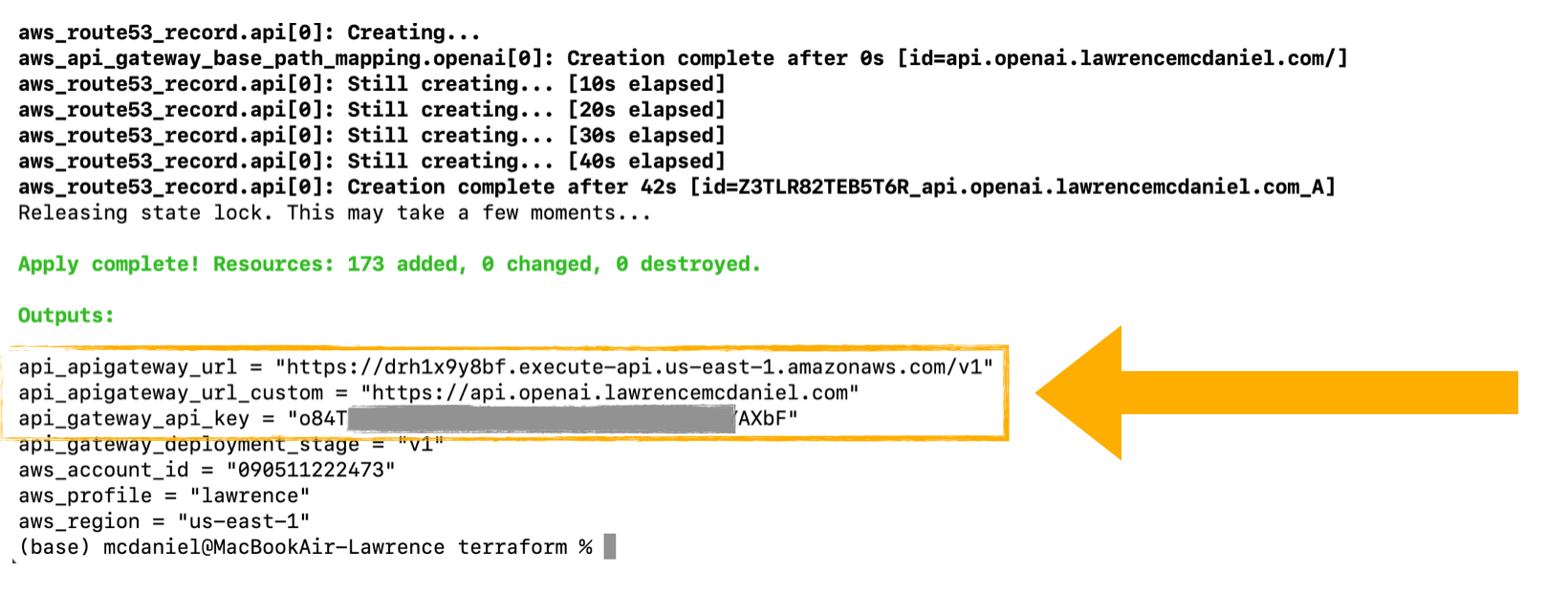

terraform init terraform apply

Note the output variables for your API Gateway root URL and API key.

-

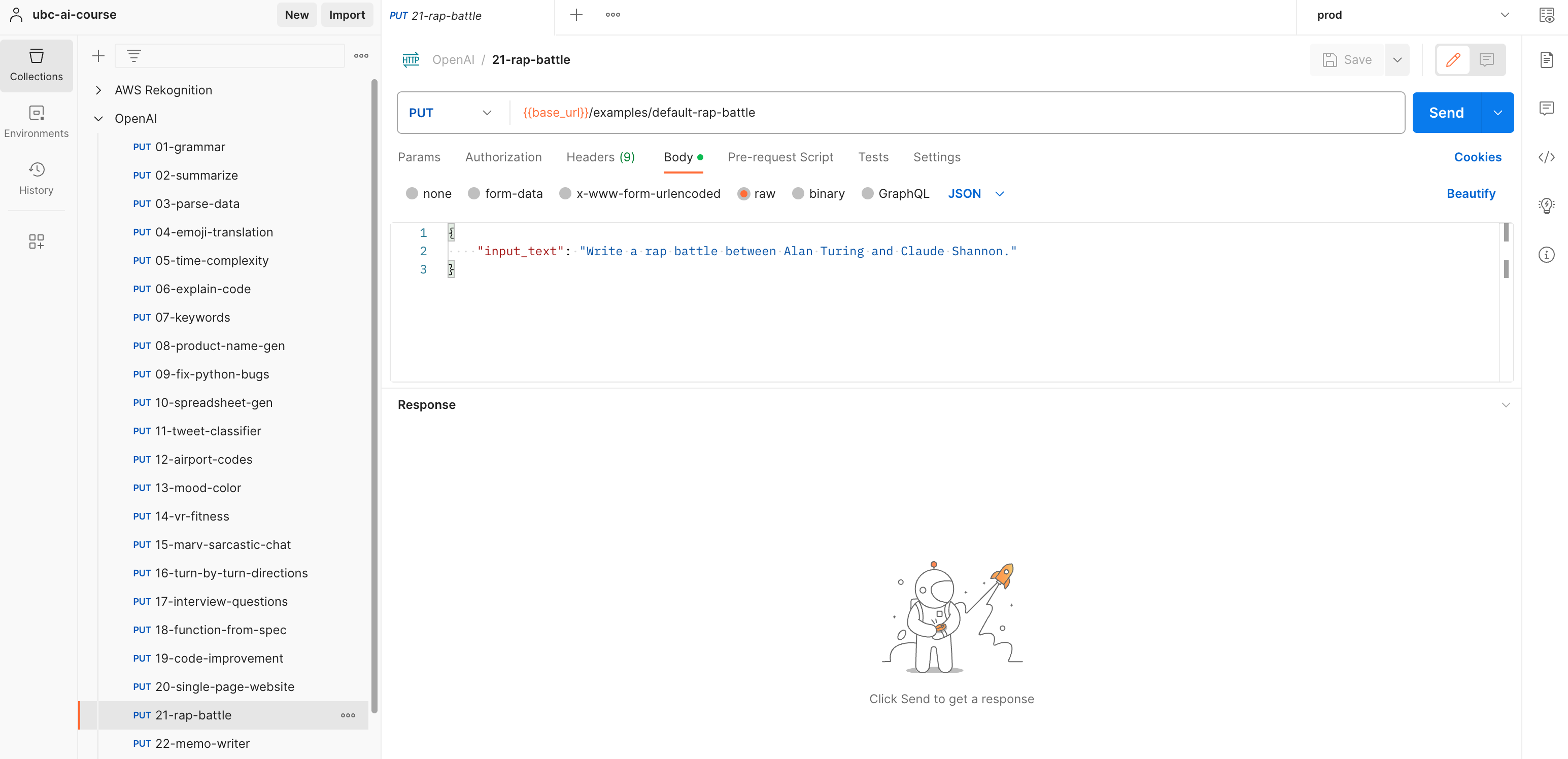

(Optional) use the preconfigured import files to setup a Postman collection with all 30 URL endpoints.
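Before running `terraform apply`, a quick local sanity check can catch a missing credential or a malformed account number. This is a hypothetical helper, not part of this repo: it assumes the simple one-assignment-per-line formats shown in the setup steps above (no quoting rules, inline comments in .env, or multi-line HCL), and the set of keys treated as required is an assumption you should extend as needed.

```python
import re

# Assumed minimum set of required .env keys; extend for your own setup.
REQUIRED_ENV_KEYS = ("OPENAI_API_KEY",)


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_env_keys(env: dict) -> list:
    """List required keys that are absent or empty."""
    return [key for key in REQUIRED_ENV_KEYS if not env.get(key)]


def account_id_is_valid(tfvars_text: str) -> bool:
    """True if terraform.tfvars assigns account_id a quoted 12-digit string."""
    pattern = r'^\s*account_id\s*=\s*"\d{12}"'
    return re.search(pattern, tfvars_text, re.MULTILINE) is not None


# Usage, run from the repo root:
# with open(".env") as f: print(missing_env_keys(parse_env(f.read())))
# with open("terraform/terraform.tfvars") as f: print(account_id_is_valid(f.read()))
```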

If you manage a domain name using AWS Route53 then you can optionally deploy this API using your own custom domain name. Modify the following variables in terraform/terraform.tfvars and Terraform will take care of the rest.

create_custom_domain = true

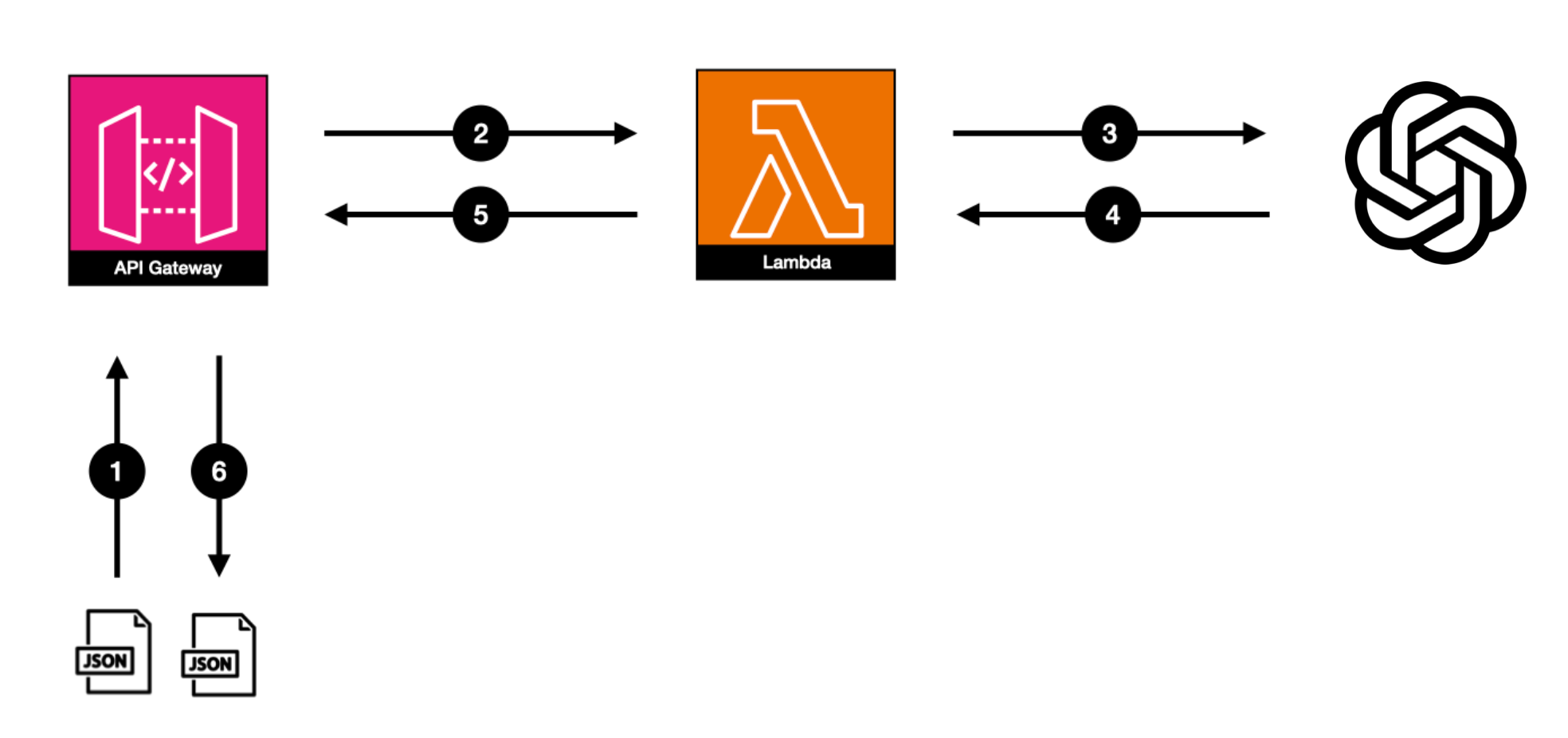

root_domain = "yourdomain.com"- a JSON object and custom headers are added to an HTTP request and sent to the API as a 'PUT' method.

- API Gateway uses a Request Mapping Template in a non-proxy Lambda integration request to combine the user's request text with your OpenAI application definition, and then forwards the combined data as a custom JSON object to a Lambda function.

- Lambda parses and validates the custom JSON object and then invokes the OpenAI API, passing your API key, which is stored as a Lambda environment variable.

- OpenAI API results are returned as JSON objects.

- Lambda creates a custom JSON response containing the HTTP response body as well as system information for API Gateway.

- API Gateway passes the HTTP response through to the client.

You'll find a detailed narrative explanation of the design strategy in this article, OpenAI API With AWS Lambda.
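The request flow above can be sketched as a small Python Lambda handler. This is not the project's actual handler: the required field names (`model`, `messages`) are borrowed from the /passthrough example body, and the OpenAI call is injected as a plain function so the sketch runs without network access or credentials. It only illustrates the parse, validate, invoke, and wrap-in-envelope pattern, using the response envelope shown in the examples in this README.

```python
import json
from typing import Callable


def make_handler(call_openai: Callable[[dict], dict]):
    """Build a Lambda-style handler around an injected OpenAI client function."""

    def handler(event, context=None) -> dict:
        # 1. Parse: a non-proxy integration delivers the mapped JSON object as the event.
        request = event if isinstance(event, dict) else json.loads(event)
        # 2. Validate: reject requests missing the fields this sketch assumes are required.
        missing = [key for key in ("model", "messages") if key not in request]
        if missing:
            return _envelope(400, {"error": "Bad Request",
                                   "message": f"missing fields: {missing}"})
        # 3. Invoke OpenAI (injected here; in Lambda the API key would come from an env var).
        try:
            result = call_openai(request)
        except Exception as exc:  # surface unexpected failures as a verbose 500
            return _envelope(500, {"error": "Internal Server Error", "message": str(exc)})
        # 4. Wrap the OpenAI result in the envelope that API Gateway passes to the client.
        return _envelope(200, result)

    return handler


def _envelope(status: int, body: dict) -> dict:
    """Custom response object in the shape shown in this README's examples."""
    return {
        "isBase64Encoded": False,
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": body,
    }
```

In a real deployment, `call_openai` would wrap the OpenAI Python library; injecting it keeps the handler testable with a fake client.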

- OpenAI: a PyPI package that provides convenient access to the OpenAI API from applications written in Python. It includes a predefined set of classes for API resources that initialize themselves dynamically from API responses, which makes it compatible with a wide range of versions of the OpenAI API.

- API Gateway: an AWS service for creating, publishing, maintaining, monitoring, and securing REST, HTTP, and WebSocket APIs at any scale.

- IAM: a web service that helps you securely control access to AWS resources. With IAM, you can centrally manage permissions that control which AWS resources users can access. You use IAM to control who is authenticated (signed in) and authorized (has permissions) to use resources.

- Lambda: an event-driven, serverless computing platform provided by Amazon as a part of Amazon Web Services. It is a computing service that runs code in response to events and automatically manages the computing resources required by that code. It was introduced on November 13, 2014.

- CloudWatch: CloudWatch enables you to monitor your complete stack (applications, infrastructure, network, and services) and use alarms, logs, and events data to take automated actions and reduce mean time to resolution (MTTR).

- Route53: (OPTIONAL) A scalable and highly available Domain Name System service. Released on December 5, 2010.

- Certificate Manager: (OPTIONAL) Handles the complexity of creating, storing, and renewing public and private SSL/TLS X.509 certificates and keys that protect your AWS websites and applications.

This project leverages the official OpenAI PyPI Python library. The openai library is added to the AWS Lambda installation package. You can review terraform/lambda_openai_text.tf to see how this happens from a technical perspective.

Other reference materials on how to use this library:

- How to Get an OpenAI API Key

- OpenAI Official Example Applications

- OpenAI API Documentation

- OpenAI PyPi

- OpenAI Python Source

- OpenAI Official Cookbook

Be aware that the OpenAI platform API is not free. Moreover, pricing varies significantly across the family of OpenAI models; GPT-4, for example, costs significantly more to use than GPT-3.5. That said, for development purposes the cost will likely be negligible. I spent a total of around $0.025 USD while developing and testing the initial release of this project, during which I invoked the OpenAI API roughly 200 times (a rough guess).
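To put these figures in perspective, the sketch below turns the `usage` block of a response into a dollar estimate. The per-1,000-token prices are hypothetical placeholders, not OpenAI's current rates, so check the OpenAI pricing page before relying on any number. For reference, the figures above work out to about $0.025 / 200 ≈ $0.000125 per call.

```python
# Hypothetical USD prices per 1,000 tokens; substitute OpenAI's current published rates.
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"prompt": 0.0015, "completion": 0.002},
    "gpt-4": {"prompt": 0.03, "completion": 0.06},
}


def estimate_cost(model: str, usage: dict) -> float:
    """Estimate the USD cost of one call from its prompt and completion token counts."""
    rates = PRICES_PER_1K[model]
    return (usage["prompt_tokens"] * rates["prompt"]
            + usage["completion_tokens"] * rates["completion"]) / 1000


# Token counts taken from the grammar-correction example response.
usage = {"prompt_tokens": 36, "completion_tokens": 10, "total_tokens": 46}
for model in PRICES_PER_1K:
    print(f"{model}: ${estimate_cost(model, usage):.6f}")
```

Prompt and completion tokens are priced separately because OpenAI has typically charged different rates for each.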

CORS is always a tedious topic with regard to REST APIs. Please note the following special considerations in this API project:

- CORS preflight is implemented with a Node.js Lambda - openai_cors_preflight_handler

- There are a total of 5 response types that require CORS headers:

  - the hoped-for 200 response status returned by Lambda
  - the less hoped-for 400 and 500 response statuses returned by Lambda
  - the even less hoped-for 400 and 500 response statuses that API Gateway itself can return in certain cases, such as a) a Lambda timeout or b) invalid API key credentials, amongst other possibilities.

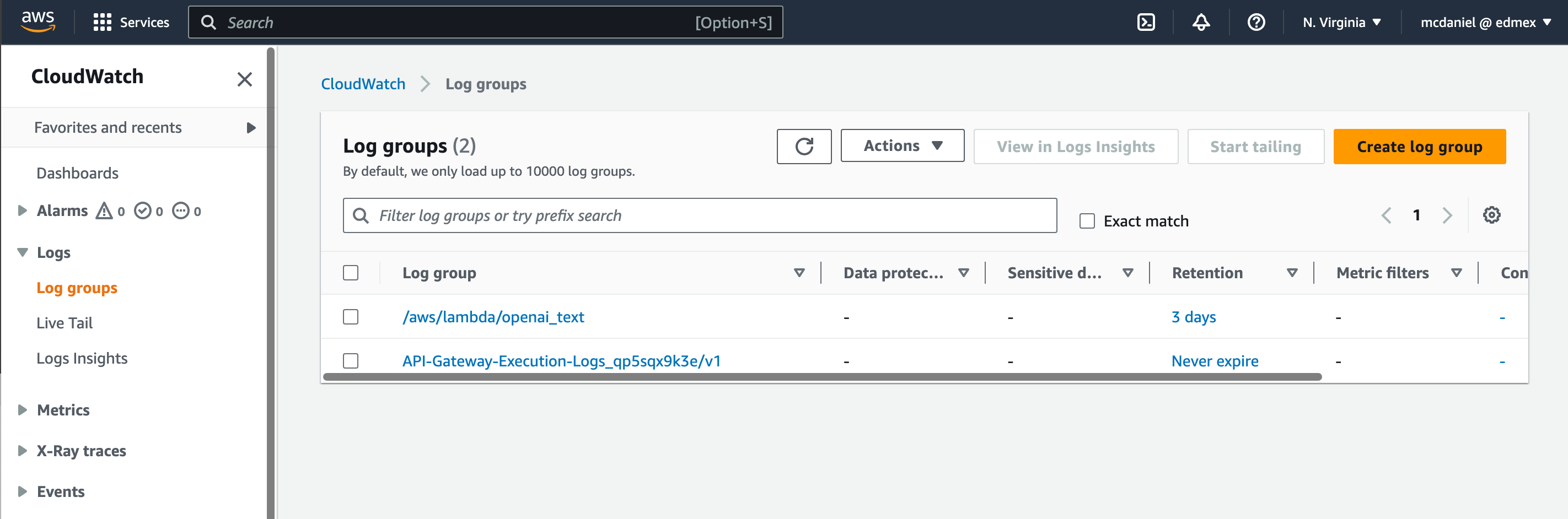

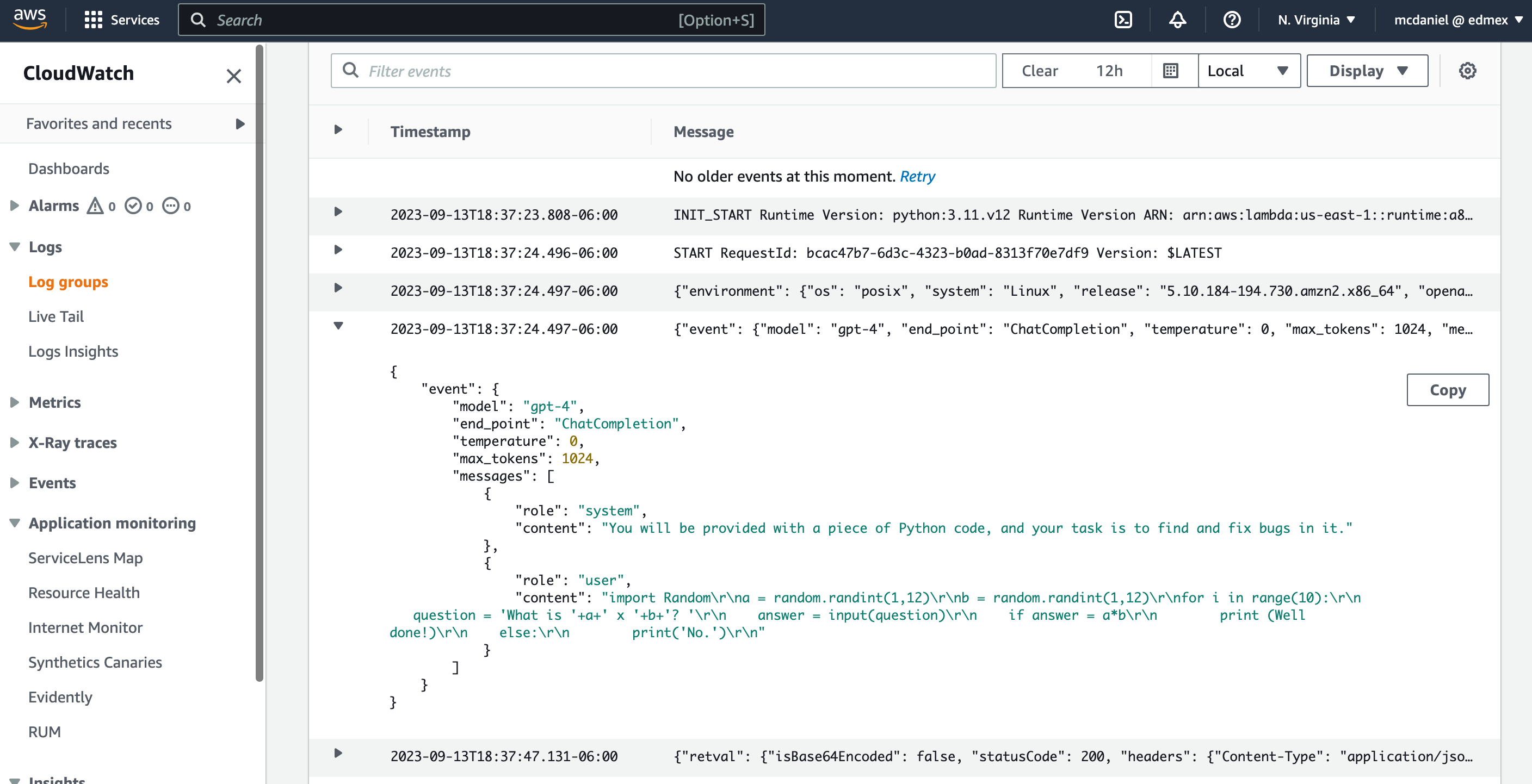

- For audit and troubleshooting purposes, CloudWatch logs exist for API Gateway as well as for the two Lambdas, openai_text and openai_cors_preflight_handler.

In each case, this project attempts to compile an HTTP response that is as verbose as technically possible given the nature and origin of the response data.
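Because all five response types need CORS headers, it can help to funnel every Lambda-generated response through one helper. This sketch is illustrative only: the header values (a wildcard origin, PUT and OPTIONS methods, the x-api-key header) are assumptions rather than this project's actual configuration. The two API Gateway-generated responses cannot pass through Lambda code at all; those would typically be covered by API Gateway gateway responses (for example, Terraform's aws_api_gateway_gateway_response resource).

```python
# Assumed CORS policy for illustration; tighten the allowed origin for production use.
CORS_HEADERS = {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Methods": "OPTIONS,PUT",
    "Access-Control-Allow-Headers": "Content-Type,x-api-key",
}


def with_cors(response: dict) -> dict:
    """Return a copy of a Lambda response envelope with CORS headers merged in."""
    headers = {**response.get("headers", {}), **CORS_HEADERS}
    return {**response, "headers": headers}


def error_response(status: int, error: str, message: str) -> dict:
    """Build a verbose 4xx/5xx envelope that still carries CORS headers."""
    return with_cors({
        "isBase64Encoded": False,
        "statusCode": status,
        "body": {"error": error, "message": message},
    })
```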

There are four URL endpoints that you can use for development and testing purposes. These are especially useful when developing features in the React web app, as each endpoint reliably returns a known, static response body that is comparable to the one returned by the Python Lambda.

A static example response from the OpenAI gpt-3.5-turbo chat API:

    {
      "body": {
        "choices": [
          {
            "finish_reason": "stop",
            "index": 0,
            "message": {
              "content": "Oh, hello there! What kind of trouble can I unknowingly get myself into for you today?",
              "role": "assistant"
            }
          }
        ],
        "created": 1697495501,
        "id": "chatcmpl-8AQPdETlM808Fp0NjEeCOOc3a13Vt",
        "model": "gpt-3.5-turbo-0613",
        "object": "chat.completion",
        "usage": {
          "completion_tokens": 20,
          "prompt_tokens": 31,
          "total_tokens": 51
        }
      },
      "isBase64Encoded": false,
      "statusCode": 200
    }

A static HTTP 400 response:

    {
      "body": {
        "error": "Bad Request",
        "message": "TEST 400 RESPONSE."
      },
      "isBase64Encoded": false,
      "statusCode": 400
    }

A static HTTP 500 response:

    {
      "body": {
        "error": "Internal Server Error",
        "message": "TEST 500 RESPONSE."
      },
      "isBase64Encoded": false,
      "statusCode": 500
    }

A static HTTP 504 response with an empty body.
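For front-end or unit tests that should not depend on a deployed API, the four static responses above can be mirrored in a local stub. This is a sketch under stated assumptions: the dictionary is keyed by HTTP status code because the actual test endpoint paths are not listed here, the 200 body is abbreviated from the example above, and representing the 504's empty body as `None` is a guess.

```python
import copy

STATIC_RESPONSES = {
    200: {"body": {"object": "chat.completion", "model": "gpt-3.5-turbo-0613",
                   "choices": [{"index": 0, "finish_reason": "stop",
                                "message": {"role": "assistant",
                                            "content": "Oh, hello there! What kind of trouble can I unknowingly get myself into for you today?"}}],
                   "usage": {"prompt_tokens": 31, "completion_tokens": 20, "total_tokens": 51}},
          "isBase64Encoded": False, "statusCode": 200},
    400: {"body": {"error": "Bad Request", "message": "TEST 400 RESPONSE."},
          "isBase64Encoded": False, "statusCode": 400},
    500: {"body": {"error": "Internal Server Error", "message": "TEST 500 RESPONSE."},
          "isBase64Encoded": False, "statusCode": 500},
    # Assumed shape: the README only says the 504 response has an empty body.
    504: {"body": None, "isBase64Encoded": False, "statusCode": 504},
}


def fake_test_endpoint(status: int) -> dict:
    """Return a deep copy so callers cannot mutate the shared fixtures."""
    return copy.deepcopy(STATIC_RESPONSES[status])
```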

The Terraform scripts automatically create a collection of CloudWatch log groups. Additionally, note the Terraform global variable 'debug_mode' (defaults to 'true'), which increases the verbosity of log entries in the Python Lambda functions.

I refined the contents and formatting of each log group to suit my own needs while building this solution, and in particular while coding the Python Lambda functions.
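The effect of `debug_mode` can be approximated with Python's standard logging module. This sketch assumes the Terraform variable reaches the Lambda as a `DEBUG_MODE` environment variable (the name used in the .env example) and defaults to true, mirroring the Terraform default; the actual wiring and log format in this project may differ.

```python
import logging
import os


def configure_logger(name: str = "openai_text") -> logging.Logger:
    """Return a logger whose level follows the (assumed) DEBUG_MODE environment variable."""
    debug = os.environ.get("DEBUG_MODE", "true").strip().lower() in ("1", "true", "yes")
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG if debug else logging.INFO)
    return logger


logger = configure_logger()
logger.debug("full event: %s", {"input_text": "..."})  # passes the level check only in debug mode
logger.info("request handled")
```

In Lambda, records propagate to the handler that the Python runtime attaches to the root logger and land in CloudWatch; when running locally you may need `logging.basicConfig()` to see them.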

Detailed documentation for each endpoint is available here: Documentation

To get community support, go to the official Issues Page for this project.

We welcome contributions! There are a variety of ways for you to get involved, regardless of your background. In addition to pull requests, this project would benefit from contributors focused on documentation and how-to video content creation, testing, community engagement, and stewards to help us ensure that we comply with evolving standards for the ethical use of AI.

You can also contact Lawrence McDaniel directly.