diff --git a/docs/dev_guides/index.md b/docs/dev_guides/index.md

index de3e98f83b..e2b37d9b7f 100644

--- a/docs/dev_guides/index.md

+++ b/docs/dev_guides/index.md

@@ -29,4 +29,4 @@ Splink is quite a large, complex codebase. The guides in this section lay out so

* [Comparison and Comparison Level Libraries](./comparisons/new_library_comparisons_and_levels.md) - demonstrates how `Comparison` Library and `ComparisonLevel` Library functions are structured within Splink, including how to add new functions and edit existing functions.

* [Charts](./charts.ipynb) - demonstrates how charts are built in Splink, including how to add new charts and edit existing charts.

* [User-Defined Functions](./udfs.md) - demonstrates how User Defined Functions (UDFs) are used to provide functionality within Splink that is not native to a given SQL backend.

-

+* [Settings Validation](./settings_validation/settings_validation_overview.md) - summarises how to use and expand the existing settings schema and validation functions.

diff --git a/docs/dev_guides/settings_validation.md b/docs/dev_guides/settings_validation.md

deleted file mode 100644

index a01e6dd9d8..0000000000

--- a/docs/dev_guides/settings_validation.md

+++ /dev/null

@@ -1,142 +0,0 @@

-## Settings Schema Validation

-

-[Schema validation](https://github.com/moj-analytical-services/splink/blob/master/splink/validate_jsonschema.py) is currently performed inside the [settings.py](https://github.com/moj-analytical-services/splink/blob/master/splink/settings.py#L44C17-L44C17) script.

-

-This assesses the user's settings dictionary against our custom [settings schema](https://github.com/moj-analytical-services/splink/blob/master/splink/files/settings_jsonschema.json). Where the data type, key or entered values devivate from what is specified in the schema, an error will be thrown.

-

-You can modify the schema by editing the json file [here](https://github.com/moj-analytical-services/splink/blob/master/splink/files/settings_jsonschema.json).

-

-Detailed information on the arguments that can be suppled is available within the [json schema documentation](https://json-schema.org/learn/getting-started-step-by-step).

-

-

-

-## Settings Validator

-

-In addition to the Settings Schema Validation, we have implemented checks to evaluate the validity of a user's settings in the context of the provided input dataframe(s) and linker type.

-

-??? note "The current validation checks performed include:"

- * Verifying that the user's blocking rules and comparison levels have been [imported from the correct library](https://github.com/moj-analytical-services/splink/pull/1579) and contain sufficient information for use in a Splink model.

- * [Performing column lookups]((https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py)), confirming that columns specified in the user's settings dictionary exist within **all** of the user's input dataframes.

- * Conducting various miscellaneous checks that generate more informative error messages for the user, should they be using Splink in an unintended manner.

-

-All components related to our settings checks are currently located in the [settings validation](https://github.com/moj-analytical-services/splink/tree/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation) within Splink.

-

-This folder is comprised of three scripts, each of which inspects the settings dictionary at different stages of its journey:

-

-* [valid_types.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/valid_types.py) - This script includes various miscellaneous checks for comparison levels, blocking rules, and linker objects. These checks are primarily performed within settings.py.

-* [settings_validator.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/settings_validator.py) - This script includes the core `SettingsValidator` class and contains a series of methods that retrieve information on fields within the user's settings dictionary that contain information on columns to be used in training and prediction. Additionally, it provides supplementary cleaning functions to assist in the removal of quotes, prefixes, and suffixes that may be present in a given column name.

-* [column_lookups.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/column_lookups.py) - This script contains helper functions that generate a series of log strings outlining invalid columns identified within your settings dictionary. It primarily consists of methods that run validation checks on either raw SQL or input columns and assesses their presence in **all** dataframes supplied by the user.

-

-

-

-## Extending the Settings Validator

-

-Before adding any code, it's initially import to identify and determine whether the checks you wish to add fall into any of the broad buckets outlined in the scripts above.

-

-If you intend to introduce checks that are distinct from those currently in place, it is advisable to create a new script within `splink/settings_validation`.

-

-The current options you may wish to extend are as follows:

-

-### Logging Errors

-

-Logging multiple errrors sequentially without disrupting the program, is a common issue. To enable the logging of multiple errors in a singular check, or across multiple checks, an [`ErrorLogger`](https://github.com/moj-analytical-services/splink/blob/settings_validation_refactor_and_improved_logging/splink/exceptions.py#L34) class is available for use.

-

-The `ErrorLogger` operates in a similar way to working with a list, allowing you to add additional errors using the `append` method. Once you've logged all of your errors, you can raise them with the `raise_and_log_all_errors` method.

-

-??? note "`ErrorLogger` in practice"

- ```py

- from splink.exceptions import ErrorLogger

-

- # Create an error logger instance

- e = ErrorLogger()

-

- # Log your errors

- e.append(SyntaxError("The syntax is wrong"))

- e.append(NameError("Invalid name entered"))

-

- # Raise your errors

- e.raise_and_log_all_errors()

- ```

-

-

-### Expanding our Miscellaneous Checks

-

-Miscellaneous checks should typically be added as standalone functions. These functions can then be integrated into the linker's startup process for validation.

-

-In most cases, you have more flexibility in how you structure your solutions. You can place the checks in a script that corresponds to the specific checks being performed, or, if one doesn't already exist, create a new script with a descriptive name.

-

-A prime example of a miscellaneous check is [`validate_dialect`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/valid_types.py#L31), which assesses whether the settings dialect aligns with the linker's dialect.

-

-

-### Additional Comparison and Blocking Rule Checks

-

-If your checks pertain to comparisons or blocking rules, most of these checks are currently implemented within the [valid_types.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/valid_types.py) script.

-

-Currently, comparison and blocking rule checks are organised in a modular format.

-

-To expand the current suite of tests, you should:

-

-1. Create a function to inspect the presence of the error you're evaluating.

-2. Define an error message that you intend to add to the `ErrorLogger` class.

-3. Integrate these elements into either the `validate_comparison_levels` function or a similar one, which appends any detected errors.

-4. Finally, work out where this function should live in the setup process of the linker object.

-

-

-### Additional Column Checks

-

-Should you need to include extra checks to assess the validity of columns supplied by a user, your primary focus should be on the [column_lookups.py](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py) script.

-

-There are currently three classes employed to construct the current log strings. These can be extended to perform additional column checks.

-

-??? note "`InvalidCols`"

- `InvalidCols` is a NamedTuple, used to construct the bulk of our log strings. This accepts a list of columns and the type of error, producing a complete log string when requested.

-

- In practice, this is used as follows:

- ```py

- # Store the invalid columns and why they're invalid

- my_invalid_cols = InvalidCols("invalid_cols", ["first_col", "second_col"])

- # Construct the corresponding log string

- my_invalid_cols.construct_log_string()

- ```

-

-??? note "`InvalidColValidator`"

- `InvalidColValidator` houses a series of validation checks to evaluate whether the column(s) contained within either a SQL string or a user's raw input string, are present within the underlying dataframes.

-

- To achieve this, it employs a range of cleaning functions to standardise our column inputs and conducts a series of checks on these cleaned columns. It utilises `InvalidCols` tuples to log any identified invalid columns.

-

- It inherits from our the `SettingsValidator` class.

-

-??? note "`InvalidColumnsLogger`"

- The principal logging class for our invalid column checks.

-

- This class primarily calls our builder functions outlined in `InvalidColValidator`, constructing a series of log strings for output to both the console and the user's log file (if it exists).

-

-To extend the column checks, you simply need to add an additional validation method to the `InvalidColValidator` class. The implementation of this will vary depending on whether you merely need to assess the validity of a single column or columns within a SQL statement.

-

-#### Single Column Checks

-To assess single columns, the `validate_settings_column` should typically be used. This takes in a `setting_id` (analogous to the title you want to give your log string) and a list of columns to be checked.

-

-Should you need more control, the setup for checking a single column by hand is simple.

-

-To perform checks on a single column, you need to:

-

-* Clean your input text

-* Run a lookup of the clean text against the raw input dataframe(s) columns.

-* Construct log string (typically handled by `InvalidCols`)

-

-Cleaning and validation can be performed with a single method called - `clean_and_return_missing_columns`.

-

-See the `validate_uid` and `validate_columns_to_retain` methods for examples of this process in practice.

-

-#### Raw SQL Checks

-For raw SQL statements, you should be able to make use of the `validate_columns_in_sql_strings` method. This takes in a list of SQL strings and spits out a list of `InvalidCols` tuples, depending on the checks you ask it to perform.

-

-Should you need more control, the process is similar to that of the single column case, just with an additional parsing step.

-

-Parsing is handled by `parse_columns_in_sql`, which is found within `splink.parse_sql`. This will spit out a list of column names that were identified.

-

-Note that this is handled by SQLglot, meaning that it's not always 100% accurate. For our purposes though, its flexibility is unparalleled and allows us to more easily and efficiently extract column names.

-

-Once parsed, you can again run a series of lookups against your input dataframes in a loop. See **Single Column Checks** for more info.

-

-As there are limited constructors present within , it should be incredibly lightweight to create a new class to be used ...

diff --git a/docs/dev_guides/settings_validation/extending_settings_validator.md b/docs/dev_guides/settings_validation/extending_settings_validator.md

new file mode 100644

index 0000000000..7eb58336e5

--- /dev/null

+++ b/docs/dev_guides/settings_validation/extending_settings_validator.md

@@ -0,0 +1,187 @@

+## Expanding the Settings Validator

+

+If a validation check is currently missing, you might want to expand the existing validation codebase.

+

+Before adding any code, it's essential to determine whether the checks you want to include fit into any of the general validation categories already in place.

+

+In summary, the following validation checks are currently carried out:

+

+* Verifying that the user's blocking rules and comparison levels have been [imported from the correct library](https://github.com/moj-analytical-services/splink/pull/1579) and contain sufficient information for Splink model usage.

+* [Performing column lookups](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py) to ensure that columns specified in the user's settings dictionary exist within **all** of the user's input dataframes.

+* Various miscellaneous checks designed to generate more informative error messages for the user if they happen to employ Splink in an unintended manner.

+

+If you plan to introduce checks that differ from those currently in place, it's advisable to create a new script within `splink/settings_validation`.

+

+

+

+## Splink Exceptions and Warnings

+

+While working on extending the suite of settings validation tools, it's important to consider how we notify users when they've included invalid settings or features.

+

+Exception handling and warnings should be integrated into your validation functions to either halt the program or inform the user when errors occur, raising informative error messages as needed.

+

+### Warnings in Splink

+

+Warnings should be employed when you want to alert the user that an included setting might lead to unintended consequences, allowing the user to decide if it warrants further action.

+

+This could be applicable in scenarios such as:

+

+* Parsing SQL where the potential for failure or incorrect column parsing exists.

+* Situations where the user is better positioned to determine whether the issue should be treated as an error, like when dealing with exceptionally high values for [probability_two_random_records_match](https://github.com/moj-analytical-services/splink/blob/master/splink/files/settings_jsonschema.json#L29).

+

+Implementing warnings is straightforward and involves creating a logger instance within your script, followed by a warning call.

+

+??? note "Warnings in practice:"

+ ```py

+ import logging

+ logger = logging.getLogger(__name__)

+

+ logger.warning("My warning message")

+ ```

+

+ Which will print:

+

+ > `My warning message`

+

+ to both the console and your log file.
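
As an illustration of the second scenario above, a warning for an unusually high `probability_two_random_records_match` could be sketched as follows. The function name `warn_if_high_match_probability` and the `0.5` threshold are hypothetical, chosen for illustration rather than taken from Splink.

```python
import logging

logger = logging.getLogger(__name__)

# Hypothetical threshold, chosen for illustration only (not a Splink value)
HIGH_PROBABILITY_THRESHOLD = 0.5


def warn_if_high_match_probability(settings: dict) -> bool:
    """Warn, but do not raise, if the prior match probability looks suspiciously high.

    Returns True if a warning was emitted, so callers and tests can react to it.
    """
    prob = settings.get("probability_two_random_records_match")
    if prob is not None and prob > HIGH_PROBABILITY_THRESHOLD:
        logger.warning(
            "probability_two_random_records_match is set to %s. Values this high "
            "are unusual, so please check that this is intended.",
            prob,
        )
        return True
    return False
```

The boolean return value only exists to make the behaviour easy to test; the key point is that the check logs a message and lets the program carry on, leaving the decision to the user.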

+

+### Splink Exceptions

+

+Exceptions should be raised when you want the program to halt due to an unequivocal error.

+

+In addition to the built-in exception types, such as [SyntaxError](https://docs.python.org/3/library/exceptions.html#SyntaxError), we have several Splink-specific exceptions available for use.

+

+These exceptions serve to raise issues specific to Splink or to customise exception behaviour. For instance, you can specify a message prefix by modifying an exception's constructor, as in the [ComparisonSettingsException](https://github.com/moj-analytical-services/splink/blob/f7c155c27ccf3c906c92180411b527a4cfd1111b/splink/exceptions.py#L14).
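
A minimal sketch of the message-prefix pattern is shown below. `ExampleSettingsException` and its prefix text are hypothetical; the sketch mirrors the idea behind `ComparisonSettingsException` rather than reproducing its code.

```python
class ExampleSettingsException(Exception):
    """Hypothetical Splink-style exception that prefixes every message."""

    prefix = "Errors were detected in your settings dictionary:\n"

    def __init__(self, message: str = "") -> None:
        # Prepend the prefix so that str(exc) and tracebacks both show it
        super().__init__(self.prefix + message)


exc = ExampleSettingsException("'comparisons' must be a list")
print(exc)
```

Because the prefix lives in the constructor, every `raise ExampleSettingsException(...)` call site gets consistent wording without repeating it.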

+

+It's also crucial to consider how to inform the user that such behaviour is not permitted. For guidelines on crafting effective error messages, refer to [How to Write Good Error Messages](https://uxplanet.org/how-to-write-good-error-messages-858e4551cd4).

+

+For a comprehensive list of exceptions native to Splink, see [exceptions.py](https://github.com/moj-analytical-services/splink/blob/master/splink/exceptions.py).

+

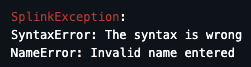

+#### Raising Multiple Exceptions

+

+Raising multiple errors sequentially, without disrupting the program, is a feature we commonly want across the validation steps.

+

+In many cases, it makes sense to wait until all checks have been performed and then report every captured exception to the user in one go.

+

+To enable the logging of multiple errors in a single check, or across multiple checks, an [`ErrorLogger`](https://github.com/moj-analytical-services/splink/blob/settings_validation_refactor_and_improved_logging/splink/exceptions.py#L34) class is available for use.

+

+The `ErrorLogger` operates in a similar way to working with a list, allowing you to add additional errors using the `append` method. Once you've logged all of your errors, you can raise them with the `raise_and_log_all_errors` method.

+

+??? note "`ErrorLogger` in practice"

+ ```py

+ from splink.exceptions import ErrorLogger

+

+ # Create an error logger instance

+ e = ErrorLogger()

+

+ # Log your errors

+ e.append(SyntaxError("The syntax is wrong"))

+ e.append(NameError("Invalid name entered"))

+

+ # Raise your errors

+ e.raise_and_log_all_errors()

+ ```
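
If it helps to picture what happens inside such a class, the sketch below collects exceptions and combines them into a single error. `CollectingErrorLogger`, `CombinedSettingsError` and the message format are hypothetical; this is a simplified illustration that only raises (it does not write to a log file), not Splink's `ErrorLogger` implementation.

```python
from typing import List


class CombinedSettingsError(Exception):
    """Hypothetical exception raised once all collected errors are reported."""


class CollectingErrorLogger:
    """Simplified, hypothetical error collector: append now, raise later."""

    def __init__(self) -> None:
        self.errors: List[Exception] = []

    def append(self, error: Exception) -> None:
        self.errors.append(error)

    def build_message(self) -> str:
        # Number each error and show its type so users can fix them one by one
        lines = [
            f"{i}. {type(err).__name__}: {err}"
            for i, err in enumerate(self.errors, start=1)
        ]
        return "The following errors were identified:\n" + "\n".join(lines)

    def raise_and_log_all_errors(self) -> None:
        # Only raise if something was collected, so callers can always call this
        if self.errors:
            raise CombinedSettingsError(self.build_message())


log = CollectingErrorLogger()
log.append(SyntaxError("The syntax is wrong"))
log.append(NameError("Invalid name entered"))
```

Calling `log.raise_and_log_all_errors()` at this point would raise a single `CombinedSettingsError` listing both problems, while calling it on an empty collector does nothing.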

+

+

+

+

+

+## Expanding our Miscellaneous Checks

+

+Miscellaneous checks should typically be added as standalone functions. These functions can then be integrated into the linker's startup process for validation.

+

+You have considerable flexibility in how you structure these checks. You can place them in a script that corresponds to the specific checks being performed or, if one doesn't already exist, create a new script with a descriptive name.

+

+A prime example of a miscellaneous check is [`validate_dialect`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/valid_types.py#L31), which assesses whether the settings dialect aligns with the linker's dialect.
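
A standalone check in this style could look like the sketch below. It assumes the dialect is stored under a `sql_dialect` key in the settings dictionary; `find_dialect_mismatch` is a hypothetical name, and returning a message (rather than raising) is a design choice that lets the caller either append it to an `ErrorLogger` or raise it immediately.

```python
from typing import Optional


def find_dialect_mismatch(settings: dict, linker_dialect: str) -> Optional[str]:
    """Return an error message if the settings dialect differs from the linker's."""
    settings_dialect = settings.get("sql_dialect")
    # Settings without an explicit dialect are treated as compatible here
    if settings_dialect is None or settings_dialect == linker_dialect:
        return None
    return (
        f"Your settings specify sql_dialect '{settings_dialect}', but you are "
        f"using a '{linker_dialect}' linker. Please update your settings or "
        "use the matching linker."
    )
```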

+

+

+

+## Additional Comparison and Blocking Rule Checks

+

+If your checks pertain to comparisons or blocking rules, most of these checks are currently implemented within the [valid_types.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/valid_types.py) script.

+

+Currently, comparison and blocking rule checks are organised in a modular format.

+

+To expand the current suite of tests, you should:

+

+1. Create a function to inspect the presence of the error you're evaluating.

+2. Define an error message that you intend to add to the `ErrorLogger`.

+3. Integrate these elements into either the [`validate_comparison_levels`](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/valid_types.py#L43) function (or something similar), which appends any detected errors to an `ErrorLogger`.

+4. Finally, work out where this function should live in the setup process of the linker object. Typically, you should look to add these checks before any processing of the settings dictionary is performed.

+

+These steps are likely to change, as we are looking to refactor our settings object.
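
As a rough illustration of the steps above, the sketch below pairs a detection function with an error builder and runs every pair over a list of comparison levels, collecting errors rather than raising them. It assumes levels are plain dictionaries with `sql_condition` and `label_for_charts` keys; the function names and the `LEVEL_CHECKS` registry are hypothetical.

```python
from typing import List


# Step 1: a function that detects one specific problem in a comparison level.
def is_missing_sql_condition(level: dict) -> bool:
    return not level.get("sql_condition")


# Step 2: the error that should be reported when that problem is found.
def missing_sql_condition_error(level: dict) -> Exception:
    label = level.get("label_for_charts", "<unlabelled level>")
    return ValueError(f"Comparison level '{label}' has no 'sql_condition'.")


# Registry of (detector, error builder) pairs, so new checks slot in easily.
LEVEL_CHECKS = [(is_missing_sql_condition, missing_sql_condition_error)]


# Step 3: run every check over every level, collecting errors instead of raising.
def collect_comparison_level_errors(comparison_levels: List[dict]) -> List[Exception]:
    errors = []
    for level in comparison_levels:
        for detect, build_error in LEVEL_CHECKS:
            if detect(level):
                errors.append(build_error(level))
    return errors
```

The returned exceptions could then be passed one by one to `ErrorLogger.append`, so that every problem is reported together once all checks have run.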

+

+

+

+## Checking that columns exist

+

+Should you need to include extra checks to assess the validity of columns supplied by a user, your primary focus should be on the [column_lookups.py](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py) script.

+

+Three classes are currently used to construct the log strings. These can be extended to perform additional column checks.

+

+??? note "`InvalidCols`"

+ `InvalidCols` is a NamedTuple, used to construct the bulk of our log strings. It accepts the type of error and a list of columns, producing a complete log string when requested.

+

+ In practice, this is used as follows:

+ ```py

+ # Store the invalid columns and why they're invalid

+ my_invalid_cols = InvalidCols("invalid_cols", ["first_col", "second_col"])

+ # Construct the corresponding log string

+ my_invalid_cols.construct_log_string()

+ ```

+

+??? note "`InvalidColValidator`"

+ `InvalidColValidator` houses a series of validation checks to evaluate whether the column(s) contained within either a SQL string or a user's raw input string are present within the underlying dataframes.

+

+ To achieve this, it employs a range of cleaning functions to standardise our column inputs and conducts a series of checks on these cleaned columns. It utilises `InvalidCols` tuples to log any identified invalid columns.

+

+ It inherits from the `SettingsValidator` class.

+

+??? note "`InvalidColumnsLogger`"

+ The principal logging class for our invalid column checks.

+

+ This class primarily calls our builder functions outlined in `InvalidColValidator`, constructing a series of log strings for output to both the console and the user's log file (if it exists).
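
To make the `InvalidCols` idea concrete, a NamedTuple with its own log-string method could be sketched as below. `ExampleInvalidCols`, its field names and the wording of each message are hypothetical and are not copied from Splink.

```python
from typing import List, NamedTuple


class ExampleInvalidCols(NamedTuple):
    """Hypothetical stand-in for InvalidCols: the kind of problem, plus the
    columns that have it."""

    invalid_type: str
    invalid_columns: List[str]

    def construct_log_string(self) -> str:
        # Map each error type to a human-readable lead (illustrative wording)
        leads = {
            "invalid_cols": "The following columns are missing from one or more input dataframes",
            "invalid_table_prefix": "The following columns have an invalid table prefix",
        }
        lead = leads.get(self.invalid_type, f"Invalid columns ({self.invalid_type})")
        cols = ", ".join(f"`{c}`" for c in self.invalid_columns)
        return f"{lead}: {cols}"


my_invalid_cols = ExampleInvalidCols("invalid_cols", ["first_col", "second_col"])
```

Using a NamedTuple keeps each result immutable and cheap to create, while the method keeps the message wording in one place.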

+

+

+To extend the column checks, you simply need to add an additional validation method to the [`InvalidColValidator`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L15) class, followed by an extension of the [`InvalidColumnsLogger`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L164).

+

+### A Practical Example of a Column Check

+

+For an example of column checks in practice, see [`validate_uid`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L195).

+

+Here, we call `validate_settings_column`, checking whether the unique ID column submitted by the user is valid. The output of this call yields either an `InvalidCols` tuple, or `None`.

+

+From there, we can use the built-in log constructor [`construct_generic_settings_log_string`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L329C27-L329C27) to construct and print the required logs. Where the output above was `None`, nothing is logged.
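
The pattern described above (a check that returns either a result or `None`, followed by a log step that skips the `None`s) can be sketched independently of Splink. Here `check_setting_columns`, `MissingCols` and `build_settings_logs` are hypothetical, and the input dataframes are represented simply as a mapping from table name to column names.

```python
from typing import Dict, List, NamedTuple, Optional


class MissingCols(NamedTuple):
    """Hypothetical result: which setting failed and which columns were missing."""

    setting_id: str
    missing: List[str]


def check_setting_columns(
    setting_id: str, columns: List[str], input_columns: Dict[str, List[str]]
) -> Optional[MissingCols]:
    """Return MissingCols if any column is absent from *any* input table, else None."""
    missing = [
        col
        for col in columns
        if not all(col in table_cols for table_cols in input_columns.values())
    ]
    return MissingCols(setting_id, missing) if missing else None


def build_settings_logs(results: List[Optional[MissingCols]]) -> List[str]:
    # Checks that returned None produce no log line at all
    return [
        f"{r.setting_id}: not found in every input table: {', '.join(r.missing)}"
        for r in results
        if r is not None
    ]


tables = {
    "df_left": ["unique_id", "first_name", "dob"],
    "df_right": ["unique_id", "first_name"],
}
results = [
    check_setting_columns("unique_id_column_name", ["unique_id"], tables),
    check_setting_columns("additional_columns_to_retain", ["first_name", "dob"], tables),
]
logs = build_settings_logs(results)
```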

+

+If your checks aren't part of the initial settings check (say you want to assess additional columns found in blocking rules supplied at a later stage by the user), you should add a new method to `InvalidColumnsLogger`, similar in functionality to [`construct_output_logs`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L319).

+

+However, not all checks are performed on simple column names. Where you need to check columns inside SQL strings, an additional parsing step is required, outlined below.

+

+### Single Column Checks

+

+To review single columns, [`validate_settings_column`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L144) should be used. This takes in a `setting_id` (analogous to the title you want to give your log string) and a list of columns to be checked.
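
Single column checks usually clean each name before looking it up, as described for `InvalidColValidator`. The sketch below is a simplified, hypothetical cleaner (`clean_column_name`) plus a lookup (`missing_from_any`). The assumption that prefixes look like `l.`/`r.` and suffixes like `_l`/`_r` is made for illustration; Splink's own cleaning helpers live in `settings_validator.py`.

```python
import re
from typing import Dict, List

# Assumed formats, for illustration only
_PREFIX = re.compile(r"^[lr]\.")
_SUFFIX = re.compile(r"_[lr]$")
_QUOTE_CHARS = '"`[]'


def clean_column_name(raw: str) -> str:
    """Strip whitespace, quotes, an `l.`/`r.` prefix and an `_l`/`_r` suffix."""
    name = raw.strip().strip(_QUOTE_CHARS)
    name = _PREFIX.sub("", name)
    # Quotes may also sit after the prefix, e.g. r."dob"
    name = name.strip(_QUOTE_CHARS)
    return _SUFFIX.sub("", name)


def missing_from_any(raw_columns: List[str], input_columns: Dict[str, List[str]]) -> List[str]:
    """Return the raw names whose cleaned form is absent from at least one input table."""
    return [
        raw
        for raw in raw_columns
        if any(clean_column_name(raw) not in cols for cols in input_columns.values())
    ]
```

Note that blindly stripping `_l`/`_r` would also alter a genuine column such as `hand_l`, so a real implementation needs to decide when suffix removal applies.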

+

+A working example of this in practice can be found in the section above.

+

+### Checking Columns in SQL statements

+

+For raw SQL statements, you should make use of the [`validate_columns_in_sql_strings`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L102) method.

+

+This takes in a list of SQL strings and spits out a list of `InvalidCols` tuples, depending on the checks you ask it to perform.

+

+Should you need more control, the process is similar to that of the single column case, just with an additional parsing step.

+

+Parsing is handled by [`parse_columns_in_sql`](https://github.com/moj-analytical-services/splink/blob/master/splink/parse_sql.py#L45). This returns a list of the column names identified by SQLGlot.

+

+> Note that, because parsing relies on SQLGlot, it is not always 100% accurate. For our purposes, though, its flexibility makes extracting column names far easier and more efficient.

+

+Once your columns have been parsed, you can again run a series of lookups against your input dataframe(s). This is identical to the steps outlined in the **Single Column Checks** section.

+

+You may also wish to perform additional checks on the columns, to assess whether they contain valid prefixes, suffixes or some other property.

+

+Additional checks can be passed to [`validate_columns_in_sql_strings`](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L102) and should be specified as methods in the `InvalidColValidator` class.

+

+See [validate_blocking_rules](https://github.com/moj-analytical-services/splink/blob/master/splink/settings_validation/column_lookups.py#L209) for a practical example where we loop through each blocking rule, parse it and then assess whether:

+

+1. It contains a valid list of columns.

+2. Each of its columns has a valid table prefix.
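
Splink parses blocking rules with SQLGlot via `parse_columns_in_sql`. To keep the sketch below dependency-free, it swaps that for a deliberately naive regular expression that only recognises qualified identifiers such as `l.first_name`; unqualified or quoted columns are ignored. `check_blocking_rule` and its result keys are hypothetical, and the sketch assumes, as in Splink's blocking rule syntax, that the only valid prefixes are `l` and `r`.

```python
import re
from typing import Dict, List

# Naive stand-in for SQLGlot: matches `prefix.column` pairs only.
_QUALIFIED_COLUMN = re.compile(r"\b([A-Za-z_]\w*)\.([A-Za-z_]\w*)\b")

VALID_PREFIXES = {"l", "r"}


def check_blocking_rule(rule: str, input_columns: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return columns with an invalid prefix, and columns missing from any input table."""
    bad_prefix, missing = [], []
    for prefix, column in _QUALIFIED_COLUMN.findall(rule):
        qualified = f"{prefix}.{column}"
        # Check 2: the table prefix must be l or r
        if prefix not in VALID_PREFIXES:
            bad_prefix.append(qualified)
        # Check 1: the column must exist in every input table
        if any(column not in cols for cols in input_columns.values()):
            missing.append(qualified)
    return {"invalid_table_prefix": bad_prefix, "missing_columns": missing}
```

For example, the rule `l.first_name = r.first_name and l.dob = x.dob and l.surnmae = r.surnmae` would flag `x.dob` for its prefix, and both `surnmae` references as missing columns.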

diff --git a/docs/dev_guides/settings_validation/settings_validation_overview.md b/docs/dev_guides/settings_validation/settings_validation_overview.md

new file mode 100644

index 0000000000..4900d78848

--- /dev/null

+++ b/docs/dev_guides/settings_validation/settings_validation_overview.md

@@ -0,0 +1,55 @@

+## Settings Validation

+

+A common issue within Splink is users providing invalid settings dictionaries. To prevent this, the settings validator scans through a settings dictionary and provides user-friendly feedback on what needs to be fixed.

+

+At a high level, this includes:

+

+1. Assessing the structure of the settings dictionary. See the [Settings Schema Validation](#settings-schema-validation) section.

+2. Assessing the contents of the settings dictionary. See the [Settings Validator](#settings-validator) section.

+

+

+

+## Settings Schema Validation

+

+Our custom settings schema can be found within [settings_jsonschema.json](https://github.com/moj-analytical-services/splink/blob/master/splink/files/settings_jsonschema.json).

+

+This is a JSON file outlining the keys, data types and values expected in a user's settings dictionary. Where the supplied settings deviate from this schema, an error is thrown.

+

+[Schema validation](https://github.com/moj-analytical-services/splink/blob/master/splink/validate_jsonschema.py) is currently performed inside the [settings.py](https://github.com/moj-analytical-services/splink/blob/master/splink/settings.py#L44C17-L44C17) script.

+

+You can modify the schema by manually editing the [json schema](https://github.com/moj-analytical-services/splink/blob/master/splink/files/settings_jsonschema.json).

+

+Amongst other things, modifications can be used to:

+

+* Set or remove default values for schema keys.

+* Set the required data type for a given key.

+* Expand or refine previous titles and descriptions to help with clarity.

+

+Any updates you wish to make to the schema should be discussed with the wider team, to ensure they won't break backwards compatibility and make sense as a design decision.

+

+Detailed information on the arguments that can be supplied to the JSON schema can be found in the [JSON schema documentation](https://json-schema.org/learn/getting-started-step-by-step).

+

+

+

+## Settings Validator

+

+The settings validation code currently resides in the [settings validation](https://github.com/moj-analytical-services/splink/tree/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation) directory of Splink. This code is responsible for executing a secondary series of tests to determine whether all values within the settings dictionary will generate valid SQL.

+

+Numerous inputs pass our initial schema checks but go on to break other parts of the codebase. These breaks are typically caused by invalid SQL being constructed and passed to the database engine, [commonly resulting in uninformative errors](https://github.com/moj-analytical-services/splink/issues/1362).

+

+Frequently encountered problems include:

+

+* Usage of invalid column names. For example, specifying a [`unique_id_column_name`](https://github.com/moj-analytical-services/splink/blob/settings_validation_docs/splink/files/settings_jsonschema.json#L61) that doesn't exist in the underlying dataframe(s). Such names satisfy the schema requirements as long as they are strings.

+* Users not updating default values in the settings schema, even when these values are inappropriate for their provided input dataframes.

+* Importing comparisons and blocking rules from incorrect sections of the codebase, or using an inappropriate data type (comparison level vs. comparison).

+* Using Splink for an invalid form of linkage. See the [following discussion](https://github.com/moj-analytical-services/splink/issues/1362).

+

+Currently, the [settings validation](https://github.com/moj-analytical-services/splink/tree/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation) scripts are set up in a modular fashion, allowing each to inherit the checks it needs.

+

+The folder contains three scripts, each of which inspects the settings dictionary at a different stage of its journey:

+

+* [valid_types.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/valid_types.py) - This script includes various miscellaneous checks for comparison levels, blocking rules, and linker objects. These checks are primarily performed within settings.py.

+* [settings_validator.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/settings_validator.py) - This script includes the core `SettingsValidator` class and contains a series of methods that retrieve information on fields within the user's settings dictionary that contain information on columns to be used in training and prediction. Additionally, it provides supplementary cleaning functions to assist in the removal of quotes, prefixes, and suffixes that may be present in a given column name.

+* [column_lookups.py](https://github.com/moj-analytical-services/splink/blob/32e66db1c8c0bed54682daf9a6fea8ef4ed79ab4/splink/settings_validation/column_lookups.py) - This script contains helper functions that generate a series of log strings outlining invalid columns identified within your settings dictionary. It primarily consists of methods that run validation checks on either raw SQL or input columns and assess their presence in **all** dataframes supplied by the user.

+

+For information on expanding the range of checks available to the validator, see [Extending the Settings Validator](./extending_settings_validator.md).

diff --git a/docs/img/settings_validation/error_logger.png b/docs/img/settings_validation/error_logger.png

new file mode 100644

index 0000000000..a5cf2a785f

Binary files /dev/null and b/docs/img/settings_validation/error_logger.png differ

diff --git a/mkdocs.yml b/mkdocs.yml

index 33a41580ca..8d722d2ebc 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -234,7 +234,9 @@ nav:

- Understanding and editing charts: "dev_guides/charts/understanding_and_editing_charts.md"

- Building new charts: "dev_guides/charts/building_charts.ipynb"

- User-Defined Functions: "dev_guides/udfs.md"

- - Settings Validation: "dev_guides/settings_validation.md"

+ - Settings Validation:

+ - Settings Validation Overview: "dev_guides/settings_validation/settings_validation_overview.md"

+ - Extending the Settings Validator: "dev_guides/settings_validation/extending_settings_validator.md"

- Blog:

- blog/index.md

extra_css: