diff --git a/tutorials/W2D1_Macrocircuits/W2D1_Tutorial1.ipynb b/tutorials/W2D1_Macrocircuits/W2D1_Tutorial1.ipynb

index 769cc497b..b099c0d2d 100644

--- a/tutorials/W2D1_Macrocircuits/W2D1_Tutorial1.ipynb

+++ b/tutorials/W2D1_Macrocircuits/W2D1_Tutorial1.ipynb

@@ -580,7 +580,7 @@

"\n",

" return nn.Sequential(*layers)\n",

"\n",

- "net = make_MLP(10, 3, 2)"

+ "net = make_MLP(n_in = 10, W = 3, D = 2)"

]

},

{

@@ -710,7 +710,7 @@

" nn.init.normal_(param, std = ...)\n",

"\n",

"initialize_layers(net, 1)\n",

- "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.6093441247940063, err_msg = \"Expected value of parameter is different!\")"

+ "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.609, err_msg = \"Expected value of parameter is different!\", atol = 1e-3)"

]

},

{

@@ -737,7 +737,7 @@

" nn.init.normal_(param, std = sigma/np.sqrt(n_in))\n",

"\n",

"initialize_layers(net, 1)\n",

- "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.6093441247940063, err_msg = \"Expected value of parameter is different!\")"

+ "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.609, err_msg = \"Expected value of parameter is different!\", atol = 1e-3)"

]

},

{

@@ -803,7 +803,7 @@

" return X, ...\n",

"\n",

"X, y = make_data(net, 10, 10000000)\n",

- "np.testing.assert_allclose(X[0][0].item(), 1.9269152879714966, err_msg = \"Expected value of data is different!\")"

+ "np.testing.assert_allclose(X[0][0].item(), 1.927, err_msg = \"Expected value of data is different!\", atol = 1e-3)"

]

},

{

@@ -835,7 +835,7 @@

" return X, y\n",

"\n",

"X, y = make_data(net, 10, 10000000)\n",

- "np.testing.assert_allclose(X[0][0].item(), 1.9269152879714966, err_msg = \"Expected value of data is different!\")"

+ "np.testing.assert_allclose(X[0][0].item(), 1.927, err_msg = \"Expected value of data is different!\", atol = 1e-3)"

]

},

{

@@ -916,7 +916,7 @@

" return Es\n",

"\n",

"Es = train_model(net, X, y, 10, 1e-3)\n",

- "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{

@@ -964,7 +964,7 @@

" return Es\n",

"\n",

"Es = train_model(net, X, y, 10, 1e-3)\n",

- "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{

@@ -1037,7 +1037,7 @@

" return loss\n",

"\n",

"loss = compute_loss(net, X, y)\n",

- "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{

@@ -1070,7 +1070,7 @@

" return loss\n",

"\n",

"loss = compute_loss(net, X, y)\n",

- "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{
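The hunks above replace exact reference values with rounded ones plus `atol = 1e-3`. `np.testing.assert_allclose` passes when `|actual - desired| <= atol + rtol * |desired|`, with defaults `rtol=1e-7` and `atol=0`. When the desired value is `0.0` and `atol` is left at its default, the allowed tolerance is zero, so any nonzero loss fails. The sketch below shows this behavior. It hard-codes the old reference values from this diff as stand-ins for the outputs of `initialize_layers` and `make_data`; `tiny_loss` is a hypothetical value.

```python
import numpy as np

# Old exact reference values from the diff, used here as sample "actual" values.
param_value = 0.6093441247940063
data_value = 1.9269152879714966

# Rounded expectations pass: |actual - desired| <= atol + rtol * |desired|.
np.testing.assert_allclose(param_value, 0.609, atol=1e-3)
np.testing.assert_allclose(data_value, 1.927, atol=1e-3)

# Without atol, a desired value of 0.0 gives a tolerance of rtol * 0 = 0,
# so even a tiny nonzero loss (hypothetical value) is rejected.
tiny_loss = 1e-6
try:
    np.testing.assert_allclose(tiny_loss, 0.0)
    strict_passed = True
except AssertionError:
    strict_passed = False

# With atol = 1e-3 the same loss is accepted.
np.testing.assert_allclose(tiny_loss, 0.0, atol=1e-3)

print(strict_passed)  # False
```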

diff --git a/tutorials/W2D1_Macrocircuits/W2D1_Tutorial2.ipynb b/tutorials/W2D1_Macrocircuits/W2D1_Tutorial2.ipynb

index b2c9da344..936877ab5 100644

--- a/tutorials/W2D1_Macrocircuits/W2D1_Tutorial2.ipynb

+++ b/tutorials/W2D1_Macrocircuits/W2D1_Tutorial2.ipynb

@@ -1197,7 +1197,7 @@

" Outputs:\n",

" - (float): mean error for train data.\n",

" \"\"\"\n",

- " return np.mean(...)\n",

+ " return np.mean(np.array([fit_relu(..., ..., ..., ..., n_hidden=n_hidden, reg = reg)[1] for _ in range(n_reps)]))\n",

"\n",

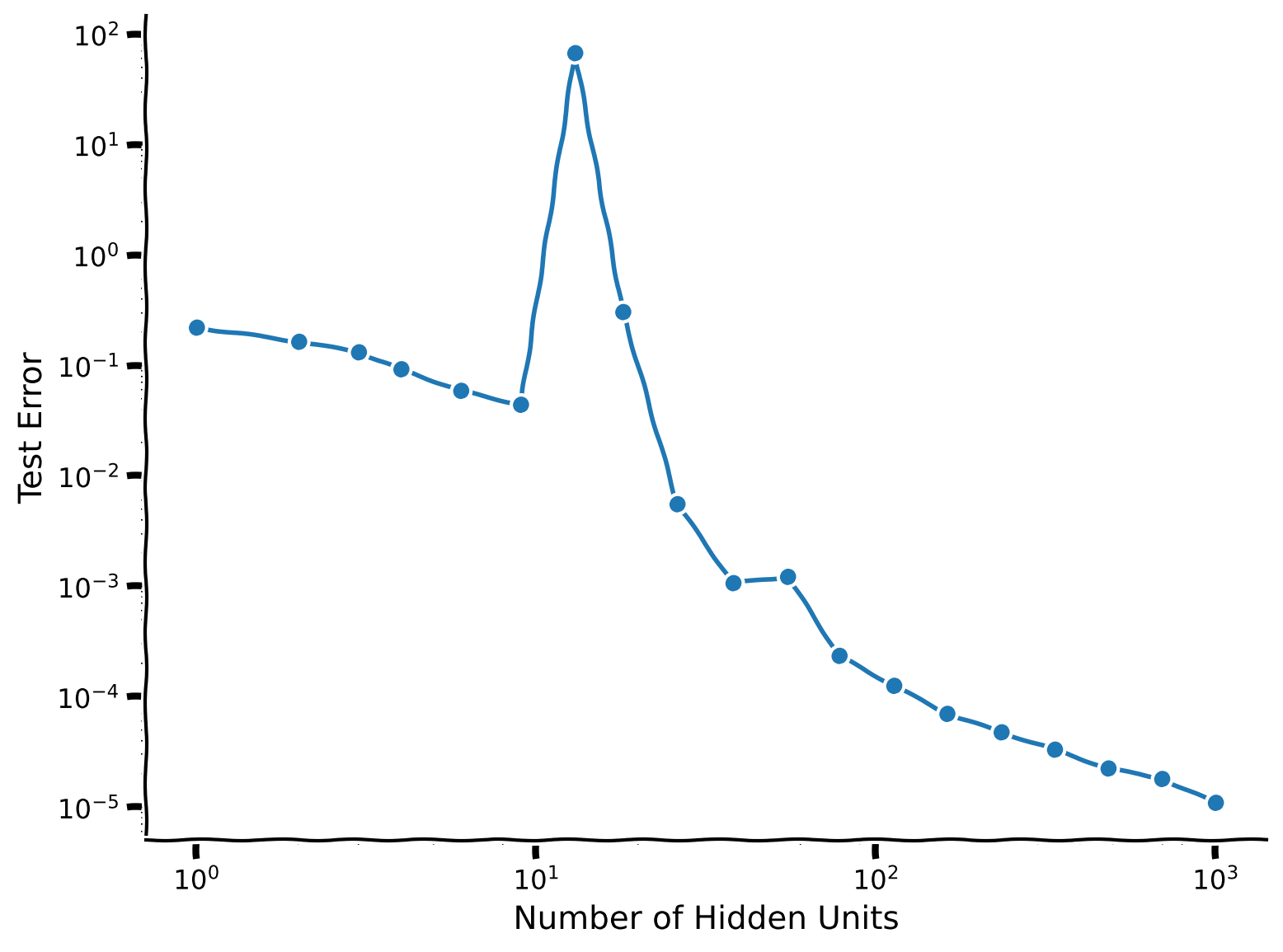

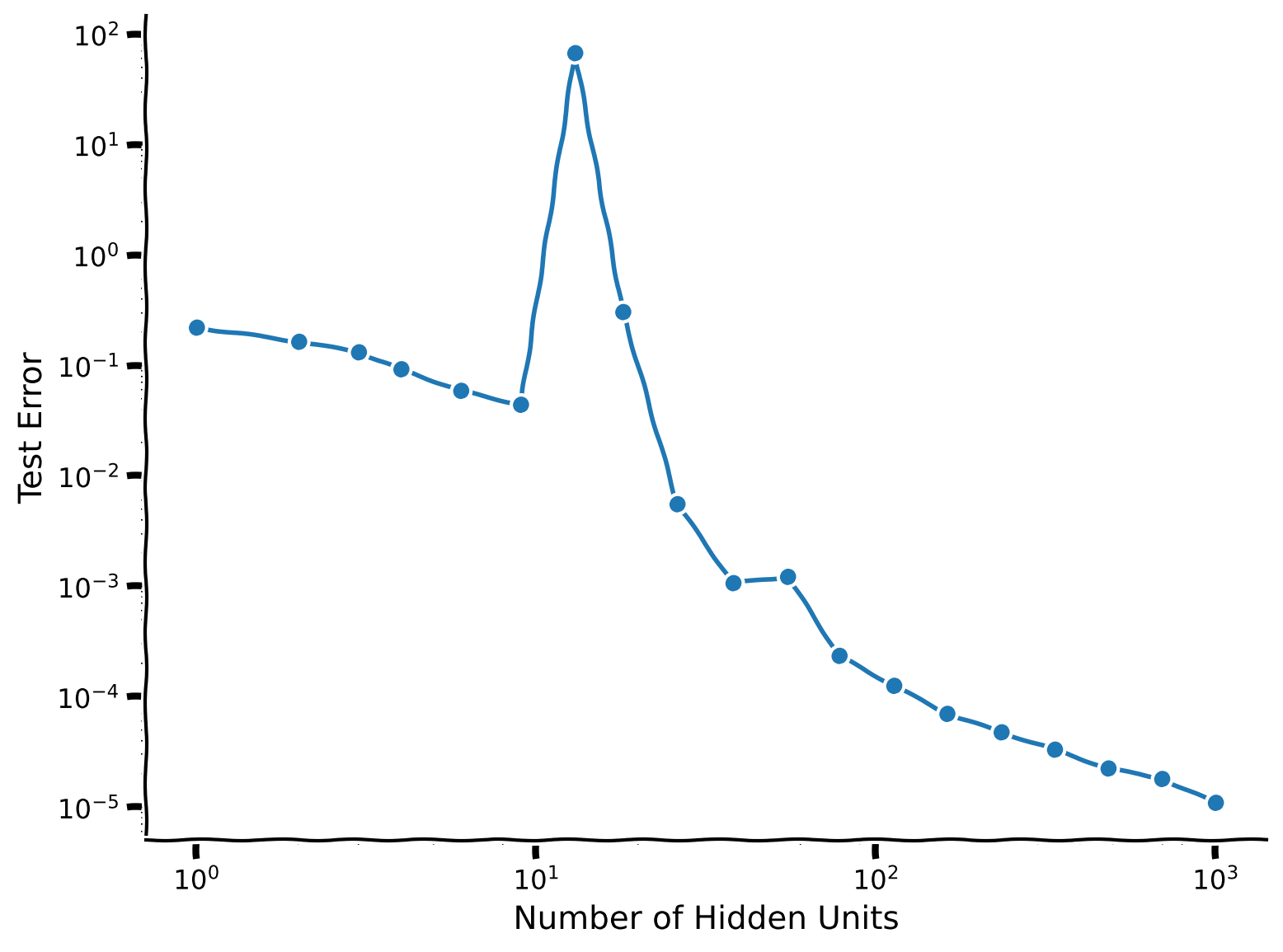

"test_errs = [sweep_test(x_train, y_train, x_test, y_test, n_hidden=..., n_reps=100, reg = 0.0) for n_hid in ...]\n",

"\n",

@@ -1238,7 +1238,7 @@

" Outputs:\n",

" - (float): mean error for train data.\n",

" \"\"\"\n",

- " return np.mean(np.array([fit_relu(x_train, y_train, x_test, y_test, n_hidden=n_hidden, reg = reg)[1] for i in range(n_reps)]))\n",

+ " return np.mean(np.array([fit_relu(x_train, y_train, x_test, y_test, n_hidden=n_hidden, reg = reg)[1] for _ in range(n_reps)]))\n",

"\n",

"test_errs = [sweep_test(x_train, y_train, x_test, y_test, n_hidden=n_hid, n_reps=100, reg = 0.0) for n_hid in n_hids]\n",

"\n",

diff --git a/tutorials/W2D1_Macrocircuits/W2D1_Tutorial3.ipynb b/tutorials/W2D1_Macrocircuits/W2D1_Tutorial3.ipynb

index 3a14ad768..1bd3e0e82 100644

--- a/tutorials/W2D1_Macrocircuits/W2D1_Tutorial3.ipynb

+++ b/tutorials/W2D1_Macrocircuits/W2D1_Tutorial3.ipynb

@@ -2312,6 +2312,23 @@

"1. Is there any difference between the trajectories for the modular and holistic agents? If so, what does it imply?"

]

},

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "execution": {}

+ },

+ "outputs": [],

+ "source": [

+ "#to_remove explanation\n",

+ "\n",

+ "\"\"\"\n",

+ "Discussion: Is there any difference between the trajectories for the modular and holistic agents? If so, what does it imply?\n",

+ "\n",

+ "The holistic agent's trajectory is longer and more curved than the modular agent's, suggesting that the modular agent's trajectory is closer to optimal. With a discount factor smaller than 1, rewards received later are worth less, so the RL objective favors trajectories that are as efficient (involving as few steps) as possible.\n",

+ "\"\"\""

+ ]

+ },

{

"cell_type": "code",

"execution_count": null,

diff --git a/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial1.ipynb b/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial1.ipynb

index 93e88f1fe..85cc81484 100644

--- a/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial1.ipynb

+++ b/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial1.ipynb

@@ -580,7 +580,7 @@

"\n",

" return nn.Sequential(*layers)\n",

"\n",

- "net = make_MLP(10, 3, 2)"

+ "net = make_MLP(n_in = 10, W = 3, D = 2)"

]

},

{

@@ -712,7 +712,7 @@

" nn.init.normal_(param, std = ...)\n",

"\n",

"initialize_layers(net, 1)\n",

- "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.6093441247940063, err_msg = \"Expected value of parameter is different!\")\n",

+ "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.609, err_msg = \"Expected value of parameter is different!\", atol = 1e-3)\n",

"\n",

"```"

]

@@ -741,7 +741,7 @@

" nn.init.normal_(param, std = sigma/np.sqrt(n_in))\n",

"\n",

"initialize_layers(net, 1)\n",

- "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.6093441247940063, err_msg = \"Expected value of parameter is different!\")"

+ "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.609, err_msg = \"Expected value of parameter is different!\", atol = 1e-3)"

]

},

{

@@ -807,7 +807,7 @@

" return X, ...\n",

"\n",

"X, y = make_data(net, 10, 10000000)\n",

- "np.testing.assert_allclose(X[0][0].item(), 1.9269152879714966, err_msg = \"Expected value of data is different!\")\n",

+ "np.testing.assert_allclose(X[0][0].item(), 1.927, err_msg = \"Expected value of data is different!\", atol = 1e-3)\n",

"\n",

"```"

]

@@ -841,7 +841,7 @@

" return X, y\n",

"\n",

"X, y = make_data(net, 10, 10000000)\n",

- "np.testing.assert_allclose(X[0][0].item(), 1.9269152879714966, err_msg = \"Expected value of data is different!\")"

+ "np.testing.assert_allclose(X[0][0].item(), 1.927, err_msg = \"Expected value of data is different!\", atol = 1e-3)"

]

},

{

@@ -922,7 +922,7 @@

" return Es\n",

"\n",

"Es = train_model(net, X, y, 10, 1e-3)\n",

- "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\")\n",

+ "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)\n",

"\n",

"```"

]

@@ -972,7 +972,7 @@

" return Es\n",

"\n",

"Es = train_model(net, X, y, 10, 1e-3)\n",

- "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{

@@ -1045,7 +1045,7 @@

" return loss\n",

"\n",

"loss = compute_loss(net, X, y)\n",

- "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\")\n",

+ "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)\n",

"\n",

"```"

]

@@ -1080,7 +1080,7 @@

" return loss\n",

"\n",

"loss = compute_loss(net, X, y)\n",

- "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{

diff --git a/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial2.ipynb b/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial2.ipynb

index 84ef976c0..e31b18a6b 100644

--- a/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial2.ipynb

+++ b/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial2.ipynb

@@ -1201,7 +1201,7 @@

" Outputs:\n",

" - (float): mean error for train data.\n",

" \"\"\"\n",

- " return np.mean(...)\n",

+ " return np.mean(np.array([fit_relu(..., ..., ..., ..., n_hidden=n_hidden, reg = reg)[1] for _ in range(n_reps)]))\n",

"\n",

"test_errs = [sweep_test(x_train, y_train, x_test, y_test, n_hidden=..., n_reps=100, reg = 0.0) for n_hid in ...]\n",

"\n",

@@ -1244,7 +1244,7 @@

" Outputs:\n",

" - (float): mean error for train data.\n",

" \"\"\"\n",

- " return np.mean(np.array([fit_relu(x_train, y_train, x_test, y_test, n_hidden=n_hidden, reg = reg)[1] for i in range(n_reps)]))\n",

+ " return np.mean(np.array([fit_relu(x_train, y_train, x_test, y_test, n_hidden=n_hidden, reg = reg)[1] for _ in range(n_reps)]))\n",

"\n",

"test_errs = [sweep_test(x_train, y_train, x_test, y_test, n_hidden=n_hid, n_reps=100, reg = 0.0) for n_hid in n_hids]\n",

"\n",

diff --git a/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial3.ipynb b/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial3.ipynb

index c18b73ee1..651b867d4 100644

--- a/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial3.ipynb

+++ b/tutorials/W2D1_Macrocircuits/instructor/W2D1_Tutorial3.ipynb

@@ -2322,6 +2322,23 @@

"1. Is there any difference between the trajectories for the modular and holistic agents? If so, what does it imply?"

]

},

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "execution": {}

+ },

+ "outputs": [],

+ "source": [

+ "#to_remove explanation\n",

+ "\n",

+ "\"\"\"\n",

+ "Discussion: Is there any difference between the trajectories for the modular and holistic agents? If so, what does it imply?\n",

+ "\n",

+ "The holistic agent's trajectory is longer and more curved than the modular agent's, suggesting that the modular agent's trajectory is closer to optimal. With a discount factor smaller than 1, rewards received later are worth less, so the RL objective favors trajectories that are as efficient (involving as few steps) as possible.\n",

+ "\"\"\""

+ ]

+ },

{

"cell_type": "code",

"execution_count": null,

diff --git a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_d81fba1b.py b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6b3d3e34.py

similarity index 83%

rename from tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_d81fba1b.py

rename to tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6b3d3e34.py

index 5949e95fb..426ef320f 100644

--- a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_d81fba1b.py

+++ b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6b3d3e34.py

@@ -18,4 +18,4 @@ def make_data(net, n_in, n_examples):

return X, y

X, y = make_data(net, 10, 10000000)

-np.testing.assert_allclose(X[0][0].item(), 1.9269152879714966, err_msg = "Expected value of data is different!")

\ No newline at end of file

+np.testing.assert_allclose(X[0][0].item(), 1.927, err_msg = "Expected value of data is different!", atol = 1e-3)

\ No newline at end of file

diff --git a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_4929cd6d.py b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6fa930a8.py

similarity index 93%

rename from tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_4929cd6d.py

rename to tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6fa930a8.py

index 13f4bcfe6..2303263a2 100644

--- a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_4929cd6d.py

+++ b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6fa930a8.py

@@ -19,4 +19,4 @@ def compute_loss(net, X, y):

return loss

loss = compute_loss(net, X, y)

-np.testing.assert_allclose(loss, 0.0, err_msg = "Expected value of loss is different!")

\ No newline at end of file

+np.testing.assert_allclose(loss, 0.0, err_msg = "Expected value of loss is different!", atol = 1e-3)

\ No newline at end of file

diff --git a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_a2d6d2f1.py b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_8c945e68.py

similarity index 87%

rename from tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_a2d6d2f1.py

rename to tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_8c945e68.py

index 13fafcc00..86af18919 100644

--- a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_a2d6d2f1.py

+++ b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_8c945e68.py

@@ -13,4 +13,4 @@ def initialize_layers(net,sigma):

nn.init.normal_(param, std = sigma/np.sqrt(n_in))

initialize_layers(net, 1)

-np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.6093441247940063, err_msg = "Expected value of parameter is different!")

\ No newline at end of file

+np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.609, err_msg = "Expected value of parameter is different!", atol = 1e-3)

\ No newline at end of file

diff --git a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_29135326.py b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_c3274cd4.py

similarity index 96%

rename from tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_29135326.py

rename to tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_c3274cd4.py

index d0b4dce22..22d8a50b8 100644

--- a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_29135326.py

+++ b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_c3274cd4.py

@@ -34,4 +34,4 @@ def train_model(net, X, y, n_epochs, lr, progressbar=True):

return Es

Es = train_model(net, X, y, 10, 1e-3)

-np.testing.assert_allclose(Es[0], 0.0, err_msg = "Expected value of loss is different!")

\ No newline at end of file

+np.testing.assert_allclose(Es[0], 0.0, err_msg = "Expected value of loss is different!", atol = 1e-3)

\ No newline at end of file

diff --git a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_e6df4a06.py b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_7717ab4f.py

similarity index 94%

rename from tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_e6df4a06.py

rename to tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_7717ab4f.py

index 5bcc9ad74..f2093d87b 100644

--- a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_e6df4a06.py

+++ b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_7717ab4f.py

@@ -18,7 +18,7 @@ def sweep_test(x_train, y_train, x_test, y_test, n_hidden = 10, n_reps = 100, re

Outputs:

- (float): mean error for train data.

"""

- return np.mean(np.array([fit_relu(x_train, y_train, x_test, y_test, n_hidden=n_hidden, reg = reg)[1] for i in range(n_reps)]))

+ return np.mean(np.array([fit_relu(x_train, y_train, x_test, y_test, n_hidden=n_hidden, reg = reg)[1] for _ in range(n_reps)]))

test_errs = [sweep_test(x_train, y_train, x_test, y_test, n_hidden=n_hid, n_reps=100, reg = 0.0) for n_hid in n_hids]
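The `sweep_test` change only renames the unused loop variable to `_`. The function itself averages one error value over `n_reps` independent fits. The sketch below isolates that pattern. `fit_model` and `sweep_test_sketch` are hypothetical stand-ins for `fit_relu` and `sweep_test`, and the random errors are invented. The sketch also assumes that index `[1]` is the test error, as the name `test_errs` suggests.

```python
import numpy as np

rng = np.random.default_rng(0)

def fit_model(n_hidden):
    """Hypothetical stand-in for fit_relu: returns (train_error, test_error)."""
    # Noisy errors so that repeated fits differ, as with random initialization.
    train_err = 1.0 / n_hidden + 0.01 * rng.standard_normal()
    test_err = 1.0 / n_hidden + 0.1 + 0.05 * rng.standard_normal()
    return train_err, test_err

def sweep_test_sketch(n_hidden, n_reps=100):
    # In this sketch, index [1] picks the test error; `_` marks the loop counter as unused.
    return np.mean(np.array([fit_model(n_hidden)[1] for _ in range(n_reps)]))

n_hids = [1, 10, 100]
test_errs = [sweep_test_sketch(n_hid) for n_hid in n_hids]
print(test_errs)
```

Averaging over repetitions smooths out the run-to-run variation caused by random initialization. This makes the resulting error curve over `n_hids` easier to read.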

diff --git a/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial3_Solution_02426ac5.py b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial3_Solution_02426ac5.py

new file mode 100644

index 000000000..1cca0d084

--- /dev/null

+++ b/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial3_Solution_02426ac5.py

@@ -0,0 +1,6 @@

+

+"""

+Discussion: Is there any difference between the trajectories for the modular and holistic agents? If so, what does it imply?

+

+The holistic agent's trajectory is longer and more curved than the modular agent's, suggesting that the modular agent's trajectory is closer to optimal. With a discount factor smaller than 1, rewards received later are worth less, so the RL objective favors trajectories that are as efficient (involving as few steps) as possible.

+"""

\ No newline at end of file
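The discussion answer relies on discounting. A reward collected at step `t` contributes `gamma**t` times its value to the return, so when `gamma < 1` the same reward is worth more if it arrives sooner. The worked sketch below makes this concrete. It assumes a single reward on the final step and no other costs. The value `gamma = 0.97` and the step counts are illustrative choices, not the tutorial's settings.

```python
def discounted_return(n_steps, gamma=0.97, reward=1.0):
    """Return of an episode that yields a single reward on its final step."""
    # A reward r collected at step t (counting from 0) contributes gamma**t * r.
    return gamma ** (n_steps - 1) * reward

# Shorter, straighter path vs. a longer, more curved one (illustrative step counts).
short_path = discounted_return(n_steps=10)
long_path = discounted_return(n_steps=15)
print(short_path > long_path)  # True
```

With `gamma = 1.0` both paths would have the same return. This is why the argument needs a discount factor strictly smaller than 1.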

diff --git a/tutorials/W2D1_Macrocircuits/static/W2D1_Tutorial2_Solution_e6df4a06_0.png b/tutorials/W2D1_Macrocircuits/static/W2D1_Tutorial2_Solution_7717ab4f_0.png

similarity index 100%

rename from tutorials/W2D1_Macrocircuits/static/W2D1_Tutorial2_Solution_e6df4a06_0.png

rename to tutorials/W2D1_Macrocircuits/static/W2D1_Tutorial2_Solution_7717ab4f_0.png

diff --git a/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial1.ipynb b/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial1.ipynb

index f49719808..e2f5481c9 100644

--- a/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial1.ipynb

+++ b/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial1.ipynb

@@ -580,7 +580,7 @@

"\n",

" return nn.Sequential(*layers)\n",

"\n",

- "net = make_MLP(10, 3, 2)"

+ "net = make_MLP(n_in = 10, W = 3, D = 2)"

]

},

{

@@ -691,7 +691,7 @@

" nn.init.normal_(param, std = ...)\n",

"\n",

"initialize_layers(net, 1)\n",

- "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.6093441247940063, err_msg = \"Expected value of parameter is different!\")"

+ "np.testing.assert_allclose(next(net.parameters())[0][0].item(), 0.609, err_msg = \"Expected value of parameter is different!\", atol = 1e-3)"

]

},

{

@@ -701,7 +701,7 @@

"execution": {}

},

"source": [

- "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_a2d6d2f1.py)\n",

+ "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_8c945e68.py)\n",

"\n"

]

},

@@ -768,7 +768,7 @@

" return X, ...\n",

"\n",

"X, y = make_data(net, 10, 10000000)\n",

- "np.testing.assert_allclose(X[0][0].item(), 1.9269152879714966, err_msg = \"Expected value of data is different!\")"

+ "np.testing.assert_allclose(X[0][0].item(), 1.927, err_msg = \"Expected value of data is different!\", atol = 1e-3)"

]

},

{

@@ -778,7 +778,7 @@

"execution": {}

},

"source": [

- "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_d81fba1b.py)\n",

+ "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6b3d3e34.py)\n",

"\n"

]

},

@@ -860,7 +860,7 @@

" return Es\n",

"\n",

"Es = train_model(net, X, y, 10, 1e-3)\n",

- "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(Es[0], 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{

@@ -870,7 +870,7 @@

"execution": {}

},

"source": [

- "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_29135326.py)\n",

+ "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_c3274cd4.py)\n",

"\n"

]

},

@@ -937,7 +937,7 @@

" return loss\n",

"\n",

"loss = compute_loss(net, X, y)\n",

- "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\")"

+ "np.testing.assert_allclose(loss, 0.0, err_msg = \"Expected value of loss is different!\", atol = 1e-3)"

]

},

{

@@ -947,7 +947,7 @@

"execution": {}

},

"source": [

- "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_4929cd6d.py)\n",

+ "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial1_Solution_6fa930a8.py)\n",

"\n"

]

},

diff --git a/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial2.ipynb b/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial2.ipynb

index ace99ce6f..fbadf8795 100644

--- a/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial2.ipynb

+++ b/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial2.ipynb

@@ -1112,7 +1112,7 @@

" Outputs:\n",

" - (float): mean error for train data.\n",

" \"\"\"\n",

- " return np.mean(...)\n",

+ " return np.mean(np.array([fit_relu(..., ..., ..., ..., n_hidden=n_hidden, reg = reg)[1] for _ in range(n_reps)]))\n",

"\n",

"test_errs = [sweep_test(x_train, y_train, x_test, y_test, n_hidden=..., n_reps=100, reg = 0.0) for n_hid in ...]\n",

"\n",

@@ -1131,11 +1131,11 @@

"execution": {}

},

"source": [

- "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_e6df4a06.py)\n",

+ "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial2_Solution_7717ab4f.py)\n",

"\n",

"*Example output:*\n",

"\n",

- " \n",

+ "\n",

+ " \n",

"\n"

]

},

diff --git a/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial3.ipynb b/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial3.ipynb

index bfdec6ae0..96043c6af 100644

--- a/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial3.ipynb

+++ b/tutorials/W2D1_Macrocircuits/student/W2D1_Tutorial3.ipynb

@@ -1972,6 +1972,17 @@

"1. Is there any difference between the trajectories for the modular and holistic agents? If so, what does it imply?"

]

},

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "colab_type": "text",

+ "execution": {}

+ },

+ "source": [

+ "[*Click for solution*](https://github.com/neuromatch/NeuroAI_Course/tree/main/tutorials/W2D1_Macrocircuits/solutions/W2D1_Tutorial3_Solution_02426ac5.py)\n",

+ "\n"

+ ]

+ },

{

"cell_type": "code",

"execution_count": null,
