diff --git a/.github/workflows/docs.yaml b/.github/workflows/docs.yaml

new file mode 100644

index 00000000..2d540ba1

--- /dev/null

+++ b/.github/workflows/docs.yaml

@@ -0,0 +1,25 @@

+name: docs

+

+on:

+ push:

+ branches:

+ - main

+permissions:

+ contents: write

+jobs:

+ deploy:

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v4

+ - uses: actions/setup-python@v4

+ with:

+ python-version: "3.10"

+ - run: echo "cache_id=$(date --utc '+%V')" >> $GITHUB_ENV

+ - uses: actions/cache@v3

+ with:

+ key: mkdocs-material-${{ env.cache_id }}

+ path: .cache

+ restore-keys: |

+ mkdocs-material-

+ - run: pip install .[dev]

+ - run: mkdocs gh-deploy --force
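The docs workflow rotates the MkDocs Material cache weekly. `date --utc '+%V'` prints the zero-padded ISO 8601 week number, which becomes part of the `actions/cache` key. `restore-keys` then lets the first build of a new week start from the most recent older `mkdocs-material-` entry. For illustration only, here is the same key derivation in Python (the function name is hypothetical):

```python
from datetime import date


def weekly_cache_key(day: date, prefix: str = "mkdocs-material-") -> str:
    """Build the cache key the workflow derives from `date --utc '+%V'`.

    `%V` is the ISO 8601 week number (weeks start on Monday), zero-padded to 01-53.
    """
    iso_week = day.isocalendar()[1]
    return f"{prefix}{iso_week:02d}"
```

The key does not include the year, so the same key recurs in the same week of every year.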

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index df667eb2..451c1ad0 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -2,23 +2,24 @@

# See https://pre-commit.com/hooks.html for more hooks

repos:

- repo: https://github.com/pre-commit/pre-commit-hooks

- rev: v3.2.0

+ rev: v4.5.0

hooks:

- id: trailing-whitespace

- id: end-of-file-fixer

- id: check-yaml

- id: check-added-large-files

+ - id: mixed-line-ending

+ - repo: https://github.com/charliermarsh/ruff-pre-commit

+ # Ruff version.

+ rev: "v0.1.1"

+ hooks:

+ - id: ruff

- repo: https://github.com/psf/black

- rev: 23.3.0

+ rev: 23.10.0

hooks:

- id: black

# It is recommended to specify the latest version of Python

# supported by your project here, or alternatively use

# pre-commit's default_language_version, see

# https://pre-commit.com/#top_level-default_language_version

- # language_version: python3.9

- - repo: https://github.com/charliermarsh/ruff-pre-commit

- # Ruff version.

- rev: "v0.0.287"

- hooks:

- - id: ruff

+ # language_version: python3.10

diff --git a/README.md b/README.md

index d81621f1..624b9924 100644

--- a/README.md

+++ b/README.md

@@ -3,7 +3,8 @@

*The friendly earthquake detector*

[](https://github.com/miili/lassie-v2/actions/workflows/build.yaml)

-

+[](https://pyrocko.github.io/lassie-v2/)

+

[](https://pre-commit.com/)

@@ -26,12 +27,14 @@ Key features are of the earthquake detection and localisation framework are:

Lassie is built on top of [Pyrocko](https://pyrocko.org).

+For more information check out the documentation at https://pyrocko.github.io/lassie-v2/.

+

## Installation

+Simple installation from GitHub.

+

```sh

-git clone https://github.com/pyrocko/lassie-v2

-cd lassie-v2

-pip3 install .

+pip install git+https://github.com/pyrocko/lassie-v2

```

## Project Initialisation

@@ -47,7 +50,7 @@ Edit the `my-project.json`

Start the earthquake detection with

```sh

-lassie run search.json

+lassie search search.json

```

## Packaging

diff --git a/docs/components/feature_extraction.md b/docs/components/feature_extraction.md

new file mode 100644

index 00000000..e69de29b

diff --git a/docs/components/image_function.md b/docs/components/image_function.md

new file mode 100644

index 00000000..10582bf3

--- /dev/null

+++ b/docs/components/image_function.md

@@ -0,0 +1,15 @@

+# Image Function

+

+For image functions, this version of Lassie relies heavily on machine-learning pickers provided by [SeisBench](https://github.com/seisbench/seisbench).

+

+## PhaseNet Image Function

+

+!!! abstract "Citation PhaseNet"

+ *Zhu, Weiqiang, and Gregory C. Beroza. "PhaseNet: A Deep-Neural-Network-Based Seismic Arrival Time Picking Method." arXiv preprint arXiv:1803.03211 (2018).*

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.images.phase_net import PhaseNet

+

+print(generate_docs(PhaseNet()))

+```

diff --git a/docs/components/octree.md b/docs/components/octree.md

new file mode 100644

index 00000000..b0ab2334

--- /dev/null

+++ b/docs/components/octree.md

@@ -0,0 +1,10 @@

+# Octree

+

+Lassie searches a 3D volume for sources of seismic energy. It uses an octree structure that is iteratively refined where energy is detected, focusing the search on the source location. This speeds up the search and improves the resolution of the localisations.

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.octree import Octree

+

+print(generate_docs(Octree()))

+```
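The iterative refinement described in `octree.md` can be sketched as follows. This is a simplified illustration under stated assumptions, not Lassie's `Octree` implementation. Nodes are cubes on an east/north/depth grid. Every node whose semblance reaches a fraction (`split_threshold`) of the current peak is split into eight children, and splitting stops at `size_limit`. The names loosely mirror the `size_initial`, `size_limit` and `node_split_threshold` settings of the example configuration, but their exact semantics in Lassie are assumed here.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Node:
    east: float  # node centre, east offset [m]
    north: float  # node centre, north offset [m]
    depth: float  # node centre, depth [m], positive down
    size: float  # edge length of the cubic node [m]

    def split(self) -> list[Node]:
        """Split the node into its eight child octants."""
        half, quarter = self.size / 2, self.size / 4
        return [
            Node(
                self.east + de * quarter,
                self.north + dn * quarter,
                self.depth + dd * quarter,
                half,
            )
            for de in (-1, 1)
            for dn in (-1, 1)
            for dd in (-1, 1)
        ]


def initial_nodes(
    east_bounds: tuple[float, float],
    north_bounds: tuple[float, float],
    depth_bounds: tuple[float, float],
    size: float,
) -> list[Node]:
    """Regular grid of cubic nodes with edge length `size` covering the bounds."""

    def centres(lower: float, upper: float) -> list[float]:
        count = int((upper - lower) // size)
        return [lower + size * (i + 0.5) for i in range(count)]

    return [
        Node(e, n, d, size)
        for e in centres(*east_bounds)
        for n in centres(*north_bounds)
        for d in centres(*depth_bounds)
    ]


def refine(
    nodes: list[Node],
    semblance: Callable[[Node], float],
    size_limit: float,
    split_threshold: float = 0.9,
) -> list[Node]:
    """Split nodes whose semblance reaches `split_threshold` x the peak value.

    Stops when no qualifying node can be halved without dropping below
    `size_limit`. Assumes non-negative semblance values.
    """
    while True:
        values = [semblance(node) for node in nodes]
        peak = max(values)
        to_split = {
            node
            for node, value in zip(nodes, values)
            if value >= split_threshold * peak and node.size / 2 >= size_limit
        }
        if not to_split:
            return nodes
        # Replace every qualifying node by its eight children.
        nodes = [node for node in nodes if node not in to_split] + [
            child for node in to_split for child in node.split()
        ]
```

With a synthetic semblance such as `1 / (1 + distance_to_source)`, `refine` only subdivides the nodes around the source down to `size_limit`. The rest of the volume keeps its initial resolution, and the total volume covered by the nodes is unchanged.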

diff --git a/docs/components/ray_tracer.md b/docs/components/ray_tracer.md

new file mode 100644

index 00000000..3fc3ab09

--- /dev/null

+++ b/docs/components/ray_tracer.md

@@ -0,0 +1,56 @@

+# Ray Tracers

+

+The calculation of seismic travel times is a cornerstone for the migration and stacking approach. Lassie supports different ray tracers for travel time calculation, which can be adapted for different geological settings.

+

+## Constant Velocity

+

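+The constant velocity model is trivial: the travel time of a phase is the straight-line distance $d$ divided by the phase velocity, e.g. for the P phase: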

+

+$$

+t_{P} = \frac{d}{v_P}

+$$

+

+This tracer is intended for simple use cases and for cross-checking in tests.

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.tracers.constant_velocity import ConstantVelocityTracer

+

+print(generate_docs(ConstantVelocityTracer()))

+```

+

+## 1D Layered Model

+

+Calculation of travel times in 1D layered media is based on the [Pyrocko Cake](https://pyrocko.org/docs/current/apps/cake/manual.html#command-line-examples) ray tracer.

+

+

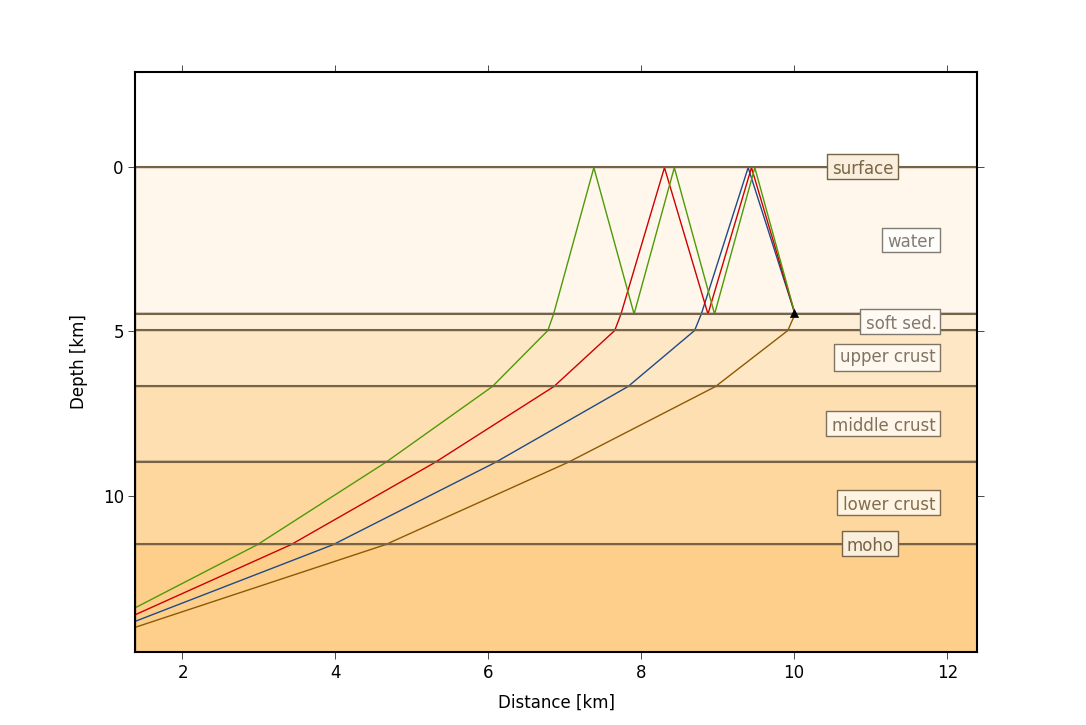

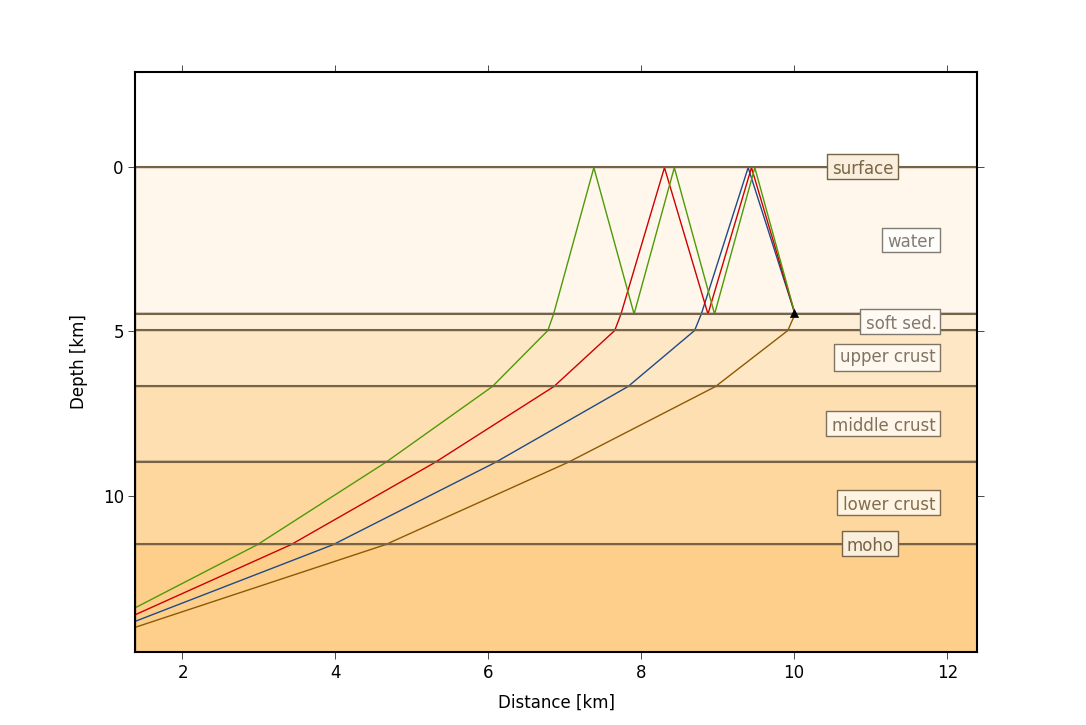

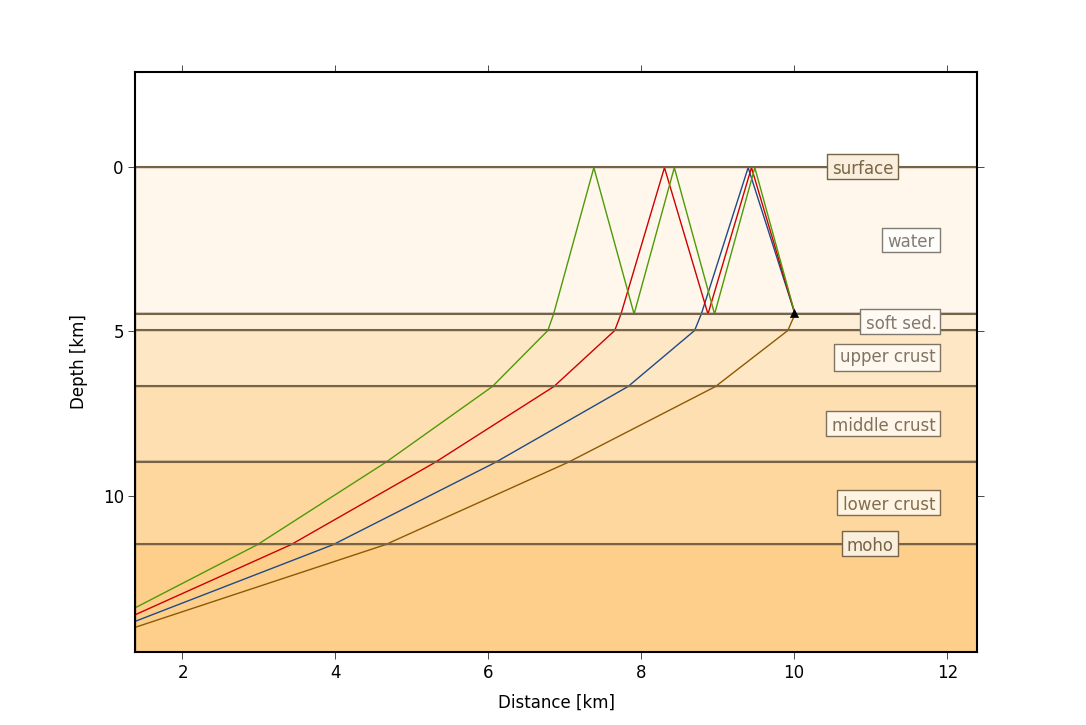

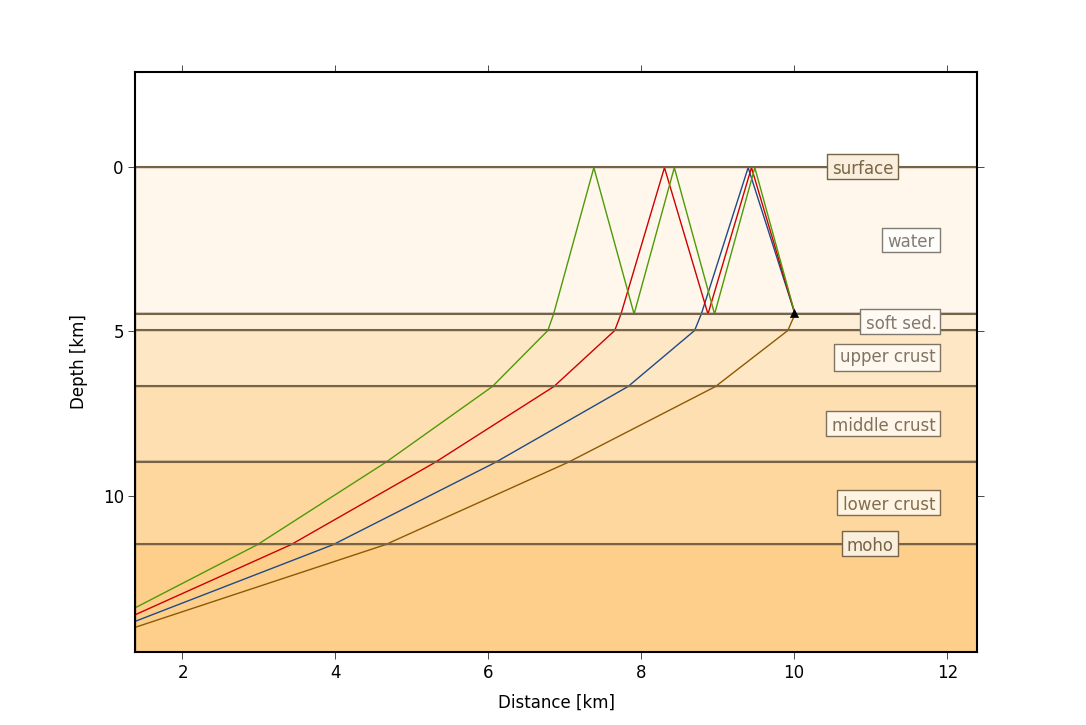

+*Pyrocko Cake 1D ray tracer for travel time calculation in 1D layered media*

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.tracers.cake import CakeTracer

+

+print(generate_docs(CakeTracer(), exclude={'earthmodel': {'raw_file_data'}}))

+```

+

+## 3D Fast Marching

+

+We implement the fast marching method for calculating first arrivals of waves in 3D volumes. Currently three different 3D velocity models are supported:

+

+* [x] Import [NonLinLoc](http://alomax.free.fr/nlloc/) 3D velocity model

+* [x] 1D layered model 🥞

+* [x] Constant velocity, mainly for testing purposes 🥼

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.tracers.fast_marching import FastMarchingTracer

+

+print(generate_docs(FastMarchingTracer()))

+```

+

+### Visualizing 3D Models

+

+For quality control, all 3D velocity models are exported to the `vtk/` folder as `.vti` files. Use [ParaView](https://www.paraview.org/) to inspect and explore the velocity models.

+

+

+*Seismic velocity model of the Utah FORGE testbed site, visualized in ParaView.*
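The constant-velocity relation $t_P = d / v_P$ from `ray_tracer.md` can be evaluated for many nodes and stations at once with NumPy broadcasting. The sketch below assumes straight rays and Cartesian (east, north, depth) coordinates in metres. The function name is hypothetical, and this is not the code of Lassie's `ConstantVelocityTracer`.

```python
import numpy as np


def constant_velocity_traveltimes(
    nodes: np.ndarray, stations: np.ndarray, velocity: float
) -> np.ndarray:
    """Travel times t = d / v for straight rays in a homogeneous medium.

    nodes:    (n_nodes, 3) array of (east, north, depth) in metres
    stations: (n_stations, 3) array of (east, north, depth) in metres
    velocity: wave speed in m/s, e.g. 5000.0 as in the example configuration
    Returns an (n_nodes, n_stations) array of travel times in seconds.
    """
    # (n_nodes, 1, 3) - (1, n_stations, 3) broadcasts to (n_nodes, n_stations, 3)
    offsets = nodes[:, np.newaxis, :] - stations[np.newaxis, :, :]
    distances = np.linalg.norm(offsets, axis=-1)
    return distances / velocity
```

For a node 5 km directly below a station and a velocity of 5000 m/s, the travel time is 1 s.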

diff --git a/docs/components/seismic_data.md b/docs/components/seismic_data.md

new file mode 100644

index 00000000..8ecb3c11

--- /dev/null

+++ b/docs/components/seismic_data.md

@@ -0,0 +1,32 @@

+# Seismic Data

+

+## Waveform Data

+

+Seismic waveform data can be provided in MiniSEED or any other format supported by Pyrocko.

+

+Organize your data in an [SDS structure](https://www.seiscomp.de/doc/base/concepts/waveformarchives.html) or provide a single MiniSEED file.

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.waveforms import PyrockoSquirrel

+

+print(generate_docs(PyrockoSquirrel()))

+```

+

+## Metadata

+

+Metadata is required primarily for station locations and codes.

+

+Supported data formats are:

+

+* [x] [StationXML](https://www.fdsn.org/xml/station/)

+* [x] [Pyrocko Station YAML](https://pyrocko.org/docs/current/formats/yaml.html)

+

+Metadata does not need to include response information for pure detection and localisation. If local magnitudes $M_L$ are extracted, response information is required.

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.models.station import Stations

+

+print(generate_docs(Stations()))

+```
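The SDS layout linked in `seismic_data.md` stores one file per channel and day under `<SDS root>/YEAR/NET/STA/CHAN.TYPE/NET.STA.LOC.CHAN.TYPE.YEAR.DAY`. Here `TYPE` is `D` for waveform data and `DAY` is the zero-padded day of the year. The helper below is hypothetical and not part of Lassie or Pyrocko. It builds the expected relative path, which can be useful when arranging an archive.

```python
from datetime import date
from pathlib import PurePosixPath


def sds_path(
    network: str,
    station: str,
    location: str,
    channel: str,
    day: date,
    data_type: str = "D",
) -> PurePosixPath:
    """Relative SDS path of the file holding one channel for one day."""
    year = f"{day.year:04d}"
    doy = f"{day.timetuple().tm_yday:03d}"  # day of year, 001-366
    filename = ".".join((network, station, location, channel, data_type, year, doy))
    return PurePosixPath(year, network, station, f"{channel}.{data_type}", filename)
```

An empty location code yields two consecutive dots in the file name, e.g. `GE.APE..BHZ.D.2023.005`.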

diff --git a/docs/components/station_corrections.md b/docs/components/station_corrections.md

new file mode 100644

index 00000000..a35818c6

--- /dev/null

+++ b/docs/components/station_corrections.md

@@ -0,0 +1,10 @@

+# Station Corrections

+

+Station corrections can be extracted from previous runs to refine the localisation accuracy. The corrections can also improve the semblance and help find more events in a dataset.

+

+```python exec='on'

+from lassie.utils import generate_docs

+from lassie.station_corrections import StationCorrections

+

+print(generate_docs(StationCorrections()))

+```
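Conceptually, a station delay can be estimated from earlier runs as a robust average of the residuals between picked and modelled arrival times at that station. The corrected travel time then adds this delay. The sketch below is a hypothetical illustration of that idea using the median. It is not the method implemented in `StationCorrections`, and the `min_picks` cut-off is an assumption.

```python
from collections import defaultdict
from statistics import median


def station_delays(
    residuals: list[tuple[str, float, float]], min_picks: int = 3
) -> dict[str, float]:
    """Median (picked - modelled) residual per station, in seconds.

    residuals: (station code, picked arrival time, modelled arrival time) tuples.
    Stations with fewer than `min_picks` residuals get no correction.
    """
    per_station: dict[str, list[float]] = defaultdict(list)
    for station, picked, modelled in residuals:
        per_station[station].append(picked - modelled)
    return {
        station: median(values)
        for station, values in per_station.items()
        if len(values) >= min_picks
    }
```

The median keeps single outlier picks, e.g. a mispicked S phase, from dominating a station's delay.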

diff --git a/docs/getting_started.md b/docs/getting_started.md

new file mode 100644

index 00000000..ec331764

--- /dev/null

+++ b/docs/getting_started.md

@@ -0,0 +1,106 @@

+# Getting Started

+

+## Installation

+

+Installation is straightforward:

+

+```sh title="From GitHub"

+pip install git+https://github.com/pyrocko/lassie-v2

+```

+

+## Running Lassie

+

+The main entry point is the `lassie` executable. Its command line interface (CLI) and a JSON config file are all that is needed to run the program.

+

+```bash exec='on' result='ansi' source='above'

+lassie -h

+```

+

+## Initializing a New Project

+

+Once installed, run the `lassie` executable to initialize a new project.

+

+```sh title="Initialize new Project"

+lassie init my-project

+```

+

+Check out the `search.json` config file and add your waveform data and velocity models.

+

+??? abstract "Minimal Configuration Example"

+ Here is a minimal JSON configuration for Lassie

+ ```json

+ {

+ "project_dir": ".",

+ "stations": {

+ "station_xmls": [],

+ "pyrocko_station_yamls": ["search/pyrocko-stations.yaml"]

+ },

+ "data_provider": {

+ "provider": "PyrockoSquirrel",

+ "environment": ".",

+ "waveform_dirs": ["data/"]

+ },

+ "octree": {

+ "location": {

+ "lat": 0.0,

+ "lon": 0.0,

+ "east_shift": 0.0,

+ "north_shift": 0.0,

+ "elevation": 0.0,

+ "depth": 0.0

+ },

+ "size_initial": 2000.0,

+ "size_limit": 500.0,

+ "east_bounds": [

+ -10000.0,

+ 10000.0

+ ],

+ "north_bounds": [

+ -10000.0,

+ 10000.0

+ ],

+ "depth_bounds": [

+ 0.0,

+ 20000.0

+ ],

+ "absorbing_boundary": 1000.0

+ },

+ "image_functions": [

+ {

+ "image": "PhaseNet",

+ "model": "ethz",

+ "torch_use_cuda": false,

+ "phase_map": {

+ "P": "constant:P",

+ "S": "constant:S"

+ }

+ }

+ ],

+ "ray_tracers": [

+ {

+ "tracer": "ConstantVelocityTracer",

+ "phase": "constant:P",

+ "velocity": 5000.0

+ }

+ ],

+ "station_corrections": {},

+ "event_features": [],

+ "sampling_rate": 100,

+ "detection_threshold": 0.05,

+ "detection_blinding": "PT2S",

+ "node_split_threshold": 0.9,

+ "window_length": "PT300S",

+ "n_threads_parstack": 0,

+ "n_threads_argmax": 4

+ }

+ ```

+

+For more details on the individual components, head over to the [module documentation](components/seismic_data.md).

+

+## Starting the Search

+

+Once you are happy with the configuration, start the search with the `lassie` CLI.

+

+```sh title="Start earthquake detection"

+lassie search search.json

+```
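The config file is parsed as JSON, which allows neither trailing commas nor comments. A quick pre-flight check with Python's standard `json` module can point at the offending line before a long search is started. The helper below is a hypothetical convenience, not part of the `lassie` CLI.

```python
from __future__ import annotations

import json
from pathlib import Path


def check_config(path: Path) -> str | None:
    """Return None if `path` holds valid JSON, else a short error description."""
    try:
        json.loads(path.read_text())
    except json.JSONDecodeError as exc:
        return f"{path}: line {exc.lineno}, column {exc.colno}: {exc.msg}"
    return None
```

For example, `check_config(Path("search.json"))` returns `None` for a well-formed file. For a file with a trailing comma, it returns a message naming the line and column.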

diff --git a/docs/images/FORGE-velocity-model.webp b/docs/images/FORGE-velocity-model.webp

new file mode 100644

index 00000000..399ef062

Binary files /dev/null and b/docs/images/FORGE-velocity-model.webp differ

diff --git a/docs/images/logo.webp b/docs/images/logo.webp

new file mode 100644

index 00000000..cffad92e

Binary files /dev/null and b/docs/images/logo.webp differ

diff --git a/docs/images/qgis-loaded.webp b/docs/images/qgis-loaded.webp

new file mode 100644

index 00000000..23206cba

Binary files /dev/null and b/docs/images/qgis-loaded.webp differ

diff --git a/docs/images/reykjanes-demo.webp b/docs/images/reykjanes-demo.webp

new file mode 100644

index 00000000..8bdb0e7a

Binary files /dev/null and b/docs/images/reykjanes-demo.webp differ

diff --git a/docs/images/squirrel-reykjanes.webp b/docs/images/squirrel-reykjanes.webp

new file mode 100644

index 00000000..e59c6b53

Binary files /dev/null and b/docs/images/squirrel-reykjanes.webp differ

diff --git a/docs/index.md b/docs/index.md

index 000ea345..97e69af2 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -1,17 +1,36 @@

-# Welcome to MkDocs

+# Welcome to Lassie 🐕🦺

-For full documentation visit [mkdocs.org](https://www.mkdocs.org).

+Lassie is an earthquake detection and localisation framework. It combines modern **machine learning phase detection and robust migration and stacking techniques**.

-## Commands

+The detector leverages [Pyrocko](https://pyrocko.org) and [SeisBench](https://github.com/seisbench/seisbench); it is highly performant and can efficiently search massive data sets for seismic activity.

-* `mkdocs new [dir-name]` - Create a new project.

-* `mkdocs serve` - Start the live-reloading docs server.

-* `mkdocs build` - Build the documentation site.

-* `mkdocs -h` - Print help message and exit.

+!!! abstract "Citation"

+ TBD

-## Project layout

+

- mkdocs.yml # The configuration file.

- docs/

- index.md # The documentation homepage.

- ... # Other markdown pages, images and other files.

+*Seismic swarm activity on the Reykjanes Peninsula, Iceland, during the 2020 unrest. More than 15,000 earthquakes were detected, outlining a dike intrusion preceding the 2021 Fagradalsfjall eruption. Visualized in [Pyrocko Sparrow](https://pyrocko.org).*

+

+## Features

+

+* [x] Earthquake phase detection using machine-learning pickers from [SeisBench](https://github.com/seisbench/seisbench)

+* [x] Octree localisation approach for efficient and accurate search

+* [x] Different velocity models:

+ * [x] Constant velocity

+ * [x] 1D Layered velocity model

+ * [x] 3D fast-marching velocity model (NonLinLoc compatible)

+* [x] Extraction of earthquake event features:

+ * [x] Local magnitudes

+ * [x] Ground motion attributes

+* [x] Automatic extraction of modelled and picked travel times

+* [x] Calculation and application of station corrections / station delay times

+* [ ] Real-time analytics on streaming data (e.g. SeedLink)

+

+

+[Get Started!](getting_started.md){ .md-button }

+

+## Built with

+

+{ width="100" }

+{ width="400" padding-right="40" }

+{ width="100" }

diff --git a/docs/theme/announce.html b/docs/theme/announce.html

new file mode 100644

index 00000000..594d6af7

--- /dev/null

+++ b/docs/theme/announce.html

@@ -0,0 +1 @@

+Lassie is in Beta 🧫 Please handle with care

diff --git a/docs/theme/main.html b/docs/theme/main.html

new file mode 100644

index 00000000..1f59cb80

--- /dev/null

+++ b/docs/theme/main.html

@@ -0,0 +1,6 @@

+{% extends "base.html" %}

+

+{% block announce %}

+ {% include 'announce.html' ignore missing %}

+{% endblock %}

+

diff --git a/docs/visualizing_results.md b/docs/visualizing_results.md

new file mode 100644

index 00000000..850c9bb2

--- /dev/null

+++ b/docs/visualizing_results.md

@@ -0,0 +1,15 @@

+# Visualizing Detections

+

+The event detections are exported in Lassie-native JSON, Pyrocko YAML format and as CSV files.

+

+## Pyrocko Sparrow

+

+For large data sets, use [Pyrocko Sparrow](https://pyrocko.org) to visualise seismic event detections in 3D. Seismic stations and many other features from the Pyrocko ecosystem can also be integrated into the view.

+

+

+

+## QGIS

+

+[QGIS](https://www.qgis.org/) can import the `.csv` files to explore the data interactively. Detections can be styled by, e.g., the detection semblance or the calculated magnitude.

+

+
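The CSV export written by `export_csv` has the header `lat,lon,depth,semblance,time,distance_border`. Besides QGIS, the file can be filtered with Python's standard `csv` module, e.g. to keep only high-semblance detections before loading them into a GIS. The sketch below is minimal, and the threshold is an arbitrary example value.

```python
from __future__ import annotations

import csv
from typing import TextIO


def strong_detections(
    csv_file: TextIO, min_semblance: float
) -> list[dict[str, float | str]]:
    """Return detections with semblance >= min_semblance from a Lassie CSV export.

    Expects the header `lat,lon,depth,semblance,time,distance_border`;
    `skipinitialspace` tolerates a blank after the separators.
    """
    reader = csv.DictReader(csv_file, skipinitialspace=True)
    selected: list[dict[str, float | str]] = []
    for row in reader:
        semblance = float(row["semblance"])
        if semblance >= min_semblance:
            selected.append(
                {
                    "lat": float(row["lat"]),
                    "lon": float(row["lon"]),
                    "depth": float(row["depth"]),
                    "semblance": semblance,
                    "time": row["time"],  # kept as the exported ISO-like string
                }
            )
    return selected
```

Usage, with `rundir` being the run directory: `with open(rundir / "csv" / "detections.csv", newline="") as f: rows = strong_detections(f, 0.2)`.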

diff --git a/lassie/apps/lassie.py b/lassie/apps/lassie.py

index ae5328fb..49e25dd9 100644

--- a/lassie/apps/lassie.py

+++ b/lassie/apps/lassie.py

@@ -2,6 +2,7 @@

import argparse

import asyncio

+import json

import logging

import shutil

from pathlib import Path

@@ -10,10 +11,6 @@

from pkg_resources import get_distribution

from lassie.console import console

-from lassie.models import Stations

-from lassie.search import Search

-from lassie.server import WebServer

-from lassie.station_corrections import StationCorrections

from lassie.utils import CACHE_DIR, setup_rich_logging

nest_asyncio.apply()

@@ -21,17 +18,18 @@

logger = logging.getLogger(__name__)

-def main() -> None:

+def get_parser() -> argparse.ArgumentParser:

parser = argparse.ArgumentParser(

prog="lassie",

- description="The friendly earthquake detector - V2",

+ description="Lassie - The friendly earthquake detector 🐕",

)

parser.add_argument(

"--verbose",

"-v",

action="count",

default=0,

- help="increase verbosity of the log messages, default level is INFO",

+ help="increase verbosity of the log messages, repeat to increase. "

+ "Default level is INFO",

)

parser.add_argument(

"--version",

@@ -40,11 +38,28 @@ def main() -> None:

help="show version and exit",

)

- subparsers = parser.add_subparsers(title="commands", required=True, dest="command")

+ subparsers = parser.add_subparsers(

+ title="commands",

+ required=True,

+ dest="command",

+ description="Available commands to run Lassie. Get command help with "

+ "`lassie <command> --help`.",

+ )

+

+ init_project = subparsers.add_parser(

+ "init",

+ help="initialize a new Lassie project",

+ description="initialize a new project with a default configuration file.",

+ )

+ init_project.add_argument(

+ "folder",

+ type=Path,

+ help="folder to initialize project in",

+ )

run = subparsers.add_parser(

- "run",

- help="start a new detection run",

+ "search",

+ help="start a search",

description="detect, localize and characterize earthquakes in a dataset",

)

run.add_argument("config", type=Path, help="path to config file")

@@ -62,14 +77,6 @@ def main() -> None:

)

continue_run.add_argument("rundir", type=Path, help="existing runding to continue")

- init_project = subparsers.add_parser(

- "init",

- help="initialize a new Lassie project",

- )

- init_project.add_argument(

- "folder", type=Path, help="folder to initialize project in"

- )

-

features = subparsers.add_parser(

"feature-extraction",

help="extract features from an existing run",

@@ -100,15 +107,29 @@ def main() -> None:

subparsers.add_parser(

"clear-cache",

help="clear the cach directory",

+ description="clear all data in the cache directory",

)

dump_schemas = subparsers.add_parser(

"dump-schemas",

help="dump data models to json-schema (development)",

+ description="dump data models to json-schema, "

+ "this is for development purposes only",

)

dump_schemas.add_argument("folder", type=Path, help="folder to dump schemas to")

+ return parser

+

+

+def main() -> None:

+ parser = get_parser()

args = parser.parse_args()

+

+ from lassie.models import Stations

+ from lassie.search import Search

+ from lassie.server import WebServer

+ from lassie.station_corrections import StationCorrections

+

setup_rich_logging(level=logging.INFO - args.verbose * 10)

if args.command == "init":

@@ -120,11 +141,7 @@ def main() -> None:

pyrocko_stations = folder / "pyrocko-stations.yaml"

pyrocko_stations.touch()

- config = Search(

- stations=Stations(

- pyrocko_station_yamls=[pyrocko_stations.relative_to(folder)]

- )

- )

+ config = Search(stations=Stations(pyrocko_station_yamls=[pyrocko_stations]))

config_file = folder / f"{folder.name}.json"

config_file.write_text(config.model_dump_json(by_alias=False, indent=2))

@@ -132,7 +149,7 @@ def main() -> None:

logger.info("initialized new project in folder %s", folder)

- logger.info("start detection with: lassie run %s", config_file.name)

+ logger.info("start detection with: lassie search %s", config_file.name)

- elif args.command == "run":

+ elif args.command == "search":

search = Search.from_config(args.config)

webserver = WebServer(search)

@@ -174,7 +191,9 @@ async def extract() -> None:

station_corrections = StationCorrections(rundir=rundir)

if args.plot:

station_corrections.save_plots(rundir / "station_corrections")

- station_corrections.save_csv(filename=rundir / "station_corrections_stats.csv")

+ station_corrections.export_csv(

+ filename=rundir / "station_corrections_stats.csv"

+ )

elif args.command == "serve":

search = Search.load_rundir(args.rundir)

@@ -196,10 +215,12 @@ async def extract() -> None:

file = args.folder / "search.schema.json"

print(f"writing JSON schemas to {args.folder}")

- file.write_text(Search.model_json_schema(indent=2))

+ file.write_text(json.dumps(Search.model_json_schema(), indent=2))

file = args.folder / "detections.schema.json"

- file.write_text(EventDetections.model_json_schema(indent=2))

+ file.write_text(json.dumps(EventDetections.model_json_schema(), indent=2))

+ else:

+ parser.error(f"unknown command: {args.command}")

if __name__ == "__main__":
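This change splits `get_parser()` out of `main()` and moves the heavy `lassie.*` imports after `parse_args()`. Presumably, the aim is to keep `lassie -h` and usage errors fast, since they no longer import the search stack. The pattern is reduced below to a self-contained sketch. It keeps only two of the subcommands and has no Lassie imports. The return strings are placeholders for the real command handlers.

```python
from __future__ import annotations

import argparse
from pathlib import Path


def get_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="lassie")
    parser.add_argument("--verbose", "-v", action="count", default=0)
    subparsers = parser.add_subparsers(title="commands", required=True, dest="command")

    init_project = subparsers.add_parser("init", help="initialize a new Lassie project")
    init_project.add_argument("folder", type=Path)

    search = subparsers.add_parser("search", help="start a search")
    search.add_argument("config", type=Path)
    return parser


def main(argv: list[str] | None = None) -> str:
    parser = get_parser()
    args = parser.parse_args(argv)
    # Heavy imports (e.g. `from lassie.search import Search`) would go here,
    # after argument parsing, so `--help` and usage errors never pay for them.
    if args.command == "init":
        return f"init {args.folder}"
    elif args.command == "search":
        return f"search {args.config}"
    parser.error(f"unknown command: {args.command}")
```

With `required=True`, argparse already rejects unknown subcommands. The final `parser.error` therefore only guards against a command that is registered but not handled.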

diff --git a/lassie/features/local_magnitude.py b/lassie/features/local_magnitude.py

index 91087667..230078c2 100644

--- a/lassie/features/local_magnitude.py

+++ b/lassie/features/local_magnitude.py

@@ -251,7 +251,6 @@ async def add_features(self, squirrel: Squirrel, event: EventDetection) -> None:

std=float(np.std(magnitudes)),

n_stations=len(magnitudes),

)

- print(event.time, local_magnitude)

event.magnitude = local_magnitude.median

event.magnitude_type = "local"

event.features.add_feature(local_magnitude)

diff --git a/lassie/images/__init__.py b/lassie/images/__init__.py

index 5773511f..24db78a9 100644

--- a/lassie/images/__init__.py

+++ b/lassie/images/__init__.py

@@ -9,6 +9,7 @@

from lassie.images.base import ImageFunction, PickedArrival

from lassie.images.phase_net import PhaseNet, PhaseNetPick

+from lassie.utils import PhaseDescription

if TYPE_CHECKING:

from datetime import timedelta

@@ -51,7 +52,7 @@ async def process_traces(self, traces: list[Trace]) -> WaveformImages:

return WaveformImages(root=images)

- def get_phases(self) -> tuple[str, ...]:

+ def get_phases(self) -> tuple[PhaseDescription, ...]:

"""Get all phases that are available in the image functions.

Returns:

@@ -85,6 +86,15 @@ def downsample(self, sampling_rate: float, max_normalize: bool = False) -> None:

for image in self:

image.downsample(sampling_rate, max_normalize)

+ def apply_exponent(self, exponent: float) -> None:

+ """Apply exponent to all images.

+

+ Args:

+ exponent (float): Exponent to apply.

+ """

+ for image in self:

+ image.apply_exponent(exponent)

+

def set_stations(self, stations: Stations) -> None:

"""Set the images stations."""

for image in self:

diff --git a/lassie/images/base.py b/lassie/images/base.py

index cc083eb4..5d539e59 100644

--- a/lassie/images/base.py

+++ b/lassie/images/base.py

@@ -35,7 +35,7 @@ def blinding(self) -> timedelta:

"""Blinding duration for the image function. Added to padded waveforms."""

raise NotImplementedError("must be implemented by subclass")

- def get_provided_phases(self) -> tuple[str, ...]:

+ def get_provided_phases(self) -> tuple[PhaseDescription, ...]:

...

@@ -105,6 +105,15 @@ def get_offsets(self, reference: datetime) -> np.ndarray:

np.int32

)

+ def apply_exponent(self, exponent: float) -> None:

+ """Apply exponent to all traces.

+

+ Args:

+ exponent (float): Exponent to apply.

+ """

+ for tr in self.traces:

+ tr.ydata **= exponent

+

def search_phase_arrival(

self,

trace_idx: int,
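`apply_exponent` raises every sample of the image traces to a power in place (`tr.ydata **= exponent`). For characteristic functions in [0, 1], an exponent above 1 suppresses low values more strongly than high ones. This sharpens peaks relative to the background before stacking. The in-place form needs floating-point data, because NumPy refuses to cast the float result back into an integer array. A NumPy illustration, independent of Lassie:

```python
import numpy as np


def apply_exponent(ydata: np.ndarray, exponent: float) -> None:
    """In-place power, as in `tr.ydata **= exponent`; needs a float array."""
    ydata **= exponent


probabilities = np.array([0.1, 0.5, 1.0])  # background, weak pick, strong pick
apply_exponent(probabilities, 2.0)
# probabilities is now approximately [0.01, 0.25, 1.0]: relative to the strong pick,
# the weak pick drops from 50 % to 25 % and the background from 10 % to 1 %.
```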

diff --git a/lassie/images/phase_net.py b/lassie/images/phase_net.py

index 670d8bfe..602f4b11 100644

--- a/lassie/images/phase_net.py

+++ b/lassie/images/phase_net.py

@@ -5,7 +5,7 @@

from typing import TYPE_CHECKING, Any, Literal

from obspy import Stream

-from pydantic import PositiveFloat, PositiveInt, PrivateAttr, conint

+from pydantic import Field, PositiveFloat, PositiveInt, PrivateAttr

from pyrocko import obspy_compat

from seisbench import logger

@@ -82,21 +82,60 @@ def search_phase_arrival(

class PhaseNet(ImageFunction):

+ """PhaseNet image function. For more details see SeisBench documentation."""

+

image: Literal["PhaseNet"] = "PhaseNet"

- model: ModelName = "ethz"

- window_overlap_samples: conint(ge=1000, le=3000) = 2000

- torch_use_cuda: bool = False

- torch_cpu_threads: PositiveInt = 4

- batch_size: conint(ge=64) = 64

- stack_method: StackMethod = "avg"

- phase_map: dict[PhaseName, str] = {

- "P": "constant:P",

- "S": "constant:S",

- }

- weights: dict[PhaseName, PositiveFloat] = {

- "P": 1.0,

- "S": 1.0,

- }

+ model: ModelName = Field(

+ default="ethz",

+ description="SeisBench pre-trained PhaseNet model to use. "

+ "Choose from `ethz`, `geofon`, `instance`, `iquique`, `lendb`, `neic`, `obs`,"

+ " `original`, `scedc`, `stead`."

+ " For more details see the SeisBench documentation.",

+ )

+ window_overlap_samples: int = Field(

+ default=2000,

+ ge=1000,

+ le=3000,

+ description="Window overlap in samples.",

+ )

+ torch_use_cuda: bool = Field(

+ default=False,

+ description="Use CUDA for inference.",

+ )

+ torch_cpu_threads: PositiveInt = Field(

+ default=4,

+ description="Number of CPU threads to use if only CPU is used.",

+ )

+ batch_size: int = Field(

+ default=64,

+ ge=64,

+ description="Batch size for inference, larger values can improve performance.",

+ )

+ stack_method: StackMethod = Field(

+ default="avg",

+ description="Method to stack the overlapping blocks internally. "

+ "Choose from `avg` and `max`.",

+ )

+ upscale_input: PositiveInt = Field(

+ default=1,

+ description="Upscale input by factor. The sampling rate is relabelled, "

+ "e.g. 100 Hz data is seen as 50 Hz (factor: `2`), stretching the signal in"

+ " time. Can be useful for high-frequency earthquake signals.",

+ )

+ phase_map: dict[PhaseName, str] = Field(

+ default={

+ "P": "constant:P",

+ "S": "constant:S",

+ },

+ description="Phase mapping from SeisBench PhaseNet to Lassie phases.",

+ )

+ weights: dict[PhaseName, PositiveFloat] = Field(

+ default={

+ "P": 1.0,

+ "S": 1.0,

+ },

+ description="Weights for each phase.",

+ )

_phase_net: PhaseNetSeisBench = PrivateAttr(None)

@@ -118,6 +157,11 @@ def blinding(self) -> timedelta:

@alog_call

async def process_traces(self, traces: list[Trace]) -> list[PhaseNetImage]:

stream = Stream(tr.to_obspy_trace() for tr in traces)

+ if self.upscale_input > 1:

+ scale = self.upscale_input

+ for tr in stream:

+ tr.stats.sampling_rate = tr.stats.sampling_rate / scale

+

annotations: Stream = self._phase_net.annotate(

stream,

overlap=self.window_overlap_samples,

@@ -125,6 +169,11 @@ async def process_traces(self, traces: list[Trace]) -> list[PhaseNetImage]:

# parallelism=self.seisbench_subprocesses,

)

+ if self.upscale_input > 1:

+ scale = self.upscale_input

+ for tr in annotations:

+ tr.stats.sampling_rate = tr.stats.sampling_rate * scale

+

annotated_traces: list[Trace] = [

tr.to_pyrocko_trace()

for tr in annotations
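`upscale_input` does not resample the data. It only divides each trace's `sampling_rate` by the factor before `annotate()` and multiplies the annotation sampling rate back afterwards. The picker therefore sees the same samples on a time axis stretched by the factor, so the apparent frequency of the signal drops by the same factor. The arithmetic is sketched below in plain Python, without SeisBench. The function name is hypothetical.

```python
from __future__ import annotations


def relabelled_view(
    sampling_rate: float, scale: int, signal_hz: float, duration_s: float
) -> tuple[float, float, float]:
    """What a picker sees after the trace's sampling rate is divided by `scale`.

    The samples are untouched; only the time axis is stretched by `scale`,
    so the apparent signal frequency drops by the same factor.
    Returns (relabelled sampling rate, apparent duration, apparent frequency).
    """
    n_samples = round(duration_s * sampling_rate)
    relabelled_rate = sampling_rate / scale
    apparent_duration = n_samples / relabelled_rate
    n_cycles = signal_hz * duration_s  # oscillations contained in the trace
    return relabelled_rate, apparent_duration, n_cycles / apparent_duration


print(relabelled_view(100.0, 2, 40.0, 10.0))  # (50.0, 20.0, 20.0)
```

Multiplying the annotation sampling rate back by the same factor undoes the stretch, as `process_traces` does after `annotate()`. This assumes that the annotations keep the start time of the input traces.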

diff --git a/lassie/models/detection.py b/lassie/models/detection.py

index f4027182..502ebec9 100644

--- a/lassie/models/detection.py

+++ b/lassie/models/detection.py

@@ -8,7 +8,9 @@

from typing import TYPE_CHECKING, Any, ClassVar, Iterator, Literal, Type, TypeVar

from uuid import UUID, uuid4

+import numpy as np

from pydantic import BaseModel, Field, PrivateAttr, computed_field

+from pyevtk.hl import pointsToVTK

from pyrocko import io

from pyrocko.gui import marker

from pyrocko.model import Event, dump_events

@@ -366,8 +368,7 @@ def as_pyrocko_event(self) -> Event:

lon=self.lon,

east_shift=self.east_shift,

north_shift=self.north_shift,

- depth=self.depth,

- elevation=self.elevation,

+ depth=self.effective_depth,

magnitude=self.magnitude or self.semblance,

magnitude_type=self.magnitude_type,

)

@@ -441,7 +442,21 @@ def n_detections(self) -> int:

@property

def markers_dir(self) -> Path:

- return self.rundir / "pyrocko_markers"

+ dir = self.rundir / "pyrocko_markers"

+ dir.mkdir(exist_ok=True)

+ return dir

+

+ @property

+ def csv_dir(self) -> Path:

+ dir = self.rundir / "csv"

+ dir.mkdir(exist_ok=True)

+ return dir

+

+ @property

+ def vtk_dir(self) -> Path:

+ dir = self.rundir / "vtk"

+ dir.mkdir(exist_ok=True)

+ return dir

def add(self, detection: EventDetection) -> None:

markers_file = self.markers_dir / f"{time_to_path(detection.time)}.list"

@@ -465,20 +480,24 @@ def add(self, detection: EventDetection) -> None:

def dump_detections(self, jitter_location: float = 0.0) -> None:

"""Dump all detections to files in the detection directory."""

- csv_folder = self.rundir / "csv"

- csv_folder.mkdir(exist_ok=True)

logger.debug("dumping detections")

- self.save_csvs(csv_folder / "detections_locations.csv")

- self.save_pyrocko_events(self.rundir / "pyrocko_events.list")

+ self.export_csv(self.csv_dir / "detections.csv")

+ self.export_pyrocko_events(self.rundir / "pyrocko_detections.list")

+

+ self.export_vtk(self.vtk_dir / "detections")

if jitter_location:

- self.save_csvs(

- csv_folder / "detections_locations_jittered.csv",

+ self.export_csv(

+ self.csv_dir / "detections_jittered.csv",

jitter_location=jitter_location,

)

- self.save_pyrocko_events(

- self.rundir / "pyrocko_events_jittered.list",

+ self.export_pyrocko_events(

+ self.rundir / "pyrocko_detections_jittered.list",

+ jitter_location=jitter_location,

+ )

+ self.export_vtk(

+ self.vtk_dir / "detections_jittered",

jitter_location=jitter_location,

)

@@ -515,27 +534,29 @@ def load_rundir(cls, rundir: Path) -> EventDetections:

console.log(f"loaded {detections.n_detections} detections")

return detections

- def save_csvs(self, file: Path, jitter_location: float = 0.0) -> None:

- """Save detections to a CSV file

+ def export_csv(self, file: Path, jitter_location: float = 0.0) -> None:

+ """Export detections to a CSV file

Args:

file (Path): output filename

randomize_meters (float, optional): randomize the location of each detection

by this many meters. Defaults to 0.0.

"""

- lines = ["lat, lon, depth, semblance, time, distance_border"]

+ lines = ["lat,lon,depth,semblance,time,distance_border"]

for detection in self:

if jitter_location:

detection = detection.jitter_location(jitter_location)

lat, lon = detection.effective_lat_lon

lines.append(

- f"{lat:.5f}, {lon:.5f}, {-detection.effective_elevation:.1f},"

- f" {detection.semblance}, {detection.time}, {detection.distance_border}"

+ f"{lat:.5f},{lon:.5f},{detection.effective_depth:.1f},"

+ f"{detection.semblance},{detection.time},{detection.distance_border}"

)

file.write_text("\n".join(lines))

- def save_pyrocko_events(self, filename: Path, jitter_location: float = 0.0) -> None:

- """Save Pyrocko events for all detections to a file

+ def export_pyrocko_events(

+ self, filename: Path, jitter_location: float = 0.0

+ ) -> None:

+ """Export Pyrocko events for all detections to a file

Args:

filename (Path): output filename

@@ -543,16 +564,14 @@ def save_pyrocko_events(self, filename: Path, jitter_location: float = 0.0) -> N

logger.info("saving Pyrocko events to %s", filename)

detections = self.detections

if jitter_location:

- detections = [

- detection.jitter_location(jitter_location) for detection in detections

- ]

+ detections = [det.jitter_location(jitter_location) for det in detections]

dump_events(

- [detection.as_pyrocko_event() for detection in detections],

+ [det.as_pyrocko_event() for det in detections],

filename=str(filename),

)

- def save_pyrocko_markers(self, filename: Path) -> None:

- """Save Pyrocko markers for all detections to a file

+ def export_pyrocko_markers(self, filename: Path) -> None:

+ """Export Pyrocko markers for all detections to a file

Args:

filename (Path): output filename

@@ -563,5 +582,33 @@ def save_pyrocko_markers(self, filename: Path) -> None:

pyrocko_markers.extend(detection.get_pyrocko_markers())

marker.save_markers(pyrocko_markers, str(filename))

+ def export_vtk(

+ self,

+ filename: Path,

+ jitter_location: float = 0.0,

+ ) -> None:

+ """Export events as vtk file

+

+ Args:

+ filename (Path): output filename, without file extension.

+ jitter_location (float, optional): randomize each location by this many meters.

+ """

+ detections = self.detections

+ if jitter_location:

+ detections = [det.jitter_location(jitter_location) for det in detections]

+ offsets = np.array(

+ [(det.east_shift, det.north_shift, det.depth) for det in detections]

+ )

+ pointsToVTK(

+ str(filename),

+ np.array(offsets[:, 0]),

+ np.array(offsets[:, 1]),

+ -np.array(offsets[:, 2]),

+ data={

+ "semblance": np.array([det.semblance for det in detections]),

+ "time": np.array([det.time.timestamp() for det in detections]),

+ },

+ )

+

def __iter__(self) -> Iterator[EventDetection]:

return iter(sorted(self.detections, key=lambda d: d.time))
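`export_vtk` passes the detections to `pointsToVTK` as east, north and negated depth columns, so z points up in the exported scene while `Location.depth` stays positive down. Below is a minimal stdlib sketch of that column layout. `Detection` is a hypothetical stand-in for `EventDetection` that carries only the fields used here.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical stand-in for EventDetection; all distances in meters."""

    east_shift: float
    north_shift: float
    depth: float  # positive down, as in Location.depth


def to_vtk_columns(
    detections: List[Detection],
) -> Tuple[List[float], List[float], List[float]]:
    """Split detections into x (east), y (north) and z (up) point columns."""
    xs = [det.east_shift for det in detections]
    ys = [det.north_shift for det in detections]
    # Negate depth so that z increases upwards, mirroring export_vtk.
    zs = [-det.depth for det in detections]
    return xs, ys, zs


detections = [Detection(100.0, -50.0, 3000.0), Detection(-20.0, 10.0, 500.0)]
print(to_vtk_columns(detections))
# ([100.0, -20.0], [-50.0, 10.0], [-3000.0, -500.0])
```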

diff --git a/lassie/models/location.py b/lassie/models/location.py

index 9347730a..d1ab0c5a 100644

--- a/lassie/models/location.py

+++ b/lassie/models/location.py

@@ -5,7 +5,7 @@

import struct

from typing import TYPE_CHECKING, Iterable, Literal, TypeVar

-from pydantic import BaseModel, PrivateAttr

+from pydantic import BaseModel, Field, PrivateAttr

from pyrocko import orthodrome as od

from typing_extensions import Self

@@ -18,10 +18,22 @@

class Location(BaseModel):

lat: float

lon: float

- east_shift: float = 0.0

- north_shift: float = 0.0

- elevation: float = 0.0

- depth: float = 0.0

+ east_shift: float = Field(

+ default=0.0,

+ description="East shift relative to the geographical reference in meters.",

+ )

+ north_shift: float = Field(

+ default=0.0,

+ description="North shift relative to the geographical reference in meters.",

+ )

+ elevation: float = Field(

+ default=0.0,

+ description="Elevation in meters.",

+ )

+ depth: float = Field(

+ default=0.0,

+ description="Depth in meters, positive is down.",

+ )

_cached_lat_lon: tuple[float, float] | None = PrivateAttr(None)

@@ -107,7 +119,7 @@ def distance_to(self, other: Location) -> float:

return math.sqrt((sx - ox) ** 2 + (sy - oy) ** 2 + (sz - oz) ** 2)

def offset_from(self, other: Location) -> tuple[float, float, float]:

- """Return offset vector (east, north, depth) to other location in [m]

+ """Return offset vector (east, north, depth) from other location in [m]

Args:

other (Location): The other location.

diff --git a/lassie/models/semblance.py b/lassie/models/semblance.py

index eb74a9ae..75f5ef78 100644

--- a/lassie/models/semblance.py

+++ b/lassie/models/semblance.py

@@ -132,6 +132,15 @@ def median_abs_deviation(self) -> float:

"""Median absolute deviation of the maximum semblance."""

return float(stats.median_abs_deviation(self.maximum_semblance))

+ def apply_exponent(self, exponent: float) -> None:

+ """Apply an exponent to the semblance in place.

+

+ Args:

+ exponent (float): Exponent the semblance values are raised to.

+ """

+ self.semblance_unpadded **= exponent

+ self._clear_cache()

+

def median_mask(self, level: float = 3.0) -> np.ndarray:

"""Median mask above a level from the maximum semblance.
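`Search` raises the waveform images to `image_mean_p` before stacking. It then uses `apply_exponent` to take the `1 / image_mean_p` power of the stacked semblance. This resembles a generalized (power) mean, where a larger `p` favours a few strong contributions over many weak ones. The stdlib sketch below shows a weighted power mean for intuition only. It does not reproduce lassie's exact stacking and normalization, and `weighted_power_mean` is a hypothetical helper.

```python
from typing import Sequence


def weighted_power_mean(
    values: Sequence[float], weights: Sequence[float], p: float
) -> float:
    """Weighted generalized mean: (sum(w * x**p) / sum(w)) ** (1 / p).

    Values are assumed to be non-negative.
    """
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if p <= 0.0:
        raise ValueError("p must be positive")
    total_weight = sum(weights)
    if total_weight <= 0.0:
        raise ValueError("weights must sum to a positive number")
    stacked = sum(w * x**p for x, w in zip(values, weights))
    return (stacked / total_weight) ** (1.0 / p)


# One strong arrival among weak ones: p = 2 rewards the outlier more than p = 1.
image_values = [0.1, 0.1, 0.9]
unit_weights = [1.0, 1.0, 1.0]
print(weighted_power_mean(image_values, unit_weights, 1.0))
print(weighted_power_mean(image_values, unit_weights, 2.0))
```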

diff --git a/lassie/models/station.py b/lassie/models/station.py

index 6e6054a2..7c0f97f7 100644

--- a/lassie/models/station.py

+++ b/lassie/models/station.py

@@ -5,14 +5,14 @@

from typing import TYPE_CHECKING, Any, Iterable, Iterator

import numpy as np

-from pydantic import BaseModel, constr

+from pydantic import BaseModel, Field, FilePath, constr

from pyrocko.io.stationxml import load_xml

from pyrocko.model import Station as PyrockoStation

from pyrocko.model import dump_stations_yaml, load_stations

if TYPE_CHECKING:

- from pyrocko.trace import Trace

from pyrocko.squirrel import Squirrel

+ from pyrocko.trace import Trace

from lassie.models.location import CoordSystem, Location

@@ -22,9 +22,9 @@

class Station(Location):

- network: constr(max_length=2)

- station: constr(max_length=5)

- location: constr(max_length=2) = ""

+ network: str = Field(..., max_length=2)

+ station: str = Field(..., max_length=5)

+ location: str = Field(default="", max_length=2)

@classmethod

def from_pyrocko_station(cls, station: PyrockoStation) -> Station:

@@ -56,11 +56,22 @@ def __hash__(self) -> int:

class Stations(BaseModel):

- station_xmls: list[Path] = []

- pyrocko_station_yamls: list[Path] = []

-

+ pyrocko_station_yamls: list[FilePath] = Field(

+ default=[],

+ description="List of [Pyrocko station YAML]"

+ "(https://pyrocko.org/docs/current/formats/yaml.html) files.",

+ )

+ station_xmls: list[FilePath] = Field(

+ default=[],

+ description="List of StationXML files.",

+ )

+

+ blacklist: set[constr(pattern=NSL_RE)] = Field(

+ default=set(),

+ description="Stations to blacklist and exclude from detection. "

+ "Format is `['NET.STA.LOC', ...]`",

+ )

stations: list[Station] = []

- blacklist: set[constr(pattern=NSL_RE)] = set()

def model_post_init(self, __context: Any) -> None:

loaded_stations = []

@@ -183,7 +194,7 @@ def get_coordinates(self, system: CoordSystem = "geographic") -> np.ndarray:

[(*sta.effective_lat_lon, sta.effective_elevation) for sta in self]

)

- def dump_pyrocko_stations(self, filename: Path) -> None:

+ def export_pyrocko_stations(self, filename: Path) -> None:

"""Dump stations to pyrocko station yaml file.

Args:

@@ -194,7 +205,7 @@ def dump_pyrocko_stations(self, filename: Path) -> None:

filename=str(filename.expanduser()),

)

- def dump_csv(self, filename: Path) -> None:

+ def export_csv(self, filename: Path) -> None:

"""Dump stations to CSV file.

Args:

@@ -208,5 +219,8 @@ def dump_csv(self, filename: Path) -> None:

f"{sta.lat},{sta.lon},{sta.elevation},{sta.depth}\n"

)

+ def export_vtk(self, reference: Location | None = None) -> None:

+ ...

+

def __hash__(self) -> int:

return hash(sta for sta in self)
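The `blacklist` field validates each entry against `NSL_RE` and expects `NET.STA.LOC` codes. Below is a stdlib sketch of that kind of validation and filtering. `NSL_PATTERN` and both helpers are hypothetical and only approximate lassie's rules, using the length limits of the `Station` fields.

```python
import re
from typing import Iterable, List, Tuple

# Hypothetical pattern: lassie's NSL_RE is not shown in this diff. The lengths
# mirror the Station fields (network <= 2, station <= 5, location <= 2).
NSL_PATTERN = re.compile(r"([A-Za-z0-9]{1,2})\.([A-Za-z0-9]{1,5})\.([A-Za-z0-9]{0,2})")


def parse_nsl(code: str) -> Tuple[str, str, str]:
    """Split a 'NET.STA.LOC' code into network, station and location."""
    match = NSL_PATTERN.fullmatch(code)
    if match is None:
        raise ValueError(f"invalid NSL code {code!r}, expected 'NET.STA.LOC'")
    return match.group(1), match.group(2), match.group(3)


def filter_blacklisted(codes: Iterable[str], blacklist: Iterable[str]) -> List[str]:
    """Return the station codes that are not on the blacklist."""
    blocked = {parse_nsl(code) for code in blacklist}
    return [code for code in codes if parse_nsl(code) not in blocked]


print(filter_blacklisted(["GR.BFO.", "XX.ABC.00"], blacklist={"GR.BFO."}))
# ['XX.ABC.00']
```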

diff --git a/lassie/octree.py b/lassie/octree.py

index 51c2fa84..ac8fa1de 100644

--- a/lassie/octree.py

+++ b/lassie/octree.py

@@ -15,7 +15,6 @@

Field,

PositiveFloat,

PrivateAttr,

- confloat,

field_validator,

model_validator,

)

@@ -66,7 +65,7 @@ class Node(BaseModel):

semblance: float = 0.0

tree: Octree | None = Field(None, exclude=True)

- children: tuple[Node, ...] = Field((), exclude=True)

+ children: tuple[Node, ...] = Field(default=(), exclude=True)

_hash: bytes | None = PrivateAttr(None)

_children_cached: tuple[Node, ...] = PrivateAttr(())

@@ -141,7 +140,7 @@ def as_location(self) -> Location:

if not self.tree:

raise AttributeError("parent tree not set")

if not self._location:

- reference = self.tree.reference

+ reference = self.tree.location

self._location = Location.model_construct(

lat=reference.lat,

lon=reference.lon,

@@ -160,12 +159,14 @@ def __iter__(self) -> Iterator[Node]:

yield self

def hash(self) -> bytes:

+ if not self.tree:

+ raise AttributeError("parent tree not set")

if self._hash is None:

self._hash = sha1(

struct.pack(

"dddddd",

- self.tree.reference.lat,

- self.tree.reference.lon,

+ self.tree.location.lat,

+ self.tree.location.lon,

self.east,

self.north,

self.depth,

@@ -179,19 +180,47 @@ def __hash__(self) -> int:

class Octree(BaseModel):

- reference: Location = Location(lat=0.0, lon=0)

- size_initial: PositiveFloat = 2 * KM

- size_limit: PositiveFloat = 500

- east_bounds: tuple[float, float] = (-10 * KM, 10 * KM)

- north_bounds: tuple[float, float] = (-10 * KM, 10 * KM)

- depth_bounds: tuple[float, float] = (0 * KM, 20 * KM)

- absorbing_boundary: confloat(ge=0.0) = 1 * KM

+ location: Location = Field(

+ default=Location(lat=0.0, lon=0.0),

+ description="The reference location of the octree.",

+ )

+ size_initial: PositiveFloat = Field(

+ default=2 * KM,

+ description="Initial size of a cubic octree node in meters.",

+ )

+ size_limit: PositiveFloat = Field(

+ default=500.0,

+ description="Smallest possible size of an octree node in meters.",

+ )

+ east_bounds: tuple[float, float] = Field(

+ default=(-10 * KM, 10 * KM),

+ description="East bounds of the octree in meters.",

+ )

+ north_bounds: tuple[float, float] = Field(

+ default=(-10 * KM, 10 * KM),

+ description="North bounds of the octree in meters.",

+ )

+ depth_bounds: tuple[float, float] = Field(

+ default=(0 * KM, 20 * KM),

+ description="Depth bounds of the octree in meters.",

+ )

+ absorbing_boundary: float = Field(

+ default=1 * KM,

+ ge=0.0,

+ description="Absorbing boundary in meters. Detections inside the boundary will be tagged.",

+ )

_root_nodes: list[Node] = PrivateAttr([])

_cached_coordinates: dict[CoordSystem, np.ndarray] = PrivateAttr({})

model_config = ConfigDict(ignored_types=(cached_property,))

+ @field_validator("location")

+ def check_reference(cls, location: Location) -> Location: # noqa: N805

+ if location.lat == 0.0 and location.lon == 0.0:

+ raise ValueError("invalid location, expected non-zero lat/lon")

+ return location

+

@field_validator("east_bounds", "north_bounds", "depth_bounds")

def check_bounds(

cls, # noqa: N805

@@ -209,6 +238,7 @@ def check_limits(self) -> Octree:

f"invalid octree size limits ({self.size_initial}, {self.size_limit}),"

" expected size_limit <= size_initial"

)

+ # self.reference = self.reference.shifted_origin()

return self

def model_post_init(self, __context: Any) -> None:

@@ -380,18 +410,23 @@ def total_number_nodes(self) -> int:

"""

return len(self._root_nodes) * (8 ** self.n_levels())

- def maximum_number_nodes(self) -> int:

- """Returns the maximum number of nodes.

+ def cached_bottom(self) -> Self:

+ """Returns a copy of the octree refined to the cached bottom nodes.

+

+ Raises:

+ EnvironmentError: If the octree has never been split.

Returns:

- int: Maximum number of nodes.

+ Self: Copy of the octree with cached bottom nodes.

"""

- return int(

- (self.east_bounds[1] - self.east_bounds[0])

- * (self.north_bounds[1] - self.north_bounds[0])

- * (self.depth_bounds[1] - self.depth_bounds[0])

- / (self.smallest_node_size() ** 3)

- )

+ tree = self.copy(deep=True)

+ split_nodes = []

+ for node in tree:

+ if node._children_cached:

+ split_nodes.extend(node.split())

+ if not split_nodes:

+ raise EnvironmentError("octree has never been split.")

+ return tree

def copy(self, deep=False) -> Self:

tree = super().model_copy(deep=deep)
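The new `absorbing_boundary` description states that detections inside the boundary are tagged. Below is a stdlib sketch of a proximity test against the octree bounds. The function is hypothetical. Treating the top face (`depth_bounds[0]`) as a free surface that is only checked on request is an assumption; this diff does not specify it.

```python
from typing import Tuple

Bounds = Tuple[float, float]


def in_absorbing_boundary(
    east: float,
    north: float,
    depth: float,
    east_bounds: Bounds,
    north_bounds: Bounds,
    depth_bounds: Bounds,
    boundary: float,
    include_surface: bool = False,
) -> bool:
    """Return True if the point lies within `boundary` meters of an octree face.

    Assumption: the top face (depth_bounds[0]) is a free surface and is only
    checked when include_surface is True.
    """
    distances = [
        east - east_bounds[0],
        east_bounds[1] - east,
        north - north_bounds[0],
        north_bounds[1] - north,
        depth_bounds[1] - depth,
    ]
    if include_surface:
        distances.append(depth - depth_bounds[0])
    return min(distances) < boundary


KM = 1000.0
bounds = dict(
    east_bounds=(-10 * KM, 10 * KM),
    north_bounds=(-10 * KM, 10 * KM),
    depth_bounds=(0.0, 20 * KM),
    boundary=1 * KM,
)
print(in_absorbing_boundary(9.5 * KM, 0.0, 5 * KM, **bounds))
# True
```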

diff --git a/lassie/plot/detections.py b/lassie/plot/detections.py

index 5c7bad69..ad8ae6f8 100644

--- a/lassie/plot/detections.py

+++ b/lassie/plot/detections.py

@@ -1,66 +1,74 @@

from __future__ import annotations

from datetime import datetime, timezone

-from pathlib import Path

-from typing import TYPE_CHECKING, cast

+from typing import ClassVar, Literal

-import matplotlib.pyplot as plt

import numpy as np

-from .utils import with_default_axes

-

-if TYPE_CHECKING:

- from lassie.models.detection import EventDetections

+from lassie.plot.base import BasePlot, LassieFigure

HOUR = 3600

DAY = 24 * HOUR

-@with_default_axes

-def plot_detections(

- detections: EventDetections,

- axes: plt.Axes | None = None,

- filename: Path | None = None,

-) -> None:

- axes = cast(plt.Axes, axes) # injected by wrapper

-

- semblances = [detection.semblance for detection in detections]

- times = [

- detection.time.replace(tzinfo=None) # Stupid fix for matplotlib bug

- for detection in detections

- ]

-

- axes.scatter(times, semblances, cmap="viridis_r", c=semblances, s=3, alpha=0.5)

- axes.set_ylabel("Detection Semblance")

- axes.grid(axis="x", alpha=0.3)

- # axes.figure.autofmt_xdate()

-

- cum_axes = axes.twinx()

-

- cummulative_detections = np.cumsum(np.ones(detections.n_detections))

- cum_axes.plot(

- times,

- cummulative_detections,

- color="black",

- alpha=0.8,

- label="Cumulative Detections",

- )

- cum_axes.set_ylabel("# Detections")

-

- to_timestamps = np.vectorize(lambda d: d.timestamp())

- from_timestamps = np.vectorize(lambda t: datetime.fromtimestamp(t, tz=timezone.utc))

- detection_time_span = times[-1] - times[0]

- daily_rate, edges = np.histogram(

- to_timestamps(times),

- bins=detection_time_span.days,

- )

-

- cum_axes.stairs(

- daily_rate,

- from_timestamps(edges),

- color="gray",

- fill=True,

- alpha=0.5,

- label="Daily Detections",

- )

- cum_axes.legend(loc="upper left", fontsize="small")

+DetectionAttribute = Literal["semblance", "magnitude"]

+

+

+class DetectionsDistribution(BasePlot):

+ attribute: ClassVar[DetectionAttribute] = "semblance"

+

+ def get_figure(self) -> LassieFigure:

+ return self.create_figure(attribute=self.attribute)

+

+ def create_figure(

+ self,

+ attribute: DetectionAttribute = "semblance",

+ ) -> LassieFigure:

+ figure = self.new_figure(f"event-distribution-{attribute}.png")

+ axes = figure.get_axes()

+

+ detections = self.detections

+

+ values = [getattr(detection, attribute) for detection in detections]

+ times = [

+ detection.time.replace(tzinfo=None) # strip tzinfo to work around a matplotlib bug

+ for detection in detections

+ ]

+

+ axes.scatter(times, values, cmap="viridis_r", c=values, s=3, alpha=0.5)

+ axes.set_ylabel(attribute.capitalize())

+ axes.grid(axis="x", alpha=0.3)

+ # axes.figure.autofmt_xdate()

+

+ cum_axes = axes.twinx()

+

+ cumulative_detections = np.cumsum(np.ones(detections.n_detections))

+ cum_axes.plot(

+ times,

+ cumulative_detections,

+ color="black",

+ alpha=0.8,

+ label="Cumulative Detections",

+ )

+ cum_axes.set_ylabel("# Detections")

+

+ to_timestamps = np.vectorize(lambda d: d.timestamp())

+ from_timestamps = np.vectorize(

+ lambda t: datetime.fromtimestamp(t, tz=timezone.utc)

+ )

+ detection_time_span = times[-1] - times[0]

+ daily_rate, edges = np.histogram(

+ to_timestamps(times),

+ bins=max(detection_time_span.days, 1),

+ )

+

+ cum_axes.stairs(

+ daily_rate,

+ from_timestamps(edges),

+ color="gray",

+ fill=True,

+ alpha=0.5,

+ label="Daily Detections",

+ )

+ cum_axes.legend(loc="upper left", fontsize="small")

+ return figure
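`np.histogram` raises a `ValueError` when given an integer `bins` of zero. `bins=detection_time_span.days` produces zero for catalogs spanning less than a day. Below is a stdlib sketch of the same equal-width binning with at least one bin. `binned_counts` is a hypothetical helper and is not part of lassie.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


def binned_counts(
    times: List[datetime], n_bins: Optional[int] = None
) -> Tuple[List[int], List[datetime]]:
    """Count event times in equal-width bins from the first to the last time.

    By default one bin per full day of the time span, but never fewer than one.
    """
    if not times:
        return [], []
    start, end = min(times), max(times)
    span = end - start
    if n_bins is None:
        n_bins = max(span.days, 1)
    width = span / n_bins
    counts = [0] * n_bins
    for time in times:
        # The last time falls on the right edge; clamp it into the final bin,
        # as np.histogram does.
        index = min(int((time - start) / width), n_bins - 1) if width else 0
        counts[index] += 1
    edges = [start + i * width for i in range(n_bins + 1)]
    return counts, edges


t0 = datetime(2023, 5, 1)
print(binned_counts([t0, t0 + timedelta(hours=3), t0 + timedelta(hours=6)])[0])
# [3]
```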

diff --git a/lassie/plot/octree.py b/lassie/plot/octree.py

index 83847d76..38a3b870 100644

--- a/lassie/plot/octree.py

+++ b/lassie/plot/octree.py

@@ -1,7 +1,7 @@

from __future__ import annotations

import logging

-from typing import TYPE_CHECKING, Callable

+from typing import TYPE_CHECKING, Callable, Iterator

import matplotlib.pyplot as plt

import numpy as np

@@ -11,7 +11,7 @@

from matplotlib.collections import PatchCollection

from matplotlib.patches import Rectangle

-from lassie.models.detection import EventDetection

+from lassie.plot.base import BasePlot, LassieFigure

if TYPE_CHECKING:

from pathlib import Path

@@ -62,6 +62,45 @@ def octree_to_rectangles(

)

+class OctreeRefinement(BasePlot):

+ normalize: bool = False

+ plot_detections: bool = False

+

+ def get_figure(self) -> Iterator[LassieFigure]:

+ yield self.create_figure()

+

+ def create_figure(self) -> LassieFigure:

+ figure = self.new_figure("octree-refinement.png")

+ ax = figure.get_axes()

+ octree = self.search.octree

+

+ for spine in ax.spines.values():

+ spine.set_visible(False)

+ ax.set_xticklabels([])

+ ax.set_xticks([])

+ ax.set_yticklabels([])

+ ax.set_yticks([])

+ ax.set_xlabel("East [m]")

+ ax.set_ylabel("North [m]")

+ ax.add_collection(octree_to_rectangles(octree, normalize=self.normalize))

+

+ ax.set_title(f"Octree surface tiles (nodes: {octree.n_nodes})")

+

+ ax.autoscale()

+

+ if self.plot_detections:

+ detections = self.search.detections

+ for detection in detections or []:

+ ax.scatter(

+ detection.east_shift,

+ detection.north_shift,

+ marker="*",

+ s=50,

+ color="yellow",

+ )

+ return figure

+

+

def plot_octree_3d(octree: Octree, cmap: str = "Oranges") -> None:

ax = plt.figure().add_subplot(projection="3d")

colormap = get_cmap(cmap)

@@ -70,9 +109,9 @@ def plot_octree_3d(octree: Octree, cmap: str = "Oranges") -> None:

colors = colormap(octree.semblance, alpha=octree.semblance)

ax.scatter(coords[0], coords[1], coords[2], c=colors)

- ax.set_xlabel("east [m]")

- ax.set_ylabel("north [m]")

- ax.set_zlabel("depth [m]")

+ ax.set_xlabel("East [m]")

+ ax.set_ylabel("North [m]")

+ ax.set_zlabel("Depth [m]")

plt.show()

@@ -89,51 +128,9 @@ def plot_octree_scatter(

colors = colormap(surface[:, 2], alpha=normalized_semblance)

ax = plt.figure().gca()

ax.scatter(surface[:, 0], surface[:, 1], c=colors)

- ax.set_xlabel("east [m]")

- ax.set_ylabel("north [m]")

- plt.show()

-

-

-def plot_octree_surface_tiles(

- octree: Octree,

- axes: plt.Axes | None = None,

- normalize: bool = False,

- filename: Path | None = None,

- detections: list[EventDetection] | None = None,

-) -> None:

- if axes is None:

- fig = plt.figure()

- ax = fig.gca()

- else:

- fig = axes.figure

- ax = axes

-

- for spine in ax.spines.values():

- spine.set_visible(False)

- ax.set_xticklabels([])

- ax.set_xticks([])

- ax.set_yticklabels([])

- ax.set_yticks([])

ax.set_xlabel("East [m]")

ax.set_ylabel("North [m]")

- ax.add_collection(octree_to_rectangles(octree, normalize=normalize))

-

- ax.set_title(f"Octree surface tiles (nodes: {octree.n_nodes})")

-

- ax.autoscale()

- for detection in detections or []:

- ax.scatter(

- detection.east_shift,

- detection.north_shift,

- marker="*",

- s=50,

- color="yellow",

- )

- if filename is not None:

- fig.savefig(str(filename), bbox_inches="tight", dpi=300)

- plt.close()

- elif axes is None:

- plt.show()

+ plt.show()

def plot_octree_semblance_movie(

diff --git a/lassie/plot/plot.py b/lassie/plot/plot.py

deleted file mode 100644

index 69a3ef88..00000000

--- a/lassie/plot/plot.py

+++ /dev/null

@@ -1,58 +0,0 @@

-from __future__ import annotations

-

-from typing import TYPE_CHECKING

-

-import matplotlib.pyplot as plt

-import numpy as np

-from matplotlib import cm

-from matplotlib.collections import PatchCollection

-from matplotlib.patches import Rectangle

-

-if TYPE_CHECKING:

- from matplotlib.colors import Colormap

-

- from lassie.octree import Octree

-

-

-def octree_to_rectangles(

- octree: Octree,

- cmap: str | Colormap = "Oranges",

-) -> PatchCollection:

- if isinstance(cmap, str):

- cmap = cm.get_cmap(cmap)

-

- coords = octree.reduce_surface()

- coords = coords[np.argsort(coords[:, 2])[::-1]]

- sizes = coords[:, 2]

- semblances = coords[:, 3]

- sizes = sorted(set(sizes), reverse=True)

- zorders = {size: 1.0 + float(order) for order, size in enumerate(sizes)}

- print(zorders)

-

- rectangles = []

- for node in coords:

- east, north, size, semblance = node

- half_size = size / 2

- rect = Rectangle(

- xy=(east - half_size, north - half_size),

- width=size,

- height=size,

- zorder=semblance,

- )

- rectangles.append(rect)

- colors = cmap(semblances / semblances.max())

- print(colors)

- return PatchCollection(patches=rectangles, facecolors=colors, edgecolors="k")

-

-

-def plot_octree(octree: Octree, axes: plt.Axes | None = None) -> None:

- if axes is None:

- fig = plt.figure()

- ax = fig.gca()

- else:

- ax = axes

- ax.add_collection(octree_to_rectangles(octree))

-

- ax.autoscale()

- if axes is None:

- plt.show()

diff --git a/lassie/search.py b/lassie/search.py

index abc8c372..7399d9cc 100644

--- a/lassie/search.py

+++ b/lassie/search.py

@@ -24,7 +24,6 @@

from lassie.models.detection import EventDetection, EventDetections, PhaseDetection

from lassie.models.semblance import Semblance, SemblanceStats

from lassie.octree import NodeSplitError, Octree

-from lassie.plot.octree import plot_octree_surface_tiles

from lassie.signals import Signal

from lassie.station_corrections import StationCorrections

from lassie.tracers import (

@@ -33,7 +32,13 @@

FastMarchingTracer,

RayTracers,

)

-from lassie.utils import PhaseDescription, alog_call, datetime_now, time_to_path

+from lassie.utils import (

+ PhaseDescription,

+ alog_call,

+ datetime_now,

+ human_readable_bytes,

+ time_to_path,

+)

from lassie.waveforms import PyrockoSquirrel, WaveformProviderType

if TYPE_CHECKING:

@@ -70,10 +75,13 @@ class Search(BaseModel):

LocalMagnitudeExtractor(),

]

- sampling_rate: SamplingRate = 50

+ sampling_rate: SamplingRate = 100

detection_threshold: PositiveFloat = 0.05

- node_split_threshold: float = Field(default=0.9, gt=0.0, lt=1.0)

detection_blinding: timedelta = timedelta(seconds=2.0)

+

+ image_mean_p: float = Field(default=1, ge=1.0, le=2.0)

+

+ node_split_threshold: float = Field(default=0.9, gt=0.0, lt=1.0)

window_length: timedelta = timedelta(minutes=5)

n_threads_parstack: int = Field(default=0, ge=0)

@@ -97,11 +105,14 @@ class Search(BaseModel):

# Signals

_new_detection: Signal[EventDetection] = PrivateAttr(Signal())

- _batch_processing_durations: Deque[timedelta] = PrivateAttr(

+ _batch_proc_time: Deque[timedelta] = PrivateAttr(

+ default_factory=lambda: deque(maxlen=25)

+ )

+ _batch_cum_durations: Deque[timedelta] = PrivateAttr(

default_factory=lambda: deque(maxlen=25)

)

- def init_rundir(self, force=False) -> None:

+ def init_rundir(self, force: bool = False) -> None:

rundir = (

self.project_dir / self._config_stem or f"run-{time_to_path(self.created)}"

)

@@ -121,13 +132,16 @@ def init_rundir(self, force=False) -> None:

if not rundir.exists():

rundir.mkdir()

- file_logger = logging.FileHandler(self._rundir / "lassie.log")

- logging.root.addHandler(file_logger)

self.write_config()

+ self._init_logging()

logger.info("created new rundir %s", rundir)

self._detections = EventDetections(rundir=rundir)

+ def _init_logging(self) -> None:

+ file_logger = logging.FileHandler(self._rundir / "lassie.log")

+ logging.root.addHandler(file_logger)

+

def write_config(self, path: Path | None = None) -> None:

rundir = self._rundir

path = path or rundir / "search.json"

@@ -136,8 +150,11 @@ def write_config(self, path: Path | None = None) -> None:

path.write_text(self.model_dump_json(indent=2, exclude_unset=True))

logger.debug("dumping stations...")

- self.stations.dump_pyrocko_stations(rundir / "pyrocko-stations.yaml")

- self.stations.dump_csv(rundir / "stations.csv")

+ self.stations.export_pyrocko_stations(rundir / "pyrocko_stations.yaml")

+

+ csv_dir = rundir / "csv"

+ csv_dir.mkdir(exist_ok=True)

+ self.stations.export_csv(csv_dir / "stations.csv")

@property

def semblance_stats(self) -> SemblanceStats:

@@ -155,7 +172,9 @@ def init_boundaries(self) -> None:

self._distance_range = (distances.min(), distances.max())

# Timing ranges

- for phase, tracer in self.ray_tracers.iter_phase_tracer():

+ for phase, tracer in self.ray_tracers.iter_phase_tracer(

+ phases=self.image_functions.get_phases()

+ ):

traveltimes = tracer.get_travel_times(phase, self.octree, self.stations)

self._travel_time_ranges[phase] = (

timedelta(seconds=np.nanmin(traveltimes)),

@@ -181,7 +200,7 @@ def init_boundaries(self) -> None:

if self.window_length < 2 * self._window_padding + self._shift_range:

raise ValueError(

f"window length {self.window_length} is too short for the "

- f"theoretical shift range {self._shift_range} and "

+ f"theoretical travel time range {self._shift_range} and "

f"cummulative window padding of {self._window_padding}."

" Increase the window_length time."

)

@@ -193,22 +212,6 @@ def init_boundaries(self) -> None:

*self._distance_range,

)

- def _plot_octree_surface(

- self,

- octree: Octree,

- time: datetime,

- detections: list[EventDetection] | None = None,

- ) -> None:

- logger.info("plotting octree surface...")

- filename = (

- self._rundir

- / "figures"

- / "octree_surface"

- / f"{time_to_path(time)}-nodes-{octree.n_nodes}.png"

- )

- filename.parent.mkdir(parents=True, exist_ok=True)

- plot_octree_surface_tiles(octree, filename=filename, detections=detections)

-

async def prepare(self) -> None:

logger.info("preparing search...")

self.data_provider.prepare(self.stations)

@@ -216,13 +219,18 @@ async def prepare(self) -> None:

self.octree,

self.stations,

phases=self.image_functions.get_phases(),

+ rundir=self._rundir,

)

self.init_boundaries()

async def start(self, force_rundir: bool = False) -> None:

+ if not self.has_rundir():

+ self.init_rundir(force=force_rundir)

+

await self.prepare()

- self.init_rundir(force_rundir)

+

logger.info("starting search...")

+ batch_processing_start = datetime_now()

processing_start = datetime_now()

if self._progress.time_progress:

@@ -236,11 +244,15 @@ async def start(self, force_rundir: bool = False) -> None:

batch.clean_traces()

if batch.is_empty():

- logger.warning("batch is empty")

+ logger.warning("empty batch %s", batch.log_str())

continue

if batch.duration < 2 * self._window_padding:

- logger.warning("batch duration is too short")

+ logger.warning(

+ "duration of batch %s is too short: %s",

+ batch.log_str(),

+ batch.duration,

+ )

continue

search_block = SearchTraces(

@@ -259,38 +271,51 @@ async def start(self, force_rundir: bool = False) -> None:

self._detections.add(detection)

await self._new_detection.emit(detection)

- if batch.i_batch % 50 == 0:

+ if self._detections.n_detections and batch.i_batch % 50 == 0:

self._detections.dump_detections(jitter_location=self.octree.size_limit)

- processing_time = datetime_now() - processing_start

- self._batch_processing_durations.append(processing_time)

- if batch.n_batches:

- percent_processed = ((batch.i_batch + 1) / batch.n_batches) * 100

- else:

- percent_processed = 0.0

+ processing_time = datetime_now() - batch_processing_start

+ self._batch_proc_time.append(processing_time)

+ self._batch_cum_durations.append(batch.cumulative_duration)

+

+ processed_percent = (

+ ((batch.i_batch + 1) / batch.n_batches) * 100

+ if batch.n_batches

+ else 0.0

+ )

+ # processing_rate = (

+ # sum(self._batch_cum_durations, timedelta())

+ # / sum(self._batch_proc_time, timedelta()).total_seconds()

+ # )

+ processing_rate_bytes = human_readable_bytes(

+ batch.cumulative_bytes / processing_time.total_seconds()

+ )

+

logger.info(

- "%s%% processed - batch %d/%s - %s in %s",

- f"{percent_processed:.1f}" if percent_processed else "??",

- batch.i_batch + 1,

- str(batch.n_batches or "?"),

- batch.start_time,

+ "%s%% processed - batch %s in %s",

+ f"{processed_percent:.1f}" if processed_percent else "??",

+ batch.log_str(),

processing_time,

)

if batch.n_batches:

- remaining_time = (

- sum(self._batch_processing_durations, timedelta())

- / len(self._batch_processing_durations)

- * (batch.n_batches - batch.i_batch - 1)

+ remaining_time = sum(self._batch_proc_time, timedelta()) / len(

+ self._batch_proc_time

)

+ remaining_time *= batch.n_batches - batch.i_batch - 1

logger.info(

- "%s remaining - estimated finish at %s",

+ "processing rate %s/s - %s remaining - finish at %s",

+ processing_rate_bytes,

remaining_time,

datetime.now() + remaining_time, # noqa: DTZ005

)

- processing_start = datetime_now()

+ batch_processing_start = datetime_now()

self.set_progress(batch.end_time)

+ self._detections.dump_detections(jitter_location=self.octree.size_limit)

+ logger.info("finished search in %s", datetime_now() - processing_start)

+ logger.info("found %d detections", self._detections.n_detections)

+

async def add_features(self, event: EventDetection) -> None:

try:

squirrel = self.data_provider.get_squirrel()

@@ -313,6 +338,8 @@ def load_rundir(cls, rundir: Path) -> Self:

search._progress = SearchProgress.model_validate_json(

progress_file.read_text()

)

+

+ search._init_logging()

return search

@classmethod

@@ -331,7 +358,11 @@ def from_config(

model._config_stem = filename.stem

return model

+ def has_rundir(self) -> bool:

+ return hasattr(self, "_rundir") and self._rundir.exists()

+

def __del__(self) -> None:

+ # FIXME: Replace with signal observer?

if hasattr(self, "_detections"):

with contextlib.suppress(Exception):

self._detections.dump_detections(jitter_location=self.octree.size_limit)

@@ -385,10 +416,10 @@ async def calculate_semblance(

traveltimes_bad = np.isnan(traveltimes)

traveltimes[traveltimes_bad] = 0.0

- station_contribution = (~traveltimes_bad).sum(axis=1, dtype=float)

+ station_contribution = (~traveltimes_bad).sum(axis=1, dtype=np.float32)

shifts = np.round(-traveltimes / image.delta_t).astype(np.int32)

- weights = np.full_like(shifts, fill_value=image.weight, dtype=float)

+ weights = np.full_like(shifts, fill_value=image.weight, dtype=np.float32)

# Normalize by number of station contribution

with np.errstate(divide="ignore", invalid="ignore"):

@@ -423,6 +454,7 @@ async def get_images(self, sampling_rate: float | None = None) -> WaveformImages

if None not in self._images:

images = await self.parent.image_functions.process_traces(self.traces)

images.set_stations(self.parent.stations)

+ images.apply_exponent(self.parent.image_mean_p)

self._images[None] = images

if sampling_rate not in self._images:

@@ -475,21 +507,18 @@ async def search(

semblance_data=semblance.semblance_unpadded,

n_samples_semblance=semblance.n_samples_unpadded,

)

+ semblance.apply_exponent(1.0 / parent.image_mean_p)

semblance.normalize(images.cumulative_weight())

parent.semblance_stats.update(semblance.get_stats())

logger.debug("semblance stats: %s", parent.semblance_stats)

detection_idx, detection_semblance = semblance.find_peaks(

- height=parent.detection_threshold,

- prominence=parent.detection_threshold,

+ height=parent.detection_threshold**parent.image_mean_p,

+ prominence=parent.detection_threshold**parent.image_mean_p,

distance=round(parent.detection_blinding.total_seconds() * sampling_rate),

)

- if parent.plot_octree_surface:

- octree.map_semblance(semblance.maximum_node_semblance())

- parent._plot_octree_surface(octree, time=self.start_time)

-

if detection_idx.size == 0:

return [], semblance.get_trace()

@@ -550,7 +579,7 @@ async def search(

phase=image.phase,

event_time=time,

source=source_location,

- receivers=image.stations.stations,

+ receivers=image.stations,

)

arrivals_observed = image.search_phase_arrivals(

modelled_arrivals=[

@@ -565,7 +594,7 @@ async def search(

for mod, obs in zip(arrivals_model, arrivals_observed, strict=True)

]

detection.receivers.add_receivers(

- stations=image.stations.stations,

+ stations=image.stations,

phase_arrivals=phase_detections,

)
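`Search.start` now estimates the remaining time from the mean of the last 25 batch processing times. These are held in `_batch_proc_time`, a `deque(maxlen=25)`. Below is a stdlib sketch of that moving-window estimate. The `RemainingTimeEstimator` class is hypothetical.

```python
from collections import deque
from datetime import timedelta
from typing import Deque


class RemainingTimeEstimator:
    """Estimate remaining processing time from recent batch durations."""

    def __init__(self, window: int = 25) -> None:
        # Older durations drop out automatically once the window is full.
        self._durations: Deque[timedelta] = deque(maxlen=window)

    def add(self, duration: timedelta) -> None:
        self._durations.append(duration)

    def remaining(self, i_batch: int, n_batches: int) -> timedelta:
        """Mean recent batch duration times the number of batches left."""
        if not self._durations:
            return timedelta()
        mean = sum(self._durations, timedelta()) / len(self._durations)
        return mean * (n_batches - i_batch - 1)


estimator = RemainingTimeEstimator(window=2)
for seconds in (10, 20, 30):
    estimator.add(timedelta(seconds=seconds))
print(estimator.remaining(i_batch=7, n_batches=10))
# 0:00:50
```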

diff --git a/lassie/station_corrections.py b/lassie/station_corrections.py

index 6e6de66d..0616ffd6 100644

--- a/lassie/station_corrections.py

+++ b/lassie/station_corrections.py

@@ -313,14 +313,34 @@ def from_receiver(cls, receiver: Receiver) -> Self:

class StationCorrections(BaseModel):

rundir: DirectoryPath | None = Field(

default=None,

- description="The rundir to load the detections from",

+ description="Lassie rundir to calculate the corrections from.",

+ )

+ measure: Literal["median", "average"] = Field(

+ default="median",

+ description="Statistical measure for the traveltime delays. "

+ "Choose from `median` and `average`.",

+ )

+ weighting: ArrivalWeighting = Field(

+ default="mul-PhaseNet-semblance",

+ description="Weighting of the traveltime delays. Choose from `none`, "

+ "`PhaseNet`, `semblance`, `add-PhaseNet-semblance`"

+ " and `mul-PhaseNet-semblance`.",

)

- measure: Literal["median", "average"] = "median"

- weighting: ArrivalWeighting = "mul-PhaseNet-semblance"

- minimum_num_picks: PositiveInt = 5

- minimum_distance_border: PositiveFloat = 2000.0

- minimum_depth: PositiveFloat = 3000.0

+ minimum_num_picks: PositiveInt = Field(

+ default=5,

+ description="Minimum number of picks at a station required"

+ " to calculate station corrections.",

+ )

+ minimum_distance_border: PositiveFloat = Field(

+ default=2000.0,

+ description="Minimum distance of a detection to the octree border "

+ "in meters to be considered for correction.",

+ )

+ minimum_depth: PositiveFloat = Field(

+ default=3000.0,

+ description="Minimum depth of the detection to be considered for correction.",

+ )

_station_corrections: dict[str, StationCorrection] = PrivateAttr({})

_traveltime_delay_cache: dict[tuple[NSL, PhaseDescription], float] = PrivateAttr({})

@@ -410,8 +430,7 @@ def get_delay(self, station_nsl: NSL, phase: PhaseDescription) -> float:

Returns:

float: The traveltime delay in seconds.

"""

-

- def get_delay() -> float:

+ if (station_nsl, phase) not in self._traveltime_delay_cache:

try:

station = self.get_station(station_nsl)

except KeyError:

@@ -425,8 +444,6 @@ def get_delay() -> float:

return station.get_median_delay(phase, self.weighting)

raise ValueError(f"unknown measure {self.measure!r}")

- if (station_nsl, phase) not in self._traveltime_delay_cache:

- self._traveltime_delay_cache[station_nsl, phase] = get_delay()

return self._traveltime_delay_cache[station_nsl, phase]

def get_delays(

@@ -443,7 +460,9 @@ def get_delays(

Returns:

np.ndarray: The traveltime delays for the given stations and phase.

"""

- return np.array([self.get_delay(nsl, phase) for nsl in station_nsls])

+ return np.fromiter(

+ (self.get_delay(nsl, phase) for nsl in station_nsls), dtype=float

+ )

def save_plots(self, folder: Path) -> None:

folder.mkdir(exist_ok=True)

@@ -454,7 +473,7 @@ def save_plots(self, folder: Path) -> None:

filename=folder / f"corrections-{correction.station.pretty_nsl}.png"

)

- def save_csv(self, filename: Path) -> None:

+ def export_csv(self, filename: Path) -> None:

"""Save the station corrections to a CSV file.

Args:

@@ -464,10 +483,10 @@ def save_csv(self, filename: Path) -> None:

csv_data = [correction.get_csv_data() for correction in self]

columns = set(chain.from_iterable(data.keys() for data in csv_data))

with filename.open("w") as file:

- file.write(f"{', '.join(columns)}\n")

+ file.write(f"{','.join(columns)}\n")

for data in csv_data:

file.write(

- f"{', '.join(str(data.get(key, -9999.9)) for key in columns)}\n"

+ f"{','.join(str(data.get(key, -9999.9)) for key in columns)}\n"

)

def __iter__(self) -> Iterator[StationCorrection]:
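The refactored `get_delay` aims to memoise one delay per `(station_nsl, phase)` key, and `get_delays` now fills its array with `np.fromiter`. The sketch below shows that pattern under simplifying assumptions. `DelayTable` is a hypothetical stand-in for the station-corrections model. The pick layout, the zero fallback for stations without picks, and the sorted CSV header are also illustrative assumptions, not the project's API. For the cache to take effect, the computed value has to be written into it before it is returned.

```python
from __future__ import annotations

import csv
from pathlib import Path
from statistics import median
from typing import Iterable

import numpy as np


class DelayTable:
    """Hypothetical stand-in for StationCorrections: delays per (station NSL, phase)."""

    def __init__(
        self, picks: dict[tuple[str, str], list[float]], measure: str = "median"
    ) -> None:
        # Assumed layout: traveltime residuals in seconds per (NSL, phase).
        self.picks = picks
        self.measure = measure
        self._cache: dict[tuple[str, str], float] = {}

    def _compute(self, nsl: str, phase: str) -> float:
        delays = self.picks.get((nsl, phase))
        if not delays:
            return 0.0  # assumption: stations without picks get no correction
        if self.measure == "median":
            return float(median(delays))
        if self.measure == "average":
            return float(np.mean(delays))
        raise ValueError(f"unknown measure {self.measure!r}")

    def get_delay(self, nsl: str, phase: str) -> float:
        key = (nsl, phase)
        if key not in self._cache:
            # Store the value before returning it; returning early would
            # leave the cache empty and recompute on every call.
            self._cache[key] = self._compute(nsl, phase)
        return self._cache[key]

    def get_delays(self, nsls: Iterable[str], phase: str) -> np.ndarray:
        # np.fromiter builds a 1-D float array directly from the generator.
        return np.fromiter((self.get_delay(nsl, phase) for nsl in nsls), dtype=float)

    def export_csv(self, filename: Path) -> None:
        rows = [
            {"nsl": nsl, "phase": phase, "delay": self.get_delay(nsl, phase)}
            for nsl, phase in sorted(self.picks)
        ]
        # Sorting the column set gives a header order that is stable across runs.
        columns = sorted({key for row in rows for key in row})
        with filename.open("w", newline="") as file:
            # restval fills missing keys with the same sentinel the diff uses.
            writer = csv.DictWriter(file, fieldnames=columns, restval=-9999.9)
            writer.writeheader()
            writer.writerows(rows)
```

Sorting the columns matters because the diff builds `columns` from a `set`. A set's iteration order is not guaranteed to be alphabetical and can differ between interpreter runs for string keys.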

diff --git a/lassie/tracers/__init__.py b/lassie/tracers/__init__.py

index cd6e3ad5..eb6fc0d2 100644

--- a/lassie/tracers/__init__.py

+++ b/lassie/tracers/__init__.py

@@ -1,6 +1,7 @@

from __future__ import annotations

import logging

+from pathlib import Path

from typing import TYPE_CHECKING, Annotated, Iterator, Union

from pydantic import Field, RootModel

@@ -40,18 +41,30 @@ async def prepare(

octree: Octree,

stations: Stations,

phases: tuple[PhaseDescription, ...],

+ rundir: Path | None = None,

) -> None:

- logger.info("preparing ray tracers")

+ prepared_tracers = []

for phase in phases:

tracer = self.get_phase_tracer(phase)

- await tracer.prepare(octree, stations)

+ if tracer in prepared_tracers:

+ continue

+ tracer_phases = tracer.get_available_phases()

+ logger.info(

+ "preparing ray tracer %s for phases %s", tracer.tracer, ", ".join(tracer_phases)

+ )

+ await tracer.prepare(octree, stations, rundir)

+ prepared_tracers.append(tracer)

- def get_available_phases(self) -> tuple[str]:

+ def get_available_phases(self) -> tuple[str, ...]:

phases = []

for tracer in self:

phases.extend([*tracer.get_available_phases()])

if len(set(phases)) != len(phases):

- raise ValueError("A phase was provided twice")

+ duplicate_phases = {phase for phase in phases if phases.count(phase) > 1}

+ raise ValueError(

+ f"Phases {', '.join(sorted(duplicate_phases))} were provided more than once."

+ " Rename or remove the duplicate phases from the tracers."

+ )

return tuple(phases)

def get_phase_tracer(self, phase: str) -> RayTracer:

@@ -60,13 +73,16 @@ def get_phase_tracer(self, phase: str) -> RayTracer:

return tracer

raise ValueError(

f"No tracer found for phase {phase}."

- f" Available phases: {', '.join(self.get_available_phases())}"

+ " Please add a tracer for this phase or rename the phase to match a tracer."

+ f" Available phases: {', '.join(self.get_available_phases())}."

)

def __iter__(self) -> Iterator[RayTracer]:

yield from self.root

- def iter_phase_tracer(self) -> Iterator[tuple[PhaseDescription, RayTracer]]:

- for tracer in self:

- for phase in tracer.get_available_phases():

- yield (phase, tracer)

+ def iter_phase_tracer(

+ self, phases: tuple[PhaseDescription, ...]

+ ) -> Iterator[tuple[PhaseDescription, RayTracer]]:

+ for phase in phases:

+ tracer = self.get_phase_tracer(phase)

+ yield (phase, tracer)
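The new `prepare` loop prepares each tracer only once, even when it serves several requested phases. `get_available_phases` now reports which phases are duplicated. The following is a minimal sketch of both ideas, assuming hypothetical dataclass stand-ins (`Tracer`, `Tracers`) in place of the pydantic models. It uses `collections.Counter` to find duplicates in a single pass instead of calling `list.count` per element. It also checks for already-prepared tracers by identity, because equality on these dataclasses (as on pydantic models) compares field values.

```python
from __future__ import annotations

import asyncio
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Tracer:
    """Hypothetical stand-in for a RayTracer that serves several phases."""

    name: str
    phases: tuple[str, ...]
    prepare_calls: int = 0

    async def prepare(self) -> None:
        self.prepare_calls += 1  # placeholder for the real (expensive) setup


@dataclass
class Tracers:
    root: list[Tracer] = field(default_factory=list)

    def get_available_phases(self) -> tuple[str, ...]:
        phases = [phase for tracer in self.root for phase in tracer.phases]
        duplicates = sorted(p for p, n in Counter(phases).items() if n > 1)
        if duplicates:
            raise ValueError(
                f"Phases {', '.join(duplicates)} were provided more than once."
            )
        return tuple(phases)

    def get_phase_tracer(self, phase: str) -> Tracer:
        for tracer in self.root:
            if phase in tracer.phases:
                return tracer
        raise ValueError(f"No tracer found for phase {phase}.")

    async def prepare(self, phases: tuple[str, ...]) -> None:
        prepared: list[Tracer] = []
        for phase in phases:
            tracer = self.get_phase_tracer(phase)
            # Identity check: skip tracers already prepared for an earlier phase.
            if any(tracer is done for done in prepared):
                continue
            await tracer.prepare()
            prepared.append(tracer)


if __name__ == "__main__":
    a, b = Tracer("a", ("a:P", "a:S")), Tracer("b", ("b:P",))
    asyncio.run(Tracers([a, b]).prepare(("a:P", "a:S", "b:P")))
    print(a.prepare_calls, b.prepare_calls)  # 1 1
```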

diff --git a/lassie/tracers/base.py b/lassie/tracers/base.py

index dc654b02..b9167f64 100644

--- a/lassie/tracers/base.py

+++ b/lassie/tracers/base.py

@@ -10,6 +10,7 @@

if TYPE_CHECKING:

from datetime import datetime

+ from pathlib import Path

from lassie.models.station import Stations

from lassie.octree import Octree

@@ -24,7 +25,12 @@ class ModelledArrival(PhaseArrival):

class RayTracer(BaseModel):

tracer: Literal["RayTracer"] = "RayTracer"

- async def prepare(self, octree: Octree, stations: Stations):

+ async def prepare(

+ self,

+ octree: Octree,

+ stations: Stations,

+ rundir: Path | None = None,

+ ):

...

def get_available_phases(self) -> tuple[str, ...]:
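`RayTracer.prepare` now accepts an optional `rundir`. The sketch below shows one way a subclass might use it, under assumptions that do not come from the diff. `CachingTracer`, its JSON cache file, and the placeholder table are hypothetical. They illustrate persisting prepared data in the run directory when one is given and skipping persistence when it is `None`.

```python
from __future__ import annotations

import asyncio
import json
import tempfile
from pathlib import Path


class RayTracer:
    """Hypothetical base mirroring the optional `rundir` argument of prepare()."""

    async def prepare(
        self, octree: object, stations: object, rundir: Path | None = None
    ) -> None:
        ...


class CachingTracer(RayTracer):
    """Hypothetical tracer that persists its prepared table inside `rundir`."""

    cache_name = "tracer-cache.json"  # hypothetical file name

    def __init__(self) -> None:
        self.table: dict[str, float] | None = None
        self.computed = False

    async def prepare(
        self, octree: object, stations: object, rundir: Path | None = None
    ) -> None:
        cache = rundir / self.cache_name if rundir is not None else None
        if cache is not None and cache.exists():
            # Reuse the result of an earlier preparation in the same run directory.
            self.table = json.loads(cache.read_text())
            return
        self.table = {"example": 1.0}  # placeholder for the expensive computation
        self.computed = True
        if cache is not None:
            cache.write_text(json.dumps(self.table))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        first, second = CachingTracer(), CachingTracer()
        asyncio.run(first.prepare(None, None, rundir=Path(tmp)))
        asyncio.run(second.prepare(None, None, rundir=Path(tmp)))
        print(first.computed, second.computed)  # True False
```

Keeping `rundir` optional with a `None` default lets existing callers of `prepare(octree, stations)` keep working unchanged.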

diff --git a/lassie/tracers/cake.py b/lassie/tracers/cake.py

index d3e3bc9b..278208fa 100644

--- a/lassie/tracers/cake.py

+++ b/lassie/tracers/cake.py

@@ -2,6 +2,7 @@

import logging

import re

+import struct

import zipfile

from datetime import datetime, timedelta

from functools import cached_property

@@ -20,6 +21,7 @@

Field,

FilePath,

PrivateAttr,

+ ValidationError,

constr,

model_validator,

)

@@ -91,21 +93,21 @@ class CakeArrival(ModelledArrival):

class EarthModel(BaseModel):

filename: FilePath | None = Field(

- DEFAULT_VELOCITY_MODEL_FILE,

+ default=DEFAULT_VELOCITY_MODEL_FILE,

description="Path to velocity model.",

)

format: Literal["nd", "hyposat"] = Field(

- "nd",

- description="Format of the velocity model. nd or hyposat is supported.",

+ default="nd",