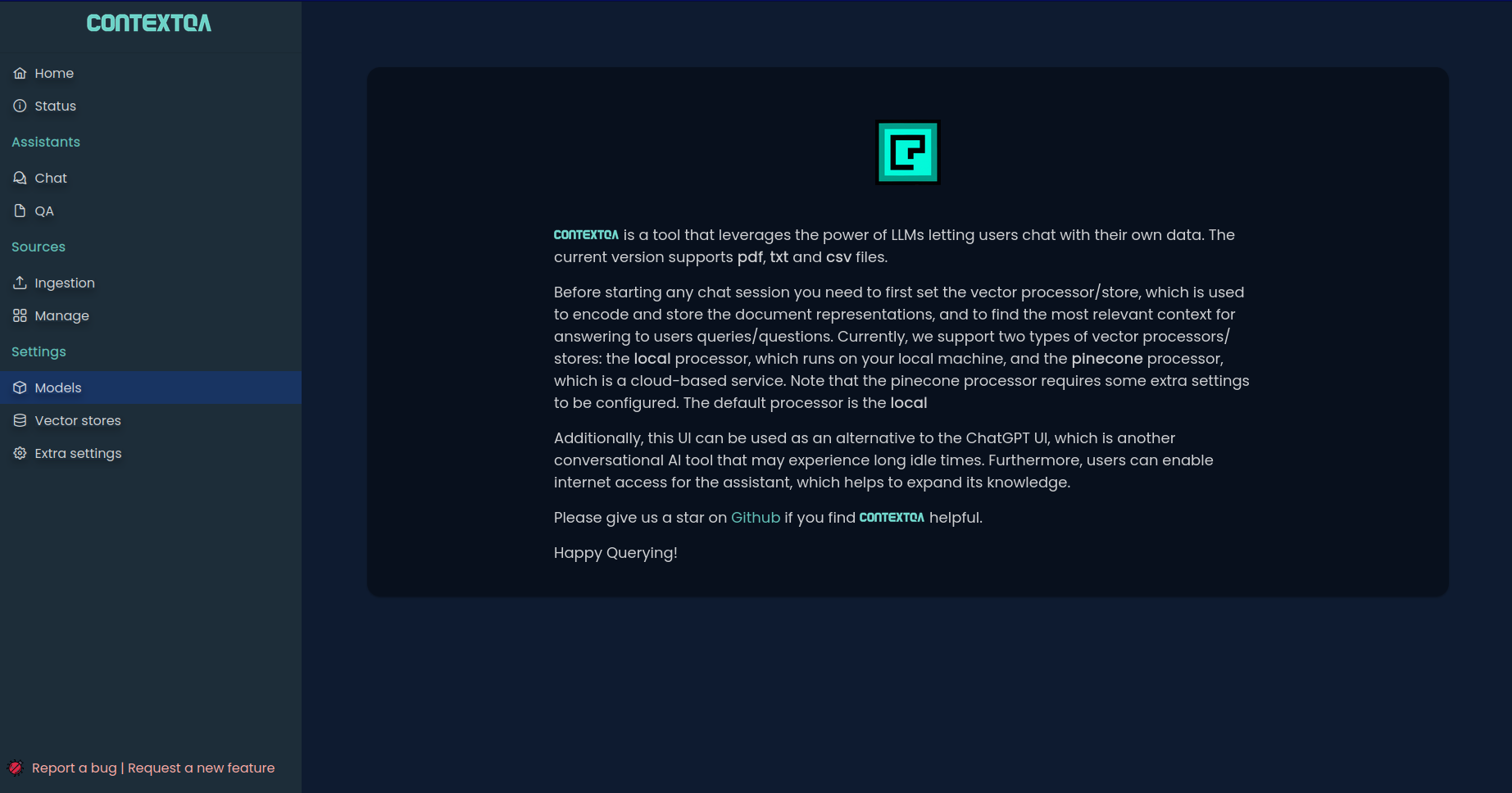

Chat with your data by leveraging the power of LLMs and vector databases

ContextQA is a modern utility that provides a ready-to-use LLM-powered application. It is built on top of giants such as FastAPI and LangChain.

Key features include:

- Regular chat supporting knowledge expansion via internet access

- Conversational QA with relevant sources

- Streaming responses

- Ingestion of data sources used in QA sessions

- Data sources management

- LLM settings: Configure parameters such as provider, model, temperature, etc. Currently, the supported providers are OpenAI and Google.

- Vector DB settings: Adjust parameters such as engine, chunk size, chunk overlap, etc. Currently, the supported engines are ChromaDB and Pinecone.

- Other settings: Choose between embedded or external (Redis) LLM memory, and configure the media directory, database credentials, etc.
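The chunk size and chunk overlap settings control how ingested documents are cut into pieces before they are embedded and stored in the vector DB. The sketch below illustrates the idea with a simple fixed-width character splitter. `split_into_chunks` is a hypothetical helper written for illustration, not part of ContextQA, and the actual ingestion pipeline may split on separators such as paragraphs or sentences rather than fixed character windows.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into fixed-size character windows that share `chunk_overlap` characters.

    Hypothetical helper for illustration only; not ContextQA's implementation.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# Each chunk repeats the last character of the previous one (overlap of 1).
print(split_into_chunks("abcdefghij", chunk_size=4, chunk_overlap=1))
# ['abcd', 'defg', 'ghij']
```

A larger overlap keeps more context shared between neighbouring chunks, at the cost of storing more (partly duplicated) text in the vector DB.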

```bash
pip install contextqa
```

On installation, contextqa provides a CLI tool:

```bash
contextqa init
```

Check out the available parameters by running the following command:

```bash
contextqa init --help
```

Running `contextqa init` produces output similar to the following:

```
$ contextqa init
2024-07-08 12:50:55,304 - INFO - Using SQLite
INFO: Started server process [29337]
INFO: Waiting for application startup.
2024-07-08 12:50:55,450 - INFO - Running initial migrations...
2024-07-08 12:50:55,452 - INFO - Context impl SQLiteImpl.
2024-07-08 12:50:55,452 - INFO - Will assume non-transactional DDL.
2024-07-08 12:50:55,465 - INFO - Running upgrade -> 0bb7d192c063, Initial migration
2024-07-08 12:50:55,471 - INFO - Running upgrade 0bb7d192c063 -> b7d862d599fe, Support for store types and related indexes
2024-07-08 12:50:55,487 - INFO - Running upgrade b7d862d599fe -> 3058bf204a05, unique index name
INFO: Application startup complete.
INFO: Uvicorn running on http://localhost:8080 (Press CTRL+C to quit)
```

Open your browser at http://localhost:8080. You will see the initialization stepper, which will guide you through the initial configuration.

If the initial configuration has already been set, you will see the main ContextQA view instead.
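If you script the setup (for example in a container entrypoint or CI job), it can help to wait until the server started by `contextqa init` accepts connections before opening the browser. This is a minimal sketch using only the Python standard library. `wait_for_server` is a hypothetical helper, not part of ContextQA; it assumes the default address http://localhost:8080 shown in the log and only checks that something answers HTTP there, not that the application is fully configured.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url: str = "http://localhost:8080",
                    timeout: float = 30.0,
                    interval: float = 1.0) -> bool:
    """Poll `url` until it answers an HTTP request or `timeout` seconds pass.

    Hypothetical helper, not part of ContextQA. Any HTTP response, even an
    error status such as 404, counts as "up" because it proves the server
    is accepting connections.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Each attempt is itself capped at `interval` seconds.
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            return True  # the server responded, just not with a 2xx status
        except (urllib.error.URLError, OSError):
            pass  # not listening yet (e.g. connection refused or timed out)
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_server()` with the defaults returns `True` once the Uvicorn server from the log is listening, or `False` after about 30 seconds if it never comes up.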