This guide describes the steps needed to train models on Google Cloud. It assumes you are familiar with the Google Cloud console and have installed the Google Cloud SDK. The following parts of the console are involved:

- Compute

- Billing

- Storage

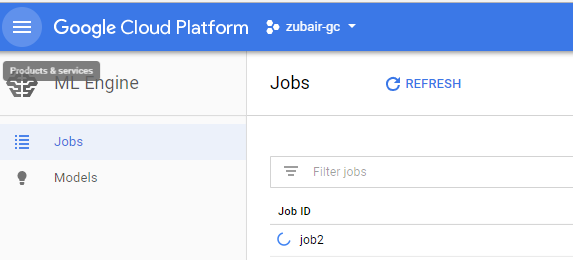

- ML Engine

Replace the bucket name `zubair-gc-bucket` with your own bucket name wherever it appears below.
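One way to avoid editing each occurrence by hand is to keep the bucket name in a single place and derive the bucket-dependent strings from it. The sketch below is a hypothetical helper, not part of the original workflow: `BUCKET`, `upload_command` and `job_dir` are illustrative names. The path layout (`datasets`, `jobs/<job>`) mirrors the commands used in this guide.

```python
# Hypothetical helper: keep the bucket name in one constant and derive the
# bucket-dependent strings used in this guide from it.
BUCKET = "zubair-gc-bucket"  # replace with your own bucket name


def upload_command(bucket: str) -> list:
    """Argument list for the gsutil call that copies ./datasets to the bucket."""
    return ["gsutil", "-m", "cp", "-r", "datasets", "gs://{}/datasets".format(bucket)]


def job_dir(bucket: str, job: str) -> str:
    """Value for gcloud's --job-dir flag, e.g. gs://<bucket>/jobs/job7."""
    return "gs://{}/jobs/{}".format(bucket, job)


if __name__ == "__main__":
    # Print each command on one line so it can be pasted into any shell
    print(" ".join(upload_command(BUCKET)))
    print("--job-dir=" + job_dir(BUCKET, "job7"))
```

Printing the joined command works here because none of the arguments contain spaces. Arguments with spaces would need shell-specific quoting.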

Copy your local `datasets` folder to the bucket:

```shell
gsutil -m cp -r datasets gs://zubair-gc-bucket/datasets
```

- Create a **trainer** folder and move all your project files into it

- Create an empty `__init__.py` file inside the **trainer** folder

- Add `setup.py` to the project root, outside the **trainer** folder

- Add the following to the `cloudml-gpu.yaml` configuration file inside the **trainer** folder

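```yaml
trainingInput:
  scaleTier: BASIC_GPU
  runtimeVersion: "1.4"
  pythonVersion: "3.5"
```

Contents of `setup.py`:

```python
'''Cloud ML Engine package configuration.'''

from setuptools import setup, find_packages

setup(name='shallownet_keras',
      version='1.0',
      packages=find_packages(),
      include_package_data=True,
      description='Model Training using Keras on Google Cloud',
      author='Zubair',
      author_email='your email',
      license='MIT',
      install_requires=[
          'keras',
          'h5py'],
      zip_safe=False)
```

ML Engine passes the `--job-dir` value to the training script, so add that argument to the script's argument parser (`ap`):

```python
# `ap` is the training script's existing argparse.ArgumentParser
ap.add_argument(
    '--job-dir',
    help='Cloud storage bucket to export the model and store temp files')

args = vars(ap.parse_args())
```

After training, copy the saved model from the local disk to the job directory in Cloud Storage. Note that `argparse` stores `--job-dir` under the key `job_dir`, and that the file must be copied in binary mode because an `.hdf5` file is not text:

```python
from tensorflow.python.lib.io import file_io

# Only copy when a job directory was given
if args["job_dir"]:
    with file_io.FileIO(args["model"], mode='rb') as input_f:
        with file_io.FileIO(args["job_dir"] + '/' + args["model"], mode='wb+') as output_f:
            output_f.write(input_f.read())
```

Submit the training job with the command below. It is written on a single line because cmd on Windows does not handle multi-line commands: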

```shell
gcloud ml-engine jobs submit training job7 --package-path=./trainer --module-name=trainer.shallownet_train --job-dir=gs://zubair-gc-bucket/jobs/job7 --region=us-central1 --config=trainer/cloudml-gpu.yaml --runtime-version="1.4" -- --job_name="zubair-gc-job7" --dataset=dataset/animals --model=shallownet_weights1.hdf5
```
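Flags before the bare `--` configure gcloud itself. Flags after it are handed to the training script. The sketch below parses an argument list of the assumed shape with `argparse` to show what the script ends up with. Two things are assumptions: that ML Engine forwards gcloud's `--job-dir` value as a `--job-dir` flag, and the exact order of the forwarded arguments. `build_parser` is a hypothetical name. Its flags mirror the ones used in this guide.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser with the flags this guide's trainer receives."""
    ap = argparse.ArgumentParser()
    ap.add_argument("--job_name", help="job name passed after '--' in the submit command")
    ap.add_argument("--dataset", help="path to the input dataset")
    ap.add_argument("--model", help="local file name of the saved model weights")
    ap.add_argument(
        "--job-dir",
        default="",
        help="Cloud storage bucket to export the model and store temp files")
    return ap


# Assumed argv seen by the trainer: the user flags after '--' plus the
# job directory that gcloud forwards from its own --job-dir flag.
argv = [
    "--job_name=zubair-gc-job7",
    "--dataset=dataset/animals",
    "--model=shallownet_weights1.hdf5",
    "--job-dir=gs://zubair-gc-bucket/jobs/job7",
]

args = vars(build_parser().parse_args(argv))

# argparse turns '--job-dir' into the key 'job_dir' (the dash becomes an
# underscore), so looking up args["--job-dir"] would raise KeyError.
print(args["job_dir"])  # gs://zubair-gc-bucket/jobs/job7
print(sorted(args))     # ['dataset', 'job_dir', 'job_name', 'model']
```

You can pass an explicit list to `parse_args` like this to check a parser locally before you submit a job.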