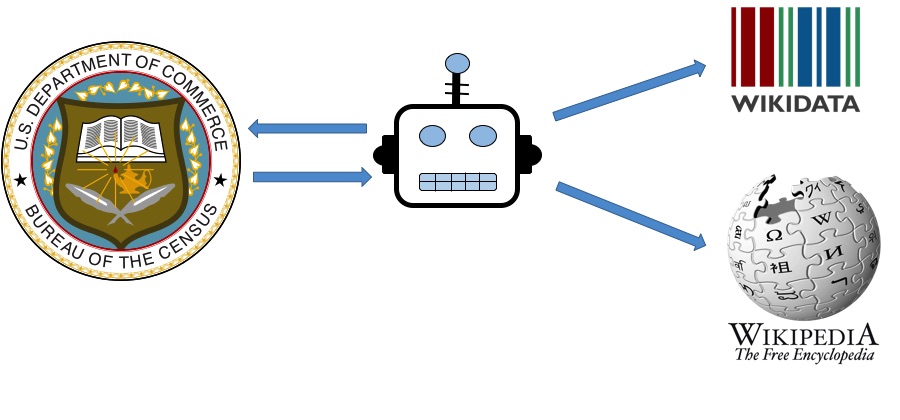

This suite of bots interacts with Wikidata and Wikipedia using the Pywikibot library. The bots are designed specifically for pushing Census data to specified wikis and were created in collaboration with the U.S. Census Bureau.

To install, create a virtual environment and install the dependencies:

```shell
pip install virtualenv
cd /path/to/repository
virtualenv venv
source venv/bin/activate
pip install -r requirements.txt
```

To use these bots, you must create an account on each wiki and obtain bot approval before the account can operate as a bot. See the bot approval documentation for Wikidata and for Wikipedia for details.

You will need to create two files to define the login credentials for the wiki bots. Templates for these files are provided, but the real files are excluded using .gitignore for security.

The first file, user-config.py, contains the information Pywikibot uses to log a user in to a specified wiki, including your user account information. A sample file (user-config.py.sample) is provided.
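For orientation, a minimal user-config.py in Pywikibot's standard format might look like the fragment below. It is a sketch, not a copy of this repository's template: the account name ExampleBot is a placeholder, and user-config.py.sample remains the authoritative starting point. In Pywikibot's Wikidata family, the site code `wikidata` is www.wikidata.org and `test` is test.wikidata.org.

```python
# user-config.py (sketch; "ExampleBot" is a placeholder account name)
family = 'wikidata'
mylang = 'wikidata'

# Bot account per family and site code; login.py -all uses these entries
usernames['wikidata']['wikidata'] = 'ExampleBot'   # www.wikidata.org
usernames['wikidata']['test'] = 'ExampleBot'       # test.wikidata.org
usernames['wikipedia']['en'] = 'ExampleBot'        # en.wikipedia.org

# Optional: tell Pywikibot where to read stored passwords from
password_file = 'user-password.py'
```

This fragment is not run on its own: Pywikibot executes user-config.py in a namespace where `usernames` is already defined.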

The second file, user-password.py, contains passwords for your bots and is optional. A sample file (user-password.py.sample) is provided.
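Pywikibot's password file holds one tuple per line. The sketch below assumes the `(family, code, username, password)` form; Pywikibot also accepts shorter `(username, password)` and `(code, username, password)` forms. Check user-password.py.sample for the layout this project expects, and never commit the real file.

```python
# user-password.py (sketch; placeholder account and passwords)
('wikidata', 'wikidata', 'ExampleBot', 'your-password-here')
('wikidata', 'test', 'ExampleBot', 'your-password-here')
('wikipedia', 'en', 'ExampleBot', 'your-password-here')
```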

After the above files have been set up, you can run the login script (login.py) to log in to all of your wiki accounts:

```shell
python login.py -all
```

If the script is successful, you should see a message confirming that you are logged in to each wiki specified in your configuration.

To log in to a specific account, specify the family and language of your target wiki:

```shell
python login.py -family:wikipedia -lang:en
```

To log out of all of these wikis, run:

```shell
python login.py -all -logout
```

You will also need an app_config.ini file containing the API key you receive after registering with the Census Bureau. To obtain a Census API key, refer to the Census API documentation. A sample file (app_config.ini.sample) is provided.
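The following is a minimal sketch of how the key in app_config.ini could be read with Python's standard `configparser`. The section name `census` and key name `api_key` are hypothetical; use whatever names app_config.ini.sample actually defines. The function takes the file's text so it is easy to test; in practice you would pass it `Path("app_config.ini").read_text()`.

```python
import configparser

# Hypothetical section/key names: check app_config.ini.sample for the real ones.
SECTION = "census"
KEY = "api_key"


def read_census_api_key(ini_text: str) -> str:
    """Return the Census API key stored in the given INI-formatted text."""
    # interpolation=None so a '%' in a value is not treated as a placeholder
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    try:
        key = parser[SECTION][KEY].strip()
    except KeyError as exc:
        raise ValueError(f"missing '{KEY}' in section [{SECTION}]") from exc
    if not key:
        raise ValueError("Census API key is empty")
    return key


if __name__ == "__main__":
    sample = "[census]\napi_key = YOUR_KEY_HERE\n"
    print(read_census_api_key(sample))  # YOUR_KEY_HERE
```

Failing loudly on a missing or empty key surfaces a configuration mistake before the bot makes any API requests.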

This application uses data configuration files that tell the bots where and how to look for source data, and how to push that data to the wikis. These files are in the /data directory and are split into Wikidata production and test configurations: data.json is for the Wikidata production site and data_test.json is for the test site (note that these files are currently not used for Wikipedia). They are populated with the initial configurations used for these bots, but you may change or add to them as needed. The files are in JSON format, and their schema is defined in census_bot_data.schema.json.
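As a rough illustration of the production/test split, the sketch below picks data_test.json in test mode and data.json otherwise, then loads it. The function names are hypothetical and the bots may select the file differently. A check against census_bot_data.schema.json could be layered on top with a JSON Schema validator such as the third-party `jsonschema` package; it is left out to keep the sketch dependency-free.

```python
import json
from pathlib import Path
from typing import Any


def data_config_path(data_dir: Path, test_mode: bool) -> Path:
    """data_test.json targets the Wikidata test site; data.json targets production."""
    return data_dir / ("data_test.json" if test_mode else "data.json")


def load_data_config(data_dir: Path, test_mode: bool) -> Any:
    """Load the Wikidata data configuration for the selected site."""
    path = data_config_path(data_dir, test_mode)
    with path.open(encoding="utf-8") as fh:
        return json.load(fh)
```

Usage: `config = load_data_config(Path("data"), test_mode=True)`.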

Lastly, reference.json lists the wiki property tags used in the main data files along with what each property represents, to make those tags easier to understand.
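If reference.json is a flat object mapping property IDs to descriptions (an assumption; its actual layout may differ), looking up the tags used in a data file takes only a few lines. P1082 (population) and P585 (point in time) are real Wikidata properties used here as sample entries; `describe_properties` is a hypothetical helper.

```python
import json
from typing import Dict, List

MISSING = "<not in reference.json>"


def describe_properties(reference: Dict[str, str], tags: List[str]) -> Dict[str, str]:
    """Map each property tag to its description, flagging tags with no entry."""
    return {tag: reference.get(tag, MISSING) for tag in tags}


if __name__ == "__main__":
    # Assumed shape of reference.json: {"<property id>": "<meaning>", ...}
    reference = json.loads('{"P1082": "population", "P585": "point in time"}')
    print(describe_properties(reference, ["P1082", "P585", "P0"]))
```

Flagging unknown tags instead of raising makes it easy to spot data-file entries that reference.json does not yet document.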

Once all setup has been completed, the Wikidata bot can be run by executing wikidata_bot.py and the Wikipedia bot can be run by executing wikipedia_bot.py.

The Wikidata and Wikipedia bots can be run in different modes. Run either script with --help for more information on argument usage. The following modes are available:

Pass -m t to run the script in test mode. For the Wikidata bot, this makes the bot communicate with the test site instead of the production site; to have pages to test against, you must create test pages there and make sure your configuration file refers to the proper values. For the Wikipedia bot, test mode uses the test data defined in the test_data parameter in the code.

Pass -m p to run the script in production mode (i.e., use the main site for Wikidata and use API data for Wikipedia).

Pass -d to run the script in debug mode, which lets you run it against the test or production site without actually making any edits.
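The mode flags above could be modelled with `argparse` roughly as follows. This is a hypothetical sketch, not the bots' actual parser: making -m required and the `mode`/`debug` destination names are assumptions, so run `python wikidata_bot.py --help` for the real options.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the -m and -d options described above."""
    parser = argparse.ArgumentParser(description="Census wiki bot (sketch)")
    parser.add_argument("-m", dest="mode", choices=["t", "p"], required=True,
                        help="t = test mode, p = production mode")
    parser.add_argument("-d", dest="debug", action="store_true",
                        help="debug mode: run without making any edits")
    return parser


def wikidata_site(mode: str) -> str:
    """Wikidata host the bot talks to: the test site in test mode, else production."""
    return "test.wikidata.org" if mode == "t" else "www.wikidata.org"
```

With this parser, `python wikidata_bot.py -m t -d` would correspond to `build_parser().parse_args(["-m", "t", "-d"])`: test site, with edits disabled. Using `choices` makes argparse reject any other mode value up front.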

Logs of bot actions are written to the /logs directory. Log filenames are prefixed with censusbot-log+YYYYMMDD.
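To find the logs for a given day, you can glob on the date-stamped prefix. This sketch assumes the prefix is `censusbot-log` followed directly by the date; if the real filenames use a literal `+` or another separator, adjust `log_prefix_for`. Both helper names are hypothetical.

```python
import datetime as dt
from pathlib import Path
from typing import List

# Assumption: "censusbot-log" is followed directly by the YYYYMMDD date.
LOG_PREFIX = "censusbot-log"


def log_prefix_for(day: dt.date) -> str:
    """Filename prefix for the given day, e.g. censusbot-log20200401."""
    return f"{LOG_PREFIX}{day:%Y%m%d}"


def logs_for_day(log_dir: Path, day: dt.date) -> List[Path]:
    """Return the log files in log_dir whose names start with that day's prefix."""
    return sorted(log_dir.glob(log_prefix_for(day) + "*"))
```

Usage: `logs_for_day(Path("logs"), dt.date.today())`.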

The bot searches for the values specified in the data configuration files (as explained above). If a search on the wiki returns exactly one result, the bot opens that result's page and checks whether the statement it is instructed to look for is present. If it is, the bot checks the claims for that statement and deletes any claim with no point-in-time value. It then looks for entries referring to the specified point-in-time year and checks each one for completeness; any incomplete entry is deleted and replaced with a new, complete entry. If no entries are present for the specified statement or point in time, the bot adds one.
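The claim-update rules above can be sketched as a pure function that plans edits for one statement. This is an illustration under stated assumptions, not the bot's code: `SimpleClaim` is a hypothetical stand-in for a Wikidata claim (not Pywikibot's `Claim` class), "complete" is assumed to mean "has the expected value and at least one reference", and the sketch creates at most one replacement entry per year.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class SimpleClaim:
    """Hypothetical stand-in for a Wikidata claim."""
    value: Optional[int]
    point_in_time: Optional[int]  # year from the point-in-time qualifier, if any
    references: List[str] = field(default_factory=list)


def is_complete(claim: SimpleClaim, expected_value: int) -> bool:
    # Assumption: "complete" = expected value plus at least one reference.
    return claim.value == expected_value and bool(claim.references)


def plan_edits(claims: List[SimpleClaim], year: int, expected_value: int,
               reference: str) -> List[Tuple[str, SimpleClaim]]:
    """Return ("delete", claim) and ("create", claim) actions for one statement."""
    edits: List[Tuple[str, SimpleClaim]] = []
    # 1. Claims without a point-in-time value are deleted.
    edits += [("delete", c) for c in claims if c.point_in_time is None]
    # 2. Entries for the target year are checked; incomplete ones are deleted.
    same_year = [c for c in claims if c.point_in_time == year]
    incomplete = [c for c in same_year if not is_complete(c, expected_value)]
    edits += [("delete", c) for c in incomplete]
    # 3. If no complete entry remains (or none existed), create one.
    if len(incomplete) == len(same_year):
        edits.append(("create", SimpleClaim(expected_value, year, [reference])))
    return edits
```

Returning a plan instead of editing directly mirrors how debug mode can report intended changes without touching the wiki.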


This README contains a repository image which uses logos for the U.S. Census Bureau, Wikidata, and Wikipedia.