Optimizer for configuration of hyperparameters in neural networks.

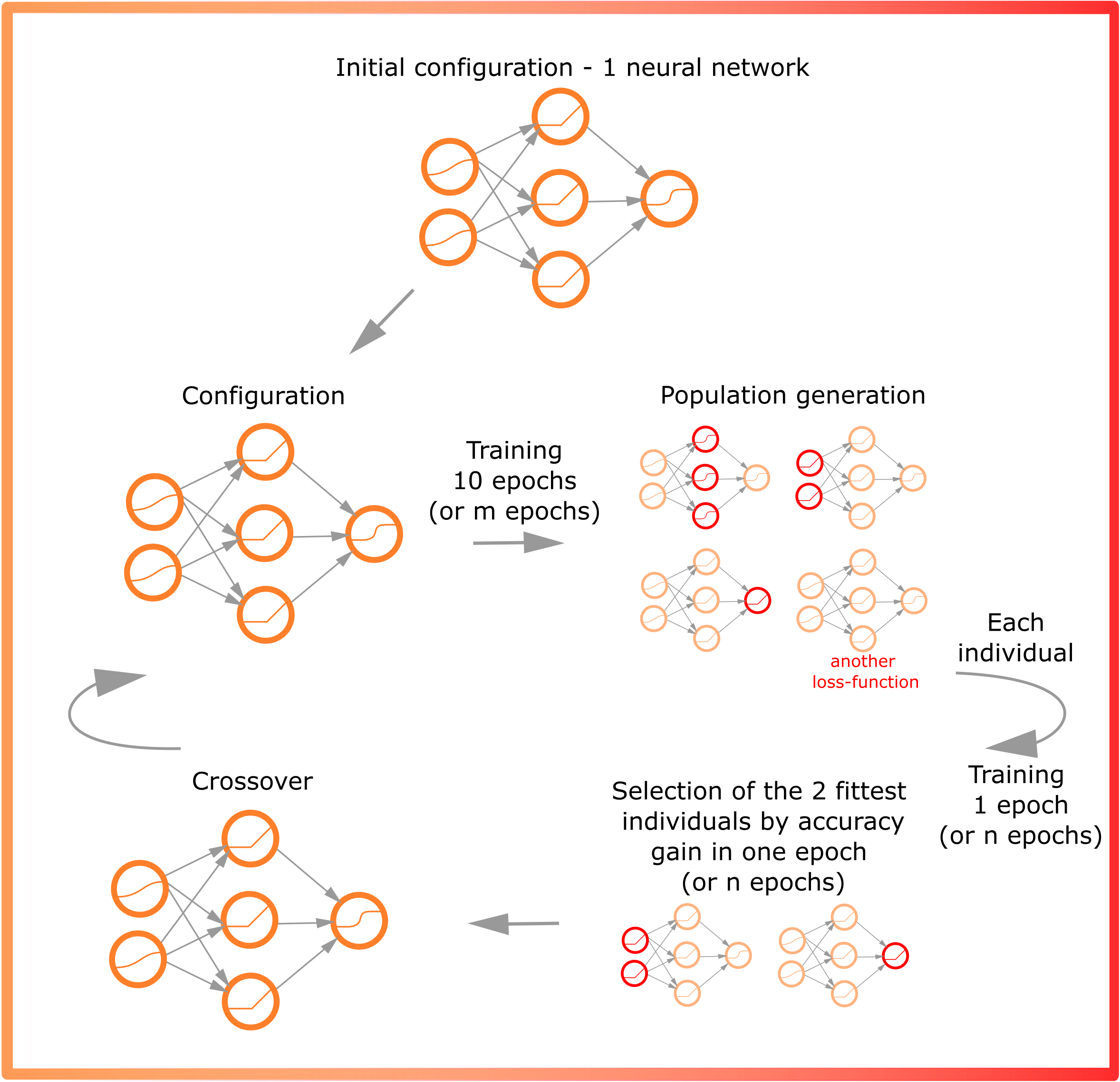

What does this library do? - The module optimizes the hyperparameters of a neural network with a predefined architecture.

What deep learning libraries can this module work with? - PyTorch.

What algorithm is used for optimization? - An evolutionary algorithm with mutation and crossover operators. The neural network is trained continuously throughout the evolutionary process.
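The idea of an evolutionary search in which the network keeps training between generations can be illustrated with a small dependency-free sketch. This is a hypothetical illustration, not MIHA's actual implementation or API: the toy linear model, the `SPACE` search space, and the functions `train`, `crossover`, `mutate`, and `evolve` are all assumptions made for the example. Each individual carries both hyperparameters (learning rate and momentum) and model weights. The weights are never reset between generations, and each child inherits the trained weights of one parent.

```python
import random

# Hypothetical toy dataset: points on the line y = 3x + 1 for x in [-1, 1].
DATA = [(x / 10, 3 * (x / 10) + 1) for x in range(-10, 11)]

# Hypothetical search space: (lower, upper) bounds for each hyperparameter.
SPACE = {"lr": (1e-3, 0.5), "momentum": (0.0, 0.9)}


def loss(w, b):
    """Mean squared error of the linear model w * x + b on DATA."""
    return sum((w * x + b - y) ** 2 for x, y in DATA) / len(DATA)


def train(ind, steps=5):
    """Continue training the individual's weights with its own hyperparameters
    (gradient descent with momentum); the weights are never reset."""
    w, b, vw, vb = ind["w"], ind["b"], ind["vw"], ind["vb"]
    for _ in range(steps):
        gw = sum(2 * (w * x + b - y) * x for x, y in DATA) / len(DATA)
        gb = sum(2 * (w * x + b - y) for x, y in DATA) / len(DATA)
        vw = ind["momentum"] * vw - ind["lr"] * gw
        vb = ind["momentum"] * vb - ind["lr"] * gb
        w, b = w + vw, b + vb
    ind.update(w=w, b=b, vw=vw, vb=vb)


def random_individual(rng):
    ind = {k: rng.uniform(lo, hi) for k, (lo, hi) in SPACE.items()}
    ind.update(w=0.0, b=0.0, vw=0.0, vb=0.0)  # untrained weights and optimizer state
    return ind


def crossover(a, b, rng):
    """Uniform crossover: each hyperparameter comes from either parent;
    the child inherits parent a's trained weights and optimizer state."""
    child = dict(a)
    for key in SPACE:
        child[key] = rng.choice([a[key], b[key]])
    return child


def mutate(ind, rng, scale=0.1):
    """Gaussian mutation of one randomly chosen hyperparameter, clipped to its bounds."""
    child = dict(ind)
    key = rng.choice(list(SPACE))
    lo, hi = SPACE[key]
    child[key] = min(hi, max(lo, child[key] + rng.gauss(0, scale * (hi - lo))))
    return child


def evolve(pop_size=8, generations=10, seed=0):
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        for ind in population:
            train(ind)  # training continues across generations
        population.sort(key=lambda ind: loss(ind["w"], ind["b"]))
        elite = population[: pop_size // 2]  # keep the better half
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = elite + children
    return min(population, key=lambda ind: loss(ind["w"], ind["b"]))


best = evolve()
print(f"lr={best['lr']:.3f} momentum={best['momentum']:.3f} "
      f"loss={loss(best['w'], best['b']):.5f}")
```

In a real PyTorch setting, the weights would presumably be a model's `state_dict` and `train` would run a few epochs with a PyTorch optimizer; the scalar toy model only keeps the sketch self-contained.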

Requirements:

- python>=3.7
- numpy
- cudatoolkit==10.2
- torchvision==0.7.0
- pytorch==1.6.0

Description of the submodules:

For now, all the necessary descriptions can be found in the docstrings.

Examples of how to run the algorithm:

- FNN classification task: MNIST classification (the effectiveness of MIHA is compared with the Optuna framework)

- CNN regression task: gap-filling in remote sensing data (the effectiveness of MIHA is compared with training the initial neural network without a hyperparameter search) (in Russian)

Feel free to contact us:

- Mikhail Sarafanov | [email protected]