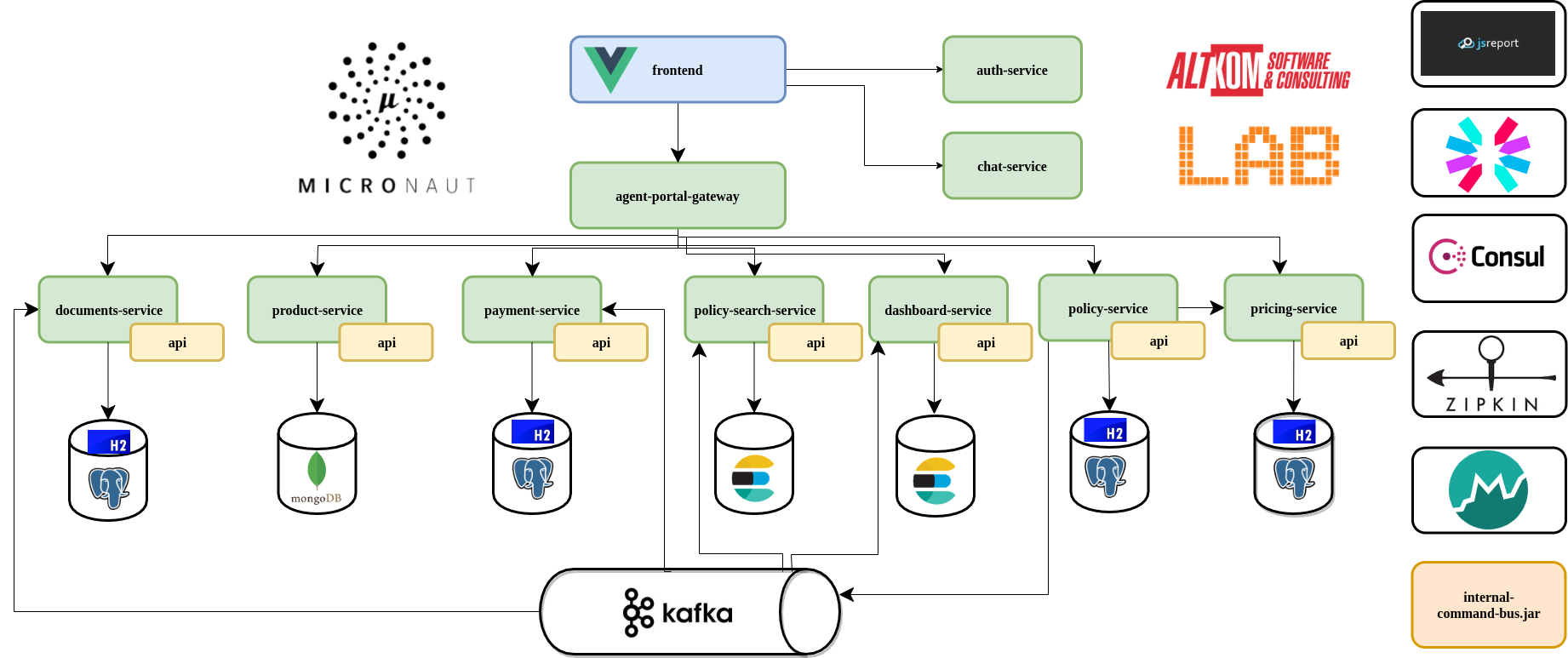

This is an example of a very simplified insurance sales system made in a microservice architecture using Micronaut.

A comprehensive guide describing the architecture, applied design patterns and technologies can be found on our blog in the article Building Microservices with Micronaut. We encourage you to read it, because this README is only a summary of that information.

We have recently upgraded to Java 14 (you must have JDK 14 to build and run the project).

Other articles about microservices that may be of interest:

- Building Business Dashboards with Micronaut and Elasticsearch Aggregations Framework

- Simplify Data Access Code With Micronaut Data

- Micronaut with RabbitMQ integration

- CQRS and Event Sourcing Intro For Developers

- From monolith to microservices – to migrate or not to migrate?

- Event Storming — innovation in IT projects

- [SLIDES] Building microservices with Micronaut - practical approach

- agent-portal-gateway - implementation of the Gateway pattern from the EAA Catalog.

  The complexity of the “business microservices” is hidden behind the Gateway pattern. This component is responsible for redirecting requests to the appropriate services based on the configuration. The frontend application communicates only with this component. It also shows the usage of non-blocking declarative HTTP clients.

- payment-service - main responsibilities: create a Policy Account, show the Policy Account list, register incoming payments from a bank statement file.

  This module manages policy accounts. Once a policy is created, an account is created in this service with the expected money income. payment-service also implements a scheduled process in which a CSV file with payments is imported and the payments are assigned to policy accounts. This component shows asynchronous communication between services using Kafka and the ability to create background jobs using Micronaut. It also features database access using JPA.

- policy-service - creates offers and converts offers to insurance policies.

  In this service we demonstrate the CQRS pattern for better isolation of read and write operations. The service demonstrates two ways of communication between services: synchronous REST-based calls to pricing-service through an HTTP client to get the price, and asynchronous event-based communication using Apache Kafka to publish information about newly created policies. This service also accesses an RDBMS using JPA.

- policy-search-service - provides insurance policy search.

  This module listens for events from Kafka, converts the received DTOs to a “read model” (used later in search) and saves it in the database (ElasticSearch). It also exposes a REST endpoint for searching policies.

- pricing-service - calculates the price for a selected insurance product.

  For each product a tariff should be defined. The tariff is a set of rules on the basis of which the price is calculated. The MVEL language is used to define the rules. During the policy purchase process, policy-service connects to this service to calculate the price. The price is calculated based on the user’s answers to the defined questions.

- product-service - simple insurance product catalog.

  Information about products is stored in MongoDB. Each product has a code, name, image, description, cover list and question list (the answers affect the price defined by the tariff). This module shows the usage of the reactive Mongo client.

- auth-service - JWT-based authentication service that provides login functionality.

  Users are authenticated based on login and password, and a JWT token with their privileges is created and returned. This service shows the built-in Micronaut support for JWT-based security.

- documents-service - service built with Kotlin. Responsible for generating a PDF document when a new policy event is received.

- chat-service - example of WebSocket usage: a chat for salesmen.

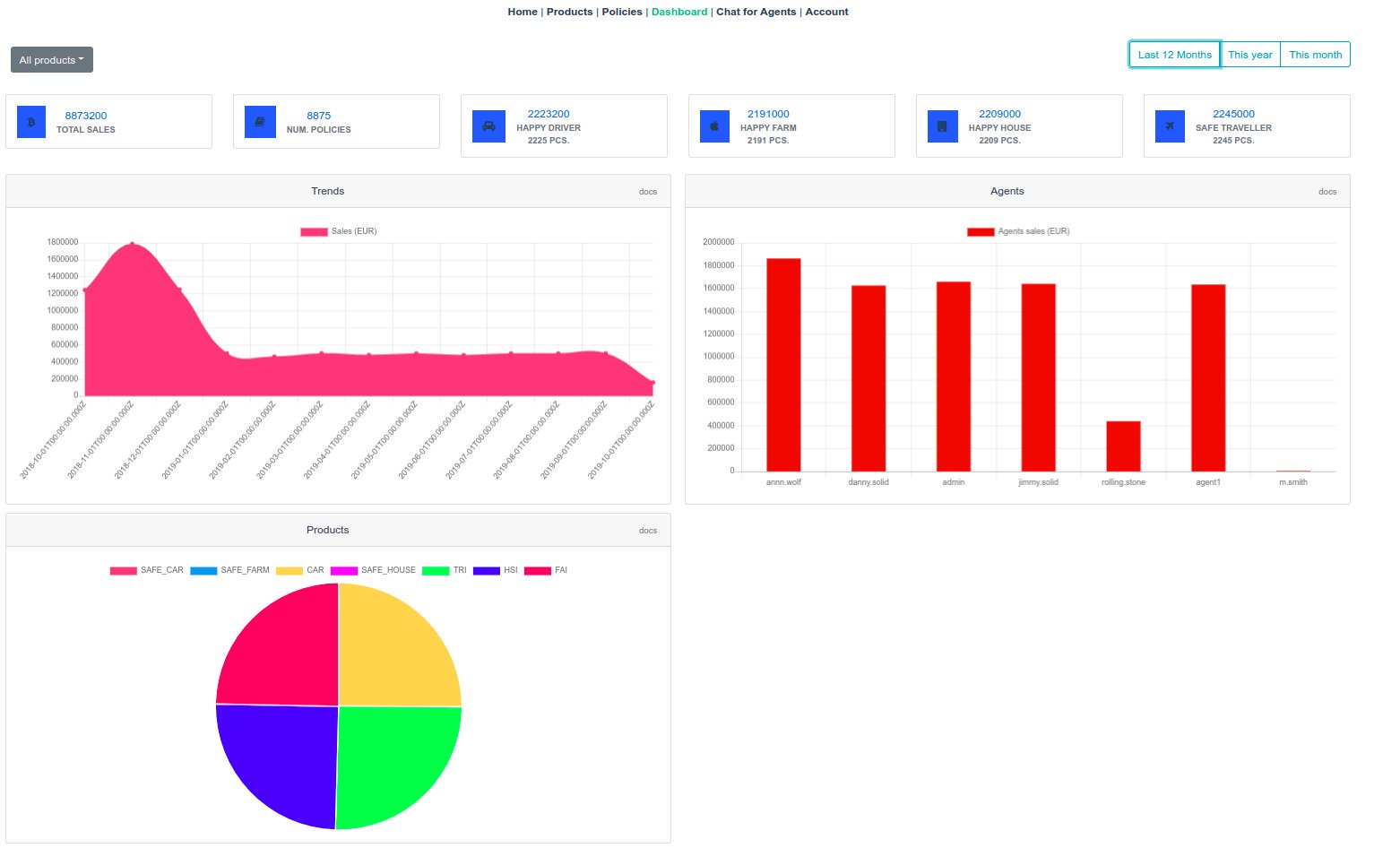

- dashboard-service - business dashboards that present our agents' sales results. The dashboard service subscribes to policy sale events and indexes sales data in ElasticSearch. The ElasticSearch aggregation framework is then used to calculate sales stats such as total sales and number of policies per product per time period, sales per agent in a given time period, and the sales timeline. Sales stats are visualized using ChartJS.

- web-vue - SPA built with Vue.js and Bootstrap for Vue.
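The tariff idea described for pricing-service above (a set of rules applied to the user's answers) can be sketched in plain Java. This is only an illustration under stated assumptions: the real service defines its rules in MVEL, whereas here each rule is a Java lambda standing in for an MVEL expression, and the class names, question codes and amounts are all invented for the example.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.function.Predicate;

// Hypothetical tariff: a base price plus an ordered list of rules.
// The real pricing-service expresses rules in MVEL; lambdas stand in for them here.
class Tariff {
    // One rule: when the condition holds for the answers, transform the running price.
    static final class Rule {
        final Predicate<Map<String, Object>> applies;
        final Function<BigDecimal, BigDecimal> adjust;

        Rule(Predicate<Map<String, Object>> applies, Function<BigDecimal, BigDecimal> adjust) {
            this.applies = applies;
            this.adjust = adjust;
        }
    }

    private final BigDecimal basePrice;
    private final List<Rule> rules = new ArrayList<>();

    Tariff(BigDecimal basePrice) {
        this.basePrice = basePrice;
    }

    Tariff addRule(Predicate<Map<String, Object>> applies, Function<BigDecimal, BigDecimal> adjust) {
        rules.add(new Rule(applies, adjust));
        return this;
    }

    // Applies every matching rule, in registration order, to the base price.
    BigDecimal calculatePrice(Map<String, Object> answers) {
        BigDecimal price = basePrice;
        for (Rule rule : rules) {
            if (rule.applies.test(answers)) {
                price = rule.adjust.apply(price);
            }
        }
        return price;
    }
}

class TariffExample {
    // Invented tariff for an invented product; question codes are assumptions.
    static Tariff travelTariff() {
        return new Tariff(new BigDecimal("100"))
                .addRule(a -> Boolean.TRUE.equals(a.get("EXTREME_SPORTS")),
                         p -> p.multiply(new BigDecimal("2")))
                .addRule(a -> ((Integer) a.getOrDefault("NUM_OF_ADULTS", 1)) > 1,
                         p -> p.add(new BigDecimal("30")));
    }

    public static void main(String[] args) {
        Map<String, Object> answers = Map.of("EXTREME_SPORTS", true, "NUM_OF_ADULTS", 2);
        System.out.println(travelTariff().calculatePrice(answers)); // prints 230
    }
}
```

Keeping the rules as data (a list evaluated against the answers) is what makes it possible to define them per product, which is the role MVEL plays in the actual service.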

Each business microservice also has an -api module (payment-service-api, policy-service-api etc.), where we define commands, events, queries and operations.

In the picture you can also see the internal-command-bus component. It is used internally by microservices when we want to apply the CQRS pattern inside a service (a simple example is OfferController in policy-service).
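The role of an internal command bus can be illustrated with a minimal in-process implementation. This is a sketch under assumptions, not the project's actual API: the `Command`, `CommandHandler`, `CommandBus`, `CreateOfferCommand` and `CreateOfferHandler` names below are hypothetical, and the real component may discover handlers through Micronaut's dependency injection rather than manual registration.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical marker for a command that produces a result of type R.
interface Command<R> { }

// Hypothetical handler contract: one handler per command type.
interface CommandHandler<R, C extends Command<R>> {
    R handle(C command);
}

// Minimal in-process bus: routes a command to the handler registered for its class.
class CommandBus {
    private final Map<Class<?>, CommandHandler<?, ?>> handlers = new HashMap<>();

    <R, C extends Command<R>> void register(Class<C> type, CommandHandler<R, C> handler) {
        handlers.put(type, handler);
    }

    @SuppressWarnings("unchecked")
    <R> R execute(Command<R> command) {
        CommandHandler<R, Command<R>> handler =
                (CommandHandler<R, Command<R>>) handlers.get(command.getClass());
        if (handler == null) {
            throw new IllegalStateException(
                    "No handler registered for " + command.getClass().getSimpleName());
        }
        return handler.handle(command);
    }
}

// Invented write-side command; the result is the identifier of the created offer.
class CreateOfferCommand implements Command<String> {
    final String productCode;

    CreateOfferCommand(String productCode) {
        this.productCode = productCode;
    }
}

// Invented handler: a real one would call the domain model and persist the offer.
class CreateOfferHandler implements CommandHandler<String, CreateOfferCommand> {
    @Override
    public String handle(CreateOfferCommand command) {
        return "OFFER-" + command.productCode;
    }
}

class CommandBusExample {
    static CommandBus configuredBus() {
        CommandBus bus = new CommandBus();
        bus.register(CreateOfferCommand.class, new CreateOfferHandler());
        return bus;
    }

    public static void main(String[] args) {
        // A controller would only build the command and hand it to the bus.
        String offerNumber = configuredBus().execute(new CreateOfferCommand("TRI"));
        System.out.println(offerNumber); // prints OFFER-TRI
    }
}
```

With this shape a controller depends only on the bus and the command types, so write-side logic stays in handlers and can evolve separately from the read side.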

Building the project requires Java 14 (JDK), Maven and Yarn.

For demo purposes the build process is automated by a shell script. For Unix-based systems:

build-without-tests.sh

For Windows:

build-without-tests.bat

If the necessary infrastructure (Kafka, Consul etc.) is already running, you should run the build with all tests.

For Unix-based systems:

build.sh

For Windows:

build.bat

Running the system requires:

- docker

- docker-compose

Windows users: make sure to set core.autocrlf false in git configuration before cloning this repository.

Windows users: append the line below to C:\Windows\System32\drivers\etc\hosts:

127.0.0.1 kafkaserver

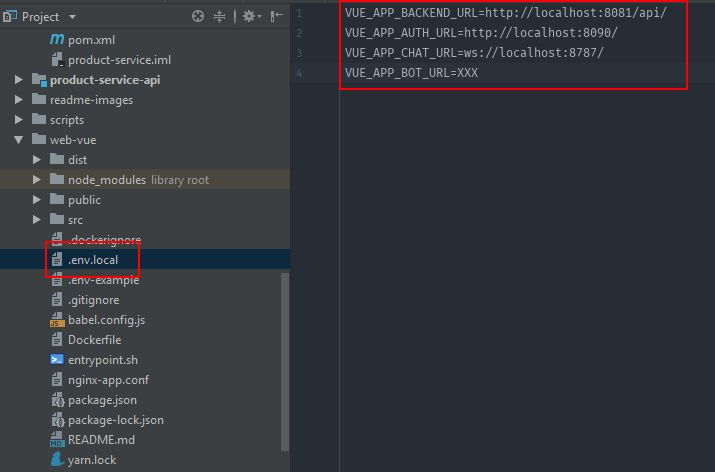

To run the frontend app, you must add a .env.local file based on .env-example.

To run the whole system on a local machine just type:

docker-run.sh

Make sure you've built the microservices first (see the build instructions above)!

This script will provision required infrastructure and start all services.

The setup is powered by docker-compose and configured via the docker-compose.yml file.

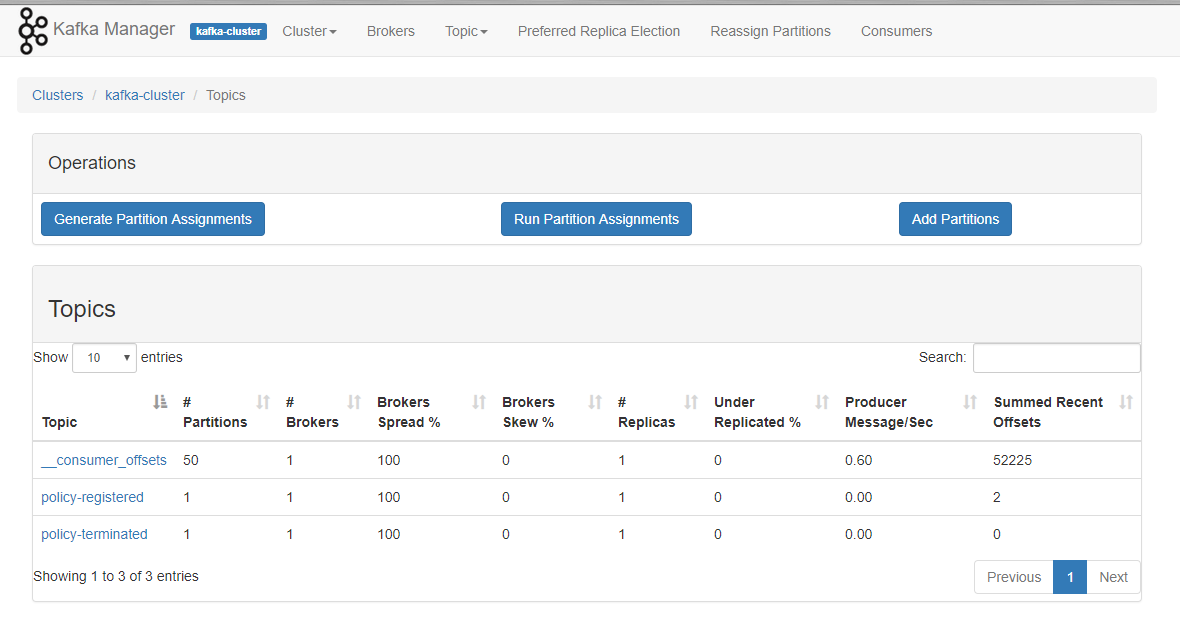

Afterwards you need to add a Kafka cluster, either via the web UI (Kafka Manager -> Cluster -> Add Cluster) or using the provided script:

kafka-create-cluster.sh

At this point the system is ready to use: http://localhost

If you want to run the services manually (e.g. from an IDE), you have to provision the infrastructure with the script from the scripts folder:

infra-run.sh

Afterwards you need to add a Kafka cluster, either via the web UI (Kafka Manager -> Cluster -> Add Cluster) or using the provided script:

kafka-create-cluster.sh

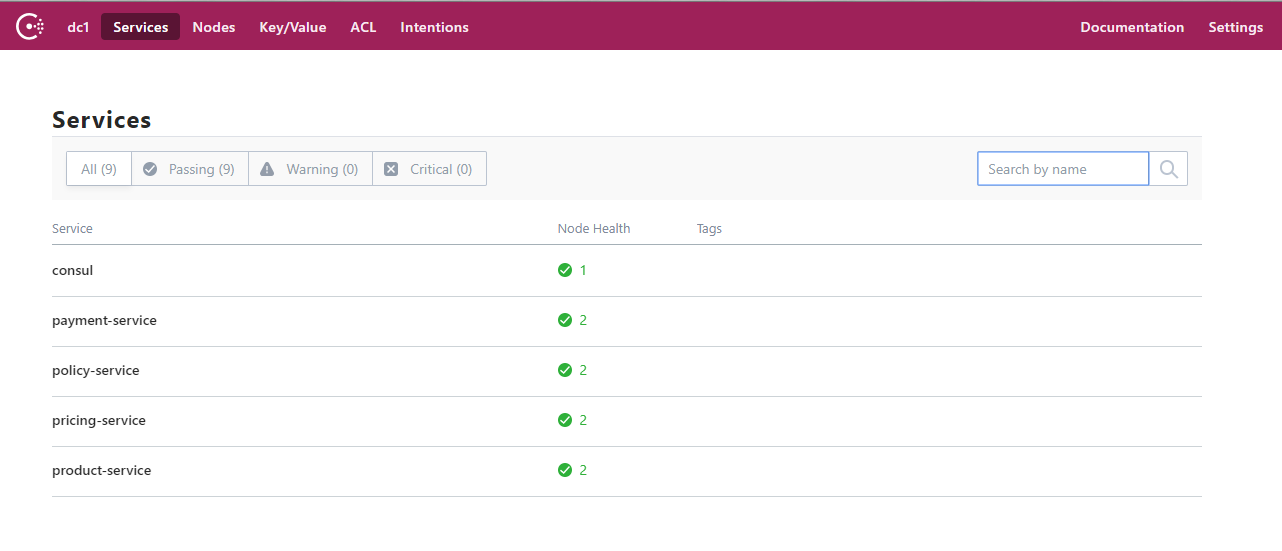

- Consul dashboard:

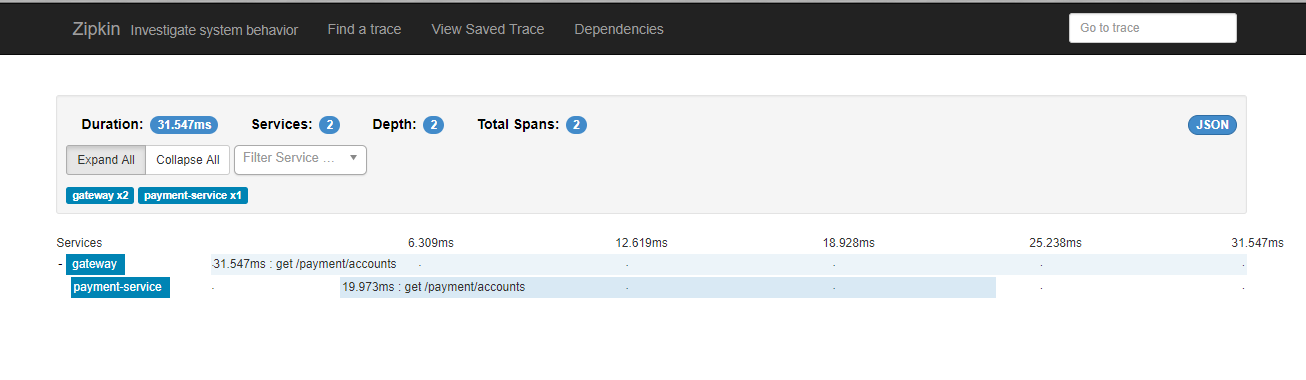

http://localhost:8500 - Zipkin dashboard:

http://localhost:9411/zipkin/ - Kafka Manager dashboard:

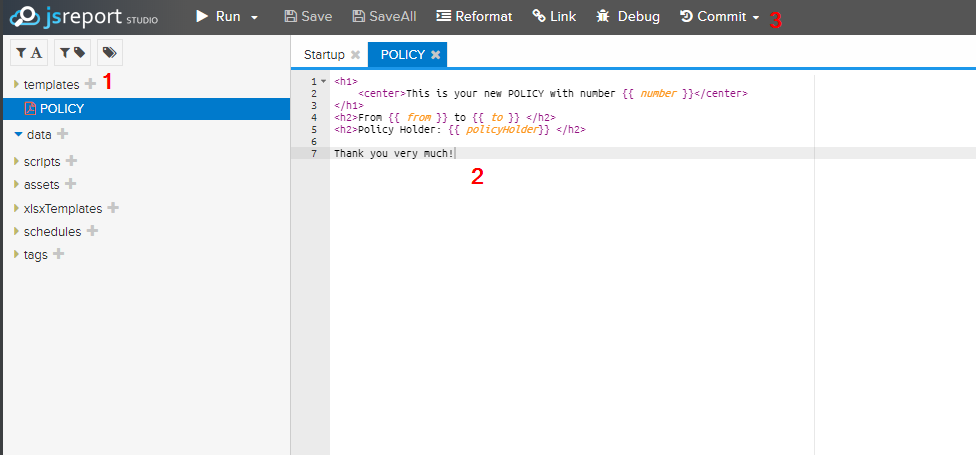

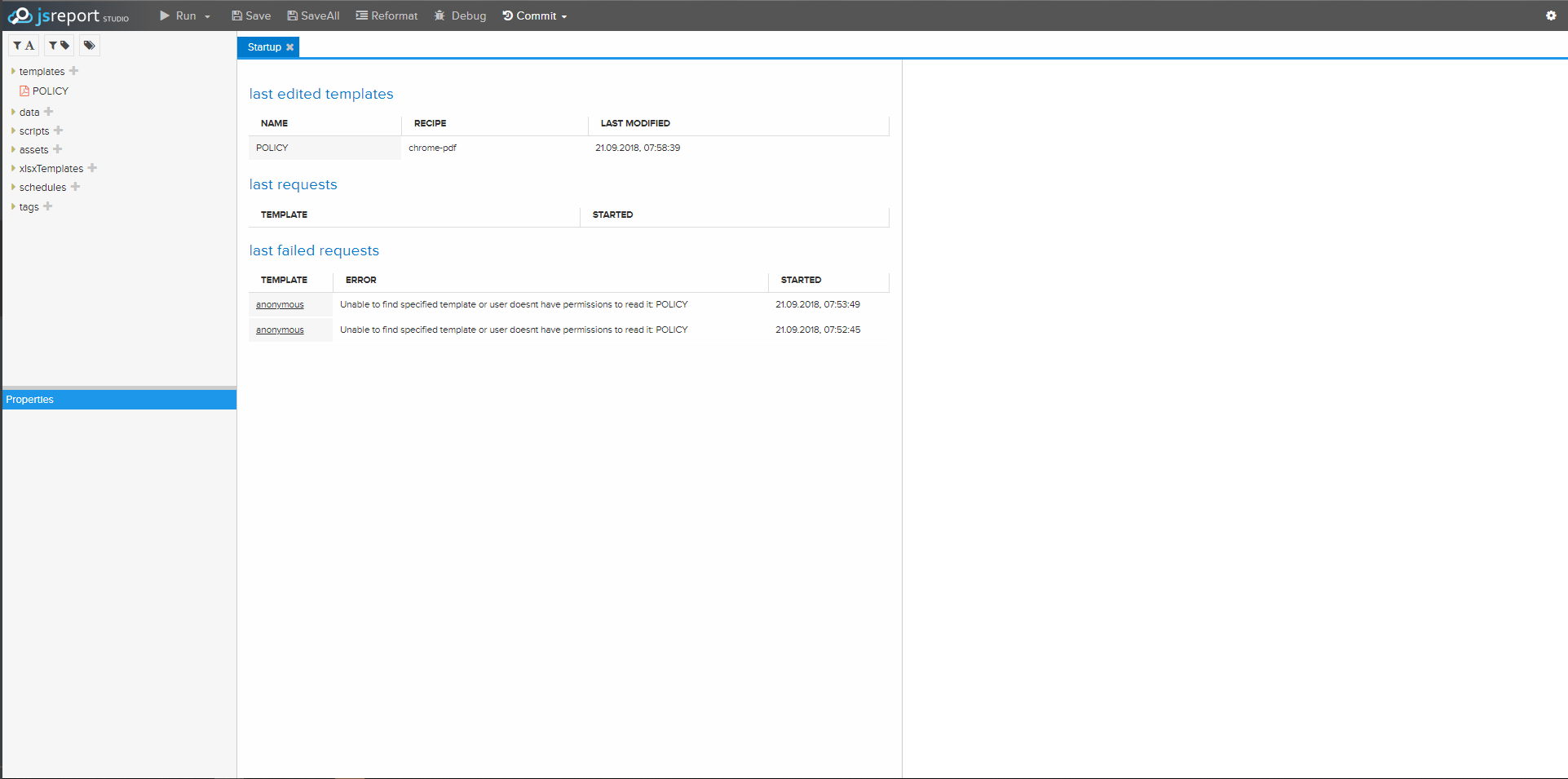

http://localhost:9000/ - JSReport dashboard:

http://localhost:5488/

In the JSReport dashboard, add the policy template:

- Click "+".

- Type name "POLICY" and content from file policy.template.

- Commit changes.

docker run -p 8500:8500 consul

docker run -d -p 9411:9411 openzipkin/zipkin

Set up Kafka on Windows with this instruction.

docker run -p 5488:5488 jsreport/jsreport

agent-portal-gateway provides the API description.

You can view it at: [http://localhost:8081/swagger/lab-insurance-sales-portal-api-1.0.yml](http://localhost:8081/swagger/lab-insurance-sales-portal-api-1.0.yml)
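If you prefer to download the API description programmatically, a minimal sketch using the JDK's built-in HTTP client could look like the following. It assumes the gateway is running locally on port 8081 as in the URL above; the `ApiDescriptionFetcher` class and its method names are hypothetical and not part of the project.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper, not part of the repository.
class ApiDescriptionFetcher {
    // URL taken from this README; assumes the gateway listens on localhost:8081.
    static final String API_DESCRIPTION_URL =
            "http://localhost:8081/swagger/lab-insurance-sales-portal-api-1.0.yml";

    // Builds a plain GET request for the YAML file.
    static HttpRequest buildRequest() {
        return HttpRequest.newBuilder(URI.create(API_DESCRIPTION_URL)).GET().build();
    }

    // Sends the request and returns the YAML body, failing on any non-200 status.
    static String fetch() throws IOException, InterruptedException {
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(buildRequest(), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Unexpected status: " + response.statusCode());
        }
        return response.body();
    }

    public static void main(String[] args) throws InterruptedException {
        try {
            System.out.println(fetch());
        } catch (IOException e) {
            // Typically means the gateway is not running yet.
            System.err.println("Could not download the API description: " + e.getMessage());
        }
    }
}
```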

Create new microservice with Micronaut CLI:

mn create-app pl.altkom.asc.lab.[SERVICE-NAME]-service -b maven

This command generates a Java project with Maven as the build tool.

Sample user accounts (login / password):

- admin / admin

- jimmy.solid / secret

- danny.solid / secret

- agent1 / agent1