forked from huggingface/blog

-

Notifications

You must be signed in to change notification settings - Fork 16

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add accelerate-v1.md and CN translation #136

Open

Hoi2022

wants to merge

6

commits into

huggingface-cn:main

Choose a base branch

from

Hoi2022:main

base: main

Could not load branches

Branch not found: {{ refName }}

Loading

Could not load tags

Nothing to show

Loading

Are you sure you want to change the base?

Some commits from the old base branch may be removed from the timeline,

and old review comments may become outdated.

Open

Changes from 3 commits

Commits

Show all changes

6 commits

Select commit

Hold shift + click to select a range

967a9ae

Add accelerate-v1.md

Hoi2022 737e1ed

Add accelerate-v1.md

Hoi2022 4e036a7

Add accelerate-v1.md CN translation

Hoi2022 62dbd06

Update accelerate-v1.md zh translation

Hoi2022 9bd0bf8

update _blog.yml for accelerate-v1 zh translation

Hoi2022 463619a

Merge branch 'main' into main

Hoi2022 File filter

Filter by extension

Conversations

Failed to load comments.

Loading

Jump to

Jump to file

Failed to load files.

Loading

Diff view

Diff view

There are no files selected for viewing

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,101 @@ | ||

| --- | ||

| title: "Accelerate 1.0.0" | ||

| thumbnail: /blog/assets/186_accelerate_v1/accelerate_v1_thumbnail.png | ||

| authors: | ||

| - user: muellerzr | ||

| - user: marcsun13 | ||

| - user: BenjaminB | ||

| --- | ||

|

|

||

| # Accelerate 1.0.0 | ||

|

|

||

| ## What is Accelerate today? | ||

|

|

||

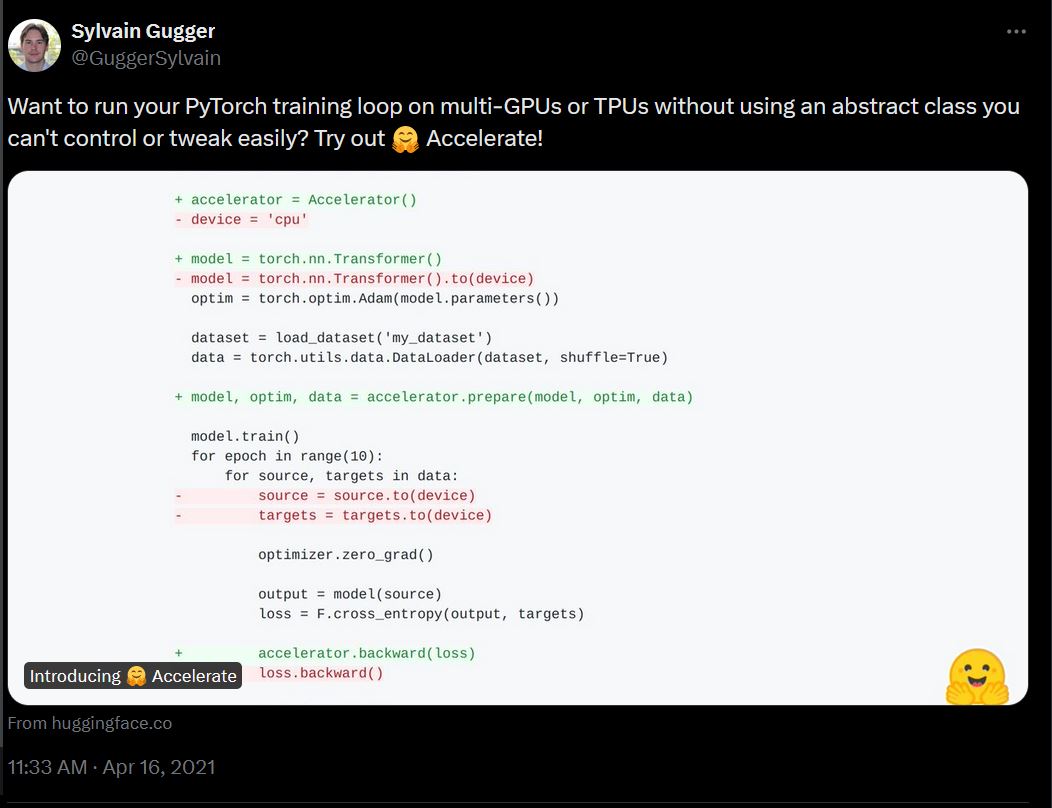

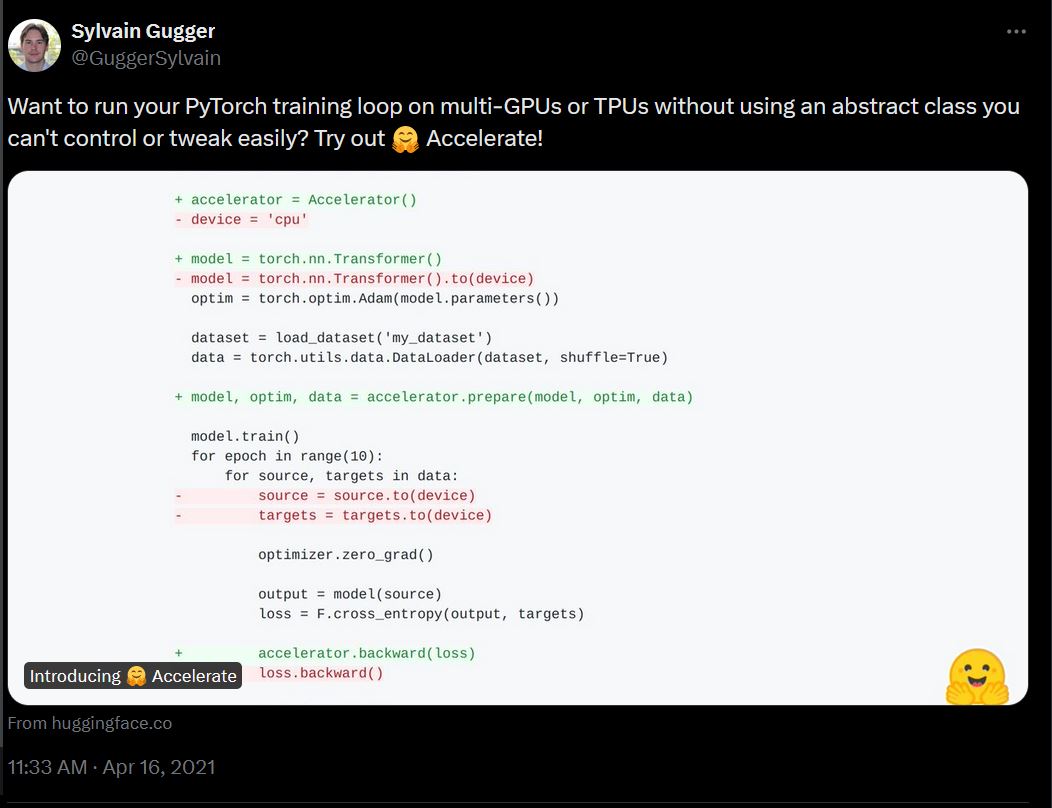

| 3.5 years ago, [Accelerate](https://github.com/huggingface/accelerate) was a simple framework aimed at making training on multi-GPU and TPU systems easier | ||

| by having a low-level abstraction that simplified a *raw* PyTorch training loop: | ||

|

|

||

|  | ||

|

|

||

| Since then, Accelerate has expanded into a multi-faceted library aimed at tackling many common problems with | ||

| large-scale training and large models in an age where 405 billion parameters (Llama) are the new language model size. This involves: | ||

|

|

||

| * [A flexible low-level training API](https://huggingface.co/docs/accelerate/basic_tutorials/migration), allowing for training on six different hardware accelerators (CPU, GPU, TPU, XPU, NPU, MLU) while maintaining 99% of your original training loop | ||

| * An easy-to-use [command-line interface](https://huggingface.co/docs/accelerate/basic_tutorials/launch) aimed at configuring and running scripts across different hardware configurations | ||

| * The birthplace of [Big Model Inference](https://huggingface.co/docs/accelerate/usage_guides/big_modeling) or `device_map="auto"`, allowing users to not only perform inference on LLMs with multi-devices but now also aiding in training LLMs on small compute through techniques like parameter-efficient fine-tuning (PEFT) | ||

|

|

||

| These three facets have allowed Accelerate to become the foundation of **nearly every package at Hugging Face**, including `transformers`, `diffusers`, `peft`, `trl`, and more! | ||

|

|

||

| As the package has been stable for nearly a year, we're excited to announce that, as of today, we've published **the first release candidates for Accelerate 1.0.0**! | ||

|

|

||

| This blog will detail: | ||

|

|

||

| 1. Why did we decide to do 1.0? | ||

| 2. What is the future for Accelerate, and where do we see PyTorch as a whole going? | ||

| 3. What are the breaking changes and deprecations that occurred, and how can you migrate over easily? | ||

|

|

||

| ## Why 1.0? | ||

|

|

||

| The plans to release 1.0.0 have been in the works for over a year. The API has been roughly at a point where we wanted, | ||

| centering on the `Accelerator` side, simplifying much of the configuration and making it more extensible. However, we knew | ||

| there were a few missing pieces before we could call the "base" of `Accelerate` "feature complete": | ||

|

|

||

| * Integrating FP8 support of both MS-AMP and `TransformersEngine` (read more [here](https://github.com/huggingface/accelerate/tree/main/benchmarks/fp8/transformer_engine) and [here](https://github.com/huggingface/accelerate/tree/main/benchmarks/fp8/ms_amp)) | ||

| * Supporting orchestration of multiple models when using DeepSpeed ([Experimental](https://huggingface.co/docs/accelerate/usage_guides/deepspeed_multiple_model)) | ||

| * `torch.compile` support for the big model inference API (requires `torch>=2.5`) | ||

| * Integrating `torch.distributed.pipelining` as an [alternative distributed inference mechanic](https://huggingface.co/docs/accelerate/main/en/usage_guides/distributed_inference#memory-efficient-pipeline-parallelism-experimental) | ||

| * Integrating `torchdata.StatefulDataLoader` as an [alternative dataloader mechanic](https://github.com/huggingface/accelerate/blob/main/examples/by_feature/checkpointing.py) | ||

|

|

||

| With the changes made for 1.0, accelerate is prepared to tackle new tech integrations while keeping the user-facing API stable. | ||

|

|

||

| ## The future of Accelerate | ||

|

|

||

| Now that 1.0 is almost done, we can focus on new techniques coming out throughout the community and find integration paths into Accelerate, as we foresee some radical changes in the PyTorch ecosystem very soon: | ||

|

|

||

| * As part of the multiple-model DeepSpeed support, we found that while generally how DeepSpeed is currently *could* work, some heavy changes to the overall API may eventually be needed as we work to support simple wrappings to prepare models for any multiple-model training scenario. | ||

| * With [torchao](https://github.com/pytorch/ao) and [torchtitan](https://github.com/pytorch/torchtitan) picking up steam, they hint at the future of PyTorch as a whole. Aiming at more native support for FP8 training, a new distributed sharding API, and support for a new version of FSDP, FSDPv2, we predict that much of the internals and general usage API of Accelerate will need to change (hopefully not too drastic) to meet these needs as the frameworks slowly become more stable. | ||

| * Riding on `torchao`/FP8, many new frameworks are bringing in different ideas and implementations on how to make FP8 training work and be stable (`transformer_engine`, `torchao`, `MS-AMP`, `nanotron`, to name a few). Our aim with Accelerate is to house each of these implementations in one place with easy configurations to let users explore and test out each one as they please, intending to find the ones that wind up being the most stable and flexible. It's a rapidly accelerating (no pun intended) field of research, especially with NVIDIA's FP4 training support on the way, and we want to make sure that not only can we support each of these methods but aim to provide **solid benchmarks for each** to show their tendencies out-of-the-box (with minimal tweaking) compared to native BF16 training | ||

|

|

||

| We're incredibly excited about the future of distributed training in the PyTorch ecosystem, and we want to make sure that Accelerate is there every step of the way, providing a lower barrier to entry for these new techniques. By doing so, we hope the community will continue experimenting and learning together as we find the best methods for training and scaling larger models on more complex computing systems. | ||

|

|

||

| ## How to try it out | ||

|

|

||

| To try the first release candidate for Accelerate today, please use one of the following methods: | ||

|

|

||

| * pip: | ||

|

|

||

| ```bash | ||

| pip install --pre accelerate | ||

| ``` | ||

|

|

||

| * Docker: | ||

|

|

||

| ```bash | ||

| docker pull huggingface/accelerate:gpu-release-1.0.0rc1 | ||

| ``` | ||

|

|

||

| Valid release tags are: | ||

| * `gpu-release-1.0.0rc1` | ||

| * `cpu-release-1.0.0rc1` | ||

| * `gpu-fp8-transformerengine-release-1.0.0rc1` | ||

| * `gpu-deepspeed-release-1.0.0rc1` | ||

|

|

||

| ## Migration assistance | ||

|

|

||

| Below are the full details for all deprecations that are being enacted as part of this release: | ||

|

|

||

| * Passing in `dispatch_batches`, `split_batches`, `even_batches`, and `use_seedable_sampler` to the `Accelerator()` should now be handled by creating an `accelerate.utils.DataLoaderConfiguration()` and passing this to the `Accelerator()` instead (`Accelerator(dataloader_config=DataLoaderConfiguration(...))`) | ||

| * `Accelerator().use_fp16` and `AcceleratorState().use_fp16` have been removed; this should be replaced by checking `accelerator.mixed_precision == "fp16"` | ||

| * `Accelerator().autocast()` no longer accepts a `cache_enabled` argument. Instead, an `AutocastKwargs()` instance should be used which handles this flag (among others) passing it to the `Accelerator` (`Accelerator(kwargs_handlers=[AutocastKwargs(cache_enabled=True)])`) | ||

| * `accelerate.utils.is_tpu_available` should be replaced with `accelerate.utils.is_torch_xla_available` | ||

| * `accelerate.utils.modeling.shard_checkpoint` should be replaced with `split_torch_state_dict_into_shards` from the `huggingface_hub` library | ||

| * `accelerate.tqdm.tqdm()` no longer accepts `True`/`False` as the first argument, and instead, `main_process_only` should be passed in as a named argument | ||

| * `ACCELERATE_DISABLE_RICH` is no longer a valid environmental variable, and instead, one should manually enable `rich` traceback by setting `ACCELERATE_ENABLE_RICH=1` | ||

| * The FSDP setting `fsdp_backward_prefetch_policy` has been replaced with `fsdp_backward_prefetch` | ||

|

|

||

| ## Closing thoughts | ||

|

|

||

| Thank you so much for using Accelerate; it's been amazing watching a small idea turn into over 100 million downloads and nearly 300,000 **daily** downloads over the last few years. | ||

|

|

||

| With this release candidate, we hope to give the community an opportunity to try it out and migrate to 1.0 before the official release. | ||

|

|

||

| Please stay tuned for more information by keeping an eye on the [github](https://github.com/huggingface/accelerate) and on [socials](https://x.com/TheZachMueller)! |

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,101 @@ | ||

| --- | ||

| title: "Accelerate 1.0.0" | ||

| thumbnail: /blog/assets/186_accelerate_v1/accelerate_v1_thumbnail.png | ||

| authors: | ||

| - user: muellerzr | ||

| - user: marcsun13 | ||

| - user: BenjaminB | ||

| translators: | ||

| - user: hugging-hoi2022 | ||

| - user: | ||

| proofreader: | ||

| --- | ||

|

|

||

| # Accelerate 1.0.0 | ||

|

|

||

| ## Accelerate 发展概况 | ||

|

|

||

| 在三年半以前、项目发起之初时,[Accelerate](https://github.com/huggingface/accelerate) 的目标还只是制作一个简单框架,通过一个低层的抽象来简化多 GPU 或 TPU 训练,以此替代原生的 PyTorch 训练流程: | ||

|

|

||

|  | ||

|

|

||

| 自此,Accelerate 开始不断扩展,逐渐成为一个有多方面能力的代码库。当前,像 Llama 这样的模型已经达到了 405B 参数的量级,而 Accelerate 也致力于应对大模型和大规模训练所面临的诸多难题。这其中的贡献包括: | ||

|

|

||

| * [灵活、低层的训练 API](https://huggingface.co/docs/accelerate/basic_tutorials/migration):支持在六种不同硬件设备(CPU、GPU、TPU、XPU、NPU、MLU)上训练,同时在代码层面保持 99% 原有训练代码不必改动。 | ||

| * 简单易用的[命令行界面](https://huggingface.co/docs/accelerate/basic_tutorials/launch):致力于在不同硬件上进行配置,以及运行训练脚本。 | ||

| * [Big Model Inference](https://huggingface.co/docs/accelerate/usage_guides/big_modeling) 功能,或者说是 `device_map="auto"`:这使得用户能够在多种不同硬件设备上进行大模型推理,同时现在可以通过诸如高效参数微调(PEFT)等技术以较小计算量来训练大模型。 | ||

|

|

||

| 这三方面的贡献,使得 Accelerate 成为了**几乎所有 Hugging Face 代码库**的基础依赖,其中包括 `transformers`、`diffusers`、`peft`、`trl`。 | ||

|

|

||

| 在 Accelerate 开发趋于稳定将近一年后的今天,我们正式发布了 Accelerate 1.0.0 —— Accelerate 的第一个发布候选版本。 | ||

|

|

||

| 本文将会详细说明以下内容: | ||

|

|

||

| 1. 为什么我们决定开发 1.0 版本? | ||

| 2. Accelerate 的未来发展,怎样结合 PyTorch 一同发展? | ||

| 3. 新版本有哪些重大改变?如何迁移代码到新版本? | ||

|

|

||

| ## 为什么要开发 1.0 | ||

|

|

||

| 发行这一版本的计划已经进行了一年多。Acceelerate 的 API 集中于 Accelerator 一侧,配置简单,代码扩展性强。但是,我们仍然认识到 Accelerate 还存在诸多有待完成的功能,这包括: | ||

|

|

||

| * 为 MS-AMP 和 `TransformerEngine` 集成 FP8 支持(详见[这里](https://github.com/huggingface/accelerate/tree/main/benchmarks/fp8/transformer_engine)和[这里](https://github.com/huggingface/accelerate/tree/main/benchmarks/fp8/ms_amp)) | ||

| * 支持在 DeepSpeed 中使用多个模型(详见[这里](https://huggingface.co/docs/accelerate/usage_guides/deepspeed_multiple_model)) | ||

| * 使 `torch.compile` 支持大模型推理 API(需要 `torch>=2.5`) | ||

| * 集成 `torch.distributed.pipelining` 作为 [替代的分布式推理机制](https://huggingface.co/docs/accelerate/main/en/usage_guides/distributed_inference#memory-efficient-pipeline-parallelism-experimental) | ||

| * 集成 `torchdata.StatefulDataLoader` 作为 [替代的数据载入机制](https://github.com/huggingface/accelerate/blob/main/examples/by_feature/checkpointing.py) | ||

|

|

||

| 通过在 1.0 版本中作出的改动,Accelerate 已经有能力在不改变用户 API 界面的情况下不断融入新的技术能力了。 | ||

|

There was a problem hiding this comment. Choose a reason for hiding this commentThe reason will be displayed to describe this comment to others. Learn more. "界面" can be replaced by "接口" |

||

|

|

||

| ## Accelerate 的未来发展 | ||

|

|

||

| 在 1.0 版本推出以后,我们将重点关注技术社区里的新技术,并寻找方法去融合进 Accelerate 中。可以预见,一些重大的改动将会不久发生在 PyTorch 生态系统中: | ||

|

|

||

| * 作为支持 DeepSpeed 多模型的一部分,我们发现虽然当前的 DeepSpeed 方案还能正常工作,但后续可能还是需要大幅度改动整体的 API。因为我们需要为任意多模型训练场景去制作封装类。 | ||

| * 由于 [torchao](https://github.com/pytorch/ao) 和 [torchtitan](https://github.com/pytorch/torchtitan) 逐渐变得受欢迎,可以推测将来 PyTorch 可能会将这些集成进来成为一个整体。为了致力于更原生的 FP8 训练、新的分布式分片 API,以及支持新版 FSDP(FSDPv2),我们推测 Accelerate 内部和通用的很多 API 也将会更改(希望改动不大)。 | ||

| * 借助 `torchao`/FP8,很多新框架也带来了不同的理念和实现方法,来使得 FP8 训练有效且稳定(例如 `transformer_engine`、`torchao`、`MS-AMP`、`nanotron`)。针对 Accelerate,我们的目标是把这些实现都集中到一个地方,使用简单的配置方法让用户探索和试用每一种方法,最终我们希望形成稳定灵活的代码架构。这个领域发展迅速,尤其是 NVidia 的 FP4 技术即将问世。我们希望不仅能够支持这些方法,同时也为不同方法提供可靠的基准测试,来和原生的 BF16 训练对比,以显示技术趋势。 | ||

|

|

||

| 我们也对 PyTorch 社区分布式训练的发展感到期待,希望 Accelerate 紧跟步伐,为最近技术提供一个低门槛的入口。也希望社区能够继续探索实验、共同学习,让我们寻找在复杂计算系统上训练、扩展大模型的最佳方案。 | ||

|

|

||

| ## 如何使用 1.0 版本 | ||

|

|

||

| 如想使用 1.0 版本,需要先使用如下方法获取 Accelerate: | ||

|

|

||

| * pip: | ||

|

|

||

| ```bash | ||

| pip install --pre accelerate | ||

| ``` | ||

|

|

||

| * Docker: | ||

|

|

||

| ```bash | ||

| docker pull huggingface/accelerate:gpu-release-1.0.0rc1 | ||

| ``` | ||

|

|

||

| 可用的版本标记有: | ||

| * `gpu-release-1.0.0rc1` | ||

| * `cpu-release-1.0.0rc1` | ||

| * `gpu-fp8-transformerengine-release-1.0.0rc1` | ||

| * `gpu-deepspeed-release-1.0.0rc1` | ||

|

|

||

| ## 代码迁移指南 | ||

|

|

||

| 下面是关于弃用 API 的详细说明: | ||

|

|

||

| * 给 `Accelerator()` 传递 `dispatch_batches`、`split_batches`、`even_batches`、`use_seedable_sampler` 参数的这种方式已经被弃用。新的方法是创建一个 `accelerate.utils.DataLoaderConfiguration()` 然后传给 `Accelerator()`(示例:`Accelerator(dataloader_config=DataLoaderConfiguration(...))`)。 | ||

| * `Accelerator().use_fp16` 和 `AcceleratorState().use_fp16` 已被移除。新的替代方式是检查 `accelerator.mixed_precision == "fp16"`。 | ||

| * `Accelerator().autocast()` 不再接收 `cache_enabled` 参数。该参数被包含在 `AutocastKwargs()` 里(示例:`Accelerator(kwargs_handlers=[AutocastKwargs(cache_enabled=True)])`)。 | ||

| * `accelerate.utils.is_tpu_available` 被 `accelerate.utils.is_torch_xla_available` 替代。 | ||

| * `accelerate.utils.modeling.shard_checkpoint` 应被 `huggingface_hub` 里的 `split_torch_state_dict_into_shards` 替代。 | ||

| * `accelerate.tqdm.tqdm()` 的第一个参数不再是 `True`/`False`,`main_process_only` 需要以命名参数的形式传参。 | ||

| * `ACCELERATE_DISABLE_RICH` 不再是一个有效的环境变量。用户需通过设置 `ACCELERATE_ENABLE_RICH=1` 手动启动详细的回溯(traceback)信息。 | ||

| * FSDP 中的 `fsdp_backward_prefetch_policy` 已被 `fsdp_backward_prefetch` 代替。 | ||

|

|

||

| ## 总结 | ||

|

|

||

| 首先感谢使用 Accelerate,看到一个小的想法转变成一个总下载量超过一亿、日均下载量接近三十万的项目还是很令人惊叹的。 | ||

|

|

||

| 通过本版发行,我们希望社区能够踊跃尝试,尽快在官方发行版出现前迁移到 1.0 版本。 | ||

|

|

||

| 请大家持续关注,及时追踪我们 [GitHub](https://github.com/huggingface/accelerate) 和 [社交软件](https://x.com/TheZachMueller) 上的最新信息。 | ||

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The

proofreaderproperty can be omitted in your submission, one with- user: hugging-hoi2022will be cool!