2019 Whistler

This page collects notes from our Whistler discussions. It serves as context for further work and records some of the decisions we made.

Agenda: https://public.etherpad-mozilla.org/p/GFXWhistler19plans

Android is a must. Everybody has to test it.

History:

- (2008) XUL Fennec, 30 sec first paint time...

- (2012) native Java UI + Gecko engine, unclear division

- (2019) GeckoView, highly opinionated (e10s only, lean JS, no prefs), suggest WebExtensions for config

Mach is mach: bootstrap, build, package, run, test, link, ...

gw, kvark

Problem: having a stack of clip chains (which are sequences of clips) per item is not elegant, especially since most elements only have a single clip chain instance.

Still need multiple clip chains for the case where a scroll root associated with an element also does axis-aligned clipping.

Could rework the concept of clip chains and/or the way primitives link to them to stay closer to Gecko.

Resolution: defer until we start working on producing the trees of clips and spatial nodes by Gecko.

gw, kvark, Gankro, jrmuizel

Q: what do we get from document splitting if all the picture caching optimizations planned today are done? A: not much! When WR builds a scene where the content picture is unchanged, it will use the interned/cached data, so both scene and frame building are cheap. Rendering itself is done in tiles, so only the appropriate chrome tiles are updated, which once again is cheap.

Resolution: try to finish the doc-splitting changes and land them now, get the benefits, and then see if we can disable it after the picture caching is mature (6 months from now).

gw, kvark, nical, lee

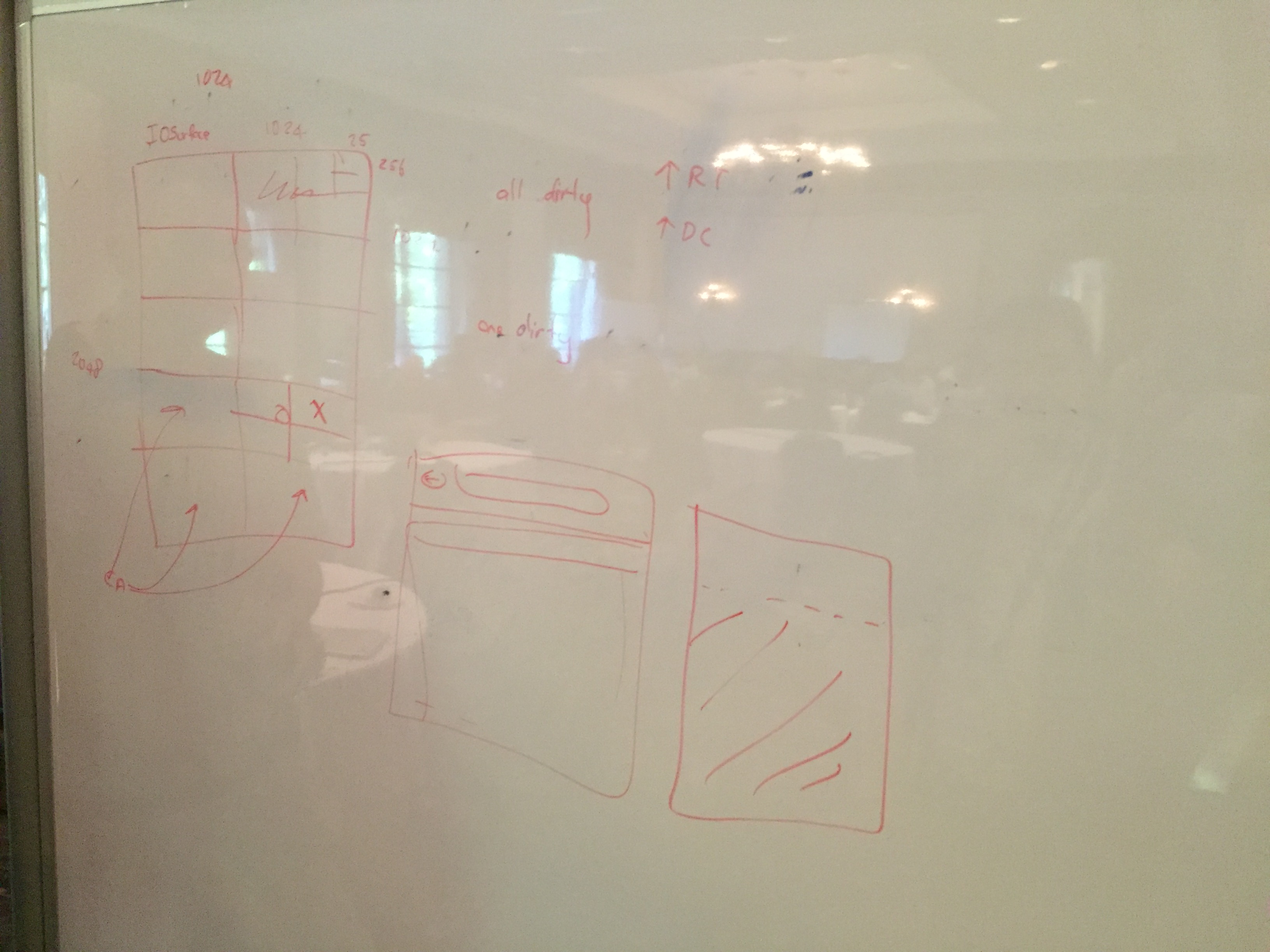

Start with 1024x1024 tiles. Each frame we'd select one of the tiles that changed and split it into 4 sub-tiles, for which we are going to be evaluating the dirty state separately. Each sub-tile would be backed by a separate direct composition layer.

Concerns:

- adaptation is very lazy

- creation of a composition layer can be too expensive

- complexity of logic

Resolution: keep the tile size constant for now and just update the dirty rect within it. We can come back to the hierarchy idea once the direct composition works with a single dirty rect per tile.
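The resolution above (fixed-size tiles, one dirty rect per tile) can be sketched as follows. This is a minimal illustration, not WebRender's actual code: `Rect`, `Tile`, `TileGrid` and `invalidate` are hypothetical names, and the sketch assumes damage arrives as axis-aligned rectangles in device pixels.

```rust
/// Axis-aligned rectangle in device pixels, half-open: [x0, x1) x [y0, y1).
/// Hypothetical type for this sketch, not a WebRender type.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Rect {
    pub x0: i32,
    pub y0: i32,
    pub x1: i32,
    pub y1: i32,
}

impl Rect {
    /// Overlap of two rects, or `None` if they do not overlap.
    pub fn intersection(&self, other: &Rect) -> Option<Rect> {
        let r = Rect {
            x0: self.x0.max(other.x0),
            y0: self.y0.max(other.y0),
            x1: self.x1.min(other.x1),
            y1: self.y1.min(other.y1),
        };
        if r.x0 < r.x1 && r.y0 < r.y1 { Some(r) } else { None }
    }

    /// Smallest rect containing both inputs.
    pub fn union(&self, other: &Rect) -> Rect {
        Rect {
            x0: self.x0.min(other.x0),
            y0: self.y0.min(other.y0),
            x1: self.x1.max(other.x1),
            y1: self.y1.max(other.y1),
        }
    }
}

/// Constant tile size, as in the notes.
pub const TILE_SIZE: i32 = 1024;

pub struct Tile {
    pub bounds: Rect,
    /// Single accumulated dirty rect; `None` means the tile is clean.
    pub dirty: Option<Rect>,
}

pub struct TileGrid {
    /// Tiles in row-major order.
    pub tiles: Vec<Tile>,
}

impl TileGrid {
    pub fn new(width: i32, height: i32) -> Self {
        let cols = (width + TILE_SIZE - 1) / TILE_SIZE;
        let rows = (height + TILE_SIZE - 1) / TILE_SIZE;
        let mut tiles = Vec::new();
        for row in 0..rows {
            for col in 0..cols {
                let bounds = Rect {
                    x0: col * TILE_SIZE,
                    y0: row * TILE_SIZE,
                    x1: (col + 1) * TILE_SIZE,
                    y1: (row + 1) * TILE_SIZE,
                };
                tiles.push(Tile { bounds, dirty: None });
            }
        }
        TileGrid { tiles }
    }

    /// Clip the damage to each tile and grow that tile's dirty rect.
    pub fn invalidate(&mut self, damage: Rect) {
        for tile in &mut self.tiles {
            if let Some(clipped) = tile.bounds.intersection(&damage) {
                tile.dirty = Some(match tile.dirty {
                    Some(d) => d.union(&clipped),
                    None => clipped,
                });
            }
        }
    }
}
```

A single rect per tile over-approximates when two small, distant regions of the same tile change, which is the cost the hierarchy idea was meant to avoid.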

gw, kvark, nical, lee

Q: what state do we associate with an interned picture? A: surface list, picture sub-tree, primitive instances. More to be seen when we start implementing it.

Q: what if a cached picture is big? A: tile it!

Q: how do we track the dirty tiles of a parent (cached) picture, based on the dirty tiles of a sub-picture? A: as we go top to bottom, we'll only be considering the tiles of a sub-picture that intersect with the current parent tile, automagically
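One way to read the last answer: when visiting a parent tile, map its rect into the sub-picture's space and look only at the sub-picture tiles that fall inside that range. The sketch below assumes a sub-picture placed at an integer offset in parent space with a uniform tile grid; `TiledPicture` and `any_dirty_in` are hypothetical names, and real pictures would also need transforms taken into account.

```rust
/// Hypothetical description of a tiled, cached sub-picture.
pub struct TiledPicture {
    /// Position of the sub-picture's top-left corner in parent space.
    pub origin: (i32, i32),
    pub tile_size: i32,
    pub cols: i32,
    pub rows: i32,
    /// Per-tile dirty flags, row-major, `cols * rows` entries.
    pub dirty: Vec<bool>,
}

impl TiledPicture {
    /// Returns true if any dirty tile of this sub-picture overlaps the
    /// half-open rect [x0, x1) x [y0, y1) given in parent space.
    pub fn any_dirty_in(&self, x0: i32, y0: i32, x1: i32, y1: i32) -> bool {
        if x0 >= x1 || y0 >= y1 {
            return false; // empty query rect
        }
        // Move the query rect into the sub-picture's local space.
        let (lx0, ly0) = (x0 - self.origin.0, y0 - self.origin.1);
        let (lx1, ly1) = (x1 - self.origin.0, y1 - self.origin.1);

        // Range of tile indices touched by the rect, clamped to the grid.
        // The upper bounds are exclusive.
        let c0 = lx0.div_euclid(self.tile_size).max(0);
        let c1 = ((lx1 - 1).div_euclid(self.tile_size) + 1).min(self.cols);
        let r0 = ly0.div_euclid(self.tile_size).max(0);
        let r1 = ((ly1 - 1).div_euclid(self.tile_size) + 1).min(self.rows);

        // Empty ranges (no overlap with the grid) simply skip the loops.
        for r in r0..r1 {
            for c in c0..c1 {
                if self.dirty[(r * self.cols + c) as usize] {
                    return true;
                }
            }
        }
        false
    }
}
```

A parent tile would then be marked dirty when `any_dirty_in` returns true for its rect, so sub-picture tiles outside the current parent tile are never inspected.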

gw, kvark, nical, lee

Q: do we have support for both RGBA8 and BGRA8 data coming to images? A: no. State of things:

- desktop GL drivers expect BGRA8 data already

- most real Android hardware supports BGRA_EXT

- the Android emulator can be left with a slow conversion path (done by the driver)

- Mac just needs to be fixed to use BGRA8 internal format.

Resolution:

- double-check the facts about Android support of BGRA8_EXT

- measure upload speed on desktop GL to verify if BGRA8 input is good

- instead of landing the swizzling changes, just prefer the slow path

- fix macOS internal format
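For reference, converting between RGBA8 and BGRA8 amounts to swapping the red and blue bytes of every pixel. The sketch below shows that per-texel work on the CPU. The function name is hypothetical, and this is not the code path WebRender or any driver actually uses.

```rust
/// Swap the R and B channels of tightly packed 8-bit, 4-channel pixels.
/// The same routine converts RGBA8 to BGRA8 and back.
/// Hypothetical helper for illustration only.
pub fn swizzle_rb_in_place(pixels: &mut [u8]) {
    assert!(pixels.len() % 4 == 0, "expected 4 bytes per pixel");
    for px in pixels.chunks_exact_mut(4) {
        px.swap(0, 2); // bytes 1 (G) and 3 (A) stay where they are
    }
}
```

Every uploaded texel is touched once, which is why a conversion path like this is slower than handing the driver data in its preferred layout.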

gw, kvark, nical, lee

Problem: quite often in large images there are sections that are fully transparent and/or fully opaque.

General approach: tile things, and know for each tile whether it is opaque, semi-transparent, or fully transparent.

We can generate this data per tile on GPU (in various ways), and keep a hold of it for as long as the image is needed.

We do not have to download the data on CPU in order to batch things (although we still could). Instead, we can have the image vertex shader modified in such a way that it fetches this extra bit of information and discards the polygon if needed (the condition is different between opaque and alpha passes).

We believe we can enable this on all image shaders, without breaking the batches. Since it's all on GPU, we should be able to do it on cached pictures as well, which would improve the way we handle picture caching in general and plane splitting in particular.
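The per-tile classification and the per-pass discard rule can be sketched on the CPU as below. The notes propose generating this data on the GPU and testing it in the vertex shader, so this only illustrates the logic. All names are hypothetical. The discard rule is an assumption: the opaque pass keeps only opaque tiles, the alpha pass keeps only semi-transparent ones, and fully transparent tiles are dropped in both.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum TileOpacity {
    Transparent, // every alpha value is 0
    Opaque,      // every alpha value is 255
    Translucent, // anything else
}

#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Pass {
    Opaque,
    Alpha,
}

/// Classify one tile from its alpha values. An empty tile counts as transparent.
pub fn classify_tile(alpha: impl Iterator<Item = u8>) -> TileOpacity {
    let mut all_zero = true;
    let mut all_full = true;
    for a in alpha {
        all_zero &= a == 0;
        all_full &= a == 255;
        if !all_zero && !all_full {
            return TileOpacity::Translucent; // early out on mixed content
        }
    }
    if all_zero { TileOpacity::Transparent } else { TileOpacity::Opaque }
}

/// Classify every `tile` x `tile` block of a single-channel alpha image.
/// Results are row-major; edge tiles are clipped to the image size.
pub fn classify_image(alpha: &[u8], width: usize, height: usize, tile: usize) -> Vec<TileOpacity> {
    assert!(tile > 0 && alpha.len() == width * height);
    let mut out = Vec::new();
    for ty in (0..height).step_by(tile) {
        for tx in (0..width).step_by(tile) {
            let y_end = (ty + tile).min(height);
            let x_end = (tx + tile).min(width);
            let texels = (ty..y_end)
                .flat_map(|y| alpha[y * width + tx..y * width + x_end].iter().copied());
            out.push(classify_tile(texels));
        }
    }
    out
}

/// Assumed discard rule; the condition differs between the two passes.
pub fn should_discard(opacity: TileOpacity, pass: Pass) -> bool {
    match (pass, opacity) {
        (_, TileOpacity::Transparent) => true,
        (Pass::Opaque, TileOpacity::Opaque) => false,
        (Pass::Opaque, TileOpacity::Translucent) => true,
        (Pass::Alpha, TileOpacity::Opaque) => true,
        (Pass::Alpha, TileOpacity::Translucent) => false,
    }
}
```

Because the decision is made per tile from data that lives next to the image, the same image can be submitted to both passes without the CPU needing to know which tiles survive.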

Bad things:

- product people were convinced WR was ready, just needed integration

- half of the team was pushing and improving non-WR path

- lack of coordination with Gecko, e.g. Markus needed to be a part of clipping design from the start

- team reduced (Taipei layoffs, people leaving)

Circumstances:

- estimating is hard, because of HW dependence

- couldn't scale the team efficiently

Good things:

- early merge into m-c, dogfooding

- some breathing room in 2017

- tooling (Wrench, captures, etc)

- kept on GitHub for some time

- reftests and CI

Plan: take swiftshader/GL and see why it's bad.

We need a way to do custom software rendering together with swiftshader, sharing the targets on CPU.

- text

- tile blits

- simple D3D compositor on Win7

Better handling of world-space aligned rectangular clips.

(hidden for politeness)