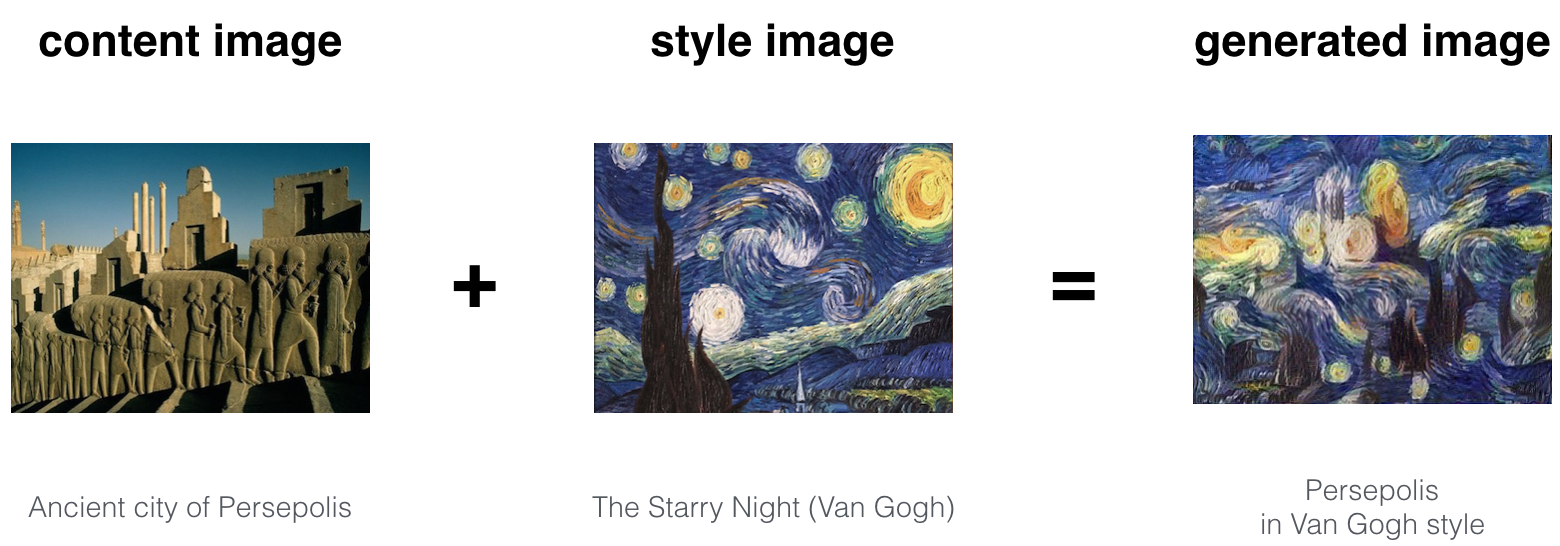

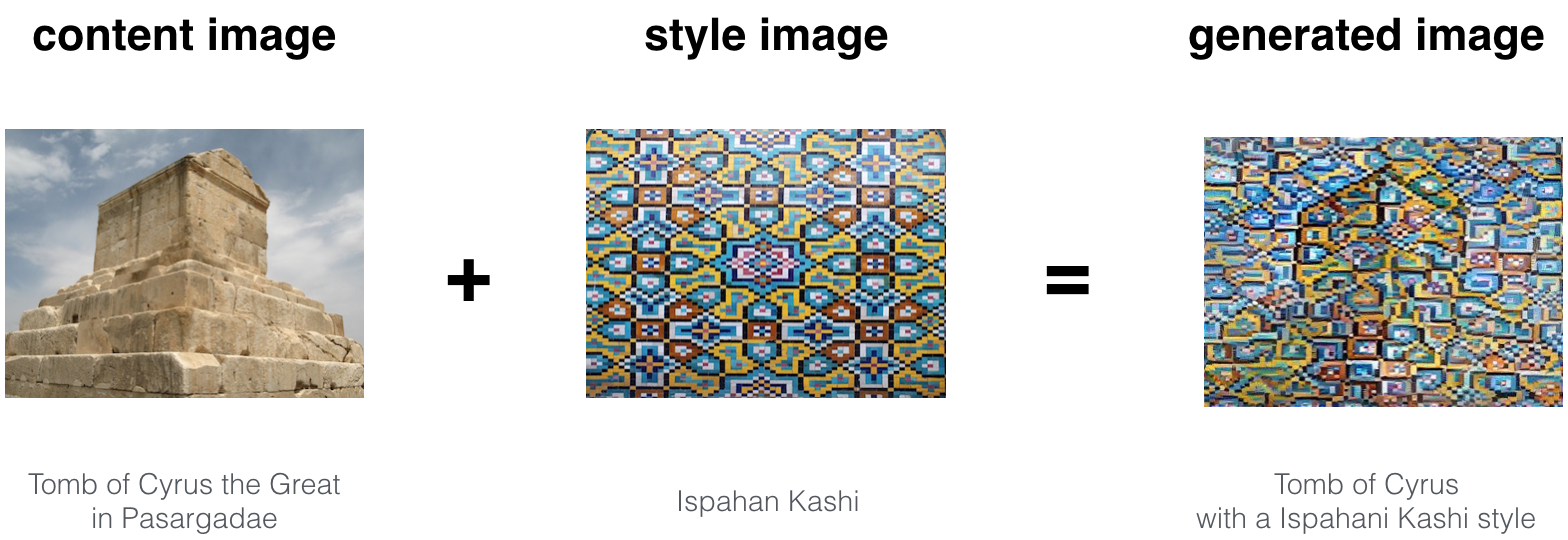

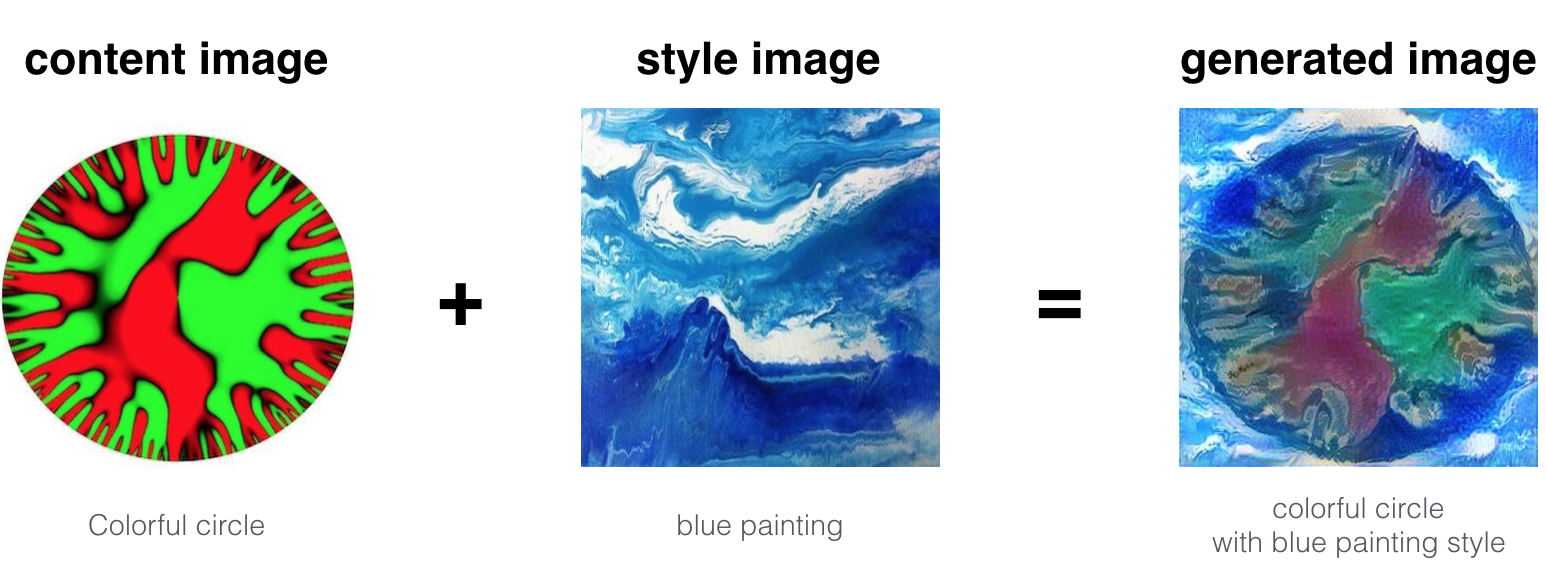

Neural Style Transfer is an algorithm that, given a content image C and a style image S, generates a new artistic image that combines the content of C with the style of S.

- The beautiful ruins of the ancient city of Persepolis (Iran) with the style of Van Gogh (The Starry Night)

- The tomb of Cyrus the Great in Pasargadae with the style of a Ceramic Kashi from Ispahan

- A scientific study of a turbulent fluid with the style of an abstract blue fluid painting

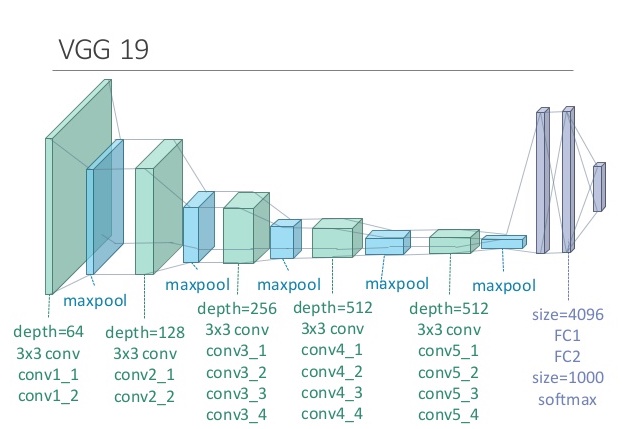

Neural Style Transfer (NST) uses a previously trained convolutional network and builds on top of it. The idea of taking a network trained on one task and applying it to a new task is called transfer learning.

Following the original NST paper, I have used the VGG network, specifically VGG-19, a 19-layer version of VGG. This model has already been trained on the very large ImageNet database and has thus learned to recognize a variety of low-level features (in the earlier layers) and high-level features (in the deeper layers).

Most algorithms optimize a cost function to get a set of parameter values. In NST, we optimize a cost function to get pixel values!
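To make the idea of optimizing pixels concrete, here is a toy sketch in NumPy. It is not the NST cost: the hypothetical `target` array and the plain squared-error cost stand in for the real content and style costs, and the learning rate is an arbitrary choice. The only point is that gradient descent updates the image itself rather than any network weights.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 4x4 grayscale "target", standing in for whatever the real cost prefers.
target = rng.uniform(0.0, 1.0, size=(4, 4))

# The variable being optimized is the image, initialized with random pixels.
generated = rng.uniform(0.0, 1.0, size=(4, 4))


def cost(image):
    """Toy cost: sum of squared pixel differences to the target."""
    return np.sum((image - target) ** 2)


initial_cost = cost(generated)
learning_rate = 0.1  # arbitrary choice for this sketch

for _ in range(100):
    grad = 2.0 * (generated - target)             # d(cost) / d(pixels)
    generated = generated - learning_rate * grad  # update pixels, not weights

final_cost = cost(generated)
print(initial_cost, final_cost)
```

In real NST the gradient comes from backpropagating the total cost through the fixed VGG network down to the input pixels, but the update has the same shape.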


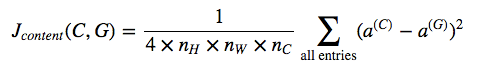

Building the content cost function

The "generated" image G should have content similar to that of the input image C. The most visually pleasing results are obtained when a layer in the middle of the network is chosen, neither too shallow nor too deep. Set the image C as the input to the pretrained VGG network and run forward propagation; let a(C) be the hidden layer activation in the chosen layer. Then set G as the input and run forward propagation; let a(G) be the corresponding hidden layer activation. The cost function will be:

Minimizing the content cost later helps ensure that G has content similar to that of C.
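As a sketch of the content cost described above, one common form is `J_content(C, G) = 1 / (4 · nH · nW · nC) · Σ (a(C) − a(G))²`, where the sum runs over all entries of the activation volume and nH, nW, nC are its height, width, and number of channels. The normalization constant is an assumption here (implementations differ), and the random arrays below are stand-ins for real VGG activations.

```python
import numpy as np


def content_cost(a_C, a_G):
    """Content cost between two activation volumes of shape (n_H, n_W, n_C)."""
    n_H, n_W, n_C = a_C.shape
    # Assumed normalization; other implementations use a different constant.
    return np.sum((a_C - a_G) ** 2) / (4.0 * n_H * n_W * n_C)


rng = np.random.default_rng(0)
a_C = rng.normal(size=(4, 4, 3))  # stand-in for activations of the content image
a_G = rng.normal(size=(4, 4, 3))  # stand-in for activations of the generated image

print(content_cost(a_C, a_C))  # identical activations give zero cost
print(content_cost(a_C, a_G))  # differing activations give a positive cost
```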


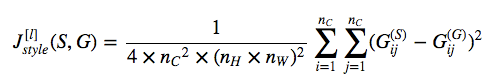

Building the style cost function

The Gram matrix (or style matrix), the basic building block of the style cost function, computes the correlation between filters. It has dimensions (nC, nC), where nC is the number of filters. The value G(i,j) measures how similar the activations of filter i are to the activations of filter j, so the style matrix G measures the style of an image. Once the Gram matrices are computed, the goal is to minimize the distance between the Gram matrix of the "style" image S and that of the "generated" image G.

Here G(S) and G(G) are, respectively, the Gram matrices of the "style" image and the "generated" image, computed from the hidden layer activations of a particular hidden layer in the network.
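A minimal NumPy sketch of the Gram matrix and the style cost for a single layer follows. It assumes activations of shape (nH, nW, nC) and the normalization `1 / (4 · nC² · (nH · nW)²)` used by Gatys et al.; the function names and the random stand-in activations are illustrative, not taken from this repository.

```python
import numpy as np


def gram_matrix(a):
    """Gram (style) matrix of an activation volume of shape (n_H, n_W, n_C)."""
    n_H, n_W, n_C = a.shape
    unrolled = a.reshape(n_H * n_W, n_C)  # one row per spatial position
    return unrolled.T @ unrolled          # shape (n_C, n_C)


def layer_style_cost(a_S, a_G):
    """Style cost for one layer, from style and generated activations."""
    n_H, n_W, n_C = a_S.shape
    diff = gram_matrix(a_S) - gram_matrix(a_G)
    return np.sum(diff ** 2) / (4.0 * n_C ** 2 * (n_H * n_W) ** 2)


rng = np.random.default_rng(0)
a_S = rng.normal(size=(4, 4, 3))  # stand-in for style image activations
a_G = rng.normal(size=(4, 4, 3))  # stand-in for generated image activations

print(gram_matrix(a_S).shape)
print(layer_style_cost(a_S, a_G))
```

Because each Gram entry sums over all spatial positions, the matrix records which filters fire together but not where they fire, which is why it captures style rather than layout.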

Better results are obtained when the style costs from several different layers are combined. This contrasts with the content representation, where a single hidden layer is usually sufficient. λ[l] is the weight given to layer l.

Minimizing the style cost causes the image G to follow the style of the image S.
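The combination over layers can be sketched as a weighted sum, `J_style(S, G) = Σ_l λ[l] · J_style[l](S, G)`. In the NumPy sketch below, the layer names, weights, and activation shapes are hypothetical placeholders, and the per-layer cost assumes the normalization from Gatys et al.

```python
import numpy as np


def layer_style_cost(a_S, a_G):
    """Style cost for one layer; activations have shape (n_H, n_W, n_C)."""
    n_H, n_W, n_C = a_S.shape
    S = a_S.reshape(n_H * n_W, n_C)
    G = a_G.reshape(n_H * n_W, n_C)
    diff = S.T @ S - G.T @ G  # difference of the two Gram matrices
    return np.sum(diff ** 2) / (4.0 * n_C ** 2 * (n_H * n_W) ** 2)


def style_cost(acts_S, acts_G, layer_weights):
    """Weighted sum of per-layer style costs; dicts are keyed by layer name."""
    return sum(
        weight * layer_style_cost(acts_S[name], acts_G[name])
        for name, weight in layer_weights.items()
    )


# Hypothetical layer names, weights, and activation shapes, for illustration only.
layer_weights = {"conv1_1": 0.5, "conv2_1": 0.5}
shapes = {"conv1_1": (8, 8, 4), "conv2_1": (4, 4, 8)}

rng = np.random.default_rng(0)
acts_S = {name: rng.normal(size=shape) for name, shape in shapes.items()}
acts_G = {name: rng.normal(size=shape) for name, shape in shapes.items()}

J_style = style_cost(acts_S, acts_G, layer_weights)
print(J_style)
```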


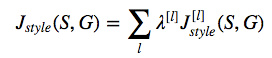

Putting it together to get total cost

The total cost function accounts for both the style cost and the content cost. It is a linear combination of the two, where α and β are hyperparameters that control the relative weighting between content and style.

Minimizing this cost function yields a "generated" image that combines the content of the content image with the style of the style image.
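Written out, the linear combination is `J(G) = α · J_content(C, G) + β · J_style(S, G)`. The short sketch below shows how the weighting shifts the balance; the default values of `alpha` and `beta` and the example cost values are assumptions chosen for illustration, not values confirmed by this repository.

```python
def total_cost(J_content, J_style, alpha=10.0, beta=40.0):
    """Linear combination of content and style costs.

    alpha and beta defaults are illustrative assumptions.
    """
    return alpha * J_content + beta * J_style


# Hypothetical cost values, for illustration only.
J_content, J_style = 0.5, 0.02

balanced = total_cost(J_content, J_style)
style_heavy = total_cost(J_content, J_style, alpha=1.0, beta=1000.0)
print(balanced, style_heavy)
```

Raising β relative to α pushes the optimizer to match the style image more closely, at the expense of content fidelity.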

The following steps synthesize a new image. Each one can be matched to the corresponding code in `nst_main.py`.

- Create an interactive TensorFlow session

- Load the content image

- Load the style image

- Randomly initialize the image to be generated

- Load the pretrained VGG19 model

- Build the TensorFlow graph:

  - Run the content image through the VGG19 model and compute the content cost

  - Run the style image through the VGG19 model and compute the style cost

  - Compute the total cost

  - Define the optimizer and the learning rate

- Initialize the TensorFlow graph and run it for a large number of iterations (200 here), updating the generated image at every step

- Download the pretrained VGG model from here and place it in the `pretrained_model` folder. Change the `config.py` file to point to the VGG19 model path

- Run `nst_main.py` on different style and content images placed in the `images` folder. Change `config.py` accordingly

- Content and style images can be found in the `images` directory. Corresponding output images can be found in the `output` directory

- Leon A. Gatys, Alexander S. Ecker, Matthias Bethge (2015). A Neural Algorithm of Artistic Style

- Harish Narayanan, Convolutional neural networks for artistic style transfer

- Log0, TensorFlow Implementation of "A Neural Algorithm of Artistic Style"

- Karen Simonyan and Andrew Zisserman (2015). Very deep convolutional networks for large-scale image recognition

- MatConvNet