vrx_2019 phase2_practice

The objective of this tutorial is to provide step-by-step guidance for local testing in preparation for Phase 2 - Dress Rehearsal. As described in the VRX Competition Documents, each of the VRX tasks will be evaluated over multiple trials, where each trial presents the task in a different configuration (e.g., a different waypoint location) and with different environmental conditions (wind, waves, lighting, etc.). The specifications of the environmental envelope (the range of possible environmental parameters) are included in the VRX Technical Guide, and the specifications of each task are in the VRX Competition and Task Descriptions. Both documents are available on the VRX Competition Documents wiki.

The VRX Tasks: Examples wiki provides general instructions and an example of a single trial for each task, which is a great place to start. However, if your solution is going to do well in the competition, it should be able to perform over a wide range of task and environment conditions. The purpose of this tutorial is to show you:

- How to locally test each task with multiple trials

- How to evaluate and verify your performance

For this tutorial, all of your testing will be done locally, on the host machine or in a local docker container. For the actual competition, these solutions will be evaluated automatically. If you are interested in testing that aspect of the evaluation, you can set up your own evaluation environment, equivalent to the one used in the competition, using the tools described in the vrx-docker repository.

We have generated three trials for each task that cover much of the allowable range of task and environment parameters. These trials are notionally:

- Easy - simplified task with negligible wave, wind and visual (fog) environmental factors.

- Medium - moderate task difficulty and environmental influence

- Hard - at or close to the limit of task difficulty and environmental factors.

Our intention is to evaluate submissions to the Phase 2 challenge using very similar (but not exactly the same) trials of each task. Each trial consists of worlds and models that define the instance of the task and the operating environment.

All examples are stored in the vrx/vrx_gazebo/worlds directory.

You should be able to run the individual examples as

WORLD=stationkeeping0.world
roslaunch vrx_gazebo vrx.launch verbose:=true \
  paused:=false \
  wamv_locked:=true \
  world:=${HOME}/vrx_ws/src/vrx/vrx_gazebo/worlds/2019_practice/${WORLD}

where you will want to change the value of the WORLD variable to match each of the worlds you want to run. Also, note that the world files (e.g., stationkeeping0.world) are in the VRX workspace. In the example above, the workspace is ${HOME}/vrx_ws. Yours might be different, e.g., ${HOME}/catkin_ws, in which case you will need to make that change.
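To run all three trials of a task in turn, it can help to generate the launch commands programmatically. The following is a minimal sketch, not part of the VRX tooling: `build_launch_command` and `practice_worlds` are hypothetical helper names, and the default workspace of `~/vrx_ws` is an assumption taken from the example above. It only builds and prints the commands; it does not start the simulation.

```python
import os


def practice_worlds(task, count=3):
    """Return the practice world file names for a task, e.g. stationkeeping0.world."""
    return ["{}{}.world".format(task, i) for i in range(count)]


def build_launch_command(world, workspace=None):
    """Build the roslaunch argument list for one practice world.

    `workspace` is the path of the VRX workspace; the default of ~/vrx_ws
    is an assumption and may differ on your machine (e.g. ~/catkin_ws).
    """
    if workspace is None:
        workspace = os.path.join(os.path.expanduser("~"), "vrx_ws")
    world_path = os.path.join(
        workspace, "src", "vrx", "vrx_gazebo", "worlds", "2019_practice", world
    )
    return [
        "roslaunch", "vrx_gazebo", "vrx.launch",
        "verbose:=true",
        "paused:=false",
        "wamv_locked:=true",
        "world:=" + world_path,
    ]


if __name__ == "__main__":
    # Print one command per stationkeeping trial; run each one manually
    # or pass the list to subprocess.call() to launch it.
    for world in practice_worlds("stationkeeping"):
        print(" ".join(build_launch_command(world)))
```

Swapping the task name for wayfinding, perception, nav_challenge, dock or scan_and_dock gives the commands for the other practice worlds listed later in this tutorial.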

The three example worlds provided for the Stationkeeping task are as follows:

- stationkeeping0.world: Easy environment.

- stationkeeping1.world: Medium difficulty environment.

- stationkeeping2.world: Hard difficulty environment.

These three worlds all have goals set relatively close to the WAM-V starting position. Due to the nature of the task, they vary primarily in terms of environmental factors.

Details of the ROS interface are provided in the VRX competition documents available on the Documentation Wiki.

For all tasks, monitoring the task information will tell you about the task and the current status, e.g.,

rostopic echo /vrx/task/info

name: "stationkeeping"
state: "running"
ready_time:
  secs: 10
  nsecs: 0
running_time:
  secs: 20
  nsecs: 0
elapsed_time:
  secs: 135
  nsecs: 0
remaining_time:
  secs: 165
  nsecs: 0
timed_out: False
score: 10.3547826152
---
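When logging many trials, a one-line summary of each task message is easier to scan than the full echo output. The sketch below is illustrative only: `summarize_task` and `is_running` are hypothetical helpers, and the `TaskInfo` and `Time` tuples are stand-ins that mimic just the fields visible in the output above. In a real node the same functions could be called from a rospy subscriber callback on /vrx/task/info.

```python
from collections import namedtuple

# Stand-ins for the ROS message, limited to fields shown in the echo output.
Time = namedtuple("Time", ["secs", "nsecs"])
TaskInfo = namedtuple(
    "TaskInfo", ["name", "state", "remaining_time", "timed_out", "score"]
)


def is_running(msg):
    """True while the task reports the "running" state."""
    return msg.state == "running"


def summarize_task(msg):
    """Format the task name, state, remaining time and score on one line."""
    remaining = msg.remaining_time.secs + msg.remaining_time.nsecs * 1e-9
    return "{} [{}] remaining={:.1f}s score={:.3f}".format(
        msg.name, msg.state, remaining, msg.score
    )


if __name__ == "__main__":
    sample = TaskInfo("stationkeeping", "running", Time(165, 0), False, 10.3547826152)
    print(summarize_task(sample))
```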

The goal pose (x, y and yaw) is provided as a ROS topic, e.g.

rostopic echo /vrx/station_keeping/goal

header:
  seq: 0
  stamp:
    secs: 10
    nsecs: 0
  frame_id: ''
pose:
  position:
    latitude: 21.31085
    longitude: -157.8886
    altitude: 0.0
  orientation:
    x: 0.0
    y: 0.0
    z: 0.501213004674
    w: 0.865323941623
---

Note that the yaw component of the goal is provided as a quaternion in the ENU coordinate frame (see REP-103). It may be desirable to determine the yaw angle from the quaternion. There are a variety of tools to convert from quaternions to Euler angles. Here is a Python example that uses the ROS tf module:

In [1]: from tf.transformations import euler_from_quaternion
In [2]: # Quaternion in order of x, y, z, w
In [3]: q = (0, 0, 0.501213004674, 0.865323941623)
In [4]: yaw = euler_from_quaternion(q)[2]
In [5]: print(yaw)
1.05

Note that the yaw angle is in radians, ENU.
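If the tf module is not available (for example, when post-processing logged data outside a ROS environment), the yaw can also be computed directly from the quaternion components. This is a minimal sketch using the standard yaw formula for a unit quaternion; `yaw_from_quaternion` is a hypothetical helper name, not part of the VRX or ROS API.

```python
import math


def yaw_from_quaternion(x, y, z, w):
    """Return the yaw (rotation about the z axis, in radians) of a unit quaternion."""
    siny_cosp = 2.0 * (w * z + x * y)
    cosy_cosp = 1.0 - 2.0 * (y * y + z * z)
    return math.atan2(siny_cosp, cosy_cosp)


if __name__ == "__main__":
    # Orientation taken from the example goal message above.
    yaw = yaw_from_quaternion(0.0, 0.0, 0.501213004674, 0.865323941623)
    print(round(yaw, 3))
```

For the example goal this agrees with the tf result of about 1.05 radians.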

You can monitor the instantaneous pose error with

rostopic echo /vrx/station_keeping/pose_error

and the cumulative pose error with

rostopic echo /vrx/station_keeping/rms_error

Note: As discussed in the current VRX Competition and Task Descriptions document, the following ROS API topics are only available for development/debugging; they will not be available to the team's software during scored runs of the competition.

- /vrx/station_keeping/pose_error

- /vrx/station_keeping/rms_error
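Because these topics are not available during scored runs, you may want your own estimate of a cumulative error while testing. The sketch below keeps a running root mean square of error samples. It assumes that the cumulative error is the RMS of the instantaneous pose error samples; the exact definition used for scoring is given in the VRX Competition and Task Descriptions document and should be checked there. `RunningRms` is a hypothetical helper name.

```python
import math


class RunningRms(object):
    """Accumulate error samples and report their root mean square."""

    def __init__(self):
        self.count = 0
        self.sum_sq = 0.0

    def add(self, error):
        """Add one instantaneous error sample and return the updated RMS."""
        self.count += 1
        self.sum_sq += error * error
        return self.value()

    def value(self):
        """Return the RMS of all samples so far (0.0 if there are none)."""
        if self.count == 0:
            return 0.0
        return math.sqrt(self.sum_sq / self.count)


if __name__ == "__main__":
    rms = RunningRms()
    for sample in (3.0, 4.0):
        rms.add(sample)
    print(rms.value())
```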

The three example worlds provided for the Wayfinding task are as follows:

- wayfinding0.world: Easy environment. Three waypoints, relatively close together.

- wayfinding1.world: Medium difficulty environment. Four waypoints, more widely dispersed.

- wayfinding2.world: Hard difficulty environment. Five waypoints in a more challenging arrangement.

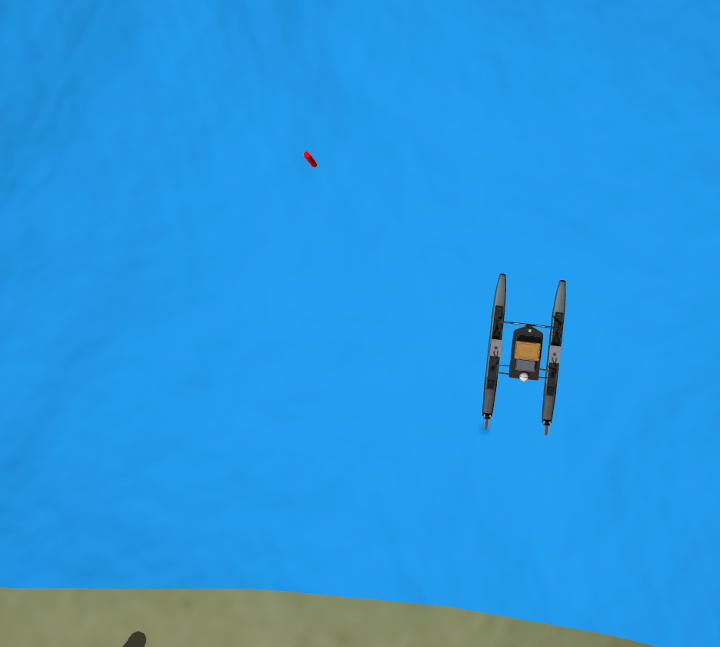

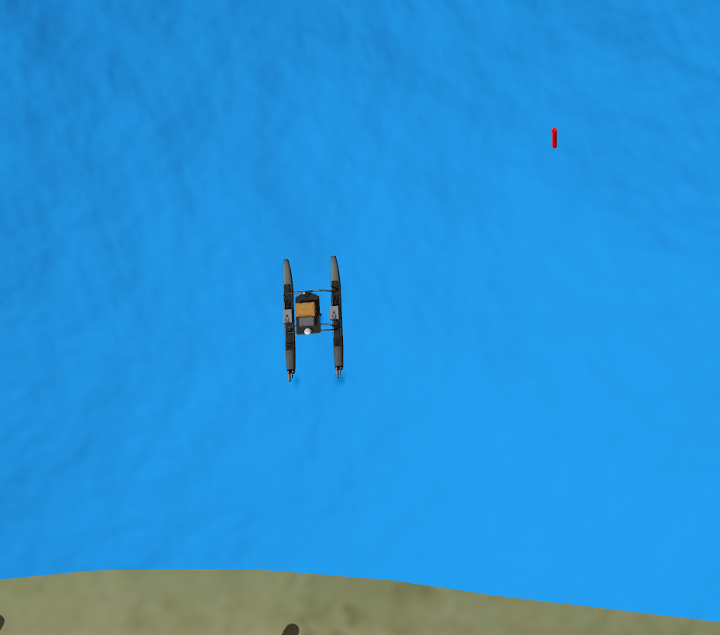

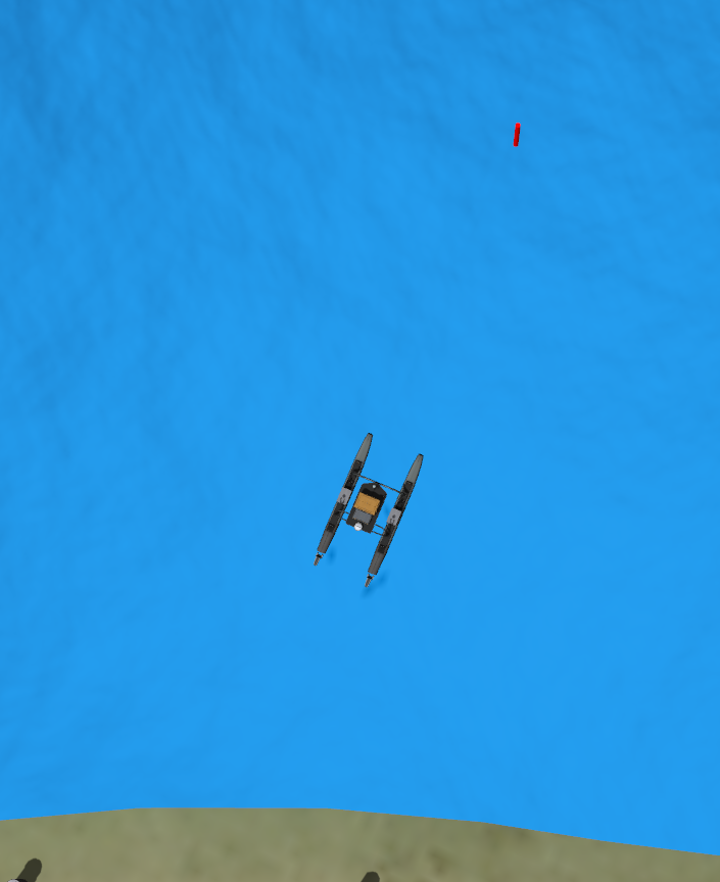

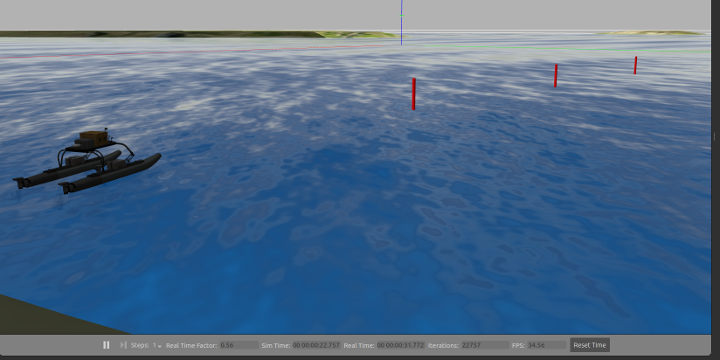

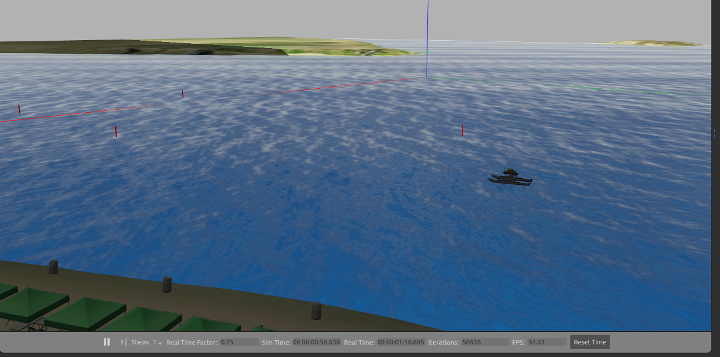

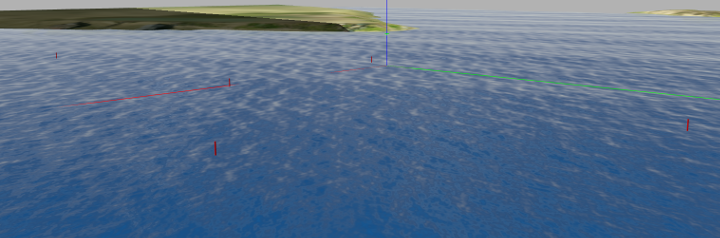

See below for screenshots showing the arrangement of waypoints in each example world.

The waypoints (goal poses) for each trial are available via ROS topic:

rostopic echo /vrx/wayfinding/waypoints

The waypoint error metrics are also available, e.g.,

rostopic echo /vrx/wayfinding/min_errors

rostopic echo /vrx/wayfinding/mean_error

Note: As discussed in the current VRX Competition and Task Descriptions document, the following ROS API topics are only available for development/debugging; they will not be available to the team's software during scored runs of the competition.

- /vrx/wayfinding/min_errors

- /vrx/wayfinding/mean_error

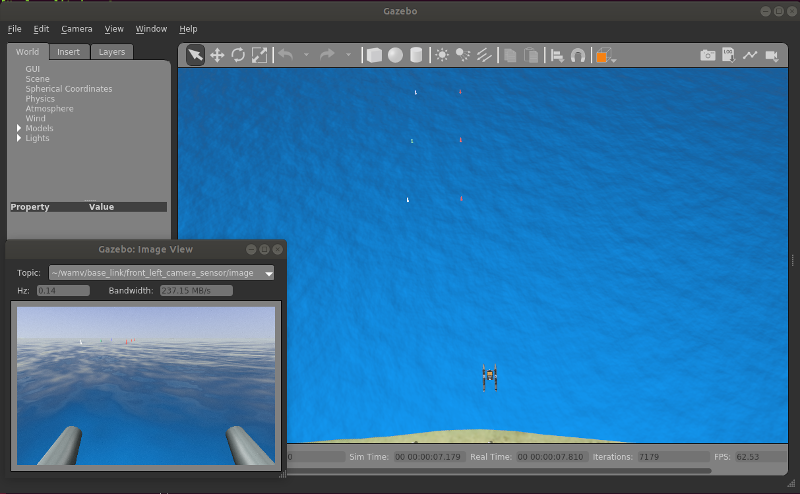

Easy environment. Three waypoints, relatively close together.

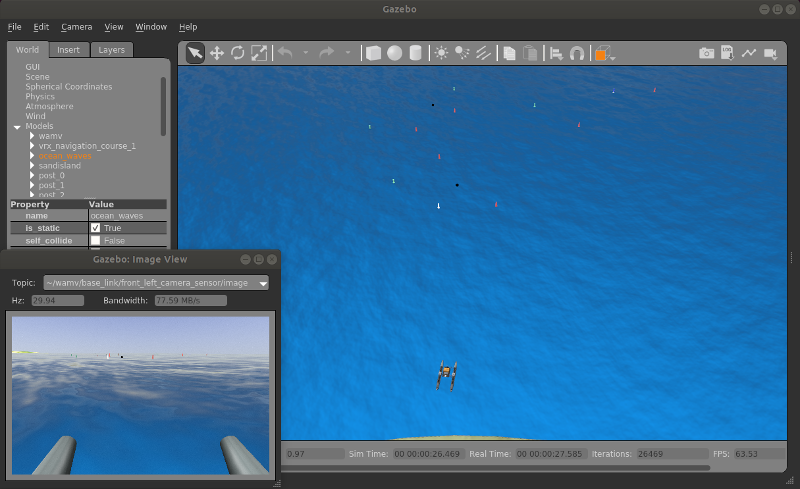

Medium difficulty environment. Four waypoints, more widely dispersed.

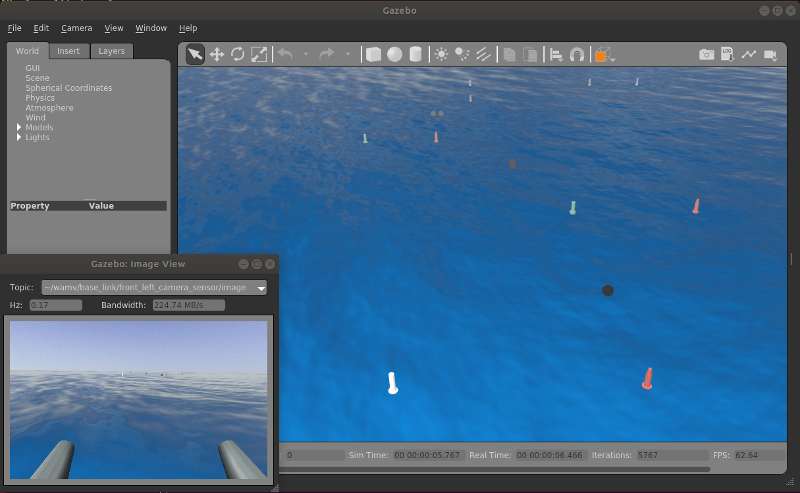

Hard difficulty environment. Five waypoints in a more challenging arrangement.

The three example worlds provided for the Perception task are as follows:

- perception0.world: Easy environment.

- perception1.world: Medium difficulty environment.

- perception2.world: Hard difficulty environment.

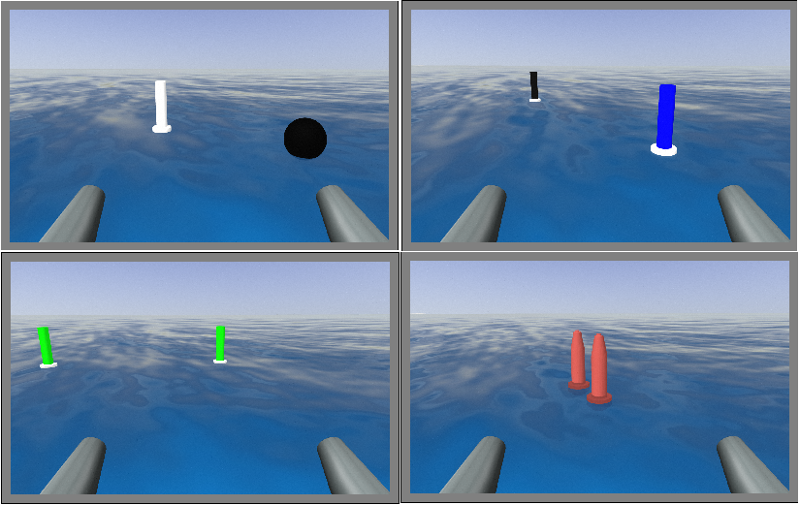

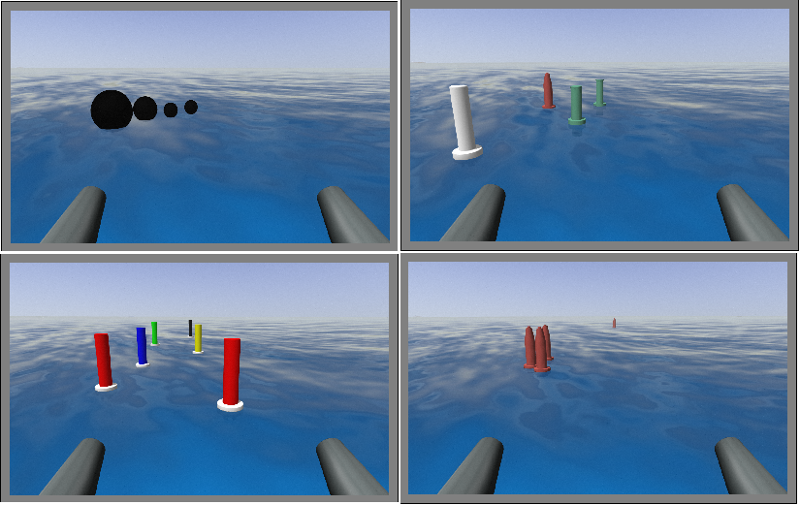

See below for screenshots showing the objects spawned in each trial.

In this world, there is at most one object per trial.

In this world, there are up to two objects per trial, including repeated models.

In this world, there are up to six objects per trial, with partial occlusions and objects at long distance.

The three example worlds provided for the Navigation task are as follows:

- nav_challenge0.world: Easy environment.

- nav_challenge1.world: Medium difficulty environment.

- nav_challenge2.world: Hard difficulty environment.

See below for screenshots showing the arrangement of the navigation course in each example world.

This is very similar to the RobotX navigation channel.

This layout has low obstacle density.

This is the hardest layout! Medium obstacle density.

Three example worlds are supplied to represent three potential trials of the Dock task.

- dock0.world: Easy environment. Correct bay is the red_cross directly ahead of the WAM-V in its initial position.

- dock1.world: Medium difficulty environment. Correct bay is the green_triangle.

- dock2.world: Hard difficulty environment. The correct bay is red_triangle.

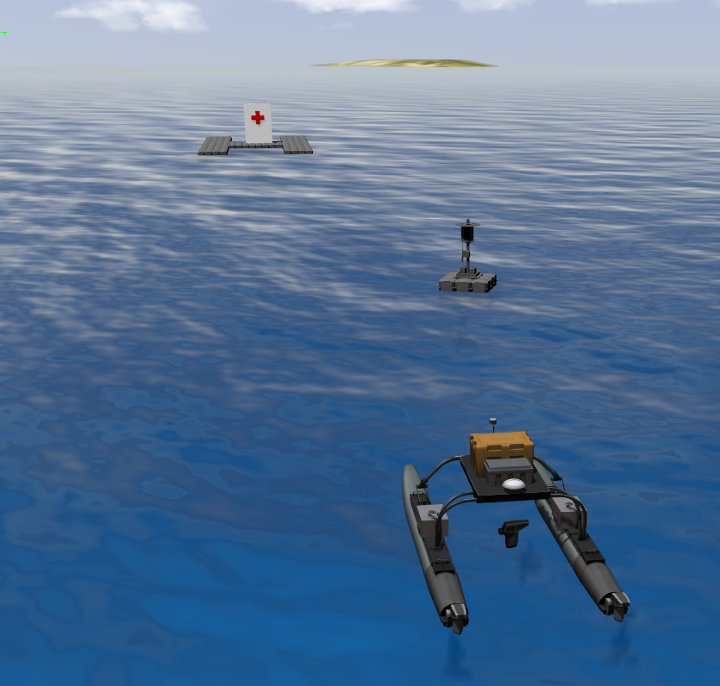

Easy environment. Correct bay is the red_cross directly ahead of the WAM-V in its initial position.

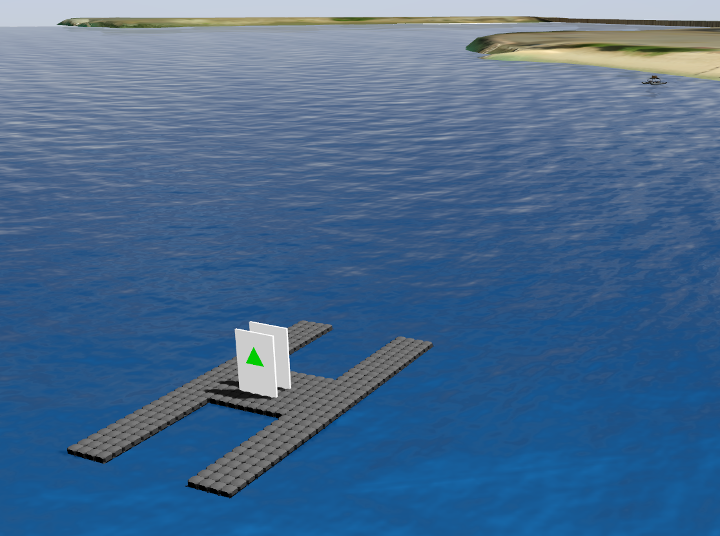

Medium difficulty environment. Correct bay is the green_triangle.

Hard difficulty environment. The correct bay is red_triangle.

The VRX Tasks: Examples wiki provides general notes on the API, and the Docking Details wiki describes how successful docking is determined and how the docking state is reported to stdout.

The three example trials for this task have the same environment and dock layout as Task 5: Dock, described above. In each example world a RobotX Light Buoy is added, and the system must find the light buoy, read the code, and infer the correct dock from the light sequence.

- scan_and_dock0.world: Easy environment. Correct bay is the red_cross (color sequence is "red" "green" "blue") directly ahead of the WAM-V in its initial position. Light buoy is immediately visible.

- scan_and_dock1.world: Medium difficulty environment. Correct bay is the green_triangle.

- scan_and_dock2.world: Hard difficulty environment. The correct bay is red_triangle.

Additional points are awarded when correctly reporting the color sequence through the ROS service API. For example, for scan_and_dock0.world if you call the service with the correct color sequence:

rosservice call /vrx/scan_dock/color_sequence "red" "green" "blue"

- The service will return success: True. It returns this as long as the color sequence is valid (has three items, etc.); even if the sequence is incorrect, it will return success: True.

- In the Gazebo terminal, stdout will report some debugging information, e.g.,

[ INFO] [1570143447.600102983, 12.694000000]: Received color sequence is correct. Additional points will be scored.

[Msg] Adding <10> points for correct reporting of color sequence

- The score will be incremented, which you can see with

rostopic echo /vrx/task/info
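If your solution reports the sequence from a script, a small wrapper can catch malformed sequences before the service is called. This is a sketch only: `color_sequence_command` is a hypothetical helper that builds the same rosservice command shown above and checks that exactly three colors are given. It does not check whether the sequence is correct, and it does not call the service itself.

```python
def color_sequence_command(colors):
    """Build the rosservice argument list for reporting a three-color sequence."""
    colors = list(colors)
    if len(colors) != 3:
        raise ValueError("expected exactly three colors, got {}".format(len(colors)))
    return ["rosservice", "call", "/vrx/scan_dock/color_sequence"] + colors


if __name__ == "__main__":
    # Sequence for scan_and_dock0.world, as given in this tutorial.
    print(" ".join(color_sequence_command(["red", "green", "blue"])))
```

The returned list can be passed to subprocess.call() once the simulation is running.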